Docker-Compose部署Redis(v7.2)主从模式

环境

- docker desktop for windows 4.23.0

- redis 7.2

一、前提准备

1. redis配置文件

因为Redis 7.2 docker镜像里面没有配置文件,所以需要去redis官网下载一个复制里面的redis.conf

博主这里用的是7.2.3版本的redis.conf,这个文件就在解压后第一层文件夹里。

2. 下载redis镜像

docker pull redis:7.2

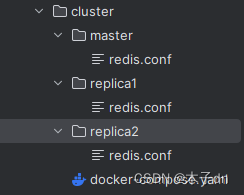

3. 文件夹结构

如下建立cluster文件夹,并复制出三份conf文件到如图位置。

二、docker-compose

docker-compose文件具体内容如下。

version: '3.8'

networks:

redis-network:

driver: bridge

ipam:

driver: default

config:

- subnet: 172.30.1.0/24

services:

redis-master:

container_name: redis-master

image: redis:7.2

volumes:

- ./master/redis.conf:/usr/local/etc/redis/redis.conf

# - ./master/data:/data

ports:

- "7001:6379"

command: ["redis-server", "/usr/local/etc/redis/redis.conf"]

networks:

redis-network:

ipv4_address: 172.30.1.2

redis-replica1:

container_name: redis-replica1

image: redis:7.2

volumes:

- ./replica1/redis.conf:/usr/local/etc/redis/redis.conf

# - ./replica1/data:/data

ports:

- "7002:6379"

command: ["redis-server", "/usr/local/etc/redis/redis.conf"]

depends_on:

- redis-master

networks:

redis-network:

ipv4_address: 172.30.1.3

redis-replica2:

container_name: redis-replica2

image: redis:7.2

volumes:

- ./replica2/redis.conf:/usr/local/etc/redis/redis.conf

# - ./replica2/data:/data

ports:

- "7003:6379"

command: ["redis-server", "/usr/local/etc/redis/redis.conf"]

depends_on:

- redis-master

networks:

redis-network:

ipv4_address: 172.30.1.4

需要注意以下几点

- 这里自定义了

bridge子网并限定了范围,如果该范围已经被使用,请更换。 - 这里没有对

data进行-v挂载,如果要挂载,请注意宿主机对应文件夹权限问题。

三、主从配置

1.主节点配置文件

主节点对应的配置文件是master/redis.conf,需要做以下修改

-

bind

将bind 127.0.0.1 -::1修改为bind 0.0.0.0,监听来自任意网络接口的连接。 -

protected-mode

将protected-mode设置为no,关闭保护模式,接收远程连接。 -

masterauth

将masterauth设置为1009,这是从节点连接到主节点的认证密码,你可以指定为其他的。 -

requirepass

将requirepass设置为1009,这是客户端连接到本节点的认证密码,你可以指定为其他的。

2.从节点配置文件

把上面主节点的配置文件复制粘贴,然后继续做以下更改,就可以作为从节点配置文件了

- replicaof

旧版本添加一行replicaof redis-master 6379,表示本节点为从节点,并且主节点ip为redis-master,端口为6379。这里你也可以把ip填成172.30.1.2,因为在docker-compose中我们为各节点分配了固定的ip,以及端口是6379而不是映射的700x,这些都是docker的知识,这里不再赘述。

redis在5.0引入了replica的概念来替换slave,所以后续的新版本推荐使用replicaof,即便slaveof目前仍然支持。

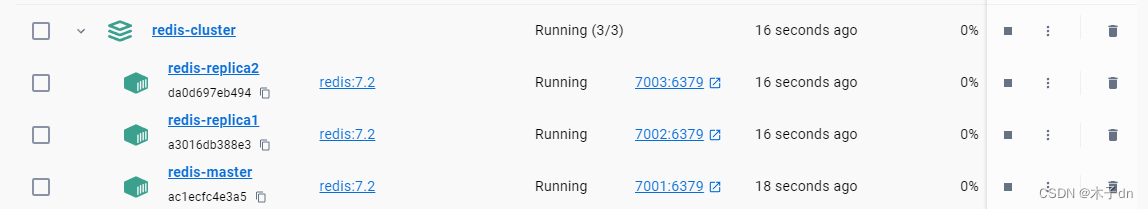

四、运行

配置好三个节点的配置文件后,用以下命令运行整个服务

docker-compose -p redis-cluster up -d

查看主节点日志,可以看到主节点向172.30.1.3和172.30.1.4两个从节点同步数据,并且连接正常,以及一系列success。

2024-01-05 15:12:59 1:M 05 Jan 2024 07:12:59.008 * Opening AOF incr file appendonly.aof.1.incr.aof on server start

2024-01-05 15:12:59 1:M 05 Jan 2024 07:12:59.008 * Ready to accept connections tcp

2024-01-05 15:13:00 1:M 05 Jan 2024 07:13:00.996 * Replica 172.30.1.4:6379 asks for synchronization

2024-01-05 15:13:00 1:M 05 Jan 2024 07:13:00.996 * Full resync requested by replica 172.30.1.4:6379

2024-01-05 15:13:00 1:M 05 Jan 2024 07:13:00.996 * Replication backlog created, my new replication IDs are '5bef8fa8e58042f1aee8eae528c6e10228a0c96b' and '0000000000000000000000000000000000000000'

2024-01-05 15:13:00 1:M 05 Jan 2024 07:13:00.996 * Delay next BGSAVE for diskless SYNC

2024-01-05 15:13:01 1:M 05 Jan 2024 07:13:01.167 * Replica 172.30.1.3:6379 asks for synchronization

2024-01-05 15:13:01 1:M 05 Jan 2024 07:13:01.167 * Full resync requested by replica 172.30.1.3:6379

2024-01-05 15:13:01 1:M 05 Jan 2024 07:13:01.167 * Delay next BGSAVE for diskless SYNC

2024-01-05 15:13:05 1:M 05 Jan 2024 07:13:05.033 * Starting BGSAVE for SYNC with target: replicas sockets

2024-01-05 15:13:05 1:M 05 Jan 2024 07:13:05.033 * Background RDB transfer started by pid 20

2024-01-05 15:13:05 20:C 05 Jan 2024 07:13:05.035 * Fork CoW for RDB: current 0 MB, peak 0 MB, average 0 MB

2024-01-05 15:13:05 1:M 05 Jan 2024 07:13:05.035 * Diskless rdb transfer, done reading from pipe, 2 replicas still up.

2024-01-05 15:13:05 1:M 05 Jan 2024 07:13:05.052 * Background RDB transfer terminated with success

2024-01-05 15:13:05 1:M 05 Jan 2024 07:13:05.052 * Streamed RDB transfer with replica 172.30.1.4:6379 succeeded (socket). Waiting for REPLCONF ACK from replica to enable streaming

2024-01-05 15:13:05 1:M 05 Jan 2024 07:13:05.052 * Synchronization with replica 172.30.1.4:6379 succeeded

2024-01-05 15:13:05 1:M 05 Jan 2024 07:13:05.052 * Streamed RDB transfer with replica 172.30.1.3:6379 succeeded (socket). Waiting for REPLCONF ACK from replica to enable streaming

2024-01-05 15:13:05 1:M 05 Jan 2024 07:13:05.052 * Synchronization with replica 172.30.1.3:6379 succeeded

接着看看从节点日志,可以看到Connecting to MASTER redis-master:6379,向主节点连接并申请同步数据,以及一系列success。

2024-01-05 15:13:01 1:S 05 Jan 2024 07:13:01.166 * Connecting to MASTER redis-master:6379

2024-01-05 15:13:01 1:S 05 Jan 2024 07:13:01.166 * MASTER <-> REPLICA sync started

2024-01-05 15:13:01 1:S 05 Jan 2024 07:13:01.166 * Non blocking connect for SYNC fired the event.

2024-01-05 15:13:01 1:S 05 Jan 2024 07:13:01.167 * Master replied to PING, replication can continue...

2024-01-05 15:13:01 1:S 05 Jan 2024 07:13:01.167 * Partial resynchronization not possible (no cached master)

2024-01-05 15:13:05 1:S 05 Jan 2024 07:13:05.033 * Full resync from master: 5bef8fa8e58042f1aee8eae528c6e10228a0c96b:0

2024-01-05 15:13:05 1:S 05 Jan 2024 07:13:05.035 * MASTER <-> REPLICA sync: receiving streamed RDB from master with EOF to disk

2024-01-05 15:13:05 1:S 05 Jan 2024 07:13:05.038 * MASTER <-> REPLICA sync: Flushing old data

2024-01-05 15:13:05 1:S 05 Jan 2024 07:13:05.038 * MASTER <-> REPLICA sync: Loading DB in memory

2024-01-05 15:13:05 1:S 05 Jan 2024 07:13:05.056 * Loading RDB produced by version 7.2.3

2024-01-05 15:13:05 1:S 05 Jan 2024 07:13:05.056 * RDB age 0 seconds

2024-01-05 15:13:05 1:S 05 Jan 2024 07:13:05.056 * RDB memory usage when created 0.90 Mb

2024-01-05 15:13:05 1:S 05 Jan 2024 07:13:05.056 * Done loading RDB, keys loaded: 1, keys expired: 0.

2024-01-05 15:13:05 1:S 05 Jan 2024 07:13:05.057 * MASTER <-> REPLICA sync: Finished with success

2024-01-05 15:13:05 1:S 05 Jan 2024 07:13:05.057 * Creating AOF incr file temp-appendonly.aof.incr on background rewrite

2024-01-05 15:13:05 1:S 05 Jan 2024 07:13:05.057 * Background append only file rewriting started by pid 21

2024-01-05 15:13:05 21:C 05 Jan 2024 07:13:05.067 * Successfully created the temporary AOF base file temp-rewriteaof-bg-21.aof

2024-01-05 15:13:05 21:C 05 Jan 2024 07:13:05.068 * Fork CoW for AOF rewrite: current 0 MB, peak 0 MB, average 0 MB

2024-01-05 15:13:05 1:S 05 Jan 2024 07:13:05.084 * Background AOF rewrite terminated with success

2024-01-05 15:13:05 1:S 05 Jan 2024 07:13:05.084 * Successfully renamed the temporary AOF base file temp-rewriteaof-bg-21.aof into appendonly.aof.5.base.rdb

2024-01-05 15:13:05 1:S 05 Jan 2024 07:13:05.084 * Successfully renamed the temporary AOF incr file temp-appendonly.aof.incr into appendonly.aof.5.incr.aof

2024-01-05 15:13:05 1:S 05 Jan 2024 07:13:05.093 * Removing the history file appendonly.aof.4.incr.aof in the background

2024-01-05 15:13:05 1:S 05 Jan 2024 07:13:05.093 * Removing the history file appendonly.aof.4.base.rdb in the background

2024-01-05 15:13:05 1:S 05 Jan 2024 07:13:05.101 * Background AOF rewrite finished successfully

五、测试

用你喜欢的docker容器连接工具或者redis连接工具来连接主节点redis服务,只要能进入redis-cli就行。这里以docker容器连接为例。

- 主节点设置一个字段并查看从节点信息

root@ac1ecfc4e3a5:/data# redis-cli

127.0.0.1:6379> auth 1009

OK

127.0.0.1:6379> set num 67899

OK

127.0.0.1:6379> get num

"67899"

127.0.0.1:6379> INFO replication

# Replication

role:master

connected_slaves:2

slave0:ip=172.30.1.4,port=6379,state=online,offset=3388,lag=1

slave1:ip=172.30.1.3,port=6379,state=online,offset=3388,lag=1

master_failover_state:no-failover

master_replid:5bef8fa8e58042f1aee8eae528c6e10228a0c96b

master_replid2:0000000000000000000000000000000000000000

master_repl_offset:3388

second_repl_offset:-1

repl_backlog_active:1

repl_backlog_size:1048576

repl_backlog_first_byte_offset:1

repl_backlog_histlen:3388

- 从节点获取

root@a3016db388e3:/data# redis-cli

127.0.0.1:6379> auth 1009

OK

127.0.0.1:6379> get num

"67899"

测试成功。

本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。 如若内容造成侵权/违法违规/事实不符,请联系我的编程经验分享网邮箱:veading@qq.com进行投诉反馈,一经查实,立即删除!