4. MapReduce Serialization

2024-01-09 10:02:27

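Overview

Serialization is a key part of distributed computing: a good serialization format can greatly reduce the amount of data sent over the network.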

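Serialization

Serialization

Object --> byte sequence: for storing to disk or sending over the network

MR, Spark, Flink: distributed execution frameworks, which inevitably involve network transfer

Serialization in Java: Serializable

Characteristics of Hadoop serialization: compact, fast, extensible, interoperable

Spark can use a different serialization framework, Kryo

Deserialization

Byte sequence --> object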
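The two directions can be seen in a small in-memory round trip. This is only a sketch: the `Point` class and the helper names `toBytes` and `fromBytes` are made up for illustration, and a byte array stands in for the disk or the network.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;

class SerializationRoundTrip {

    // Hypothetical value type used only for this illustration.
    static class Point implements Serializable {
        private static final long serialVersionUID = 1L;
        final int x;
        final int y;

        Point(int x, int y) {
            this.x = x;
            this.y = y;
        }
    }

    // Serialization: object --> byte sequence.
    static byte[] toBytes(Serializable obj) throws IOException {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(buffer)) {
            out.writeObject(obj);
        }
        return buffer.toByteArray();
    }

    // Deserialization: byte sequence --> object.
    static Object fromBytes(byte[] bytes) throws IOException, ClassNotFoundException {
        try (ObjectInputStream in = new ObjectInputStream(new ByteArrayInputStream(bytes))) {
            return in.readObject();
        }
    }

    public static void main(String[] args) throws Exception {
        byte[] bytes = toBytes(new Point(3, 4));
        Point copy = (Point) fromBytes(bytes);
        System.out.println(bytes.length + " bytes -> Point(" + copy.x + ", " + copy.y + ")");
    }
}
```

The copy is a new object with the same field values, which is what a remote task receives after the bytes cross the network.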

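Two approaches built into Java

Serializable

This is Java's built-in serialization mechanism. It is simple and convenient, but the output is large, which makes it a poor fit for sending large volumes of data over the network.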

import java.io.FileInputStream;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;

public class JavaSerDemo {

    public static void main(String[] args) throws IOException, ClassNotFoundException {
        Person person = new Person(1, "张三", 33);

        // Serialize: write the object to a file. try-with-resources flushes and closes the stream.
        try (ObjectOutputStream out = new ObjectOutputStream(new FileOutputStream("download/person.obj"))) {
            out.writeObject(person);
        }

        // Deserialize: read the object back from the same file.
        try (ObjectInputStream in = new ObjectInputStream(new FileInputStream("download/person.obj"))) {
            Object o = in.readObject();
            System.out.println(o);
        }
    }

    static class Person implements Serializable {
        private int id;
        private String name;
        private int age;

        public Person(int id, String name, int age) {
            this.id = id;
            this.name = name;
            this.age = age;
        }

        @Override
        public String toString() {
            return "Person{" +
                    "id=" + id +
                    ", name='" + name + '\'' +
                    ", age=" + age +
                    '}';
        }

        public int getId() {
            return id;
        }

        public void setId(int id) {
            this.id = id;
        }

        public String getName() {
            return name;
        }

        public void setName(String name) {
            this.name = name;
        }

        public int getAge() {
            return age;
        }

        public void setAge(int age) {
            this.age = age;
        }
    }
}

Without Serializable

import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.FileInputStream;
import java.io.FileOutputStream;
import java.io.IOException;

public class DataSerDemo {

    public static void main(String[] args) throws IOException {
        Person person = new Person(1, "张三", 33);

        // Write the fields one by one; try-with-resources closes the stream.
        try (DataOutputStream out = new DataOutputStream(new FileOutputStream("download/person2.obj"))) {
            out.writeInt(person.getId());
            out.writeUTF(person.getName());
        }

        try (DataInputStream in = new DataInputStream(new FileInputStream("download/person2.obj"))) {
            // Note: fields must be read in exactly the order they were written.
            int id = in.readInt();
            String name = in.readUTF();
            System.out.println("id:" + id + " name:" + name);
        }
    }

    /**
     * Note: this class does not need to implement Serializable.
     */
    static class Person {
        private int id;
        private String name;
        private int age;

        public Person(int id, String name, int age) {
            this.id = id;
            this.name = name;
            this.age = age;
        }

        @Override
        public String toString() {
            return "Person{" +
                    "id=" + id +
                    ", name='" + name + '\'' +
                    ", age=" + age +
                    '}';
        }

        public int getId() {
            return id;
        }

        public void setId(int id) {
            this.id = id;
        }

        public String getName() {
            return name;
        }

        public void setName(String name) {
            this.name = name;
        }

        public int getAge() {
            return age;
        }

        public void setAge(int age) {
            this.age = age;
        }
    }
}

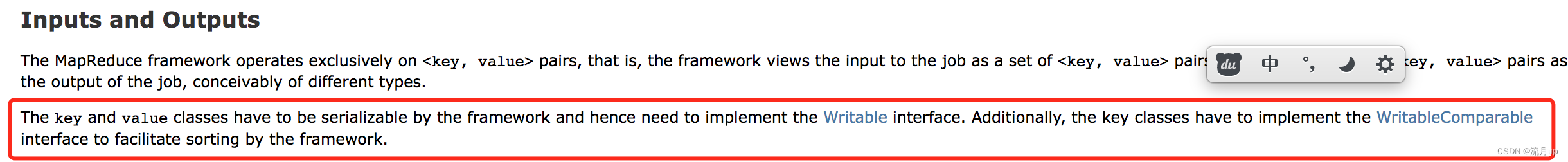
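To put a number on the size difference between the two approaches, the following sketch serializes the same `Person` fields both ways into memory and compares the byte counts. The class and method names (`SerializedSizeComparison`, `javaSerializedSize`, `fieldByFieldSize`) are made up for this illustration, and unlike the demo above it also writes `age` so both encodings carry the same data.

```java
import java.io.ByteArrayOutputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.ObjectOutputStream;
import java.io.Serializable;

class SerializedSizeComparison {

    // Same three fields as the Person class in the demos above.
    static class Person implements Serializable {
        private static final long serialVersionUID = 1L;
        final int id;
        final String name;
        final int age;

        Person(int id, String name, int age) {
            this.id = id;
            this.name = name;
            this.age = age;
        }
    }

    // Size of the object when written with Java's built-in serialization.
    static int javaSerializedSize(Person p) throws IOException {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(buffer)) {
            out.writeObject(p);
        }
        return buffer.size();
    }

    // Size of the same fields when written one by one with DataOutputStream.
    static int fieldByFieldSize(Person p) throws IOException {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(buffer)) {
            out.writeInt(p.id);      // 4 bytes
            out.writeUTF(p.name);    // 2-byte length prefix + modified UTF-8 bytes
            out.writeInt(p.age);     // 4 bytes
        }
        return buffer.size();
    }

    public static void main(String[] args) throws IOException {
        Person person = new Person(1, "张三", 33);
        System.out.println("Serializable:     " + javaSerializedSize(person) + " bytes");
        System.out.println("DataOutputStream: " + fieldByFieldSize(person) + " bytes");
    }
}
```

The field-by-field form stores only the values, while Java serialization also writes a stream header and a description of the class, which is where the extra bytes come from.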

Hadoop serialization

The key and value classes have to be serializable by the framework and hence need to implement the Writable interface. Additionally, the key classes have to implement the WritableComparable interface to facilitate sorting by the framework.

Note: Writable has two methods, write and readFields.

@InterfaceAudience.Public
@InterfaceStability.Stable
public interface Writable {

    void write(DataOutput out) throws IOException;

    void readFields(DataInput in) throws IOException;
}
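How the two methods cooperate can be tried without a Hadoop installation. The sketch below is an approximation under stated assumptions: `MiniWritable` is a hypothetical local stand-in that copies the two method signatures of `org.apache.hadoop.io.Writable`, and `Visit`, `serialize` and `deserialize` are invented for the example.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInput;
import java.io.DataInputStream;
import java.io.DataOutput;
import java.io.DataOutputStream;
import java.io.IOException;

class WritableRoundTrip {

    // Hypothetical stand-in mirroring org.apache.hadoop.io.Writable,
    // so the sketch runs with only the JDK on the classpath.
    interface MiniWritable {
        void write(DataOutput out) throws IOException;
        void readFields(DataInput in) throws IOException;
    }

    static class Visit implements MiniWritable {
        int userId;
        String page;

        // No-arg constructor: an empty instance is created first, then readFields fills it.
        Visit() {
        }

        Visit(int userId, String page) {
            this.userId = userId;
            this.page = page;
        }

        @Override
        public void write(DataOutput out) throws IOException {
            out.writeInt(userId);
            out.writeUTF(page);
        }

        @Override
        public void readFields(DataInput in) throws IOException {
            // Must read in exactly the order write() wrote.
            userId = in.readInt();
            page = in.readUTF();
        }
    }

    static byte[] serialize(MiniWritable w) throws IOException {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(buffer)) {
            w.write(out);
        }
        return buffer.toByteArray();
    }

    static <T extends MiniWritable> T deserialize(byte[] bytes, T empty) throws IOException {
        try (DataInputStream in = new DataInputStream(new ByteArrayInputStream(bytes))) {
            empty.readFields(in);
        }
        return empty;
    }

    public static void main(String[] args) throws IOException {
        byte[] bytes = serialize(new Visit(7, "/home"));
        Visit copy = deserialize(bytes, new Visit());
        System.out.println(bytes.length + " bytes -> userId=" + copy.userId + ", page=" + copy.page);
    }
}
```

Because `readFields` populates an existing instance, a real Writable class also needs a public no-arg constructor so the framework can create the empty object first.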

Practice

import java.io.DataInput;
import java.io.DataOutput;
import java.io.IOException;

import org.apache.hadoop.io.Writable;

public class PersonWritable implements Writable {

    private int id;
    private String name;
    private int age;
    // Amount of a single purchase
    private int consumption;
    // Total amount across all purchases
    private long consumptions;

    // The no-arg constructor is required: Hadoop creates an empty instance and then calls readFields.
    public PersonWritable() {
    }

    public PersonWritable(int id, String name, int age, int consumption) {
        this.id = id;
        this.name = name;
        this.age = age;
        this.consumption = consumption;
    }

    public PersonWritable(int id, String name, int age, int consumption, long consumptions) {
        this.id = id;
        this.name = name;
        this.age = age;
        this.consumption = consumption;
        this.consumptions = consumptions;
    }

    public int getId() {
        return id;
    }

    public void setId(int id) {
        this.id = id;
    }

    public String getName() {
        return name;
    }

    public void setName(String name) {
        this.name = name;
    }

    public int getAge() {
        return age;
    }

    public void setAge(int age) {
        this.age = age;
    }

    public int getConsumption() {
        return consumption;
    }

    public void setConsumption(int consumption) {
        this.consumption = consumption;
    }

    public long getConsumptions() {
        return consumptions;
    }

    public void setConsumptions(long consumptions) {
        this.consumptions = consumptions;
    }

    @Override
    public String toString() {
        // Only the string field is quoted; the original had a stray quote after consumption.
        return "id=" + id +
                ", name='" + name + '\'' +
                ", age=" + age +
                ", consumption=" + consumption +
                ", consumptions=" + consumptions;
    }

    @Override
    public void write(DataOutput out) throws IOException {
        out.writeInt(id);
        out.writeUTF(name);
        out.writeInt(age);
        out.writeInt(consumption);
        out.writeLong(consumptions);
    }

    @Override
    public void readFields(DataInput in) throws IOException {
        // Read in exactly the order used by write().
        id = in.readInt();
        name = in.readUTF();
        age = in.readInt();
        consumption = in.readInt();
        consumptions = in.readLong();
    }
}

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

/**
 * Totals the consumption of each person.
 */
public class PersonStatistics {

    static class PersonStatisticsMapper extends Mapper<LongWritable, Text, IntWritable, PersonWritable> {

        @Override
        protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
            // Each input line has the form: id,name,age,consumption
            String[] split = value.toString().split(",");
            int id = Integer.parseInt(split[0]);
            String name = split[1];
            int age = Integer.parseInt(split[2]);
            int consumption = Integer.parseInt(split[3]);
            PersonWritable writable = new PersonWritable(id, name, age, consumption, 0);
            context.write(new IntWritable(id), writable);
        }
    }

    static class PersonStatisticsReducer extends Reducer<IntWritable, PersonWritable, NullWritable, PersonWritable> {

        @Override
        protected void reduce(IntWritable key, Iterable<PersonWritable> values, Context context) throws IOException, InterruptedException {
            long total = 0L;
            PersonWritable first = null;
            for (PersonWritable data : values) {
                if (first == null) {
                    // Hadoop reuses the value object across iterations, so copy the fields
                    // instead of keeping a reference to it.
                    first = new PersonWritable(data.getId(), data.getName(), data.getAge(), data.getConsumption());
                }
                total += data.getConsumption();
            }
            first.setConsumptions(total);
            context.write(NullWritable.get(), first);
        }
    }

    public static void main(String[] args) throws IOException, InterruptedException, ClassNotFoundException {
        Configuration configuration = new Configuration();
        String sourcePath = "data/person.data";
        String distPath = "downloadOut/person-out.data";
        // FileUtil.deleteIfExist is presumably a project helper (not shown here) that removes
        // a previous output directory; the job fails if the output path already exists.
        FileUtil.deleteIfExist(configuration, distPath);

        Job job = Job.getInstance(configuration, "person statistics");
        job.setJarByClass(PersonStatistics.class);
        // The reducer cannot double as a combiner: its output key type (NullWritable)
        // differs from the map output key type (IntWritable).
        //job.setCombinerClass(PersonStatistics.PersonStatisticsReducer.class);
        job.setMapperClass(PersonStatisticsMapper.class);
        job.setReducerClass(PersonStatisticsReducer.class);
        job.setMapOutputKeyClass(IntWritable.class);
        job.setMapOutputValueClass(PersonWritable.class);
        job.setOutputKeyClass(NullWritable.class);
        job.setOutputValueClass(PersonWritable.class);

        FileInputFormat.addInputPath(job, new Path(sourcePath));
        FileOutputFormat.setOutputPath(job, new Path(distPath));
        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}

# person.data

1,张三,30,10

1,张三,30,20

2,李四,25,5

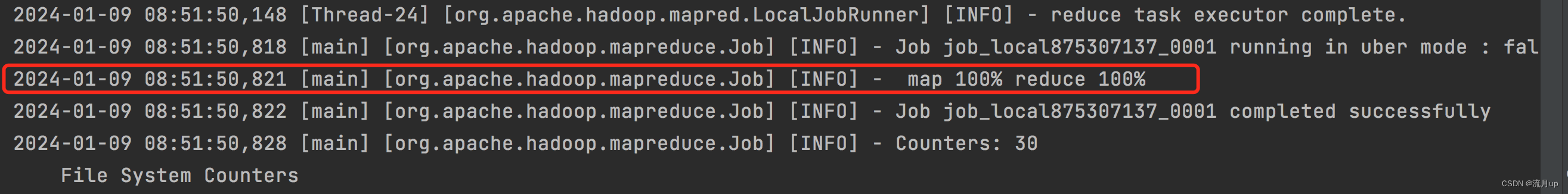

上述执行结果如下:
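The totals the job should compute for the sample person.data can be cross-checked in plain Java, with no Hadoop involved. This is only a sketch of the same logic (key by id in the map step, sum in the reduce step); the class name `ConsumptionTotals` and the method `totalsById` are made up for the illustration.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

class ConsumptionTotals {

    // Parses "id,name,age,consumption" lines and sums consumption per id,
    // mirroring the map step (parse, key by id) and the reduce step (sum).
    static Map<Integer, Long> totalsById(List<String> lines) {
        Map<Integer, Long> totals = new TreeMap<>();
        for (String line : lines) {
            String[] fields = line.split(",");
            int id = Integer.parseInt(fields[0].trim());
            long consumption = Long.parseLong(fields[3].trim());
            totals.merge(id, consumption, Long::sum);
        }
        return totals;
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList(
                "1,张三,30,10",
                "1,张三,30,20",
                "2,李四,25,5");
        System.out.println(totalsById(lines)); // prints {1=30, 2=5}
    }
}
```

For the three sample rows, id 1 totals 30 and id 2 totals 5, which are the values the reducer stores in `consumptions`.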

Splits / InputFormat & InputSplit

org.apache.hadoop.mapreduce.InputFormat

org.apache.hadoop.mapreduce.InputSplit

Logs

Run the serialization test program and watch for the following log lines:

# One input file loaded in total, divided into one split

2024-01-06 09:19:42,363 [main] [org.apache.hadoop.mapreduce.lib.input.FileInputFormat] [INFO] - Total input files to process : 1

2024-01-06 09:19:42,487 [main] [org.apache.hadoop.mapreduce.JobSubmitter] [INFO] - number of splits:1
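Why a small file produces a single split can be reasoned through with a simplified model of how FileInputFormat sizes splits. The sketch below reflects my understanding of that logic for one non-empty, splittable file; it is not the framework's code, and the 128 MB block size, the min/max values and the class name `SplitCountSketch` are assumptions chosen for illustration.

```java
class SplitCountSketch {

    // The last split is allowed to be up to 10% larger than splitSize,
    // which avoids creating a tiny trailing split.
    static final double SPLIT_SLOP = 1.1;

    // Split size is the block size clamped between the configured min and max.
    static long computeSplitSize(long blockSize, long minSize, long maxSize) {
        return Math.max(minSize, Math.min(maxSize, blockSize));
    }

    // Simplified model for a single non-empty, splittable file.
    static int countSplits(long fileLength, long splitSize) {
        int splits = 0;
        long remaining = fileLength;
        while ((double) remaining / splitSize > SPLIT_SLOP) {
            splits++;
            remaining -= splitSize;
        }
        if (remaining != 0) {
            splits++; // whatever is left becomes the final split
        }
        return splits;
    }

    public static void main(String[] args) {
        long mb = 1024L * 1024L;
        long splitSize = computeSplitSize(128 * mb, 1L, Long.MAX_VALUE); // assumed settings
        System.out.println("40 B file:   " + countSplits(40, splitSize) + " split(s)");
        System.out.println("129 MB file: " + countSplits(129 * mb, splitSize) + " split(s)");
        System.out.println("300 MB file: " + countSplits(300 * mb, splitSize) + " split(s)");
    }
}
```

Under these assumptions the tiny sample file fits in one split, which matches the `number of splits:1` line in the log, and a 129 MB file also stays in one split because it is within the 10% slack.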

Conclusion

That wraps up MapReduce serialization. If you have any questions, feel free to leave a comment.

Source: https://blog.csdn.net/2301_79691134/article/details/135408810

