Spark SQL fails writing to MySQL: Caused by: java.lang.IllegalArgumentException: Can't get JDBC type for void

2024-01-09 19:21:57

I. Problem Description

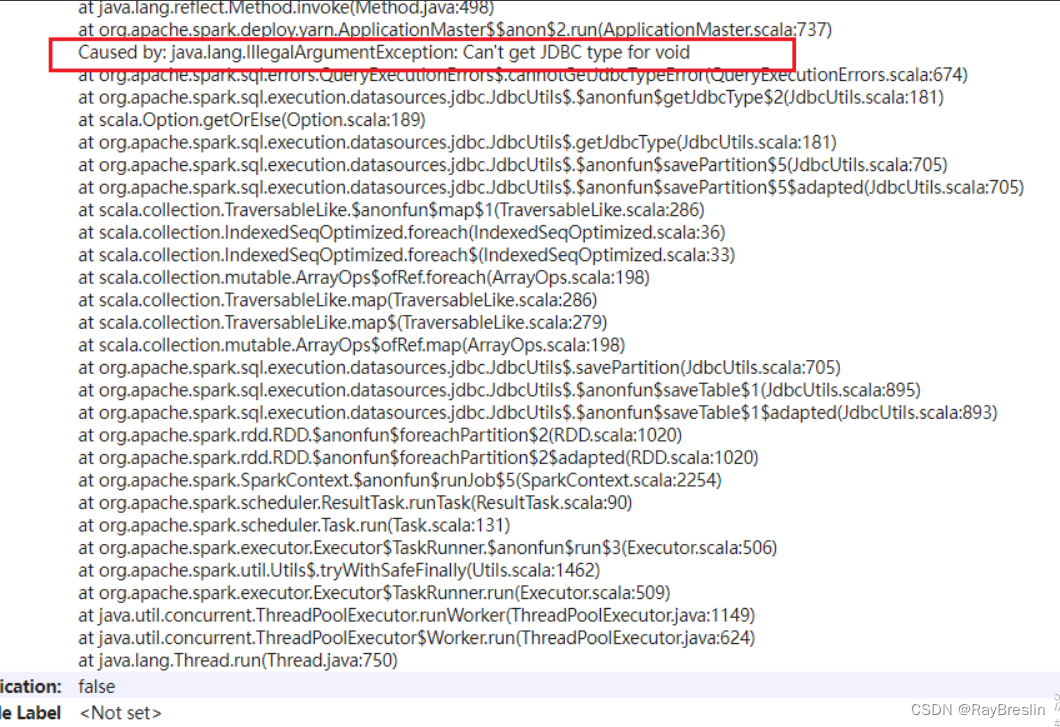

Writing a Spark SQL result to MySQL fails with the following error:

    Caused by: java.lang.IllegalArgumentException: Can't get JDBC type for void
        at org.apache.spark.sql.errors.QueryExecutionErrors$.cannotGetJdbcTypeError(QueryExecutionErrors.scala:674)
        at org.apache.spark.sql.execution.datasources.jdbc.JdbcUtils$.$anonfun$getJdbcType$2(JdbcUtils.scala:181)
        at scala.Option.getOrElse(Option.scala:189)
        at org.apache.spark.sql.execution.datasources.jdbc.JdbcUtils$.getJdbcType(JdbcUtils.scala:181)
        at org.apache.spark.sql.execution.datasources.jdbc.JdbcUtils$.$anonfun$savePartition$5(JdbcUtils.scala:705)
        at org.apache.spark.sql.execution.datasources.jdbc.JdbcUtils$.$anonfun$savePartition$5$adapted(JdbcUtils.scala:705)
        at scala.collection.TraversableLike.$anonfun$map$1(TraversableLike.scala:286)
        at scala.collection.IndexedSeqOptimized.foreach(IndexedSeqOptimized.scala:36)
        at scala.collection.IndexedSeqOptimized.foreach$(IndexedSeqOptimized.scala:33)
        at scala.collection.mutable.ArrayOps$ofRef.foreach(ArrayOps.scala:198)
        at scala.collection.TraversableLike.map(TraversableLike.scala:286)
        at scala.collection.TraversableLike.map$(TraversableLike.scala:279)
        at scala.collection.mutable.ArrayOps$ofRef.map(ArrayOps.scala:198)
        at org.apache.spark.sql.execution.datasources.jdbc.JdbcUtils$.savePartition(JdbcUtils.scala:705)
        at org.apache.spark.sql.execution.datasources.jdbc.JdbcUtils$.$anonfun$saveTable$1(JdbcUtils.scala:895)
        at org.apache.spark.sql.execution.datasources.jdbc.JdbcUtils$.$anonfun$saveTable$1$adapted(JdbcUtils.scala:893)
        at org.apache.spark.rdd.RDD.$anonfun$foreachPartition$2(RDD.scala:1020)
        at org.apache.spark.rdd.RDD.$anonfun$foreachPartition$2$adapted(RDD.scala:1020)
        at org.apache.spark.SparkContext.$anonfun$runJob$5(SparkContext.scala:2254)
        at org.apache.spark.scheduler.ResultTask.runTask(ResultTask.scala:90)
        at org.apache.spark.scheduler.Task.run(Task.scala:131)
        at org.apache.spark.executor.Executor$TaskRunner.$anonfun$run$3(Executor.scala:506)
        at org.apache.spark.util.Utils$.tryWithSafeFinally(Utils.scala:1462)
        at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:509)
        at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
        at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
        at java.lang.Thread.run(Thread.java:750)

II. Cause

The source SQL for the write was:

    select cat1, cat2 from (
      select cat1 as cat1, null as cat2 from test
      union
      select null as cat1, cat2 as cat2 from test
    ) ttt

The null literals leave the types of some columns undetermined. The result is then written to MySQL with:

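    import org.apache.spark.sql.SaveMode

    resultDF.write
      .format("jdbc")
      .option("dbtable", sinkTable)
      .option("url", mysqlUrl)
      .option("user", mysqlUser)
      .option("password", mysqlPwd)
      // With SaveMode.Overwrite, truncate=true empties the existing table
      // instead of dropping and recreating it, so the MySQL column types are kept.
      .option("truncate", "true")
      .mode(SaveMode.Overwrite)
      .save()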

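The MySQL columns have concrete types such as VARCHAR, but the corresponding source columns have the Spark type void (NullType). Spark's JDBC writer has no JDBC type for void, so JdbcUtils.getJdbcType throws the exception shown in the stack trace above and the write fails.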
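You can confirm which columns Spark has typed as void by inspecting the schema before writing. The sketch below is a minimal reproduction, not the author's code. It assumes Spark 3.x with spark-sql on the classpath and a local master. It builds a made-up stand-in for the test table and uses a bare null literal, the simplest way to get a column whose Spark type is NullType. The helper voidColumns is hypothetical and is not a Spark API.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.types.NullType

object VoidTypeDemo {
  // Local session for this demo only (assumes spark-sql 3.x on the classpath).
  lazy val spark: SparkSession = SparkSession.builder()
    .master("local[1]")
    .appName("void-type-demo")
    .getOrCreate()

  // Hypothetical helper: names of top-level columns whose Spark type is NullType,
  // i.e. the columns the JDBC writer cannot map to a JDBC type.
  def voidColumns(df: DataFrame): List[String] =
    df.schema.fields.filter(_.dataType == NullType).map(_.name).toList

  // Made-up stand-in for the article's `test` table, then a query with a bare null literal.
  def sourceDF(): DataFrame = {
    import spark.implicits._
    Seq(("a", "x"), ("b", "y")).toDF("cat1", "cat2").createOrReplaceTempView("test")
    // A bare null carries no type information, so Spark types cat2 as NullType.
    spark.sql("select cat1 as cat1, null as cat2 from test")
  }

  def main(args: Array[String]): Unit = {
    val df = sourceDF()
    df.printSchema()
    println(s"Columns with NullType: ${voidColumns(df).mkString(", ")}")
    spark.stop()
  }
}
```

Running a check like voidColumns just before the JDBC write turns the executor-side exception into an early, readable failure on the driver.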

III. Solution

Explicitly cast the result columns to the types you need:

    select cast(cat1 as string), cast(cat2 as string) from (
      select cat1 as cat1, null as cat2 from test
      union
      select null as cat1, cat2 as cat2 from test
    ) ttt
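When the DataFrame is assembled in code, or an all-null column is easy to miss, the same cast can be applied generically just before the JDBC write. This is a sketch under stated assumptions, not the author's method. It assumes Spark 3.x and that StringType is acceptable for every column that is entirely null. That is reasonable for VARCHAR targets; choose another DataType for numeric or date columns. castVoidColumns is a hypothetical helper name, and it only handles top-level columns.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.functions.{col, lit}
import org.apache.spark.sql.types.{DataType, NullType, StringType}

object CastVoidColumns {
  // Hypothetical helper: cast every top-level NullType column to `target`, keeping
  // column names and order, so the JDBC writer can map every column to a JDBC type.
  def castVoidColumns(df: DataFrame, target: DataType = StringType): DataFrame =
    df.select(df.schema.fields.toSeq.map { f =>
      // Backticks let names containing dots resolve as a single column.
      val c = col(s"`${f.name}`")
      if (f.dataType == NullType) c.cast(target).as(f.name) else c
    }: _*)

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .master("local[1]")
      .appName("cast-void-demo")
      .getOrCreate()
    // lit(null) without a cast yields a NullType column, like `null as cat2` in SQL.
    val df = spark.range(2).select(col("id").cast(StringType).as("cat1"), lit(null).as("cat2"))
    val fixed = castVoidColumns(df)
    fixed.printSchema()
    // `fixed` can then go through the same resultDF.write.format("jdbc")... call shown earlier.
    spark.stop()
  }
}
```

Casting in the SQL, as above, keeps the fix next to the query that introduced the nulls. The helper is a fallback for pipelines where the schema is not known up front.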

Source: https://blog.csdn.net/u010886217/article/details/135456602

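This article was submitted by an Internet user. The views expressed are the author's alone and do not represent the position of this site. This site only provides information storage space, does not own the content, and assumes no related legal responsibility. If any content is infringing, illegal, or factually inaccurate, please send a complaint to 我的编程经验分享网 at veading@qq.com; once verified, it will be removed immediately!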