ML Design Patterns——Rebalancing

Simply put

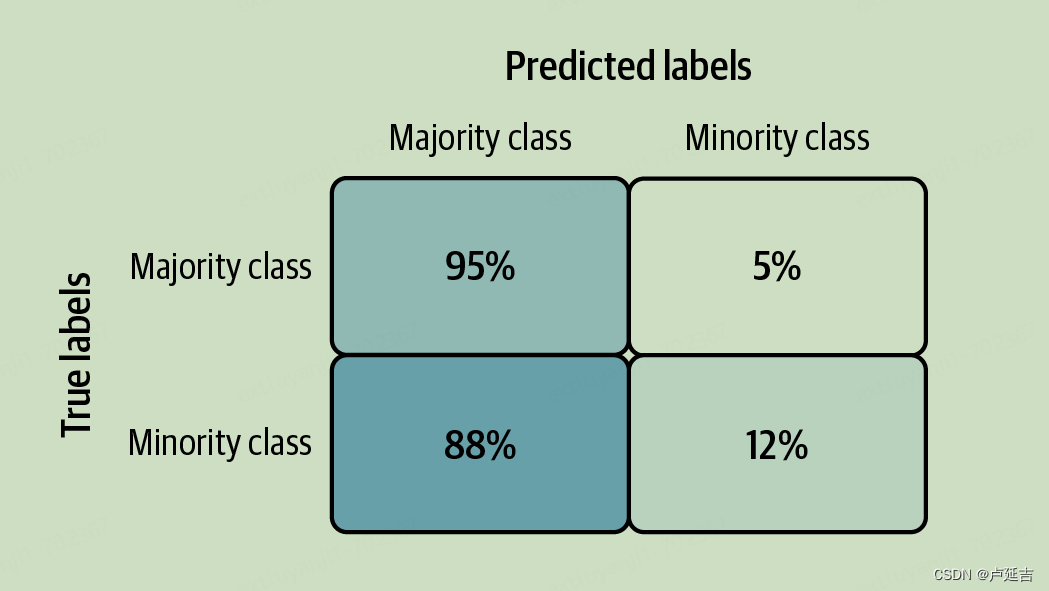

Rebalancing is a common technique used in machine learning to address the issue of imbalanced data distribution. In many real-world datasets, the number of samples in different classes can vary significantly, leading to biased model training and poor predictive performance. By adjusting sample weights and data distribution, the goal of rebalancing is to enable the model to learn more effectively and make accurate predictions.

There are several common approaches to rebalancing, including undersampling, oversampling, and combination methods. Each approach has its own advantages and trade-offs, and the choice depends on the specific dataset and problem at hand.

- Undersampling: This approach involves reducing the number of samples from the majority class (the class with more samples) to match the number of samples in the minority class (the class with fewer samples). By randomly removing samples from the majority class, the data becomes more balanced, allowing the model to give equal importance to both classes during training. However, undersampling can result in loss of information if the removed samples contain important patterns.

- Oversampling: In this approach, the number of samples in the minority class is increased by duplicating or generating synthetic examples. Duplicating existing samples can lead to overfitting and reduced generalization performance. Synthetic oversampling techniques, such as Synthetic Minority Over-sampling Technique (SMOTE), generate new samples by interpolating between existing samples or by creating synthetic instances based on the characteristics of the minority class. This helps to augment the minority class and balance the overall data distribution.

- Combination methods: These methods aim to combine the advantages of both undersampling and oversampling. One common approach is to perform undersampling on the majority class while oversampling the minority class. By reducing the number of samples in the majority class and augmenting the minority class, both classes are represented more equally, leading to better model performance.

When rebalancing the data, it is essential to consider the potential impact on the model’s performance, as well as the characteristics of the dataset. It is recommended to evaluate the rebalanced datasets using appropriate evaluation metrics and validation techniques to determine the most effective rebalancing strategy.

It is important to note that rebalancing alone may not always be the optimal solution. It is just one approach to mitigate the effects of imbalanced data. Additionally, other techniques such as cost-sensitive learning, ensemble methods, and anomaly detection can be explored in conjunction with rebalancing to enhance model performance in imbalanced datasets.

In summary, rebalancing is a powerful technique in machine learning to address the issue of imbalanced data distribution. By adjusting sample weights and data distribution, rebalancing methods aim to provide equal importance to all classes during model training, resulting in improved predictive performance. However, the choice of rebalancing approach should be based on careful consideration of the dataset characteristics and evaluation of the rebalanced datasets.

本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。 如若内容造成侵权/违法违规/事实不符,请联系我的编程经验分享网邮箱:veading@qq.com进行投诉反馈,一经查实,立即删除!