k8s的二进制部署

k8s的二进制部署:源码包部署

k8s master01:192.168.66.10 kube-apiserver kube-controller-manager kube-scheduler etcd

k8s master02“ 192.168.66.20 kube-apiserver kube-controller-manager kube-scheduler

node节点01:192.168.66.30 kubelet kube-proxy etcd

node节点02:192.168.66.40 kubelet kube-proxy etcd

负载均衡: nignix+keepalive:master 192.168.66.50

backup:192.168.66.60

etcd: 192.168.66.10

192.168.66.30

192.168.66.40

所有 :master01 node 1,2

systemctl stop firewalld

setenforce 0

iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X

swapoff -a

###

swapoff -a

k8s在设计时,为了提升性能,默认是不使用swap分区,kubenetes在初始化时,会检测swap是否关闭

##

master1:

hostnamectl set-hostname master01

node1:

hostnamectl set-hostname node01

node2:

hostnamectl set-hostname node02

所有

vim /etc/hosts

192.168.66.10 master01

192.168.66.30 node01

192.168.66.40 node02

vim /etc/sysctl.d/k8s.conf

#开启网桥模式:

net.bridge.bridge-nf-call-ip6tables=1

net.bridge.bridge-nf-call-iptables=1

#网桥的流量传给iptables链,实现地址映射

#关闭ipv6的流量(可选项)

net.ipv6.conf.all.disable_ipv6=1

#根据工作中的实际情况,自定

net.ipv4.ip_forward=1

wq!

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv6.conf.all.disable_ipv6=1

net.ipv4.ip_forward=1

##

sysctl --system

yum install ntpdate -y

ntpdate ntp.aliyun.com

date

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum install -y docker-ce docker-ce-cli containerd.io

systemctl start docker.service

systemctl enable docker.service

mkdir -p /etc/docker

tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://t7pjr1xu.mirror.aliyuncs.com"]

}

EOF

systemctl daemon-reload

systemctl restart docker

部署第一个组件:

存储k8s的集群信息和用户配置组件:etcd

etcd是一个高可用---分布式的键值对存储数据库

采用raft算法保证节点的信息一致性,etcd是用go语言写的

etcd的端口:2379:api接口 对外为客户端提供通讯

2380:内存服务的通信端口

etcd一般都是集群部署,etcd也有选举leader的机制,至少要3台,或者奇数台

k8s的内部通信依靠证书认证,密钥认证:证书的签发环境

master01:

cd /opt

投进来三个证书

![]()

mv cfssl cfssl-certinfo cfssljson /usr/local/bin

chmod 777 /usr/local/bin/cfssl*

##

cfssl:证书签发的命令工具

cdssl-certinfo:查看证书信息的工具

cfssljson:把证书的格式转化为json格式,变成文件的承载式证书

##

cd /opt

mkdir k8s

cd k8s

把两个证书投进去:

![]()

vim etcd-cert.sh

vim etcd.sh

q!

chmod 777 etcd-cert.sh etcd.sh

mkdir /opt/k8s/etcd-cert

mv etcd-cert.sh etcd-cert/

cd /opt/k8s/etcd-cert/

./etcd-cert.sh

ls

##

ca-config.json ca-csr.json ca.pem server.csr server-key.pem

ca.csr ca-key.pem etcd-cert.sh server-csr.json server.pem

ca-config.json: 证书颁发的配置文件,定义了证书生成的策略,默认的过期时间和模版

ca-csr.json: 签名的请求文件,包括一些组织信息和加密方式

ca.pem 根证书文件,用于给其他组件签发证书

server.csr etcd的服务器签发证书的请求文件

server-key.pem etcd服务器的私钥文件

ca.csr 根证书签发请求文件

ca-key.pem 根证书私钥文件

server-csr.json 用于生成etcd的服务器证书和私钥签名文件

server.pem etcd服务器证书文件,用于加密和认证 etcd 节点之间的通信。

###

cd /opt/k8s/

把 zxvf etcd-v3.4.9-linux-amd64.tar.gz 拖进去

![]()

tar zxvf etcd-v3.4.9-linux-amd64.tar.gz

ls etcd-v3.4.9-linux-amd64

mkdir -p /opt/etcd/{cfg,bin,ssl}

cd etcd-v3.4.9-linux-amd64

mv etcd etcdctl /opt/etcd/bin/

cp /opt/k8s/etcd-cert/*.pem /opt/etcd/ssl/

cd /opt/k8s/

./etcd.sh etcd01 192.168.66.10 etcd02=https://192.168.66.30:2380,etcd03=https://192.168.66.40:2380

开一台master1终端

scp -r /opt/etcd/ root@192.168.66.30:/opt/

scp -r /opt/etcd/ root@192.168.66.40:/opt/

scp /usr/lib/systemd/system/etcd.service root@192.168.66.30:/usr/lib/systemd/system/

scp /usr/lib/systemd/system/etcd.service root@192.168.66.40:/usr/lib/systemd/system/

node1:

vim /opt/etcd/cfg/etcd

node2:

vim /opt/etcd/cfg/etcd

一个一个起

从master01开始

systemctl start etcd

systemctl enable etcd

systemctl status etcd

master:

ETCDCTL_API=3 /opt/etcd/bin/etcdctl --cacert=/opt/etcd/ssl/ca.pem --cert=/opt/etcd/ssl/server.pem --key=/opt/etcd/ssl/server-key.pem --endpoints="https://192.168.66.10:2379,https://192.168.66.30:2379,https://192.168.66.40:2379" endpoint health --write-out=table

ETCDCTL_API=3 /opt/etcd/bin/etcdctl --cacert=/opt/etcd/ssl/ca.pem --cert=/opt/etcd/ssl/server.pem --key=/opt/etcd/ssl/server-key.pem --endpoints="https://192.168.66.10:2379,https://192.168.66.30:2379,https://192.168.66.40:2379" --write-out=table member list

master01:

#上传 master.zip 和 k8s-cert.sh 到 /opt/k8s 目录中,解压 master.zip 压缩包

cd /opt/k8s/

vim k8s-cert.sh

unzip master.zip

chmod 777 *.sh

vim controller-manager.sh

vim scheduler.sh

vim admin.sh

#创建kubernetes工作目录

mkdir -p /opt/kubernetes/{bin,cfg,ssl,logs}

mkdir /opt/k8s/k8s-cert

mv /opt/k8s/k8s-cert.sh /opt/k8s/k8s-cert

cd /opt/k8s/k8s-cert/

./k8s-cert.sh

ls

cp ca*pem apiserver*pem /opt/kubernetes/ssl/

cd /opt/kubernetes/ssl/

ls

cd /opt/k8s/

把 kubernetes-server-linux-amd64.tar.gz 拖进去

tar zxvf kubernetes-server-linux-amd64.tar.gz

cd /opt/k8s/kubernetes/server/bin

cp kube-apiserver kubectl kube-controller-manager kube-scheduler /opt/kubernetes/bin/

ln -s /opt/kubernetes/bin/* /usr/local/bin/

kubectl get node

kubectl get cs

cd /opt/k8s/

vim token.sh

#!/bin/bash

#获取随机数前16个字节内容,以十六进制格式输出,并删除其中空格

BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')

#生成 token.csv 文件,按照 Token序列号,用户名,UID,用户组 的格式生成

cat > /opt/kubernetes/cfg/token.csv <<EOF

${BOOTSTRAP_TOKEN},kubelet-bootstrap,10001,"system:kubelet-bootstrap"

EOF

wq!

chmod 777 token.sh

./token.sh

cat /opt/kubernetes/cfg/token.csv

cd /opt/k8s/

./apiserver.sh 192.168.66.10 https://192.168.66.10:2379,https://192.168.66.30:2379,https://192.168.66.40:2379

netstat -natp | grep 6443

cd /opt/k8s/

./scheduler.sh

ps aux | grep kube-scheduler

./controller-manager.sh

ps aux | grep kube-controller-manager

./admin.sh

kubectl get cs

kubectl version

kubectl api-resources

vim /etc/profile

G

o

source <(kubectl completion bash)

source /etc/profile

在所有 node 节点上操作 node1,2:

mkdir -p /opt/kubernetes/{bin,cfg,ssl,logs}

cd /opt

node.zip 到 /opt 目录中

unzip node.zip

chmod 777 kubelet.sh proxy.sh

master01:

cd /opt/k8s/kubernetes/server/bin

scp kubelet kube-proxy root@192.168.66.30:/opt/kubernetes/bin/

scp kubelet kube-proxy root@192.168.66.40:/opt/kubernetes/bin/

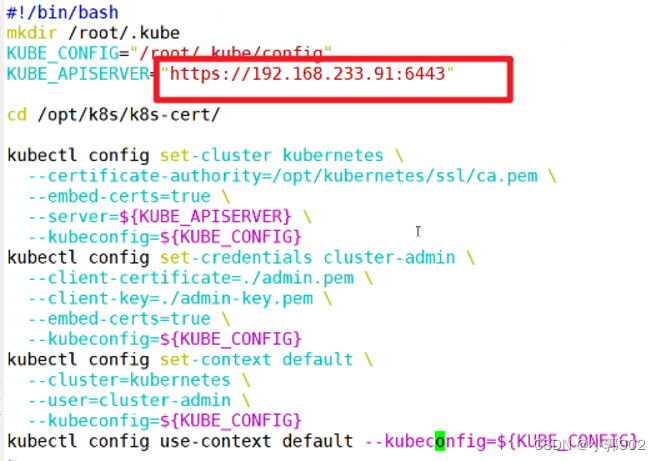

master 01:

mkdir -p /opt/k8s/kubeconfig

cd /opt/k8s/kubeconfig

把kubeconfig.sh 拖进去

chmod 777 kubeconfig.sh

./kubeconfig.sh 192.168.66.10 /opt/k8s/k8s-cert/

scp bootstrap.kubeconfig kube-proxy.kubeconfig root@192.168.66.30:/opt/kubernetes/cfg/

scp bootstrap.kubeconfig kube-proxy.kubeconfig root@192.168.66.40:/opt/kubernetes/cfg/

node1,2:

cd /opt/kubernetes/cfg/

ls

master01:

kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

#RBAC授权,使用户 kubelet-bootstrap 能够有权限发起 CSR 请求证书,通过CSR加密认证实现INIDE节点加入到集群当中,kubelet获取master的验证信息和获取API的验证

kubectl create clusterrolebinding cluster-system-anonymous --clusterrole=cluster-admin --user=system:anonymous

node1:

cd /opt/

chmod 777 kubelet.sh

./kubelet.sh 192.168.66.30

master01:

kubectl get cs

kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

node-csr-duiobEzQ0R93HsULoS9NT9JaQylMmid_nBF3Ei3NtFE 12s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending

kubectl certificate approve node-csr-duiobEzQ0R93HsULoS9NT9JaQylMmid_nBF3Ei3NtFE

##上面的密钥对

kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

node-csr-duiobEzQ0R93HsULoS9NT9JaQylMmid_nBF3Ei3NtFE 2m5s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Approved,Issued

##必须是Approved,Issued 才成功

node2:

cd /opt/

chmod 777 kubelet.sh

./kubelet.sh 192.168.66.40

master01:

kubectl get cs

kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

node-csr-duiobEzQ0R93HsULoS9NT9JaQylMmid_nBF3Ei3NtFE 12s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending

kubectl certificate approve node-csr-duiobEzQ0R93HsULoS9NT9JaQylMmid_nBF3Ei3NtFE

##上面的密钥对

kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

node-csr-duiobEzQ0R93HsULoS9NT9JaQylMmid_nBF3Ei3NtFE 2m5s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Approved,Issued

##必须是Approved,Issued 才成功

master01:

kubectl get node

在node1:

#上传 cni-plugins-linux-amd64-v0.8.6.tgz 和 flannel.tar 到 /opt 目录中

cd /opt/

docker load -i flannel.tar

mkdir -p /opt/cni/bin

tar zxvf cni-plugins-linux-amd64-v0.8.6.tgz -C /opt/cni/bin

node2:

#上传 cni-plugins-linux-amd64-v0.8.6.tgz 和 flannel.tar 到 /opt 目录中

cd /opt/

docker load -i flannel.tar

mkdir -p /opt/cni/bin

tar zxvf cni-plugins-linux-amd64-v0.8.6.tgz -C /opt/cni/bin

在 master01 节点上操作

#上传 kube-flannel.yml 文件到 /opt/k8s 目录中,部署 CNI 网络

cd /opt/k8s

kubectl apply -f kube-flannel.yml

kubectl get pod -n kube-system

kubectl get pod -o wide -n kube-system

//在node1,2 节点上操作

#上传 coredns.tar 到 /opt 目录中

cd /opt

docker load -i coredns.tar

//在 master01 节点上操作

#上传 coredns.yaml 文件到 /opt/k8s 目录中,部署 CoreDNS

cd /opt/k8s

kubectl apply -f coredns.yaml

kubectl get pods -n kube-system

kubectl create clusterrolebinding cluster-system-anonymous --clusterrole=cluster-admin --user=system:anonymous

kubectl run -it --rm dns-test --image=busybox:1.28.4 sh

If you don't see a command prompt, try pressing enter.

/ # nslookup kubernetes

exit

master02:

systemctl stop firewalld

setenforce 0

iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X

swapoff -a

hostnamectl set-hostname master02

所有的机器做映射

master02:

vim /etc/sysctl.d/k8s.conf

#开启网桥模式:

net.bridge.bridge-nf-call-ip6tables=1

net.bridge.bridge-nf-call-iptables=1

#网桥的流量传给iptables链,实现地址映射

#关闭ipv6的流量(可选项)

net.ipv6.conf.all.disable_ipv6=1

#根据工作中的实际情况,自定

net.ipv4.ip_forward=1

wq!

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv6.conf.all.disable_ipv6=1

net.ipv4.ip_forward=1

##

sysctl --system

yum install ntpdate -y

ntpdate ntp.aliyun.com

date

master01:

scp -r /opt/etcd/ root@192.168.66.20:/opt/

scp -r /opt/kubernetes/ root@192.168.66.20:/opt

scp -r /root/.kube root@192.168.66.20:/root

scp /usr/lib/systemd/system/{kube-apiserver,kube-controller-manager,kube-scheduler}.service root@192.168.66.20:/usr/lib/systemd/system/

master02:

vim /opt/kubernetes/cfg/kube-apiserver

systemctl start kube-apiserver.service

systemctl enable kube-apiserver.service

systemctl start kube-controller-manager.service

systemctl enable kube-controller-manager.service

systemctl start kube-scheduler.service

systemctl enable kube-scheduler.service

ln -s /opt/kubernetes/bin/* /usr/local/bin/

kubectl get nodes

kubectl get cs

kubectl get pod

##node节点和master02并没真正建议通信。只是从etcd信息

在nginx 1,2

cat > /etc/yum.repos.d/nginx.repo << 'EOF'

[nginx]

name=nginx repo

baseurl=http://nginx.org/packages/centos/7/$basearch/

gpgcheck=0

EOF

yum install nginx -y

vim /etc/nginx/nginx.conf

events {

worker_connections 1024;

}

#添加

stream {

log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';

#日志记录格式

#$remote_addr: 客户端的 IP 地址。

#$upstream_addr: 上游服务器的地址。

#[$time_local]: 访问时间,使用本地时间。

#$status: HTTP 响应状态码。

#$upstream_bytes_sent: 从上游服务器发送到客户端的字节数。

access_log /var/log/nginx/k8s-access.log main;

upstream k8s-apiserver {

server 192.168.233.10:6443;

server 192.168.233.20:6443;

}

server {

listen 6443;

proxy_pass k8s-apiserver;

}

}

##

stream {

log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';

access_log /var/log/nginx/k8s-access.log main;

upstream k8s-apiserver {

server 192.168.233.10:6443;

server 192.168.233.20:6443;

}

server {

listen 6443;

proxy_pass k8s-apiserver;

}

}

wq!

nginx -t

systemctl start nginx

systemctl enable nginx

netstat -natp | grep nginx

yum install keepalived -y

vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

# 接收邮件地址

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

# 邮件发送地址

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id NGINX_MASTER #lb01节点的为 NGINX_MASTER,lb02节点的为 NGINX_BACKUP

#vrrp_strict #注释掉

}

#添加一个周期性执行的脚本

vrrp_script check_nginx {

script "/etc/nginx/check_nginx.sh" #指定检查nginx存活的脚本路径

}

vrrp_instance VI_1 {

state MASTER #lb01节点的为 MASTER,lb02节点的为 BACKUP

interface ens33 #指定网卡名称 ens33

virtual_router_id 51 #指定vrid,两个节点要一致

priority 100 #lb01节点的为 100,lb02节点的为 90

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.233.100/24 #指定 VIP

}

track_script {

check_nginx #指定vrrp_script配置的脚本 !!!从不需要设置脚本

}

}

vim /etc/nginx/check_nginx.sh

#!/bin/bash

/usr/bin/curl -I http://localhost &>/dev/null

if [ $? -ne 0 ];then

# /etc/init.d/keepalived stop

systemctl stop keepalived

fi

chmod +x /etc/nginx/check_nginx.sh

systemctl start keepalived

systemctl enable keepalived

ip a

node1,2

cd /opt/kubernetes/cfg/

vim bootstrap.kubeconfig

server: https://192.168.233.100:6443

vim kubelet.kubeconfig

server: https://192.168.233.100:6443

vim kube-proxy.kubeconfig

server: https://192.168.233.100:6443

//重启kubelet和kube-proxy服务

systemctl restart kubelet.service

systemctl restart kube-proxy.service

nginx1:

netstat -natp | grep nginx

master1:

kubectl run nginx --image=nginx

master02

kubectl get pods

Dashboard:

仪表盘,kubenetes的可视化界面,在这个可视化界面上,可以对k8s集群进行管理。

master01:

#上传 recommended.yaml 文件到 /opt/k8s 目录中

cd /opt/k8s

kubectl apply -f recommended.yaml

#创建service account并绑定默认cluster-admin管理员集群角色

kubectl create serviceaccount dashboard-admin -n kube-system

kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')

复制:

#使用输出的token登录Dashboard

本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。 如若内容造成侵权/违法违规/事实不符,请联系我的编程经验分享网邮箱:veading@qq.com进行投诉反馈,一经查实,立即删除!