【精选】车辆违规实线变道检测系统:融合Gold-YOLO改进YOLOv8

1.研究背景与意义

项目参考AAAI Association for the Advancement of Artificial Intelligence

研究背景与意义

随着城市化进程的加快和交通工具的普及,道路交通安全问题日益凸显。其中,车辆违规实线变道是导致交通事故的重要原因之一。在道路上,实线的存在是为了保证交通的有序进行,车辆在变道时必须遵守交通规则,但是很多驾驶员由于各种原因,如疲劳驾驶、不熟悉道路规则等,会违反实线变道规定,给道路交通安全带来潜在的风险。

为了解决车辆违规实线变道问题,研究者们提出了各种检测系统和算法。其中,基于计算机视觉的车辆违规实线变道检测系统成为研究的热点。传统的方法主要基于特征提取和分类器的组合,但是这些方法往往需要手工设计特征,且对于复杂的场景和变化的光照条件效果不佳。近年来,深度学习技术的发展为车辆违规实线变道检测带来了新的机遇。

在深度学习领域,YOLO(You Only Look Once)是一种非常流行的目标检测算法。YOLO算法通过将目标检测问题转化为回归问题,实现了实时目标检测的能力。然而,传统的YOLO算法在车辆违规实线变道检测中存在一些问题,如对小目标的检测效果不佳、定位精度不高等。为了改进这些问题,研究者们提出了一种改进的YOLO算法,即YOLOv8。

YOLOv8算法在YOLO算法的基础上进行了一系列的改进,主要包括引入了Gold-YOLO算法和一些优化策略。Gold-YOLO算法通过在训练过程中引入额外的标签信息,提高了模型的学习能力和检测精度。同时,YOLOv8还采用了一些优化策略,如数据增强、多尺度训练等,进一步提升了算法的性能。

综上所述,车辆违规实线变道检测系统的研究具有重要的现实意义和应用价值。首先,通过准确检测和识别车辆违规实线变道行为,可以及时发现和处理违规驾驶行为,提高道路交通安全性。其次,车辆违规实线变道检测系统可以为交通管理部门提供有效的监控手段,帮助他们制定更科学合理的交通管理策略。此外,车辆违规实线变道检测系统的研究还可以为其他交通安全问题的解决提供借鉴和参考,推动交通安全技术的发展。

因此,本研究旨在融合Gold-YOLO改进YOLOv8算法,设计和实现一种高效准确的车辆违规实线变道检测系统,为道路交通安全提供有效的保障,为交通管理和交通安全技术的发展做出贡献。

2.图片演示

3.视频演示

车辆违规实线变道检测系统:融合Gold-YOLO改进YOLOv8_哔哩哔哩_bilibili

4.数据集的采集&标注和整理

图片的收集

首先,我们需要收集所需的图片。这可以通过不同的方式来实现,例如使用现有的公开数据集TrafficLDatasets。

eiseg是一个图形化的图像注释工具,支持COCO和YOLO格式。以下是使用eiseg将图片标注为COCO格式的步骤:

(1)下载并安装eiseg。

(2)打开eiseg并选择“Open Dir”来选择你的图片目录。

(3)为你的目标对象设置标签名称。

(4)在图片上绘制矩形框,选择对应的标签。

(5)保存标注信息,这将在图片目录下生成一个与图片同名的JSON文件。

(6)重复此过程,直到所有的图片都标注完毕。

由于YOLO使用的是txt格式的标注,我们需要将VOC格式转换为YOLO格式。可以使用各种转换工具或脚本来实现。

下面是一个简单的方法是使用Python脚本,该脚本读取XML文件,然后将其转换为YOLO所需的txt格式。

import contextlib

import json

import cv2

import pandas as pd

from PIL import Image

from collections import defaultdict

from utils import *

# Convert INFOLKS JSON file into YOLO-format labels ----------------------------

def convert_infolks_json(name, files, img_path):

# Create folders

path = make_dirs()

# Import json

data = []

for file in glob.glob(files):

with open(file) as f:

jdata = json.load(f)

jdata['json_file'] = file

data.append(jdata)

# Write images and shapes

name = path + os.sep + name

file_id, file_name, wh, cat = [], [], [], []

for x in tqdm(data, desc='Files and Shapes'):

f = glob.glob(img_path + Path(x['json_file']).stem + '.*')[0]

file_name.append(f)

wh.append(exif_size(Image.open(f))) # (width, height)

cat.extend(a['classTitle'].lower() for a in x['output']['objects']) # categories

# filename

with open(name + '.txt', 'a') as file:

file.write('%s\n' % f)

# Write *.names file

names = sorted(np.unique(cat))

# names.pop(names.index('Missing product')) # remove

with open(name + '.names', 'a') as file:

[file.write('%s\n' % a) for a in names]

# Write labels file

for i, x in enumerate(tqdm(data, desc='Annotations')):

label_name = Path(file_name[i]).stem + '.txt'

with open(path + '/labels/' + label_name, 'a') as file:

for a in x['output']['objects']:

# if a['classTitle'] == 'Missing product':

# continue # skip

category_id = names.index(a['classTitle'].lower())

# The INFOLKS bounding box format is [x-min, y-min, x-max, y-max]

box = np.array(a['points']['exterior'], dtype=np.float32).ravel()

box[[0, 2]] /= wh[i][0] # normalize x by width

box[[1, 3]] /= wh[i][1] # normalize y by height

box = [box[[0, 2]].mean(), box[[1, 3]].mean(), box[2] - box[0], box[3] - box[1]] # xywh

if (box[2] > 0.) and (box[3] > 0.): # if w > 0 and h > 0

file.write('%g %.6f %.6f %.6f %.6f\n' % (category_id, *box))

# Split data into train, test, and validate files

split_files(name, file_name)

write_data_data(name + '.data', nc=len(names))

print(f'Done. Output saved to {os.getcwd() + os.sep + path}')

# Convert vott JSON file into YOLO-format labels -------------------------------

def convert_vott_json(name, files, img_path):

# Create folders

path = make_dirs()

name = path + os.sep + name

# Import json

data = []

for file in glob.glob(files):

with open(file) as f:

jdata = json.load(f)

jdata['json_file'] = file

data.append(jdata)

# Get all categories

file_name, wh, cat = [], [], []

for i, x in enumerate(tqdm(data, desc='Files and Shapes')):

with contextlib.suppress(Exception):

cat.extend(a['tags'][0] for a in x['regions']) # categories

# Write *.names file

names = sorted(pd.unique(cat))

with open(name + '.names', 'a') as file:

[file.write('%s\n' % a) for a in names]

# Write labels file

n1, n2 = 0, 0

missing_images = []

for i, x in enumerate(tqdm(data, desc='Annotations')):

f = glob.glob(img_path + x['asset']['name'] + '.jpg')

if len(f):

f = f[0]

file_name.append(f)

wh = exif_size(Image.open(f)) # (width, height)

n1 += 1

if (len(f) > 0) and (wh[0] > 0) and (wh[1] > 0):

n2 += 1

# append filename to list

with open(name + '.txt', 'a') as file:

file.write('%s\n' % f)

# write labelsfile

label_name = Path(f).stem + '.txt'

with open(path + '/labels/' + label_name, 'a') as file:

for a in x['regions']:

category_id = names.index(a['tags'][0])

# The INFOLKS bounding box format is [x-min, y-min, x-max, y-max]

box = a['boundingBox']

box = np.array([box['left'], box['top'], box['width'], box['height']]).ravel()

box[[0, 2]] /= wh[0] # normalize x by width

box[[1, 3]] /= wh[1] # normalize y by height

box = [box[0] + box[2] / 2, box[1] + box[3] / 2, box[2], box[3]] # xywh

if (box[2] > 0.) and (box[3] > 0.): # if w > 0 and h > 0

file.write('%g %.6f %.6f %.6f %.6f\n' % (category_id, *box))

else:

missing_images.append(x['asset']['name'])

print('Attempted %g json imports, found %g images, imported %g annotations successfully' % (i, n1, n2))

if len(missing_images):

print('WARNING, missing images:', missing_images)

# Split data into train, test, and validate files

split_files(name, file_name)

print(f'Done. Output saved to {os.getcwd() + os.sep + path}')

# Convert ath JSON file into YOLO-format labels --------------------------------

def convert_ath_json(json_dir): # dir contains json annotations and images

# Create folders

dir = make_dirs() # output directory

jsons = []

for dirpath, dirnames, filenames in os.walk(json_dir):

jsons.extend(

os.path.join(dirpath, filename)

for filename in [

f for f in filenames if f.lower().endswith('.json')

]

)

# Import json

n1, n2, n3 = 0, 0, 0

missing_images, file_name = [], []

for json_file in sorted(jsons):

with open(json_file) as f:

data = json.load(f)

# # Get classes

# try:

# classes = list(data['_via_attributes']['region']['class']['options'].values()) # classes

# except:

# classes = list(data['_via_attributes']['region']['Class']['options'].values()) # classes

# # Write *.names file

# names = pd.unique(classes) # preserves sort order

# with open(dir + 'data.names', 'w') as f:

# [f.write('%s\n' % a) for a in names]

# Write labels file

for x in tqdm(data['_via_img_metadata'].values(), desc=f'Processing {json_file}'):

image_file = str(Path(json_file).parent / x['filename'])

f = glob.glob(image_file) # image file

if len(f):

f = f[0]

file_name.append(f)

wh = exif_size(Image.open(f)) # (width, height)

n1 += 1 # all images

if len(f) > 0 and wh[0] > 0 and wh[1] > 0:

label_file = dir + 'labels/' + Path(f).stem + '.txt'

nlabels = 0

try:

with open(label_file, 'a') as file: # write labelsfile

# try:

# category_id = int(a['region_attributes']['class'])

# except:

# category_id = int(a['region_attributes']['Class'])

category_id = 0 # single-class

for a in x['regions']:

# bounding box format is [x-min, y-min, x-max, y-max]

box = a['shape_attributes']

box = np.array([box['x'], box['y'], box['width'], box['height']],

dtype=np.float32).ravel()

box[[0, 2]] /= wh[0] # normalize x by width

box[[1, 3]] /= wh[1] # normalize y by height

box = [box[0] + box[2] / 2, box[1] + box[3] / 2, box[2],

box[3]] # xywh (left-top to center x-y)

if box[2] > 0. and box[3] > 0.: # if w > 0 and h > 0

file.write('%g %.6f %.6f %.6f %.6f\n' % (category_id, *box))

n3 += 1

nlabels += 1

if nlabels == 0: # remove non-labelled images from dataset

os.system(f'rm {label_file}')

# print('no labels for %s' % f)

continue # next file

# write image

img_size = 4096 # resize to maximum

img = cv2.imread(f) # BGR

assert img is not None, 'Image Not Found ' + f

r = img_size / max(img.shape) # size ratio

if r < 1: # downsize if necessary

h, w, _ = img.shape

img = cv2.resize(img, (int(w * r), int(h * r)), interpolation=cv2.INTER_AREA)

ifile = dir + 'images/' + Path(f).name

if cv2.imwrite(ifile, img): # if success append image to list

with open(dir + 'data.txt', 'a') as file:

file.write('%s\n' % ifile)

n2 += 1 # correct images

except Exception:

os.system(f'rm {label_file}')

print(f'problem with {f}')

else:

missing_images.append(image_file)

nm = len(missing_images) # number missing

print('\nFound %g JSONs with %g labels over %g images. Found %g images, labelled %g images successfully' %

(len(jsons), n3, n1, n1 - nm, n2))

if len(missing_images):

print('WARNING, missing images:', missing_images)

# Write *.names file

names = ['knife'] # preserves sort order

with open(dir + 'data.names', 'w') as f:

[f.write('%s\n' % a) for a in names]

# Split data into train, test, and validate files

split_rows_simple(dir + 'data.txt')

write_data_data(dir + 'data.data', nc=1)

print(f'Done. Output saved to {Path(dir).absolute()}')

def convert_coco_json(json_dir='../coco/annotations/', use_segments=False, cls91to80=False):

save_dir = make_dirs() # output directory

coco80 = coco91_to_coco80_class()

# Import json

for json_file in sorted(Path(json_dir).resolve().glob('*.json')):

fn = Path(save_dir) / 'labels' / json_file.stem.replace('instances_', '') # folder name

fn.mkdir()

with open(json_file) as f:

data = json.load(f)

# Create image dict

images = {'%g' % x['id']: x for x in data['images']}

# Create image-annotations dict

imgToAnns = defaultdict(list)

for ann in data['annotations']:

imgToAnns[ann['image_id']].append(ann)

# Write labels file

for img_id, anns in tqdm(imgToAnns.items(), desc=f'Annotations {json_file}'):

img = images['%g' % img_id]

h, w, f = img['height'], img['width'], img['file_name']

bboxes = []

segments = []

for ann in anns:

if ann['iscrowd']:

continue

# The COCO box format is [top left x, top left y, width, height]

box = np.array(ann['bbox'], dtype=np.float64)

box[:2] += box[2:] / 2 # xy top-left corner to center

box[[0, 2]] /= w # normalize x

box[[1, 3]] /= h # normalize y

if box[2] <= 0 or box[3] <= 0: # if w <= 0 and h <= 0

continue

cls = coco80[ann['category_id'] - 1] if cls91to80 else ann['category_id'] - 1 # class

box = [cls] + box.tolist()

if box not in bboxes:

bboxes.append(box)

# Segments

if use_segments:

if len(ann['segmentation']) > 1:

s = merge_multi_segment(ann['segmentation'])

s = (np.concatenate(s, axis=0) / np.array([w, h])).reshape(-1).tolist()

else:

s = [j for i in ann['segmentation'] for j in i] # all segments concatenated

s = (np.array(s).reshape(-1, 2) / np.array([w, h])).reshape(-1).tolist()

s = [cls] + s

if s not in segments:

segments.append(s)

# Write

with open((fn / f).with_suffix('.txt'), 'a') as file:

for i in range(len(bboxes)):

line = *(segments[i] if use_segments else bboxes[i]), # cls, box or segments

file.write(('%g ' * len(line)).rstrip() % line + '\n')

def min_index(arr1, arr2):

"""Find a pair of indexes with the shortest distance.

Args:

arr1: (N, 2).

arr2: (M, 2).

Return:

a pair of indexes(tuple).

"""

dis = ((arr1[:, None, :] - arr2[None, :, :]) ** 2).sum(-1)

return np.unravel_index(np.argmin(dis, axis=None), dis.shape)

def merge_multi_segment(segments):

"""Merge multi segments to one list.

Find the coordinates with min distance between each segment,

then connect these coordinates with one thin line to merge all

segments into one.

Args:

segments(List(List)): original segmentations in coco's json file.

like [segmentation1, segmentation2,...],

each segmentation is a list of coordinates.

"""

s = []

segments = [np.array(i).reshape(-1, 2) for i in segments]

idx_list = [[] for _ in range(len(segments))]

# record the indexes with min distance between each segment

for i in range(1, len(segments)):

idx1, idx2 = min_index(segments[i - 1], segments[i])

idx_list[i - 1].append(idx1)

idx_list[i].append(idx2)

# use two round to connect all the segments

for k in range(2):

# forward connection

if k == 0:

for i, idx in enumerate(idx_list):

# middle segments have two indexes

# reverse the index of middle segments

if len(idx) == 2 and idx[0] > idx[1]:

idx = idx[::-1]

segments[i] = segments[i][::-1, :]

segments[i] = np.roll(segments[i], -idx[0], axis=0)

segments[i] = np.concatenate([segments[i], segments[i][:1]])

# deal with the first segment and the last one

if i in [0, len(idx_list) - 1]:

s.append(segments[i])

else:

idx = [0, idx[1] - idx[0]]

s.append(segments[i][idx[0]:idx[1] + 1])

else:

for i in range(len(idx_list) - 1, -1, -1):

if i not in [0, len(idx_list) - 1]:

idx = idx_list[i]

nidx = abs(idx[1] - idx[0])

s.append(segments[i][nidx:])

return s

def delete_dsstore(path='../datasets'):

# Delete apple .DS_store files

from pathlib import Path

files = list(Path(path).rglob('.DS_store'))

print(files)

for f in files:

f.unlink()

if __name__ == '__main__':

source = 'COCO'

if source == 'COCO':

convert_coco_json('./annotations', # directory with *.json

use_segments=True,

cls91to80=True)

elif source == 'infolks': # Infolks https://infolks.info/

convert_infolks_json(name='out',

files='../data/sm4/json/*.json',

img_path='../data/sm4/images/')

elif source == 'vott': # VoTT https://github.com/microsoft/VoTT

convert_vott_json(name='data',

files='../../Downloads/athena_day/20190715/*.json',

img_path='../../Downloads/athena_day/20190715/') # images folder

elif source == 'ath': # ath format

convert_ath_json(json_dir='../../Downloads/athena/') # images folder

# zip results

# os.system('zip -r ../coco.zip ../coco')

整理数据文件夹结构

我们需要将数据集整理为以下结构:

-----datasets

-----coco128-seg

|-----images

| |-----train

| |-----valid

| |-----test

|

|-----labels

| |-----train

| |-----valid

| |-----test

|

模型训练

Epoch gpu_mem box obj cls labels img_size

1/200 20.8G 0.01576 0.01955 0.007536 22 1280: 100%|██████████| 849/849 [14:42<00:00, 1.04s/it]

Class Images Labels P R mAP@.5 mAP@.5:.95: 100%|██████████| 213/213 [01:14<00:00, 2.87it/s]

all 3395 17314 0.994 0.957 0.0957 0.0843

Epoch gpu_mem box obj cls labels img_size

2/200 20.8G 0.01578 0.01923 0.007006 22 1280: 100%|██████████| 849/849 [14:44<00:00, 1.04s/it]

Class Images Labels P R mAP@.5 mAP@.5:.95: 100%|██████████| 213/213 [01:12<00:00, 2.95it/s]

all 3395 17314 0.996 0.956 0.0957 0.0845

Epoch gpu_mem box obj cls labels img_size

3/200 20.8G 0.01561 0.0191 0.006895 27 1280: 100%|██████████| 849/849 [10:56<00:00, 1.29it/s]

Class Images Labels P R mAP@.5 mAP@.5:.95: 100%|███████ | 187/213 [00:52<00:00, 4.04it/s]

all 3395 17314 0.996 0.957 0.0957 0.0845

5.核心代码讲解

5.2 predict.py

封装为类后的代码如下:

from ultralytics.engine.predictor import BasePredictor

from ultralytics.engine.results import Results

from ultralytics.utils import ops

class DetectionPredictor(BasePredictor):

def postprocess(self, preds, img, orig_imgs):

preds = ops.non_max_suppression(preds,

self.args.conf,

self.args.iou,

agnostic=self.args.agnostic_nms,

max_det=self.args.max_det,

classes=self.args.classes)

if not isinstance(orig_imgs, list):

orig_imgs = ops.convert_torch2numpy_batch(orig_imgs)

results = []

for i, pred in enumerate(preds):

orig_img = orig_imgs[i]

pred[:, :4] = ops.scale_boxes(img.shape[2:], pred[:, :4], orig_img.shape)

img_path = self.batch[0][i]

results.append(Results(orig_img, path=img_path, names=self.model.names, boxes=pred))

return results

这个程序文件是一个名为predict.py的文件,它是一个用于预测基于检测模型的类DetectionPredictor的扩展类。它使用了Ultralytics YOLO库,并遵循AGPL-3.0许可证。

该文件包含了一个名为DetectionPredictor的类,继承自BasePredictor类。它还包含了一个名为postprocess的方法,用于对预测结果进行后处理,并返回一个Results对象的列表。

在postprocess方法中,首先对预测结果进行非最大值抑制(non_max_suppression),根据一些参数进行筛选和过滤。然后,将预测结果的边界框坐标进行缩放,以适应原始图像的尺寸。最后,将原始图像、图像路径、类别名称和边界框信息封装成Results对象,并将其添加到结果列表中。

该文件还包含了一个示例用法的注释,展示了如何使用该类进行预测。

5.3 train.py

# Ultralytics YOLO 🚀, AGPL-3.0 license

from copy import copy

import numpy as np

from ultralytics.data import build_dataloader, build_yolo_dataset

from ultralytics.engine.trainer import BaseTrainer

from ultralytics.models import yolo

from ultralytics.nn.tasks import DetectionModel

from ultralytics.utils import LOGGER, RANK

from ultralytics.utils.torch_utils import de_parallel, torch_distributed_zero_first

class DetectionTrainer(BaseTrainer):

def build_dataset(self, img_path, mode='train', batch=None):

gs = max(int(de_parallel(self.model).stride.max() if self.model else 0), 32)

return build_yolo_dataset(self.args, img_path, batch, self.data, mode=mode, rect=mode == 'val', stride=gs)

def get_dataloader(self, dataset_path, batch_size=16, rank=0, mode='train'):

assert mode in ['train', 'val']

with torch_distributed_zero_first(rank):

dataset = self.build_dataset(dataset_path, mode, batch_size)

shuffle = mode == 'train'

if getattr(dataset, 'rect', False) and shuffle:

LOGGER.warning("WARNING ?? 'rect=True' is incompatible with DataLoader shuffle, setting shuffle=False")

shuffle = False

workers = 0

return build_dataloader(dataset, batch_size, workers, shuffle, rank)

def preprocess_batch(self, batch):

batch['img'] = batch['img'].to(self.device, non_blocking=True).float() / 255

return batch

def set_model_attributes(self):

self.model.nc = self.data['nc']

self.model.names = self.data['names']

self.model.args = self.args

def get_model(self, cfg=None, weights=None, verbose=True):

model = DetectionModel(cfg, nc=self.data['nc'], verbose=verbose and RANK == -1)

if weights:

model.load(weights)

return model

def get_validator(self):

self.loss_names = 'box_loss', 'cls_loss', 'dfl_loss'

return yolo.detect.DetectionValidator(self.test_loader, save_dir=self.save_dir, args=copy(self.args))

def label_loss_items(self, loss_items=None, prefix='train'):

keys = [f'{prefix}/{x}' for x in self.loss_names]

if loss_items is not None:

loss_items = [round(float(x), 5) for x in loss_items]

return dict(zip(keys, loss_items))

else:

return keys

def progress_string(self):

return ('\n' + '%11s' *

(4 + len(self.loss_names))) % ('Epoch', 'GPU_mem', *self.loss_names, 'Instances', 'Size')

def plot_training_samples(self, batch, ni):

plot_images(images=batch['img'],

batch_idx=batch['batch_idx'],

cls=batch['cls'].squeeze(-1),

bboxes=batch['bboxes'],

paths=batch['im_file'],

fname=self.save_dir / f'train_batch{ni}.jpg',

on_plot=self.on_plot)

def plot_metrics(self):

plot_results(file=self.csv, on_plot=self.on_plot)

def plot_training_labels(self):

boxes = np.concatenate([lb['bboxes'] for lb in self.train_loader.dataset.labels], 0)

cls = np.concatenate([lb['cls'] for lb in self.train_loader.dataset.labels], 0)

plot_labels(boxes, cls.squeeze(), names=self.data['names'], save_dir=self.save_dir, on_plot=self.on_plot)

if __name__ == '__main__':

args = dict(model='./yolov8l-goldyolo.yaml', data='coco8.yaml', epochs=100)

trainer = DetectionTrainer(overrides=args)

trainer.train()

这个程序文件是一个用于训练目标检测模型的程序。它使用了Ultralytics YOLO库,提供了一些方便的功能和工具来训练和评估YOLO模型。

该程序文件定义了一个名为DetectionTrainer的类,它继承自BaseTrainer类。DetectionTrainer类包含了一些用于构建数据集、构建数据加载器、预处理数据、设置模型属性、获取模型、获取验证器等方法。

在__main__函数中,首先定义了一些参数,包括模型文件路径、数据文件路径和训练轮数。然后创建了一个DetectionTrainer对象,并调用其train方法开始训练模型。

总体来说,这个程序文件实现了一个用于训练目标检测模型的训练器,并提供了一些方便的功能和工具来处理数据、构建模型、进行训练和评估。

5.5 backbone\convnextv2.py

import torch

import torch.nn as nn

import torch.nn.functional as F

from timm.models.layers import trunc_normal_, DropPath

class LayerNorm(nn.Module):

def __init__(self, normalized_shape, eps=1e-6, data_format="channels_last"):

super().__init__()

self.weight = nn.Parameter(torch.ones(normalized_shape))

self.bias = nn.Parameter(torch.zeros(normalized_shape))

self.eps = eps

self.data_format = data_format

if self.data_format not in ["channels_last", "channels_first"]:

raise NotImplementedError

self.normalized_shape = (normalized_shape, )

def forward(self, x):

if self.data_format == "channels_last":

return F.layer_norm(x, self.normalized_shape, self.weight, self.bias, self.eps)

elif self.data_format == "channels_first":

u = x.mean(1, keepdim=True)

s = (x - u).pow(2).mean(1, keepdim=True)

x = (x - u) / torch.sqrt(s + self.eps)

x = self.weight[:, None, None] * x + self.bias[:, None, None]

return x

class GRN(nn.Module):

def __init__(self, dim):

super().__init__()

self.gamma = nn.Parameter(torch.zeros(1, 1, 1, dim))

self.beta = nn.Parameter(torch.zeros(1, 1, 1, dim))

def forward(self, x):

Gx = torch.norm(x, p=2, dim=(1,2), keepdim=True)

Nx = Gx / (Gx.mean(dim=-1, keepdim=True) + 1e-6)

return self.gamma * (x * Nx) + self.beta + x

class Block(nn.Module):

def __init__(self, dim, drop_path=0.):

super().__init__()

self.dwconv = nn.Conv2d(dim, dim, kernel_size=7, padding=3, groups=dim)

self.norm = LayerNorm(dim, eps=1e-6)

self.pwconv1 = nn.Linear(dim, 4 * dim)

self.act = nn.GELU()

self.grn = GRN(4 * dim)

self.pwconv2 = nn.Linear(4 * dim, dim)

self.drop_path = DropPath(drop_path) if drop_path > 0. else nn.Identity()

def forward(self, x):

input = x

x = self.dwconv(x)

x = x.permute(0, 2, 3, 1)

x = self.norm(x)

x = self.pwconv1(x)

x = self.act(x)

x = self.grn(x)

x = self.pwconv2(x)

x = x.permute(0, 3, 1, 2)

x = input + self.drop_path(x)

return x

class ConvNeXtV2(nn.Module):

def __init__(self, in_chans=3, num_classes=1000,

depths=[3, 3, 9, 3], dims=[96, 192, 384, 768],

drop_path_rate=0., head_init_scale=1.

):

super().__init__()

self.depths = depths

self.downsample_layers = nn.ModuleList()

stem = nn.Sequential(

nn.Conv2d(in_chans, dims[0], kernel_size=4, stride=4),

LayerNorm(dims[0], eps=1e-6, data_format="channels_first")

)

self.downsample_layers.append(stem)

for i in range(3):

downsample_layer = nn.Sequential(

LayerNorm(dims[i], eps=1e-6, data_format="channels_first"),

nn.Conv2d(dims[i], dims[i+1], kernel_size=2, stride=2),

)

self.downsample_layers.append(downsample_layer)

self.stages = nn.ModuleList()

dp_rates=[x.item() for x in torch.linspace(0, drop_path_rate, sum(depths))]

cur = 0

for i in range(4):

stage = nn.Sequential(

*[Block(dim=dims[i], drop_path=dp_rates[cur + j]) for j in range(depths[i])]

)

self.stages.append(stage)

cur += depths[i]

self.norm = nn.LayerNorm(dims[-1], eps=1e-6)

self.head = nn.Linear(dims[-1], num_classes)

self.apply(self._init_weights)

self.channel = [i.size(1) for i in self.forward(torch.randn(1, 3, 640, 640))]

def _init_weights(self, m):

if isinstance(m, (nn.Conv2d, nn.Linear)):

trunc_normal_(m.weight, std=.02)

nn.init.constant_(m.bias, 0)

def forward(self, x):

res = []

for i in range(4):

x = self.downsample_layers[i](x)

x = self.stages[i](x)

res.append(x)

return res

该程序文件是一个实现了ConvNeXt V2模型的PyTorch代码。ConvNeXt V2是一个用于图像分类任务的卷积神经网络模型。

该程序文件包含了以下几个主要部分:

-

LayerNorm类:实现了支持两种数据格式(channels_last和channels_first)的LayerNorm层。 -

GRN类:实现了全局响应归一化(Global Response Normalization)层。 -

Block类:实现了ConvNeXtV2模型的基本块。 -

ConvNeXtV2类:实现了ConvNeXt V2模型。 -

update_weight函数:用于更新模型的权重。 -

convnextv2_atto、convnextv2_femto、convnextv2_pico、convnextv2_nano、convnextv2_tiny、convnextv2_base、convnextv2_large、convnextv2_huge函数:分别返回不同规模的ConvNeXt V2模型。

该程序文件中的代码实现了ConvNeXt V2模型的各个组件,并提供了不同规模的模型供选择。可以根据需要选择合适的模型,并加载预训练权重进行使用。

5.6 backbone\CSwomTramsformer.py

import torch

import torch.nn as nn

import torch.nn.functional as F

from functools import partial

from timm.data import IMAGENET_DEFAULT_MEAN, IMAGENET_DEFAULT_STD

from timm.models.helpers import load_pretrained

from timm.models.layers import DropPath, to_2tuple, trunc_normal_

from timm.models.registry import register_model

from einops.layers.torch import Rearrange

import torch.utils.checkpoint as checkpoint

import numpy as np

import time

__all__ = ['CSWin_tiny', 'CSWin_small', 'CSWin_base', 'CSWin_large']

class Mlp(nn.Module):

def __init__(self, in_features, hidden_features=None, out_features=None, act_layer=nn.GELU, drop=0.):

super().__init__()

out_features = out_features or in_features

hidden_features = hidden_features or in_features

self.fc1 = nn.Linear(in_features, hidden_features)

self.act = act_layer()

self.fc2 = nn.Linear(hidden_features, out_features)

self.drop = nn.Dropout(drop)

def forward(self, x):

x = self.fc1(x)

x = self.act(x)

x = self.drop(x)

x = self.fc2(x)

x = self.drop(x)

return x

class LePEAttention(nn.Module):

def __init__(self, dim, resolution, idx, split_size=7, dim_out=None, num_heads=8, attn_drop=0., proj_drop=0., qk_scale=None):

super().__init__()

self.dim = dim

self.dim_out = dim_out or dim

self.resolution = resolution

self.split_size = split_size

self.num_heads = num_heads

head_dim = dim // num_heads

# NOTE scale factor was wrong in my original version, can set manually to be compat with prev weights

self.scale = qk_scale or head_dim ** -0.5

if idx == -1:

H_sp, W_sp = self.resolution, self.resolution

elif idx == 0:

H_sp, W_sp = self.resolution, self.split_size

elif idx == 1:

W_sp, H_sp = self.resolution, self.split_size

else:

print ("ERROR MODE", idx)

exit(0)

self.H_sp = H_sp

self.W_sp = W_sp

stride = 1

self.get_v = nn.Conv2d(dim, dim, kernel_size=3, stride=1, padding=1,groups=dim)

self.attn_drop = nn.Dropout(attn_drop)

def im2cswin(self, x):

B, N, C = x.shape

H = W = int(np.sqrt(N))

x = x.transpose(-2,-1).contiguous().view(B, C, H, W)

x = img2windows(x, self.H_sp, self.W_sp)

x = x.reshape(-1, self.H_sp* self.W_sp, self.num_heads, C // self.num_heads).permute(0, 2, 1, 3).contiguous()

return x

def get_lepe(self, x, func):

B, N, C = x.shape

H = W = int(np.sqrt(N))

x = x.transpose(-2,-1).contiguous().view(B, C, H, W)

H_sp, W_sp = self.H_sp, self.W_sp

x = x.view(B, C, H // H_sp, H_sp, W // W_sp, W_sp)

x = x.permute(0, 2, 4, 1, 3, 5).contiguous().reshape(-1, C, H_sp, W_sp) ### B', C, H', W'

lepe = func(x) ### B', C, H', W'

lepe = lepe.reshape(-1, self.num_heads, C // self.num_heads, H_sp * W_sp).permute(0, 1, 3, 2).contiguous()

x = x.reshape(-1, self.num_heads, C // self.num_heads, self.H_sp* self.W_sp).permute(0, 1, 3, 2).contiguous()

return x, lepe

def forward(self, qkv):

"""

x: B L C

"""

q,k,v = qkv[0], qkv[1], qkv[2]

### Img2Window

H = W = self.resolution

B, L, C = q.shape

assert L == H * W, "flatten img_tokens has wrong size"

q = self.im2cswin(q)

k = self.im2cswin(k)

v, lepe = self.get_lepe(v, self.get_v)

q = q * self.scale

attn = (q @ k.transpose(-2, -1)) # B head N C @ B head C N --> B head N N

attn = nn.functional.softmax(attn, dim=-1, dtype=attn.dtype)

attn = self.attn_drop(attn)

x = (attn @ v) + lepe

x = x.transpose(1, 2).reshape(-1, self.H_sp* self.W_sp, C) # B head N N @ B head N C

### Window2Img

x = windows2img(x, self.H_sp, self.W_sp, H, W).view(B, -1, C) # B H' W' C

return x

class CSWinBlock(nn.Module):

def __init__(self, dim, reso, num_heads,

split_size=7, mlp_ratio=4., qkv_bias=False, qk_scale=None,

drop=0., attn_drop=0., drop_path=0.,

act_layer=nn.GELU, norm_layer=nn.LayerNorm,

last_stage=False):

super().__init__()

self.dim = dim

self.num_heads = num_heads

self.patches_resolution = reso

self.split_size = split_size

self.mlp_ratio = mlp_ratio

self.qkv = nn.Linear(dim, dim * 3, bias=qkv_bias)

self.norm1 = norm_layer(dim)

if self.patches_resolution == split_size:

last_stage = True

if last_stage:

self.branch_num = 1

else:

self.branch_num = 2

self.proj = nn.Linear(dim, dim)

self.proj_drop = nn.Dropout(drop)

if last_stage:

self.attns = nn.ModuleList([

LePEAttention(

dim, resolution=self.patches_resolution, idx = -1,

split_size=split_size, num_heads=num_heads, dim_out=dim,

qk_scale=qk_scale, attn_drop=attn_drop, proj_drop=drop)

for i in range(self.branch_num)])

else:

self.attns = nn.ModuleList([

LePEAttention(

dim//2, resolution=self.patches_resolution, idx = i,

split_size=split_size, num_heads=num_heads//2, dim_out=dim//2,

qk_scale=qk_scale, attn_drop=attn_drop, proj_drop=drop)

for i in range(self.branch_num)])

mlp_hidden_dim = int(dim * mlp_ratio)

self.drop_path = DropPath(drop_path) if drop_path > 0. else nn.Identity()

self.mlp = Mlp(in_features=dim, hidden_features=mlp_hidden_dim, out_features=dim, act_layer=act_layer, drop=drop)

self.norm2 = norm_layer(dim)

def forward(self, x):

"""

x: B, H*W, C

"""

H = W = self.patches_resolution

B, L, C = x.shape

assert L == H * W, "flatten img_tokens has wrong size"

img = self.norm1(x)

qkv = self.qkv(img).reshape(B, -1, 3, C).permute(2, 0, 1, 3)

if self.branch_num == 2:

x1 = self.attns[0](qkv[:,:,:,:C//2])

x2 = self.attns[1](qkv[:,:,:,C//2:])

attened_x = torch.cat([x1,x2], dim=2

该程序文件是一个用于图像分类的模型CSWin Transformer的实现。CSWin Transformer是由Microsoft Corporation开发的一种图像分类模型,它使用了一种基于局部感知和全局交互的注意力机制来提取图像特征。该模型包含了CSWinBlock模块和Merge_Block模块。

CSWinBlock模块是CSWin Transformer的基本组成单元,它包含了一个多头注意力机制和一个多层感知机。多头注意力机制用于提取图像中的局部特征,并通过局部感知注意力和局部感知嵌入来实现。多层感知机用于对提取的特征进行非线性变换和维度变换。CSWinBlock模块还包含了一些规范化层和残差连接,用于提高模型的稳定性和性能。

Merge_Block模块用于将CSWinBlock模块提取的特征进行融合和降维。它通过一个卷积层和规范化层将特征图的尺寸减半,并将通道数从dim降低到dim_out。

整个CSWin Transformer模型由多个CSWinBlock模块和一个Merge_Block模块组成,通过堆叠和连接这些模块来构建一个深层的图像分类网络。模型的输入是一张图像,输出是图像的分类结果。

6.系统整体结构

以下是每个文件的功能总结:

| 文件路径 | 功能 |

|---|---|

| export.py | 导出模型到不同的格式,如ONNX、TensorFlow等 |

| predict.py | 运行目标检测算法进行预测 |

| train.py | 训练目标检测模型 |

| ui.py | 加载模型并在界面上显示目标检测结果 |

| backbone\convnextv2.py | ConvNeXt V2模型的实现 |

| backbone\CSwomTramsformer.py | CSWin Transformer模型的实现 |

| backbone\EfficientFormerV2.py | EfficientFormer V2模型的实现 |

| backbone\efficientViT.py | EfficientViT模型的实现 |

| backbone\fasternet.py | FasterNet模型的实现 |

| backbone\lsknet.py | LSKNet模型的实现 |

| backbone\repvit.py | RepVIT模型的实现 |

| backbone\revcol.py | RevCol模型的实现 |

| backbone\SwinTransformer.py | Swin Transformer模型的实现 |

| backbone\VanillaNet.py | VanillaNet模型的实现 |

| extra_modules\afpn.py | Feature Pyramid Network模块的实现 |

| extra_modules\attention.py | 注意力机制模块的实现 |

| extra_modules\block.py | 基础模块的实现 |

| extra_modules\dynamic_snake_conv.py | 动态蛇形卷积模块的实现 |

| extra_modules\head.py | 模型头部的实现 |

| extra_modules\kernel_warehouse.py | 卷积核仓库的实现 |

| extra_modules\orepa.py | OREPA模块的实现 |

| extra_modules\rep_block.py | RepBlock模块的实现 |

| extra_modules\RFAConv.py | RFAConv模块的实现 |

| models\common.py | 通用模型组件和函数 |

| models\experimental.py | 实验性模型的实现 |

| models\tf.py | TensorFlow模型的实现 |

| models\yolo.py | YOLO模型的实现 |

| segment\predict.py | 分割模型的预测功能 |

| segment\train.py | 分割模型的训练功能 |

| segment\val.py | 分割模型的验证功能 |

| ultralytics_init_.py | Ultralytics库的初始化 |

| ultralytics\cfg_init_.py | Ultralytics库的配置文件初始化 |

| ultralytics\data\annotator.py | 数据标注工具的实现 |

| ultralytics\data\augment.py | 数据增强功能的实现 |

| ultralytics\data\base.py | 数据集基类的实现 |

| ultralytics\data\build.py | 构建数据集的实现 |

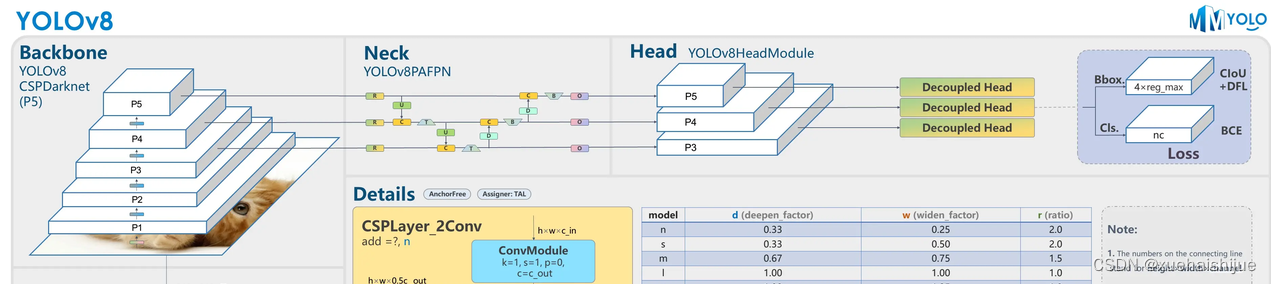

7.YOLOv8简介

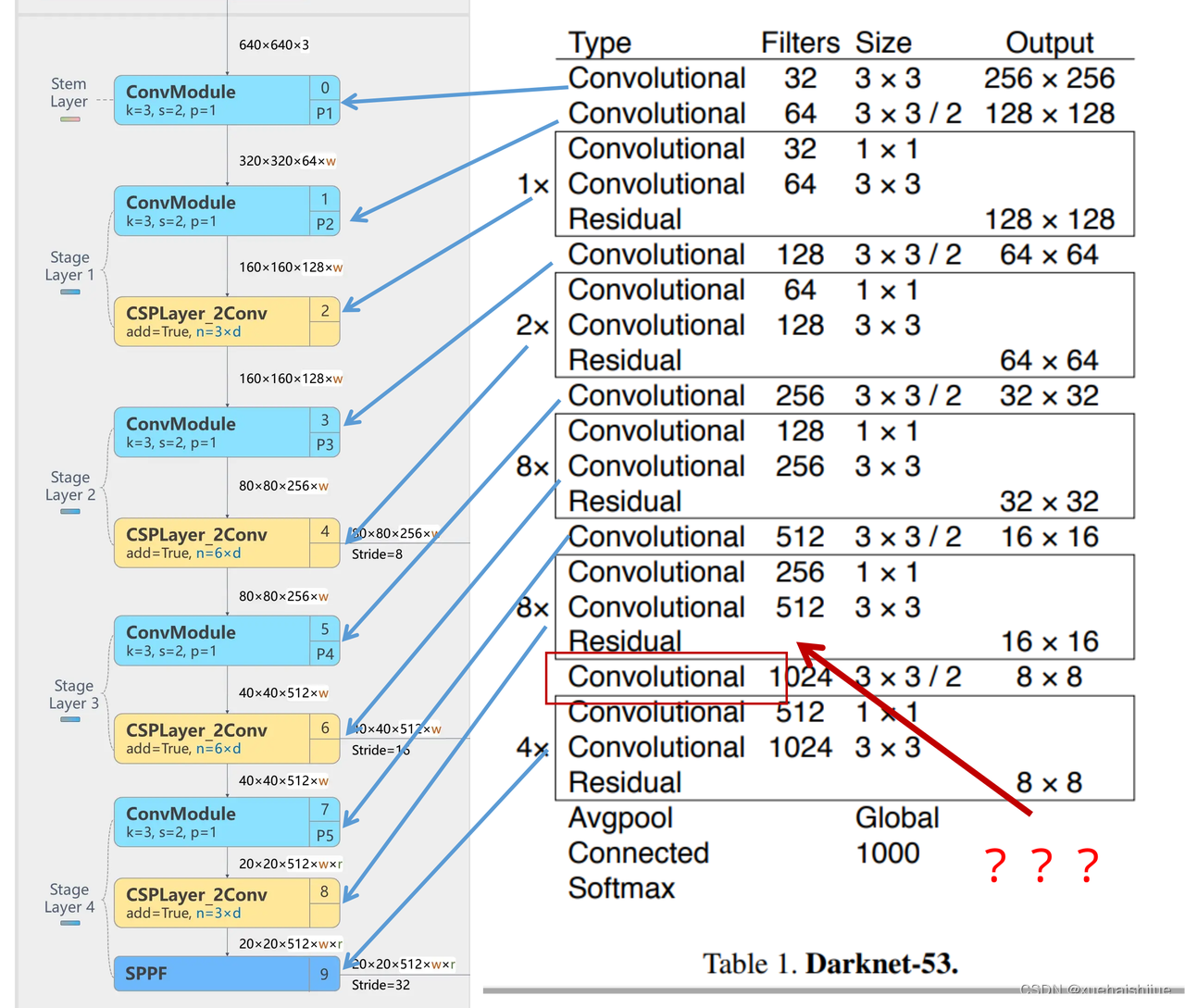

Backbone

Darknet-53

53指的是“52层卷积”+output layer。

借鉴了其他算法的这些设计思想

借鉴了VGG的思想,使用了较多的3×3卷积,在每一次池化操作后,将通道数翻倍;

借鉴了network in network的思想,使用全局平均池化(global average pooling)做预测,并把1×1的卷积核置于3×3的卷积核之间,用来压缩特征;(我没找到这一步体现在哪里)

使用了批归一化层稳定模型训练,加速收敛,并且起到正则化作用。

以上三点为Darknet19借鉴其他模型的点。Darknet53当然是在继承了Darknet19的这些优点的基础上再新增了下面这些优点的。因此列在了这里

借鉴了ResNet的思想,在网络中大量使用了残差连接,因此网络结构可以设计的很深,并且缓解了训练中梯度消失的问题,使得模型更容易收敛。

使用步长为2的卷积层代替池化层实现降采样。(这一点在经典的Darknet-53上是很明显的,output的长和宽从256降到128,再降低到64,一路降低到8,应该是通过步长为2的卷积层实现的;在YOLOv8的卷积层中也有体现,比如图中我标出的这些位置)

特征融合

模型架构图如下

Darknet-53的特点可以这样概括:(Conv卷积模块+Residual Block残差块)串行叠加4次

Conv卷积层+Residual Block残差网络就被称为一个stage

上面红色指出的那个,原始的Darknet-53里面有一层 卷积,在YOLOv8里面,把一层卷积移除了

为什么移除呢?

原始Darknet-53模型中间加的这个卷积层做了什么?滤波器(卷积核)的个数从 上一个卷积层的512个,先增加到1024个卷积核,然后下一层卷积的卷积核的个数又降低到512个

移除掉这一层以后,少了1024个卷积核,就可以少做1024次卷积运算,同时也少了1024个3×3的卷积核的参数,也就是少了9×1024个参数需要拟合。这样可以大大减少了模型的参数,(相当于做了轻量化吧)

移除掉这个卷积层,可能是因为作者发现移除掉这个卷积层以后,模型的score有所提升,所以才移除掉的。为什么移除掉以后,分数有所提高呢?可能是因为多了这些参数就容易,参数过多导致模型在训练集删过拟合,但是在测试集上表现很差,最终模型的分数比较低。你移除掉这个卷积层以后,参数减少了,过拟合现象不那么严重了,泛化能力增强了。当然这个是,拿着你做实验的结论,反过来再找补,再去强行解释这种现象的合理性。

通过MMdetection官方绘制册这个图我们可以看到,进来的这张图片经过一个“Feature Pyramid Network(简称FPN)”,然后最后的P3、P4、P5传递给下一层的Neck和Head去做识别任务。 PAN(Path Aggregation Network)

“FPN是自顶向下,将高层的强语义特征传递下来。PAN就是在FPN的后面添加一个自底向上的金字塔,对FPN补充,将低层的强定位特征传递上去,

FPN是自顶(小尺寸,卷积次数多得到的结果,语义信息丰富)向下(大尺寸,卷积次数少得到的结果),将高层的强语义特征传递下来,对整个金字塔进行增强,不过只增强了语义信息,对定位信息没有传递。PAN就是针对这一点,在FPN的后面添加一个自底(卷积次数少,大尺寸)向上(卷积次数多,小尺寸,语义信息丰富)的金字塔,对FPN补充,将低层的强定位特征传递上去,又被称之为“双塔战术”。

FPN层自顶向下传达强语义特征,而特征金字塔则自底向上传达强定位特征,两两联手,从不同的主干层对不同的检测层进行参数聚合,这样的操作确实很皮。

自底向上增强

而 PAN(Path Aggregation Network)是对 FPN 的一种改进,它的设计理念是在 FPN 后面添加一个自底向上的金字塔。PAN 引入了路径聚合的方式,通过将浅层特征图(低分辨率但语义信息较弱)和深层特征图(高分辨率但语义信息丰富)进行聚合,并沿着特定的路径传递特征信息,将低层的强定位特征传递上去。这样的操作能够进一步增强多尺度特征的表达能力,使得 PAN 在目标检测任务中表现更加优秀。

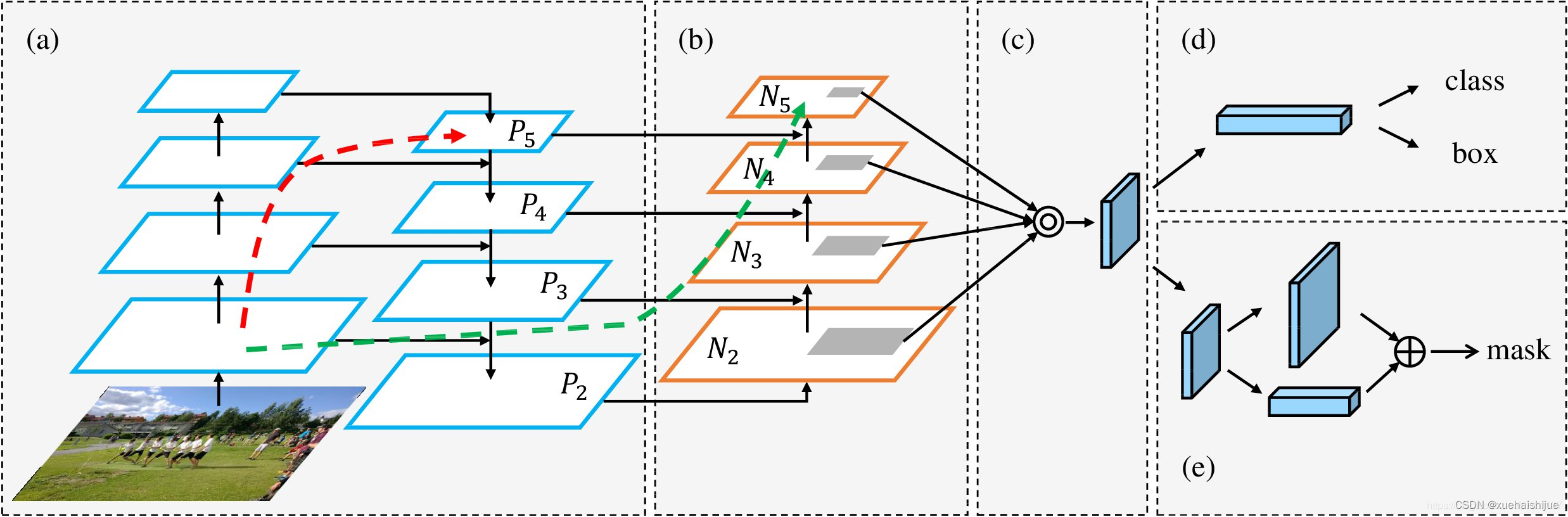

8.Gold-YOLO简介

YOLO系列模型面世至今已有8年,由于其优异的性能,已成为目标检测领域的标杆。在系列模型经过十多个不同版本的改进发展逐渐稳定完善的今天,研究人员更多关注于单个计算模块内结构的精细调整,或是head部分和训练方法上的改进。但这并不意味着现有模式已是最优解。

当前YOLO系列模型通常采用类FPN方法进行信息融合,而这一结构在融合跨层信息时存在信息损失的问题。针对这一问题,我们提出了全新的信息聚集-分发(Gather-and-Distribute Mechanism)GD机制,通过在全局视野上对不同层级的特征进行统一的聚集融合并分发注入到不同层级中,构建更加充分高效的信息交互融合机制,并基于GD机制构建了Gold-YOLO。在COCO数据集中,我们的Gold-YOLO超越了现有的YOLO系列,实现了精度-速度曲线上的SOTA。

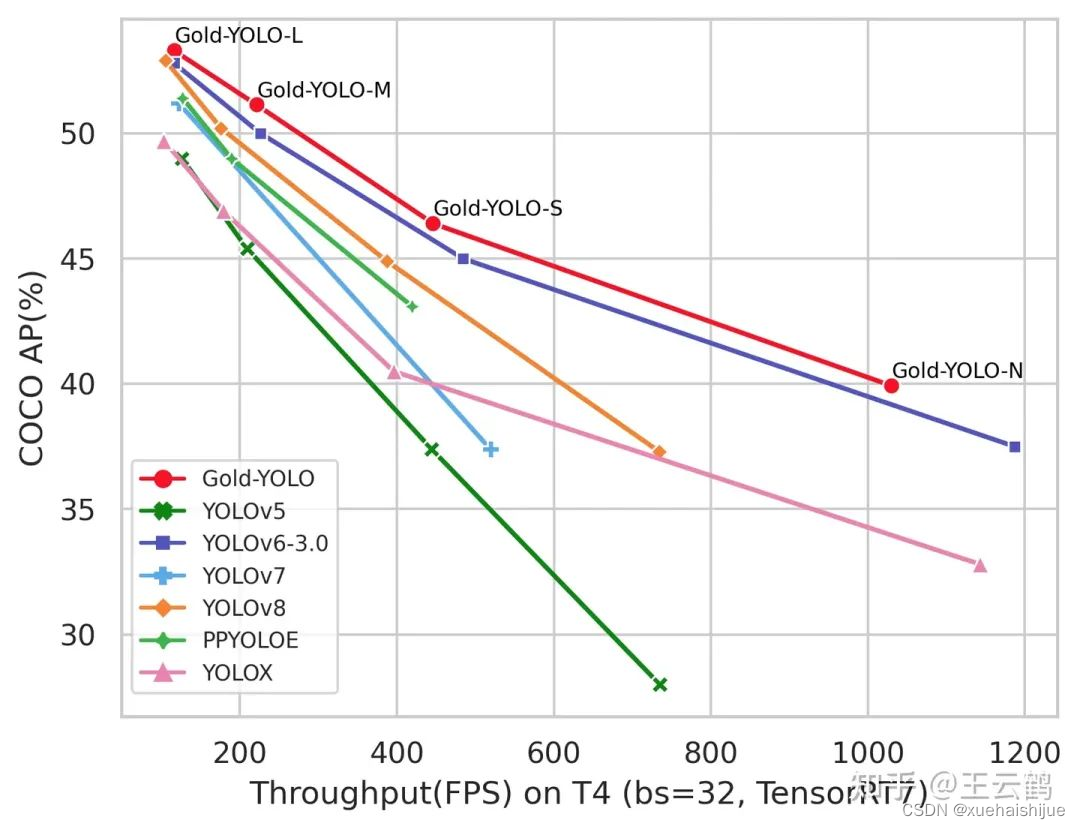

精度和速度曲线(TensorRT7)

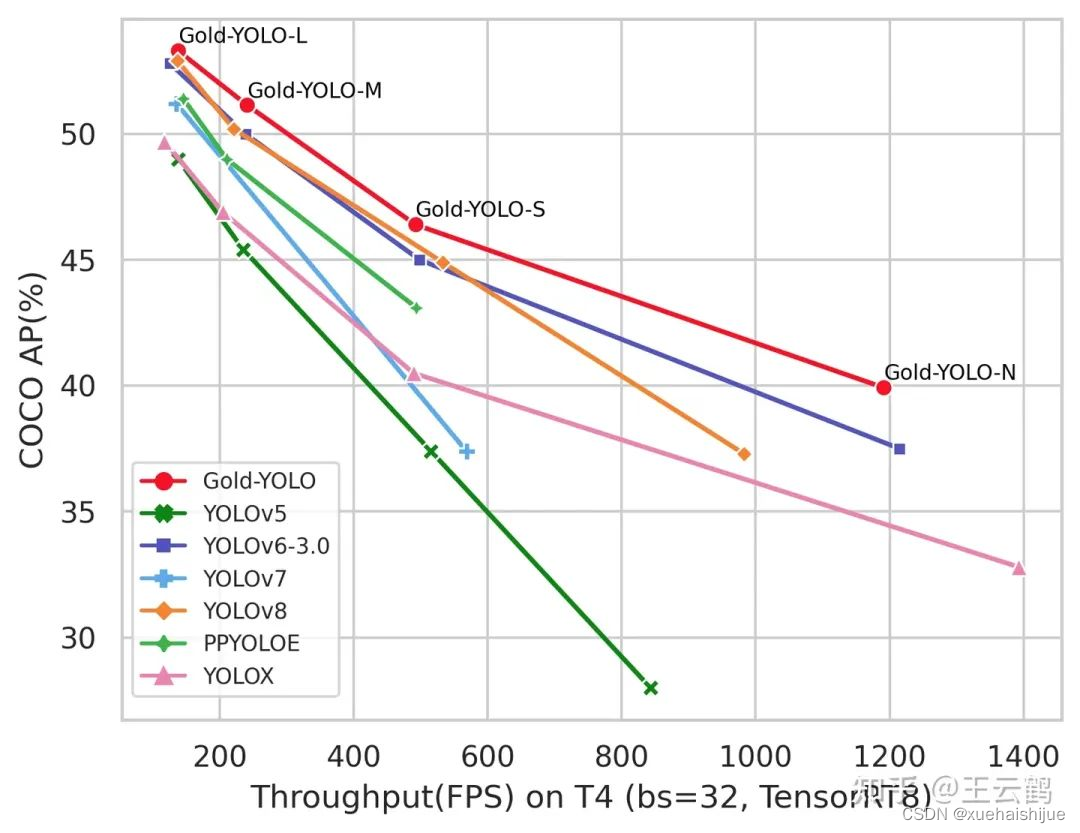

精度和速度曲线(TensorRT8)

传统YOLO的问题

在检测模型中,通常先经过backbone提取得到一系列不同层级的特征,FPN利用了backbone的这一特点,构建了相应的融合结构:不层级的特征包含着不同大小物体的位置信息,虽然这些特征包含的信息不同,但这些特征在相互融合后能够互相弥补彼此缺失的信息,增强每一层级信息的丰富程度,提升网络性能。

原始的FPN结构由于其层层递进的信息融合模式,使得相邻层的信息能够充分融合,但也导致了跨层信息融合存在问题:当跨层的信息进行交互融合时,由于没有直连的交互通路,只能依靠中间层充当“中介”进行融合,导致了一定的信息损失。之前的许多工作中都关注到了这一问题,而解决方案通常是通过添加shortcut增加更多的路径,以增强信息流动。

然而传统的FPN结构即便改进后,由于网络中路径过多,且交互方式不直接,基于FPN思想的信息融合结构仍然存在跨层信息交互困难和信息损失的问题。

Gold-YOLO:全新的信息融合交互机制

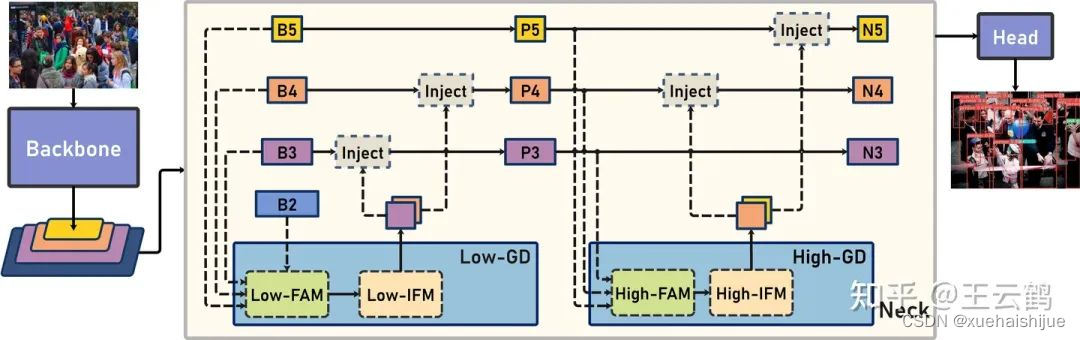

Gold-YOLO架构

参考该博客提出的一种全新的信息交互融合机制:信息聚集-分发机制(Gather-and-Distribute Mechanism)。该机制通过在全局上融合不同层次的特征得到全局信息,并将全局信息注入到不同层级的特征中,实现了高效的信息交互和融合。在不显著增加延迟的情况下GD机制显著增强了Neck部分的信息融合能力,提高了模型对不同大小物体的检测能力。

GD机制通过三个模块实现:信息对齐模块(FAM)、信息融合模块(IFM)和信息注入模块(Inject)。

信息对齐模块负责收集并对齐不同层级不同大小的特征

信息融合模块通过使用卷积或Transformer算子对对齐后的的特征进行融合,得到全局信息

信息注入模块将全局信息注入到不同层级中

在Gold-YOLO中,针对模型需要检测不同大小的物体的需要,并权衡精度和速度,我们构建了两个GD分支对信息进行融合:低层级信息聚集-分发分支(Low-GD)和高层级信息聚集-分发分支(High-GD),分别基于卷积和transformer提取和融合特征信息。

此外,为了促进局部信息的流动,我们借鉴现有工作,构建了一个轻量级的邻接层融合模块,该模块在局部尺度上结合了邻近层的特征,进一步提升了模型性能。我们还引入并验证了预训练方法对YOLO模型的有效性,通过在ImageNet 1K上使用MAE方法对主干进行预训练,显著提高了模型的收敛速度和精度。

9.系统整合

下图[完整源码&数据集&环境部署视频教程&自定义UI界面]

本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。 如若内容造成侵权/违法违规/事实不符,请联系我的编程经验分享网邮箱:veading@qq.com进行投诉反馈,一经查实,立即删除!