HNU Computer Vision Assignment 2

Preface

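I took the Computer Vision elective taught by Prof. Cai mj. The lectures were quite good, but you will probably have to learn OpenCV on your own to complete the assignments fully. I didn't study very seriously and scraped through with a score in the 80s, so the solutions below are my own work, not the official answers, and are for reference only.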

Task 1

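Modify the task_one() function in test-2.py so that, using feature matching and a 2D image transformation, it takes the book in query_book.jpg as the search target and detects the book's region in search_book.jpg. The output requirements are: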

(1) Estimate the 2D transformation matrix (homography) from query_book.jpg to search_book.jpg and record its parameters below.

Homography parameters:

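$$
H = \begin{bmatrix}
1.18057489 \times 10^{-1} & -2.96697386 \times 10^{-2} & 1.21128139 \times 10^{3} \\
-4.89878565 \times 10^{-1} & 2.85541672 \times 10^{-1} & 5.48692141 \times 10^{2} \\
-2.67837932 \times 10^{-4} & -2.40726966 \times 10^{-4} & 1
\end{bmatrix}
$$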
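A homography acts on homogeneous pixel coordinates: multiply [x, y, 1] by H, then divide by the third component. As far as I understand, this is what `cv2.perspectiveTransform` does to the book's corner points in task_one(). The minimal pure-Python sketch below applies the matrix above to the query image's top-left corner; the helper name `apply_homography` is hypothetical, not an OpenCV function.

```python
# Homography reported above (query_book.jpg -> search_book.jpg)
H = [
    [1.18057489e-01, -2.96697386e-02, 1.21128139e+03],
    [-4.89878565e-01, 2.85541672e-01, 5.48692141e+02],
    [-2.67837932e-04, -2.40726966e-04, 1.00000000e+00],
]


def apply_homography(H, x, y):
    """Map pixel (x, y) through a 3x3 homography (hypothetical helper)."""
    # Lift to homogeneous coordinates [x, y, 1] and multiply by H
    u, v, w = (row[0] * x + row[1] * y + row[2] for row in H)
    # Divide by w to get back to ordinary pixel coordinates
    return u / w, v / w


# The origin (0, 0) lands on the translation column of H
print(apply_homography(H, 0, 0))  # (1211.28139, 548.692141)
```

Because the bottom row of H is not (0, 0, 1), w varies across the image, which is why the detected book outline can be a general quadrilateral rather than a rotated rectangle.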

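(2) Draw the four boundaries of the detected book region in search_book.jpg with white line segments, and visualize the matched feature-point pairs between query_book.jpg and search_book.jpg. Save the visualization as task1_result.jpg.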

Hint: OpenCV's drawMatches function can be used to visualize the matched feature-point pairs between two images.

```python
import cv2
import numpy as np


def task_one():
    """
    object detection based on feature matching and 2D image transformation
    """
    MIN_MATCH_COUNT = 20
    img1 = cv2.imread('query_book.jpg')   # queryImage
    img2 = cv2.imread('search_book.jpg')  # trainImage
    img1 = cv2.cvtColor(img1, cv2.COLOR_BGR2GRAY)
    img2 = cv2.cvtColor(img2, cv2.COLOR_BGR2GRAY)

    # --------Your code--------
    # Initiate ORB detector
    orb = cv2.ORB_create(nfeatures=1500)
    # Find the keypoints and descriptors
    kp1, des1 = orb.detectAndCompute(img1, None)
    kp2, des2 = orb.detectAndCompute(img2, None)

    # Brute-force matcher with Hamming distance (ORB descriptors are binary)
    bf = cv2.BFMatcher(cv2.NORM_HAMMING)
    # knnMatch returns the k best matches for each query descriptor
    matches = bf.knnMatch(des1, des2, k=2)

    # Apply Lowe's ratio test; skip queries that got fewer than 2 neighbours
    good = []
    for pair in matches:
        if len(pair) == 2 and pair[0].distance < 0.75 * pair[1].distance:
            good.append(pair[0])

    if len(good) > MIN_MATCH_COUNT:
        src_pts = np.float32([kp1[m.queryIdx].pt for m in good]).reshape(-1, 1, 2)
        dst_pts = np.float32([kp2[m.trainIdx].pt for m in good]).reshape(-1, 1, 2)
        # Robust homography estimation with RANSAC (5 px reprojection threshold)
        M, mask = cv2.findHomography(src_pts, dst_pts, cv2.RANSAC, 5.0)
        matchesMask = mask.ravel().tolist()  # 1 = RANSAC inlier, 0 = outlier

        # Map the four corners of the query image into the search image
        h, w = img1.shape
        pts = np.float32([[0, 0], [0, h - 1], [w - 1, h - 1], [w - 1, 0]]).reshape(-1, 1, 2)
        dst = cv2.perspectiveTransform(pts, M)
        # Draw the detected region as a closed white (255) polygon
        img2 = cv2.polylines(img2, [np.int32(dst)], True, 255, 3, cv2.LINE_AA)
    else:
        print("Not enough matches are found - %d/%d" % (len(good), MIN_MATCH_COUNT))
        matchesMask = None

    draw_params = dict(matchColor=(0, 255, 0),
                       singlePointColor=None,
                       matchesMask=matchesMask,  # draw inliers only
                       flags=2)  # cv2.DrawMatchesFlags_NOT_DRAW_SINGLE_POINTS
    img3 = cv2.drawMatches(img1, kp1, img2, kp2, good, None, **draw_params)
    # print(M)
    # plt.imshow(cv2.cvtColor(img3, cv2.COLOR_BGR2RGB))
    # plt.show()
    cv2.imwrite("task1_result.jpg", img3)
```
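The ratio test in task_one() keeps a match only when its best distance is clearly smaller than the second-best one, which discards ambiguous matches on repetitive texture. The sketch below restates that filter in plain Python; the input format (one sorted list of neighbour distances per query) and the function name `ratio_test` are hypothetical, chosen only to make the logic testable without OpenCV.

```python
def ratio_test(neighbour_distances, ratio=0.75):
    """Return indices of queries that pass Lowe's ratio test.

    neighbour_distances: one list per query descriptor holding the sorted
    distances of its k nearest neighbours (hypothetical input format).
    """
    kept = []
    for idx, dists in enumerate(neighbour_distances):
        # knnMatch can return fewer than k neighbours; such queries are skipped
        if len(dists) < 2:
            continue
        best, second = dists[0], dists[1]
        if best < ratio * second:
            kept.append(idx)
    return kept


# Query 0 is distinctive, query 1 is ambiguous, query 2 has a single neighbour
print(ratio_test([[10, 40], [30, 35], [5]]))  # [0]
```

Task 3 uses the same idea with a looser ratio of 0.8.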

Result:

Task 2

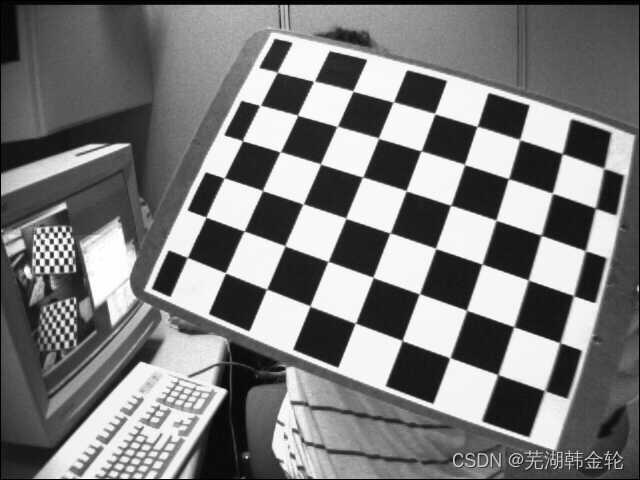

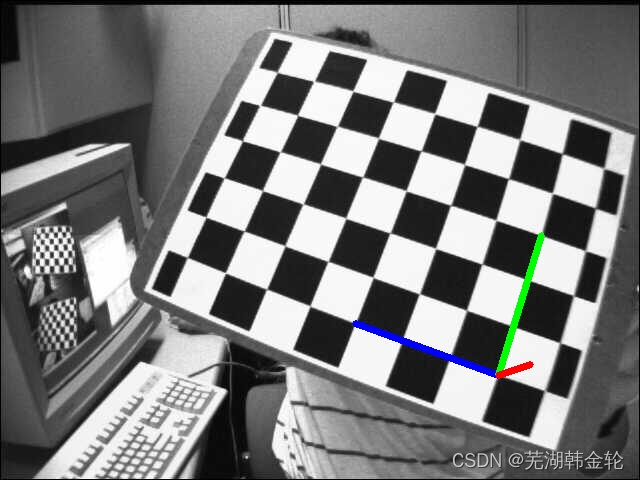

Modify the task_two() function in test-2.py to calibrate the camera from the eight images left01.jpg, left02.jpg, …, left08.jpg. The output requirements are:

(1) Record the camera intrinsic matrix obtained from calibration below.

Intrinsic matrix parameters:

$$
K = \begin{bmatrix}
534.17982188 & 0 & 341.22392645 \\
0 & 534.42712122 & 233.96164532 \\
0 & 0 & 1
\end{bmatrix}
$$
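To read the matrix: the diagonal holds the focal lengths fx and fy in pixels, and the last column holds the principal point (cx, cy). A point (X, Y, Z) already expressed in the camera frame projects to u = fx·X/Z + cx and v = fy·Y/Z + cy. The sketch below uses the calibrated values above and ignores lens distortion; `project_camera_point` is a hypothetical helper.

```python
# Intrinsic matrix reported above: fx, fy on the diagonal, (cx, cy) in the last column
K = [
    [534.17982188, 0.0, 341.22392645],
    [0.0, 534.42712122, 233.96164532],
    [0.0, 0.0, 1.0],
]


def project_camera_point(K, X, Y, Z):
    """Pinhole projection of a camera-frame point, ignoring distortion."""
    fx, cx = K[0][0], K[0][2]
    fy, cy = K[1][1], K[1][2]
    return fx * X / Z + cx, fy * Y / Z + cy


# A point on the optical axis projects onto the principal point (cx, cy)
print(project_camera_point(K, 0.0, 0.0, 1.0))  # (341.22392645, 233.96164532)
```

Since fx and fy differ by only about 0.25 px here, the estimated pixels are very close to square.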

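(2) On left03.jpg, visualize the three axes of the world coordinate system (for example, the three segments from the origin to [3,0,0], [0,3,0], and [0,0,-3]). Save the visualization as task2_result.jpg.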

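Hint: OpenCV's findChessboardCorners function can automatically detect the feature points in each image, and OpenCV's projectPoints function can convert 3D world coordinates into 2D image coordinates.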
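What projectPoints computes, with distortion left out, is x ~ K (R X + t): transform the world point into the camera frame with the board pose (R, t) that solvePnP estimates, then apply the intrinsics. The sketch below is an assumption-laden simplification: it takes a 3x3 rotation matrix instead of OpenCV's Rodrigues vector, assumes zero skew, ignores distortion, and uses a made-up fronto-parallel pose rather than the real pose of left03.jpg.

```python
# Calibrated intrinsics reported above (zero skew assumed, distortion ignored)
K = [
    [534.17982188, 0.0, 341.22392645],
    [0.0, 534.42712122, 233.96164532],
    [0.0, 0.0, 1.0],
]


def project_world_points(K, R, t, points):
    """Project 3D world points to pixels with x ~ K (R X + t).

    Unlike cv2.projectPoints, this sketch takes a 3x3 rotation matrix R
    instead of a Rodrigues vector and ignores lens distortion.
    """
    pixels = []
    for X in points:
        # World frame -> camera frame
        Xc = [sum(R[i][j] * X[j] for j in range(3)) + t[i] for i in range(3)]
        # Camera frame -> pixels (perspective divide by the depth Xc[2])
        u = K[0][0] * Xc[0] / Xc[2] + K[0][2]
        v = K[1][1] * Xc[1] / Xc[2] + K[1][2]
        pixels.append((u, v))
    return pixels


# Hypothetical pose: board parallel to the image plane, 10 squares in front
R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
t = [0.0, 0.0, 10.0]
axis_pts = [(0, 0, 0), (3, 0, 0), (0, 3, 0), (0, 0, -3)]
for name, (u, v) in zip(["origin", "x", "y", "-z"], project_world_points(K, R, t, axis_pts)):
    print(f"{name}: ({u:.1f}, {v:.1f})")
```

In this fronto-parallel pose the -z axis points straight at the camera and collapses onto the origin; in a tilted view such as left03.jpg it shows up as a visible segment.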

```python
import glob

import cv2
import numpy as np


def task_two():
    """
    camera calibration and 3D visualization
    """
    criteria = (cv2.TERM_CRITERIA_EPS + cv2.TERM_CRITERIA_MAX_ITER, 30, 0.001)
    # World coordinates of the 7x6 inner corners (z = 0, one square = 1 unit)
    objp = np.zeros((6 * 7, 3), np.float32)
    objp[:, :2] = np.mgrid[0:7, 0:6].T.reshape(-1, 2)
    objpoints = []  # 3d points in real world space
    imgpoints = []  # 2d points in image plane
    images = glob.glob('left0*.jpg')

    # --------Your code--------
    for fname in images:
        img = cv2.imread(fname)
        gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
        ret, corners = cv2.findChessboardCorners(gray, (7, 6), None)
        if ret:
            objpoints.append(objp)
            # Refine the detected corners to sub-pixel accuracy
            corners2 = cv2.cornerSubPix(gray, corners, (11, 11), (-1, -1), criteria)
            imgpoints.append(corners2)

    # Image size as (width, height); all left0*.jpg images share the same size
    ret, mtx, dist, rvecs, tvecs = cv2.calibrateCamera(objpoints, imgpoints, gray.shape[::-1], None, None)
    np.savez('cameraParams', mtx=mtx, dist=dist)  # writes cameraParams.npz
    # print(mtx)

    # Axis end points: x, y, and -z (negative z points toward the camera)
    axis = np.float32([[3, 0, 0], [0, 3, 0], [0, 0, -3]]).reshape(-1, 3)
    img1 = cv2.imread('left03.jpg')
    gray = cv2.cvtColor(img1, cv2.COLOR_BGR2GRAY)
    ret, corners = cv2.findChessboardCorners(gray, (7, 6), None)
    if ret:
        corners2 = cv2.cornerSubPix(gray, corners, (11, 11), (-1, -1), criteria)
        # Find the rotation and translation vectors of the board
        ret, rvecs, tvecs = cv2.solvePnP(objp, corners2, mtx, dist)
        # Project the 3D axis points onto the image plane
        imgpts, jac = cv2.projectPoints(axis, rvecs, tvecs, mtx, dist)
        # The origin is the first detected corner; cv2.line needs integer tuples
        pt1 = tuple(map(int, np.around(corners2[0].ravel())))
        pt2 = tuple(map(int, np.around(imgpts[0].ravel())))
        pt3 = tuple(map(int, np.around(imgpts[1].ravel())))
        pt4 = tuple(map(int, np.around(imgpts[2].ravel())))
        img1 = cv2.line(img1, pt1, pt2, (255, 0, 0), 5)  # x axis: blue (BGR)
        img1 = cv2.line(img1, pt1, pt3, (0, 255, 0), 5)  # y axis: green
        img1 = cv2.line(img1, pt1, pt4, (0, 0, 255), 5)  # -z axis: red
    # plt.imshow(cv2.cvtColor(img1, cv2.COLOR_BGR2RGB))
    # plt.title('left03.jpg axis: blue(x) green(y) red(-z)'), plt.xticks([]), plt.yticks([])
    # plt.show()
    cv2.imwrite("task2_result.jpg", img1)
```
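The `objp` array gives each inner chessboard corner the world coordinate (x, y, 0), measured in chessboard squares, with x varying fastest. That ordering is meant to match findChessboardCorners, which reports corners row by row, left to right. A pure-Python equivalent of `np.mgrid[0:7, 0:6].T.reshape(-1, 2)` (the function name is hypothetical) makes the ordering explicit:

```python
def chessboard_object_points(cols=7, rows=6):
    """World coordinates of the inner corners, one square = 1 unit, z = 0."""
    # x (column index) varies fastest, matching a row-by-row corner order
    return [(float(x), float(y), 0.0) for y in range(rows) for x in range(cols)]


objp = chessboard_object_points()
print(len(objp), objp[:2], objp[7])  # 42 [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)] (0.0, 1.0, 0.0)
```

Because the unit is one square, the axis of length 3 in task_two() spans three squares; the real-world scale never enters the intrinsic matrix.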

Result:

Task 3

Modify the task_three() function in test-2.py to estimate camera motion from the feature matches between task3-1.jpg and task3-2.jpg. The output requirements are:

(1) Estimate the fundamental matrix between the two images and record its parameters below.

Fundamental matrix parameters:

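$$
F = \begin{bmatrix}
5.87283710 \times 10^{-9} & 4.80576302 \times 10^{-7} & -2.32905631 \times 10^{-4} \\
3.27541245 \times 10^{-6} & -6.14881359 \times 10^{-8} & -9.80274096 \times 10^{-3} \\
-1.51211294 \times 10^{-3} & 7.89845687 \times 10^{-3} & 1
\end{bmatrix}
$$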
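For reference, this is the relation the fundamental matrix encodes, written in the convention of OpenCV's findFundamentalMat (points1 from task3-1.jpg, points2 from task3-2.jpg, both in homogeneous pixel coordinates). A matched pair satisfies the epipolar constraint, and each point defines an epipolar line in the other image:

$$
\mathbf{x}_2^{\top} F\, \mathbf{x}_1 = 0, \qquad
\mathbf{l}_2 = F\, \mathbf{x}_1, \qquad
\mathbf{l}_1 = F^{\top} \mathbf{x}_2, \qquad
\mathbf{x}_i = (u_i, v_i, 1)^{\top}
$$

A line $\mathbf{l} = (a, b, c)^{\top}$ is the set of pixels with $a u + b v + c = 0$; this is the equation that drawlines() intersects with the image borders. F is only defined up to scale, so its entries matter only relative to each other.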

(2) Visualize the matched feature points and the epipolar lines of both images, and save the results as task3-1_result.jpg and task3-2_result.jpg respectively.

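Hint: OpenCV's computeCorrespondEpilines function computes, from the matched feature points in one image, the parameters of the corresponding epipolar lines in the other image.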
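A pure-Python sketch of what computeCorrespondEpilines returns for a single point, based on my reading of its documentation: F·x when the point is in image 1, Fᵀ·x when it is in image 2, scaled so that a² + b² = 1. The function name `epiline` and the toy F are hypothetical; the toy F assumes identity intrinsics, no rotation, and a pure translation along x, for which epipolar lines are image rows.

```python
import math


def epiline(F, point, which_image):
    """Epiline (a, b, c) in the *other* image, normalised so a^2 + b^2 = 1.

    which_image=1: point lies in image 1, line lives in image 2 (l2 = F x1).
    which_image=2: point lies in image 2, line lives in image 1 (l1 = F^T x2).
    """
    x = (point[0], point[1], 1.0)
    if which_image == 1:
        M = F
    else:
        M = [[F[j][i] for j in range(3)] for i in range(3)]  # transpose of F
    a, b, c = (sum(M[i][j] * x[j] for j in range(3)) for i in range(3))
    norm = math.hypot(a, b)
    return a / norm, b / norm, c / norm


# Toy F for a pure horizontal translation: the epiline of (5, 7) is the row v = 7
F_rect = [[0.0, 0.0, 0.0], [0.0, 0.0, -1.0], [0.0, 1.0, 0.0]]
print(epiline(F_rect, (5.0, 7.0), 1))  # (0.0, -1.0, 7.0)
```

The normalisation makes |a·u + b·v + c| the pixel distance from (u, v) to the line, which is a convenient way to check how well a match respects the epipolar constraint.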

```python
import cv2
import numpy as np


def task_three():
    """
    fundamental matrix estimation and epipolar line visualization
    """
    img1 = cv2.imread('task3-1.jpg')  # left image
    img2 = cv2.imread('task3-2.jpg')  # right image
    img1 = cv2.cvtColor(img1, cv2.COLOR_BGR2GRAY)
    img2 = cv2.cvtColor(img2, cv2.COLOR_BGR2GRAY)

    # --------Your code--------
    sift = cv2.SIFT_create()
    kp1, des1 = sift.detectAndCompute(img1, None)
    kp2, des2 = sift.detectAndCompute(img2, None)

    # FLANN matcher with a KD-tree index (suited to float SIFT descriptors)
    FLANN_INDEX_KDTREE = 1
    index_params = dict(algorithm=FLANN_INDEX_KDTREE, trees=5)
    search_params = dict(checks=50)
    flann = cv2.FlannBasedMatcher(index_params, search_params)
    matches = flann.knnMatch(des1, des2, k=2)

    good = []
    pts1 = []
    pts2 = []
    # Ratio test as per Lowe's paper
    for pair in matches:
        if len(pair) == 2 and pair[0].distance < 0.8 * pair[1].distance:
            m = pair[0]
            good.append(m)
            pts2.append(kp2[m.trainIdx].pt)
            pts1.append(kp1[m.queryIdx].pt)

    pts1 = np.int32(pts1)
    pts2 = np.int32(pts2)
    F, mask = cv2.findFundamentalMat(pts1, pts2, cv2.FM_LMEDS)
    print(F)
    # Keep only the inlier points
    pts1 = pts1[mask.ravel() == 1]
    pts2 = pts2[mask.ravel() == 1]

    def drawlines(img1, img2, lines, pts1, pts2):
        """img1 - image on which we draw the epilines for the points in img2
        lines - corresponding epilines"""
        r, c = img1.shape
        img1 = cv2.cvtColor(img1, cv2.COLOR_GRAY2BGR)
        img2 = cv2.cvtColor(img2, cv2.COLOR_GRAY2BGR)
        for line, pt1, pt2 in zip(lines, pts1, pts2):
            color = tuple(np.random.randint(0, 255, 3).tolist())
            # Intersect the epiline with the left (x = 0) and right (x = c) borders
            x0, y0 = map(int, [0, -line[2] / line[1]])
            x1, y1 = map(int, [c, -(line[2] + line[0] * c) / line[1]])
            img1 = cv2.line(img1, (x0, y0), (x1, y1), color, 1)
            img1 = cv2.circle(img1, tuple(map(int, pt1)), 5, color, -1)
            img2 = cv2.circle(img2, tuple(map(int, pt2)), 5, color, -1)
        return img1, img2

    # Epilines for the points in the right (second) image, drawn on the left image
    lines1 = cv2.computeCorrespondEpilines(pts2.reshape(-1, 1, 2), 2, F)
    lines1 = lines1.reshape(-1, 3)
    img5, img6 = drawlines(img1, img2, lines1, pts1, pts2)
    # Epilines for the points in the left (first) image, drawn on the right image
    lines2 = cv2.computeCorrespondEpilines(pts1.reshape(-1, 1, 2), 1, F)
    lines2 = lines2.reshape(-1, 3)
    img3, img4 = drawlines(img2, img1, lines2, pts2, pts1)
    # plt.subplot(121), plt.imshow(img5)
    # plt.subplot(122), plt.imshow(img3)
    # plt.show()
    # plt.imshow(cv2.cvtColor(img5, cv2.COLOR_BGR2RGB))
    # plt.show()
    # plt.imshow(cv2.cvtColor(img3, cv2.COLOR_BGR2RGB))
    # plt.show()
    cv2.imwrite("task3-1_result.jpg", img5)
    cv2.imwrite("task3-2_result.jpg", img3)
```
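drawlines() turns each epiline a·x + b·y + c = 0 into a drawable segment by evaluating y at the left border (x = 0) and the right border (x = image width). The sketch below isolates that arithmetic; the name `epiline_endpoints` is hypothetical, and the guard for b ≈ 0 is my own addition, since the original code would divide by zero on a perfectly vertical epiline.

```python
def epiline_endpoints(line, width):
    """Endpoints of a*x + b*y + c = 0 at the left (x=0) and right (x=width) borders.

    Mirrors the arithmetic in drawlines(); returns None for (near-)vertical
    lines, where b is ~0 and the division would blow up (added guard).
    """
    a, b, c = line
    if abs(b) < 1e-12:
        return None
    y0 = -c / b
    y1 = -(c + a * width) / b
    # int() truncates toward zero, like map(int, ...) in drawlines()
    return (0, int(y0)), (width, int(y1))


print(epiline_endpoints((0.0, -1.0, 7.0), 640))  # ((0, 7), (640, 7))
print(epiline_endpoints((1.0, 0.0, -5.0), 640))  # None
```

Endpoints that fall outside the image are fine, because cv2.line clips the segment to the image.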

Result:

Source code

```python
# -*- coding: utf-8 -*-
"""
Created on Tue Apr 25 16:24:14 2023

@author: cai-mj
"""
import glob

import cv2
import numpy as np
from matplotlib import pyplot as plt


def task_one():
    """
    object detection based on feature matching and 2D image transformation
    """
    MIN_MATCH_COUNT = 20
    img1 = cv2.imread('query_book.jpg')   # queryImage
    img2 = cv2.imread('search_book.jpg')  # trainImage
    img1 = cv2.cvtColor(img1, cv2.COLOR_BGR2GRAY)
    img2 = cv2.cvtColor(img2, cv2.COLOR_BGR2GRAY)

    # --------Your code--------
    # Initiate ORB detector
    orb = cv2.ORB_create(nfeatures=1500)
    # Find the keypoints and descriptors
    kp1, des1 = orb.detectAndCompute(img1, None)
    kp2, des2 = orb.detectAndCompute(img2, None)

    # Brute-force matcher with Hamming distance (ORB descriptors are binary)
    bf = cv2.BFMatcher(cv2.NORM_HAMMING)
    # knnMatch returns the k best matches for each query descriptor
    matches = bf.knnMatch(des1, des2, k=2)

    # Apply Lowe's ratio test; skip queries that got fewer than 2 neighbours
    good = []
    for pair in matches:
        if len(pair) == 2 and pair[0].distance < 0.75 * pair[1].distance:
            good.append(pair[0])

    if len(good) > MIN_MATCH_COUNT:
        src_pts = np.float32([kp1[m.queryIdx].pt for m in good]).reshape(-1, 1, 2)
        dst_pts = np.float32([kp2[m.trainIdx].pt for m in good]).reshape(-1, 1, 2)
        # Robust homography estimation with RANSAC (5 px reprojection threshold)
        M, mask = cv2.findHomography(src_pts, dst_pts, cv2.RANSAC, 5.0)
        matchesMask = mask.ravel().tolist()  # 1 = RANSAC inlier, 0 = outlier

        # Map the four corners of the query image into the search image
        h, w = img1.shape
        pts = np.float32([[0, 0], [0, h - 1], [w - 1, h - 1], [w - 1, 0]]).reshape(-1, 1, 2)
        dst = cv2.perspectiveTransform(pts, M)
        # Draw the detected region as a closed white (255) polygon
        img2 = cv2.polylines(img2, [np.int32(dst)], True, 255, 3, cv2.LINE_AA)
    else:
        print("Not enough matches are found - %d/%d" % (len(good), MIN_MATCH_COUNT))
        matchesMask = None

    draw_params = dict(matchColor=(0, 255, 0),
                       singlePointColor=None,
                       matchesMask=matchesMask,  # draw inliers only
                       flags=2)  # cv2.DrawMatchesFlags_NOT_DRAW_SINGLE_POINTS
    img3 = cv2.drawMatches(img1, kp1, img2, kp2, good, None, **draw_params)
    # print(M)
    # plt.imshow(cv2.cvtColor(img3, cv2.COLOR_BGR2RGB))
    # plt.show()
    cv2.imwrite("task1_result.jpg", img3)


def draw(img, corners, imgpts):
    # Placeholder from the assignment template; not used by task_two()
    return img


def task_two():
    """
    camera calibration and 3D visualization
    """
    criteria = (cv2.TERM_CRITERIA_EPS + cv2.TERM_CRITERIA_MAX_ITER, 30, 0.001)
    # World coordinates of the 7x6 inner corners (z = 0, one square = 1 unit)
    objp = np.zeros((6 * 7, 3), np.float32)
    objp[:, :2] = np.mgrid[0:7, 0:6].T.reshape(-1, 2)
    objpoints = []  # 3d points in real world space
    imgpoints = []  # 2d points in image plane
    images = glob.glob('left0*.jpg')

    # --------Your code--------
    for fname in images:
        img = cv2.imread(fname)
        gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
        ret, corners = cv2.findChessboardCorners(gray, (7, 6), None)
        if ret:
            objpoints.append(objp)
            # Refine the detected corners to sub-pixel accuracy
            corners2 = cv2.cornerSubPix(gray, corners, (11, 11), (-1, -1), criteria)
            imgpoints.append(corners2)

    # Image size as (width, height); all left0*.jpg images share the same size
    ret, mtx, dist, rvecs, tvecs = cv2.calibrateCamera(objpoints, imgpoints, gray.shape[::-1], None, None)
    np.savez('cameraParams', mtx=mtx, dist=dist)  # writes cameraParams.npz
    # print(mtx)

    # Axis end points: x, y, and -z (negative z points toward the camera)
    axis = np.float32([[3, 0, 0], [0, 3, 0], [0, 0, -3]]).reshape(-1, 3)
    img1 = cv2.imread('left03.jpg')
    gray = cv2.cvtColor(img1, cv2.COLOR_BGR2GRAY)
    ret, corners = cv2.findChessboardCorners(gray, (7, 6), None)
    if ret:
        corners2 = cv2.cornerSubPix(gray, corners, (11, 11), (-1, -1), criteria)
        # Find the rotation and translation vectors of the board
        ret, rvecs, tvecs = cv2.solvePnP(objp, corners2, mtx, dist)
        # Project the 3D axis points onto the image plane
        imgpts, jac = cv2.projectPoints(axis, rvecs, tvecs, mtx, dist)
        # The origin is the first detected corner; cv2.line needs integer tuples
        pt1 = tuple(map(int, np.around(corners2[0].ravel())))
        pt2 = tuple(map(int, np.around(imgpts[0].ravel())))
        pt3 = tuple(map(int, np.around(imgpts[1].ravel())))
        pt4 = tuple(map(int, np.around(imgpts[2].ravel())))
        img1 = cv2.line(img1, pt1, pt2, (255, 0, 0), 5)  # x axis: blue (BGR)
        img1 = cv2.line(img1, pt1, pt3, (0, 255, 0), 5)  # y axis: green
        img1 = cv2.line(img1, pt1, pt4, (0, 0, 255), 5)  # -z axis: red
    # plt.imshow(cv2.cvtColor(img1, cv2.COLOR_BGR2RGB))
    # plt.title('left03.jpg axis: blue(x) green(y) red(-z)'), plt.xticks([]), plt.yticks([])
    # plt.show()
    cv2.imwrite("task2_result.jpg", img1)


def task_three():
    """
    fundamental matrix estimation and epipolar line visualization
    """
    img1 = cv2.imread('task3-1.jpg')  # left image
    img2 = cv2.imread('task3-2.jpg')  # right image
    img1 = cv2.cvtColor(img1, cv2.COLOR_BGR2GRAY)
    img2 = cv2.cvtColor(img2, cv2.COLOR_BGR2GRAY)

    # --------Your code--------
    sift = cv2.SIFT_create()
    kp1, des1 = sift.detectAndCompute(img1, None)
    kp2, des2 = sift.detectAndCompute(img2, None)

    # FLANN matcher with a KD-tree index (suited to float SIFT descriptors)
    FLANN_INDEX_KDTREE = 1
    index_params = dict(algorithm=FLANN_INDEX_KDTREE, trees=5)
    search_params = dict(checks=50)
    flann = cv2.FlannBasedMatcher(index_params, search_params)
    matches = flann.knnMatch(des1, des2, k=2)

    good = []
    pts1 = []
    pts2 = []
    # Ratio test as per Lowe's paper
    for pair in matches:
        if len(pair) == 2 and pair[0].distance < 0.8 * pair[1].distance:
            m = pair[0]
            good.append(m)
            pts2.append(kp2[m.trainIdx].pt)
            pts1.append(kp1[m.queryIdx].pt)

    pts1 = np.int32(pts1)
    pts2 = np.int32(pts2)
    F, mask = cv2.findFundamentalMat(pts1, pts2, cv2.FM_LMEDS)
    print(F)
    # Keep only the inlier points
    pts1 = pts1[mask.ravel() == 1]
    pts2 = pts2[mask.ravel() == 1]

    def drawlines(img1, img2, lines, pts1, pts2):
        """img1 - image on which we draw the epilines for the points in img2
        lines - corresponding epilines"""
        r, c = img1.shape
        img1 = cv2.cvtColor(img1, cv2.COLOR_GRAY2BGR)
        img2 = cv2.cvtColor(img2, cv2.COLOR_GRAY2BGR)
        for line, pt1, pt2 in zip(lines, pts1, pts2):
            color = tuple(np.random.randint(0, 255, 3).tolist())
            # Intersect the epiline with the left (x = 0) and right (x = c) borders
            x0, y0 = map(int, [0, -line[2] / line[1]])
            x1, y1 = map(int, [c, -(line[2] + line[0] * c) / line[1]])
            img1 = cv2.line(img1, (x0, y0), (x1, y1), color, 1)
            img1 = cv2.circle(img1, tuple(map(int, pt1)), 5, color, -1)
            img2 = cv2.circle(img2, tuple(map(int, pt2)), 5, color, -1)
        return img1, img2

    # Epilines for the points in the right (second) image, drawn on the left image
    lines1 = cv2.computeCorrespondEpilines(pts2.reshape(-1, 1, 2), 2, F)
    lines1 = lines1.reshape(-1, 3)
    img5, img6 = drawlines(img1, img2, lines1, pts1, pts2)
    # Epilines for the points in the left (first) image, drawn on the right image
    lines2 = cv2.computeCorrespondEpilines(pts1.reshape(-1, 1, 2), 1, F)
    lines2 = lines2.reshape(-1, 3)
    img3, img4 = drawlines(img2, img1, lines2, pts2, pts1)
    # plt.subplot(121), plt.imshow(img5)
    # plt.subplot(122), plt.imshow(img3)
    # plt.show()
    # plt.imshow(cv2.cvtColor(img5, cv2.COLOR_BGR2RGB))
    # plt.show()
    # plt.imshow(cv2.cvtColor(img3, cv2.COLOR_BGR2RGB))
    # plt.show()
    cv2.imwrite("task3-1_result.jpg", img5)
    cv2.imwrite("task3-2_result.jpg", img3)


if __name__ == '__main__':
    task_one()
    task_two()
    task_three()
```
