【Datawhale 大模型基础】第四章 大模型的数据

第四章 大模型的数据

The relationship between LLMs and pre-training datasets is like that of a musician and their sheet music. The pre-training dataset is akin to various pieces of sheet music, while the LLM is like a skilled musician who, by studying these scores and drawing inspiration from them, can ultimately play beautiful and melodious music. The pre-training dataset provides rich language materials, and the large language model, through learning from these materials, can create fluent and expressive language expressions.

The pre-training datasets are vital for LLMs and many researchers work for improving the quality of pre-training datasets.

And I will follow the structure of survey, which uses three part to illustrate the pre-training datasets of LLMs.

4.1 Data Collection and Preparation

4.1.1 Data Source

Current large language models primarily utilize a blend of varied public textual datasets as the corpus for pre-training.

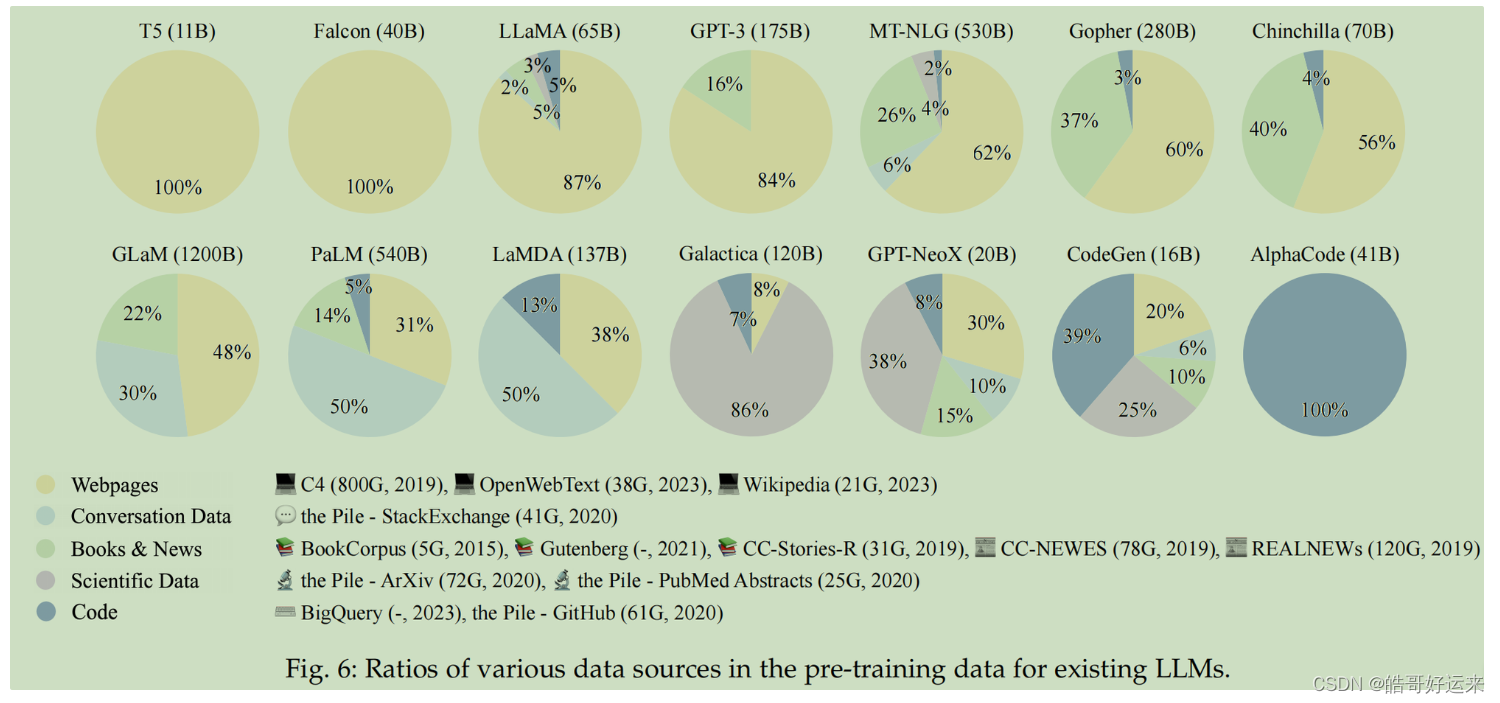

The pre-training corpus can be broadly divided into two categories: general data and specialized data. Most large language models (LLMs) make use of general data, such as webpages, books, and conversational text, because of its extensive, diverse, and easily accessible nature, which can improve the language modeling and generalization abilities of LLMs. Given the remarkable generalization capabilities demonstrated by LLMs, there are also studies that expand their pre-training corpus to include more specialized datasets, such as multilingual data, scientific data, and code, equipping LLMs with specific task-solving capabilities. And the figure below is cited from the survey and it shows the kind of training datasets visually.

General Data

- Webpages. With the widespread use of the Internet, a wide range of data has been generated, allowing LLMs to acquire diverse linguistic knowledge and improve their generalization capabilities. To make use of these data resources conveniently, a significant amount of data has been crawled from the web in prior research. However, the crawled web data often includes both high-quality text and low-quality text, making it crucial to filter and process webpages to enhance the quality of the data.

- Conversation text. Utilizing conversation data can enhance the conversational abilities of LLMs and potentially enhance their performance across various question-answering tasks. As online conversational data often involves discussions among multiple participants, an effective approach is to convert a conversation into a tree structure, linking each utterance to the one it responds to. This allows the multi-party conversation tree to be segmented into multiple sub-conversations, which can then be included in the pre-training corpus. However, there is a potential risk that excessive integration of dialogue data into LLMs may lead to an unintended consequence: declarative instructions and direct interrogatives could be mistakenly interpreted as the start of conversations, potentially reducing the effectiveness of the instructions.

- Books. In contrast to other corpora, books offer a significant reservoir of formal lengthy texts, which have the potential to be advantageous for LLMs in acquiring linguistic knowledge, capturing long-term dependencies, and producing cohesive and narrative texts.

Specialized Data

- Multilingual text. Apart from the text in the target language, the incorporation of a multilingual corpus can improve the multilingual capabilities of language comprehension and generation. Models utilizing multilingual corpus have shown remarkable proficiency in multilingual tasks, including translation, multilingual summarization, and multilingual question answering, often achieving comparable or superior performance to SoTA models fine-tuned on the corpus in the target language(s).

- Scientific text. The growth of scientific publications reflects humanity’s ongoing exploration of science. To enhance LLMs’ understanding of scientific knowledge, incorporating a scientific corpus for model pre-training is beneficial. Pre-training on a substantial amount of scientific text enables LLMs to excel in scientific and reasoning tasks. Efforts to construct the scientific corpus primarily involve gathering arXiv papers, scientific textbooks, mathematical webpages, and other related scientific resources. Given the intricate nature of data in scientific fields, such as mathematical symbols and protein sequences, specific tokenization and preprocessing techniques are typically necessary to unify these diverse data formats for processing by language models.

- Code. Recent studies have demonstrated that training LLMs on a vast code corpus can significantly enhance the quality of the synthesized programs. The generated programs can successfully pass expert-designed unit-test cases or solve competitive programming questions. Generally, two types of code corpora are commonly used for pre-training LLMs. The first source is from programming question answering communities like Stack Exchange. The second source is from public software repositories such as GitHub, where code data (including comments and docstrings) are collected for utilization. Unlike natural language text, code is in the format of a programming language, involving long-range dependencies and precise execution logic.

4.2 Data Preprocessing

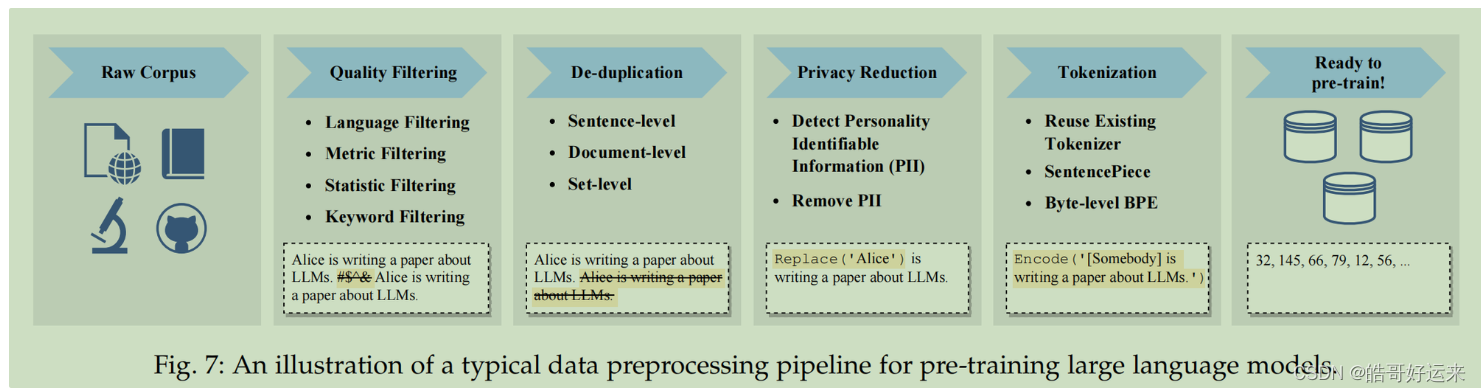

Once a substantial amount of text data has been gathered, it becomes crucial to preprocess the data in order to construct the pre-training corpus. This involves the removal of noisy, redundant, irrelevant, and potentially harmful data, as these factors can significantly impact the capacity and performance of LLMs. Through comparing the performance of models trained on both the filtered and unfiltered corpus, it has been concluded that pre-training LLMs on cleaned data can enhance model performance. And the figure below shows a typical pipeline of preprocessing the pre-training data for LLMs.

-

Quality Filtering. To eliminate low-quality data from the collected corpus, current research generally employs two approaches:

-

classifier-based

The classifier-based approach involves training a selection classifier using high-quality texts and using it to identify and filter out low-quality data. Typically, these methods train a binary classifier with well-curated data (e.g., Wikipedia pages) as positive instances and sample candidate data as negative instances, predicting a score that measures the quality of each data example. However, several studies have found that a classifier-based approach may inadvertently remove high-quality texts in dialectal, colloquial, and sociolectal languages, potentially leading to bias in the pre-training corpus and diminishing its diversity.

-

heuristic-based.

Several studies utilize heuristic-based approaches to eliminate low-quality texts through a set of well-designed rules, which can be summarized as follows:- Language-based filtering: If an LLM is primarily used for tasks in specific languages, text in other languages can be filtered out.

- Metric-based filtering: Evaluation metrics about the generated texts, such as perplexity, can be used to detect and remove unnatural sentences.

- Statistic-based filtering: Statistical features of a corpus, such as punctuation distribution, symbol-to-word ratio, and sentence length, can be used to measure text quality and filter low-quality data.

- Keyword-based filtering: Based on a specific keyword set, noisy or useless elements in the text, such as HTML tags, hyperlinks, boilerplates, and offensive words, can be identified and removed.

-

-

De-duplication. The duplication of data may lead to “double descent” (referring to the phenomenon of performance initially deteriorating and subsequently improving), or even overwhelm the training process. Furthermore, it has been demonstrated that duplicate data diminishes the ability of LLMs to learn from the context, which could further impact the generalization capacity of LLMs using in-context learning. Previous research has shown that duplicate data in a corpus can reduce the diversity of language models, potentially causing the training process to become unstable and affecting model performance. Specifically, de-duplication can be performed at different granularities, including sentence-level, document-level, and dataset-level de-duplication.

- Low-quality sentences containing repeated words and phrases should be removed, as they may introduce repetitive patterns in language modeling.

- At the document level, existing studies mostly rely on the overlap ratio of surface features (e.g., words and n-grams overlap) between documents to detect and remove duplicate documents containing similar contents.

- To avoid the dataset contamination problem, it is crucial to prevent overlap between the training and evaluation sets by removing possible duplicate texts from the training set.

It has been shown that the three levels of de-duplication are useful for improving the training of LLMs, and should be used jointly in practice.

-

Privacy Reduction. The majority of pre-training text data is sourced from the web, including user-generated content containing sensitive or personal information, which can heighten the risk of privacy breaches. Therefore, it’s essential to remove personally identifiable information (PII) from the pre-training corpus. One direct and effective approach is to use rule-based methods, such as keyword spotting, to detect and remove PII like names, addresses, and phone numbers. Additionally, researchers have found that the vulnerability of LLMs to privacy attacks can be linked to the presence of duplicate PII data in the pre-training corpus. Therefore, de-duplication can also help reduce privacy risks to some extent.

-

Tokenization. Tokenization is a crucial step in data preprocessing, aiming to segment raw text into sequences of individual tokens, which are then used as inputs for LLMs. In traditional NLP research, word-based tokenization is the predominant approach, aligned with human language cognition. However, word-based tokenization can yield different segmentation results for the same input in some languages, generate a large word vocabulary containing many low-frequency words, and also suffer from the “out-of-vocabulary” issue. As a result, several neural network models use characters as the minimum unit to derive word representation. Recently, subword tokenizers, such as Byte-Pair Encoding tokenization, WordPiece tokenization, and Unigram tokenization, have been widely used in Transformer-based language models.

- Byte-Pair Encoding (BPE) tokenization. It begins with a set of basic symbols (e.g., the alphabet and boundary characters) and iteratively combines frequent pairs of two consecutive tokens in the corpus to form new tokens through a process called “merge”. The selection criterion for each merge is based on the co-occurrence frequency of two contiguous tokens, with the most frequent pair being selected. This merging process continues until it reaches the predefined size. Notable language models that employ this tokenization approach include GPT-2, BART, and LLaMA.

- WordPiece tokenization. WordPiece shares a similar concept with BPE, iteratively merging consecutive tokens, but uses a slightly different selection criterion for the merge. To conduct the merge, it first trains a language model and uses it to score all possible pairs. Then, at each merge, it selects the pair that leads to the most increase in the likelihood of the training data.

- Unigram tokenization. In contrast to BPE and WordPiece, Unigram tokenization begins with a sufficiently large set of possible substrings or subtokens for a corpus and iteratively removes tokens from the current vocabulary until the expected vocabulary size is reached. It calculates the increase in the likelihood of the training corpus by assuming that a token was removed from the current vocabulary. This step is carried out based on a trained unigram language model. To estimate the unigram language model, it adopts an expectation-maximization (EM) algorithm, where at each iteration, the currently optimal tokenization of words is first found based on the old language model, and then the probabilities of unigrams are re-estimated to update the language model. Dynamic programming algorithms, such as the Viterbi algorithm, are used to efficiently find the optimal decomposition of a word given the language model. Representative models that adopt this tokenization approach include T5 and mBART.

4.3 Data Scheduling

In general, close attention should be given to two key aspects when it comes to data scheduling: the proportion of each data source (data mixture) and the order in which each data source is scheduled for training (data curriculum). A visual representation of data scheduling is provided in the figure below.

-

Data Distribution. Given that each type of data source is closely linked to the development of specific capabilities for LLMs, it is crucial to establish an appropriate distribution for mixing these data. The data mixture is typically set at a global level (i.e., the distribution of the entire pre-training data) and can also be locally adjusted to varying proportions at different stages of training. During pre-training, data samples from different sources are selected based on the mixture proportions: more data is sampled from a data source with a higher weight. Established LLMs such as LLaMA may utilize upsampling or downsampling on the complete data of each source to create specific data mixtures as pre-training data. Moreover, specialized data mixtures can be utilized to serve different purposes. In practice, the determination of data mixture is often empirical, and several common strategies for finding an effective data mixture as follows:

- Increasing the diversity of data sources. Recent studies have empirically demonstrated that excessive training data from a specific domain would diminish the generalization capability of LLMs on other domains. Conversely, enhancing the heterogeneity of data sources (e.g., including diverse data sources) is crucial for enhancing the downstream performance of LLMs. It has been shown that eliminating data sources with high heterogeneity (e.g., webpages) has a more severe impact on LLM capabilities than dropping sources with low heterogeneity (e.g., academic corpus).

- Optimizing data mixtures. In addition to manually setting the data mixtures, several studies have proposed optimizing the data mixtures to enhance model pre-training. Depending on the target downstream tasks, one can select pre-training data that either has higher proximity in the feature space or positively influences downstream task performance. Furthermore, to reduce the reliance on target tasks, DoReMi initially trains a small reference model using given initial domain weights, then trains another small proxy model, emphasizing the domains with the greatest discrepancies in likelihood between the two models. Finally, the learned domain weights of the proxy model are applied to train a much larger LLM. In a simpler approach, one can train several small language models with different data mixtures and select the data mixture that yields the most desirable performance. However, an assumption made in this approach is that when trained in a similar manner, small models would resemble large models in terms of abilities or behaviors, which may not always hold true in practice.

- Specializing the targeted abilities. The model capacities of LLMs heavily depend on data selection and mixture, and one can increase the proportions of specific data sources to enhance certain model abilities. For instance, mathematical reasoning and coding abilities can be specifically enhanced by training with more mathematical texts and code data, respectively. Moreover, experimental results on the LAMBADA dataset demonstrate that increasing the proportion of books data can enhance the model’s capacity to capture long-term dependencies from text. To enhance specific skills such as mathematics and coding in LLMs, or to develop specialized LLMs, a practical approach is to employ a multi-stage training method, where general and skill-specific data are scheduled in two consecutive stages. This approach of training LLMs on different sources or proportions of data across multiple stages is also known as “data curriculum,” which will be introduced below.

-

Data Curriculum. Once the data mixture is prepared, it becomes crucial to determine the order in which specific data is presented to LLMs during pre-training. Studies have demonstrated that, in certain cases, learning a particular skill in a sequential manner (e.g., basic skills → target skill) yields better results than direct learning from a corpus solely focused on the target skill.

Drawing from the concept of curriculum learning, data curriculum has been proposed and widely adopted in model pre-training. Its objective is to arrange different segments of pre-training data for LLMs in a specific order, such as commencing with easy/general examples and gradually introducing more challenging/specialized ones. In a broader sense, it can encompass the adaptive adjustment of data proportions for different sources during pre-training.

A practical approach to determining data curriculum involves monitoring the development of key LLM abilities based on specially constructed evaluation benchmarks and adaptively adjusting the data mixture during pre-training.

In general, finding a suitable data curriculum is more challenging. In practical terms, one can monitor the performance of intermediate model checkpoints on specific evaluation benchmarks and adjust the data mixture and distribution dynamically during pre-training. During this process, it is also beneficial to investigate the potential relationships between data sources and model abilities to guide the design of the data curriculum.

本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。 如若内容造成侵权/违法违规/事实不符,请联系我的编程经验分享网邮箱:veading@qq.com进行投诉反馈,一经查实,立即删除!