XIAO ESP32S3 Object Detection: Adding a Video Stream

I. Preface

Because the XIAO ESP32S3 development kit has no display accessory, an HTTP video stream is added so that the video from the ESP32S3 can be requested from a browser.

II. Approach

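1. After boot, the XIAO ESP32S3 connects to an AP over Wi-Fi;

2. Start an HTTP server and register the MJPEG handler (uri_get_mjpeg, served by jpg_stream_httpd_handler);

3. The main task compresses the image output by the detection algorithm into JPEG and passes it to that handler through xMessageBuffer;

4. A device connected to the same AP opens a browser and enters the XIAO ESP32S3's IP address with port 8081 (IP:8081) to get the video stream.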
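Steps 2 and 3 form a single-producer, single-consumer pipeline: the main task writes whole JPEG frames into the message buffer and the HTTP handler reads them back one at a time. The C++ class below is a minimal host-side model of the message-buffer behaviour this design relies on, written for illustration only; MessageBufferModel and its methods are hypothetical names, not the FreeRTOS API. It assumes, as the FreeRTOS documentation describes, that each stored message costs its payload plus a length prefix of sizeof(size_t) bytes (4 bytes on the 32-bit ESP32-S3), that a send which does not fit fails rather than truncating, and that a receive into a too-small buffer leaves the message queued.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <deque>
#include <vector>

// Hypothetical host-side model of a FreeRTOS message buffer (NOT the real
// implementation): whole messages only, each costing payload + length prefix.
class MessageBufferModel {
public:
    MessageBufferModel(size_t capacity, size_t prefix_bytes)
        : capacity_(capacity), prefix_(prefix_bytes) {
        assert(prefix_bytes < capacity);
    }

    // Like a send with a zero timeout: returns bytes written, or 0 when the
    // message plus its prefix does not fit (the frame is dropped).
    size_t send(const uint8_t* data, size_t len) {
        if (used_ + prefix_ + len > capacity_) return 0;
        msgs_.emplace_back(data, data + len);
        used_ += prefix_ + len;
        return len;
    }

    // Copies the oldest message into out and returns its length. Returns 0
    // when nothing is queued or out is too small (the message stays queued).
    size_t receive(uint8_t* out, size_t out_size) {
        if (msgs_.empty() || msgs_.front().size() > out_size) return 0;
        const std::vector<uint8_t>& m = msgs_.front();
        size_t n = m.size();
        if (n > 0) std::memcpy(out, m.data(), n);
        used_ -= prefix_ + n;
        msgs_.pop_front();
        return n;
    }

    // Discards everything queued, as the handler does when a client connects.
    void reset() {
        msgs_.clear();
        used_ = 0;
    }

    size_t used() const { return used_; }

private:
    size_t capacity_;
    size_t prefix_;
    size_t used_ = 0;
    std::deque<std::vector<uint8_t>> msgs_;
};
```

Modelled this way, it is easy to see why app_main.cpp can call xMessageBufferSend() with a zero timeout: while no browser is draining the buffer, new frames are simply rejected, and the handler's reset on connect makes the client start from fresh frames.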

III. Writing the Code

1. Add the files

Add the files http_stream.cpp and http_stream.h to the main folder.

Edit CMakeLists.txt to add http_stream.cpp:

```cmake
idf_component_register(SRCS
    app_main.cpp
    fomo_mobilenetv2_model_data.cpp
    http_stream.cpp
)
```

2. Add AP connection and frame hand-off to the main function

The modified app_main.cpp:

```cpp
#include <inttypes.h>
#include <stdio.h>
#include <stdlib.h>

#include "esp_heap_caps.h"
#include "img_converters.h"
#include "core/edgelab.h"
#include "fomo_mobilenetv2_model_data.h"
#include "freertos/FreeRTOS.h"
#include "freertos/task.h"
#include "freertos/message_buffer.h"
#include "http_stream.h"

// Credentials of the AP to join (replace with your own).
#define DEMO_WIFI_SSID "huochaigun"
#define DEMO_WIFI_PASS "12345678"

// Storage for the message buffer that carries JPEG frames to the HTTP handler.
#define STORAGE_SIZE_BYTES (128 * 1024)

static uint8_t *ucStorageBuffer;
static StaticMessageBuffer_t xMessageBufferStruct;

#define kTensorArenaSize (1024 * 1024)

// RGB565 colors used to draw the detection boxes.
static const uint16_t color[] = {
    0x0000,
    0x03E0,
    0x001F,
    0x7FE0,
    0xFFFF,
};

extern "C" void app_main(void) {
    using namespace edgelab;

    Device* device = Device::get_device();
    device->init();
    printf("device_name:%s\n", device->get_device_name());

    // Bring up the network interface, re-initialising if it stays busy too long.
    Network* net = device->get_network();
    el_printf(" Network Demo\n");
    uint32_t cnt_for_retry = 0;
    net->init();
    while (net->status() != NETWORK_IDLE) {
        el_sleep(100);
        if (cnt_for_retry++ > 50) {
            net->init();
            cnt_for_retry = 0;
        }
    }
    el_printf(" Network initialized!\n");

    // Join the AP, re-issuing the join request periodically until it succeeds.
    cnt_for_retry = 0;
    net->join(DEMO_WIFI_SSID, DEMO_WIFI_PASS);
    while (net->status() != NETWORK_JOINED) {
        el_sleep(100);
        if (cnt_for_retry++ > 100) {
            net->join(DEMO_WIFI_SSID, DEMO_WIFI_PASS);
            cnt_for_retry = 0;
        }
    }
    el_printf(" WIFI joined!\n");

    // No display on the XIAO kit: frames are streamed to the browser instead.
    // Display* display = device->get_display();
    Camera* camera = device->get_camera();
    // display->init();
    camera->init(240, 240);

    // Message buffer shared with the HTTP handler. With the PSRAM malloc()
    // threshold lowered (see below), this 128 KB block should come from PSRAM.
    ucStorageBuffer = (uint8_t *)malloc(STORAGE_SIZE_BYTES);
    if (ucStorageBuffer != NULL) {
        xMessageBuffer = xMessageBufferCreateStatic(STORAGE_SIZE_BYTES, ucStorageBuffer, &xMessageBufferStruct);
        if (xMessageBuffer == NULL) {
            // Static creation allocates nothing; NULL means an invalid pointer was passed.
            printf("Create xMessageBuffer fail\n");
        }
    } else {
        printf("malloc ucStorageBuffer fail\n");
    }

    start_http_stream();

    // Load the FOMO model; the 1 MB tensor arena is requested from PSRAM explicitly.
    auto* engine = new EngineTFLite();
    auto* tensor_arena = heap_caps_malloc(kTensorArenaSize, MALLOC_CAP_SPIRAM | MALLOC_CAP_8BIT);
    if (tensor_arena == NULL) {
        printf("malloc tensor_arena fail\n");
        delete engine;
        return;
    }
    engine->init(tensor_arena, kTensorArenaSize);
    engine->load_model(g_fomo_mobilenetv2_model_data, g_fomo_mobilenetv2_model_data_len);
    auto* algorithm = new AlgorithmFOMO(engine);

    while (true) {
        el_img_t img;
        camera->start_stream();
        camera->get_frame(&img);
        algorithm->run(&img);

        uint32_t preprocess_time  = algorithm->get_preprocess_time();
        uint32_t run_time         = algorithm->get_run_time();
        uint32_t postprocess_time = algorithm->get_postprocess_time();

        // Results are centre-based (cx, cy, w, h); draw from the top-left corner.
        uint8_t i = 0u;
        for (const auto& box : algorithm->get_results()) {
            el_printf("\tbox -> cx_cy_w_h: [%d, %d, %d, %d] t: [%d] s: [%d]\n",
                      box.x, box.y, box.w, box.h, box.target, box.score);
            int16_t y = box.y - box.h / 2;
            int16_t x = box.x - box.w / 2;
            el_draw_rect(&img, x, y, box.w, box.h, color[++i % 5], 4);
        }
        el_printf("preprocess: %" PRIu32 ", run: %" PRIu32 ", postprocess: %" PRIu32 "\n",
                  preprocess_time, run_time, postprocess_time);

        // display->show(&img);

        // Encode the RGB565 frame as JPEG (quality 30) and hand it to the HTTP
        // handler. With a zero timeout the frame is dropped if it does not fit,
        // e.g. while no browser is connected and the buffer is full.
        uint8_t *jpg_buf = NULL;
        size_t jpg_buf_len = 0;
        bool jpeg_converted = fmt2jpg(img.data, img.size, img.width, img.height,
                                      PIXFORMAT_RGB565, 30, &jpg_buf, &jpg_buf_len);
        if (jpeg_converted) {
            if (xMessageBuffer != NULL) {
                xMessageBufferSend(xMessageBuffer, jpg_buf, jpg_buf_len, 0);
            }
            free(jpg_buf);
        }

        camera->stop_stream();
    }

    delete algorithm;
    delete engine;
}
```

Note: the RGB565 frame is encoded to JPEG at quality 30, which keeps each encoded frame small and makes the video stream smoother.
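The quality setting matters because every frame has to fit in the 128 KB message buffer (STORAGE_SIZE_BYTES) together with its length prefix. The sketch below does that arithmetic on the host. It assumes 2 bytes per RGB565 pixel and a 4-byte length prefix per message; the constant and helper names are hypothetical, and the capacity figure is approximate because it ignores any internal bookkeeping of the FreeRTOS implementation.

```cpp
#include <cassert>
#include <cstddef>

// Illustrative assumptions: RGB565 uses 2 bytes per pixel, and each message
// in the buffer carries a 4-byte length prefix (sizeof(size_t) on ESP32-S3).
constexpr size_t kBytesPerRgb565Pixel = 2;
constexpr size_t kLengthPrefix = 4;
constexpr size_t kStorageBytes = 128 * 1024;  // STORAGE_SIZE_BYTES in app_main.cpp

// Size of one uncompressed RGB565 frame.
constexpr size_t rgb565_frame_bytes(size_t width, size_t height) {
    return width * height * kBytesPerRgb565Pixel;
}

// Approximate number of frames of jpg_len bytes that can be queued at once;
// 0 means a single frame is too large and xMessageBufferSend() would fail.
constexpr size_t frames_that_fit(size_t jpg_len, size_t storage = kStorageBytes) {
    return storage / (jpg_len + kLengthPrefix);
}

static_assert(rgb565_frame_bytes(240, 240) == 115200, "240x240 RGB565 frame size");
```

For example, an uncompressed 240×240 RGB565 frame is 115 200 bytes, so only one would fit at a time, while a hypothetical 10 000-byte JPEG (an illustrative size, not a measurement) leaves room for 13 queued frames.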

3. HTTP service

http_stream.cpp:

```cpp
#include <stdio.h>
#include <stdint.h>
#include <stddef.h>
#include <stdlib.h>
#include <string.h>

#include "esp_log.h"
#include "esp_http_server.h"
#include "freertos/FreeRTOS.h"
#include "freertos/task.h"
#include "freertos/message_buffer.h"

#define PART_BOUNDARY "123456789000000000000987654321"
static const char* _STREAM_CONTENT_TYPE = "multipart/x-mixed-replace;boundary=" PART_BOUNDARY;
static const char* _STREAM_BOUNDARY = "\r\n--" PART_BOUNDARY "\r\n";
static const char* _STREAM_PART = "Content-Type: image/jpeg\r\nContent-Length: %u\r\n\r\n";

// Receive buffer for one JPEG frame; matches STORAGE_SIZE_BYTES in app_main.cpp.
#define JPG_BUF_SIZE (128 * 1024)

static const char *TAG = "http_stream";

// Created in app_main.cpp: the main task sends JPEG frames, the handler receives them.
MessageBufferHandle_t xMessageBuffer = NULL;

/**
 * @brief  Streams JPEG frames from xMessageBuffer as multipart/x-mixed-replace (MJPEG).
 * @param  req  Request passed in by esp_http_server.
 * @retval The error that ended the stream, e.g. after the client disconnects.
 */
static esp_err_t jpg_stream_httpd_handler(httpd_req_t *req)
{
    if (xMessageBuffer == NULL) {
        ESP_LOGE(TAG, "message buffer not created");
        return ESP_FAIL;
    }

    uint8_t *jpg_buf = (uint8_t *)malloc(JPG_BUF_SIZE);
    if (jpg_buf == NULL) {
        ESP_LOGE(TAG, "malloc jpg_buf fail");
        return ESP_FAIL;
    }

    // Drop frames that piled up while no client was connected.
    xMessageBufferReset(xMessageBuffer);

    esp_err_t res = httpd_resp_set_type(req, _STREAM_CONTENT_TYPE);
    char part_buf[64];

    while (res == ESP_OK) {
        // Wait up to 100 ms for the next frame; keep waiting if none arrived.
        size_t jpg_buf_len = xMessageBufferReceive(xMessageBuffer, jpg_buf, JPG_BUF_SIZE, pdMS_TO_TICKS(100));
        if (jpg_buf_len == 0) {
            continue;
        }
        // Boundary, part header, then the JPEG data; any send error
        // (e.g. the client closed the page) ends the loop.
        res = httpd_resp_send_chunk(req, _STREAM_BOUNDARY, strlen(_STREAM_BOUNDARY));
        if (res == ESP_OK) {
            int hlen = snprintf(part_buf, sizeof(part_buf), _STREAM_PART, (unsigned int)jpg_buf_len);
            res = httpd_resp_send_chunk(req, part_buf, hlen);
        }
        if (res == ESP_OK) {
            res = httpd_resp_send_chunk(req, (const char *)jpg_buf, jpg_buf_len);
        }
    }

    free(jpg_buf);
    return res;
}

static const httpd_uri_t uri_get_mjpeg = {
    .uri      = "/",
    .method   = HTTP_GET,
    .handler  = jpg_stream_httpd_handler,
    .user_ctx = NULL,
};

/**
 * @brief  Starts the HTTP server on port 8081 and registers the MJPEG handler on "/".
 * @retval Server handle, or NULL if the server failed to start.
 */
httpd_handle_t start_http_stream(void)
{
    printf("start_http_stream\n");

    // Default configuration, listening on port 8081.
    httpd_config_t config = HTTPD_DEFAULT_CONFIG();
    config.server_port = 8081;

    httpd_handle_t server = NULL;
    if (httpd_start(&server, &config) == ESP_OK) {
        // Register the URI handler.
        httpd_register_uri_handler(server, &uri_get_mjpeg);
    }
    // If the server failed to start, the returned handle is NULL.
    return server;
}
```
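To make the wire format concrete, the host-side C++ sketch below reproduces the bytes that jpg_stream_httpd_handler writes for each frame (boundary, part header, JPEG payload) and, in the other direction, splits such a body back into frames using each part's Content-Length. It only reuses the boundary and header strings from http_stream.cpp; build_part() and split_parts() are hypothetical helpers, not part of ESP-IDF, and the HTTP chunked transfer encoding that httpd_resp_send_chunk() adds on the wire is deliberately left out.

```cpp
#include <cassert>
#include <cstdio>
#include <string>
#include <vector>

// Same boundary and part header as http_stream.cpp.
#define PART_BOUNDARY "123456789000000000000987654321"
static const char* kBoundary = "\r\n--" PART_BOUNDARY "\r\n";
static const char* kPartFmt =
    "Content-Type: image/jpeg\r\nContent-Length: %u\r\n\r\n";

// Bytes sent for one frame (three httpd_resp_send_chunk() calls on the device).
std::string build_part(const std::string& jpeg) {
    char header[64];
    int n = std::snprintf(header, sizeof(header), kPartFmt,
                          static_cast<unsigned>(jpeg.size()));
    assert(n > 0 && n < static_cast<int>(sizeof(header)));
    return std::string(kBoundary) + std::string(header, n) + jpeg;
}

// Hypothetical client-side splitter. Stops when the next bytes are not a
// boundary or a part is incomplete; a non-numeric Content-Length makes
// std::stoul throw.
std::vector<std::string> split_parts(const std::string& body) {
    std::vector<std::string> frames;
    const std::string boundary = kBoundary;
    const std::string len_key = "Content-Length: ";
    size_t pos = 0;
    while (body.compare(pos, boundary.size(), boundary) == 0) {
        pos += boundary.size();
        size_t key = body.find(len_key, pos);
        size_t hdr_end = body.find("\r\n\r\n", pos);
        if (key == std::string::npos || hdr_end == std::string::npos || key > hdr_end) break;
        size_t value = key + len_key.size();
        size_t len = std::stoul(body.substr(value, hdr_end - value));
        size_t data = hdr_end + 4;  // skip the blank line after the headers
        if (data + len > body.size()) break;
        frames.push_back(body.substr(data, len));
        pos = data + len;
    }
    return frames;
}
```

Relying on Content-Length rather than searching for the boundary means a JPEG payload that happens to contain "\r\n\r\n" or boundary-like bytes is still split correctly.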

http_stream.h:

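```cpp
#ifndef HTTP_STREAM_H
#define HTTP_STREAM_H

#include "freertos/FreeRTOS.h"
#include "freertos/message_buffer.h"
#include "esp_http_server.h"

// Message buffer carrying JPEG frames from app_main() to the HTTP handler.
extern MessageBufferHandle_t xMessageBuffer;

// Starts the HTTP server on port 8081; returns NULL on failure.
httpd_handle_t start_http_stream(void);

#endif
```

4. Adjust the PSRAM configuration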

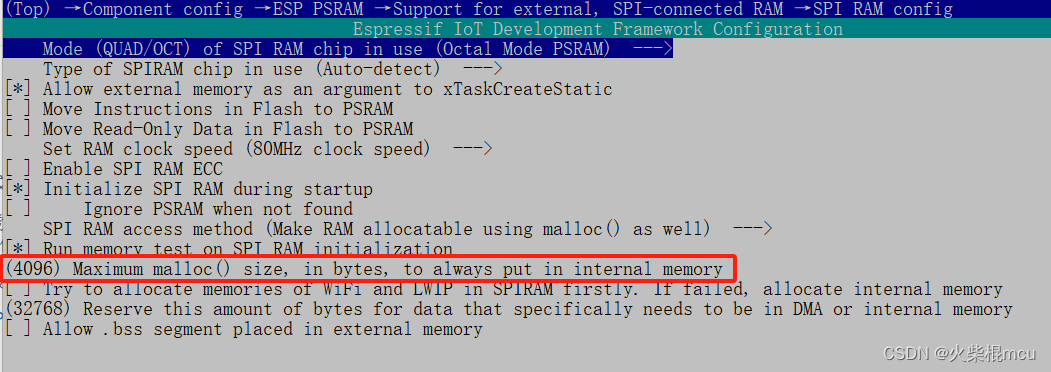

The added features consume a lot of RAM, so the PSRAM has to be put to full use.

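Run idf.py menuconfig and, under SPI RAM config, lower the malloc() threshold, here to 4096 bytes. With this setting, malloc() requests larger than 4096 bytes are served from the external PSRAM first (falling back to internal RAM if that fails), while smaller ones stay in internal memory. The configuration is: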

```
Component config  --->
    ESP PSRAM  --->
        SPI RAM config  --->
            (4096) Maximum malloc() size, in bytes, to always put in internal memory
```
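The threshold amounts to a simple placement policy. The host-side sketch below models it; Region and preferred_region are hypothetical names, and the `<=` boundary (a request of exactly the threshold stays internal) is an assumption based on the option's wording. It ignores the fallback in which ESP-IDF retries the other memory region when the preferred one is exhausted, and the option only matters when PSRAM has also been made available to malloc() in the same SPI RAM config menu.

```cpp
#include <cassert>
#include <cstddef>

enum class Region { Internal, Psram };

// Hypothetical model of "Maximum malloc() size, in bytes, to always put in
// internal memory": requests up to the threshold prefer internal RAM, larger
// requests prefer PSRAM. The fallback to the other region is not modelled.
constexpr Region preferred_region(size_t request, size_t threshold) {
    return request <= threshold ? Region::Internal : Region::Psram;
}

static_assert(preferred_region(128 * 1024, 4096) == Region::Psram,
              "128 KB buffers prefer PSRAM");
static_assert(preferred_region(4096, 4096) == Region::Internal,
              "the threshold itself stays internal");
```

With the value of 4096 chosen here, the 128 KB buffers allocated with malloc() (ucStorageBuffer in app_main.cpp and the handler's receive buffer) are steered to PSRAM, whereas the tensor arena does not depend on this setting because it is requested explicitly with heap_caps_malloc(..., MALLOC_CAP_SPIRAM | MALLOC_CAP_8BIT).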

IV. Testing

1. Build, flash, and monitor

```shell
idf.py build
idf.py flash
idf.py monitor
```

Log output when connecting to the AP:

```
I (813) wifi:mode : sta (dc:54:75:d7:a2:10)
I (813) wifi:enable tsf
Network initialized!
I WAITING FOR IP...
I (2033) wifi:new:<11,0>, old:<1,0>, ap:<255,255>, sta:<11,0>, prof:1
I (2403) wifi:state: init -> auth (b0)
I (2413) wifi:state: auth -> assoc (0)
I (2423) wifi:state: assoc -> run (10)
W (2423) wifi:[ADDBA]rx delba, code:39, delete tid:5
I (2443) wifi:<ba-add>idx:0 (ifx:0, 90:76:9f:23:a3:58), tid:5, ssn:6, winSize:64
I (2583) wifi:connected with CMCC-2106, aid = 1, channel 11, BW20, bssid = 90:76:9f:23:a3:58
I (2583) wifi:security: WPA2-PSK, phy: bgn, rssi: -63
I (2583) wifi:pm start, type: 1
I (2583) wifi:dp: 1, bi: 102400, li: 3, scale listen interval from 307200 us to 307200 us
I (2583) wifi:set rx beacon pti, rx_bcn_pti: 0, bcn_timeout: 25000, mt_pti: 0, mt_time: 10000
I (2633) wifi:AP's beacon interval = 102400 us, DTIM period = 1
I (3583) esp_netif_handlers: edgelab ip: 192.168.10.104, mask: 255.255.255.0, gw: 192.168.10.1
```

The log shows that the IP address is 192.168.10.104.

2. View the video stream in a browser

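On a computer in the same LAN, open a browser, enter 192.168.10.104:8081 in the address bar and press Enter. The browser displays the video at a resolution of 240×240. Because the JPEG quality is set low, the image is not very sharp. The figure below shows the image displayed in the browser: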

(Figure: the video stream displayed in the browser)

A box indicates that an object has been detected.
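The boxes are drawn in app_main.cpp from results logged as cx_cy_w_h, that is, a centre point plus width and height, which the loop converts to a top-left corner before calling el_draw_rect(). The host-side sketch below isolates that conversion and the colour cycling; Box, Rect, to_top_left and color_for are hypothetical stand-ins, and the int16_t field types are an assumption rather than the edgelab definition.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical stand-ins: Box holds a centre-based result, Rect a top-left one.
struct Box { int16_t x, y, w, h; };
struct Rect { int16_t x, y, w, h; };

// Same arithmetic as app_main.cpp: x = cx - w / 2, y = cy - h / 2.
Rect to_top_left(const Box& b) {
    assert(b.w >= 0 && b.h >= 0);
    return Rect{static_cast<int16_t>(b.x - b.w / 2),
                static_cast<int16_t>(b.y - b.h / 2), b.w, b.h};
}

// RGB565 palette copied from app_main.cpp, indexed modulo 5.
const uint16_t kColor[] = {0x0000, 0x03E0, 0x001F, 0x7FE0, 0xFFFF};

uint16_t color_for(uint8_t index) { return kColor[index % 5]; }
```

Because the loop uses color[++i % 5] with i starting at 0, the first box gets index 1 (0x03E0), and the palette repeats every five boxes.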

V. Summary

This model mainly detects objects that are small and relatively dark in color.
