Literature Express: AI Medical Image Segmentation --- MR-Guided CT Network Training for Prostate Segmentation in CT Images

01

Introduction

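According to a report from the National Cancer Institute, about 9.9% of men in the United States suffer from prostate cancer. In addition, according to the American Cancer Society, an estimated 174,650 new cases of prostate cancer were expected to be diagnosed in 2019, and about 31,620 men were expected to die of the disease. Accurate diagnosis and effective treatment of prostate cancer have therefore received increasing emphasis in recent years. Image-guided radiation therapy (IGRT), which delivers high-energy X-rays to prostate cancer tissue from different directions under the guidance of different imaging modalities (i.e., CT and MRI), is one of the main treatments. In radiation therapy, the goal is to prevent possible over-irradiation of normal tissue while ensuring a sufficient dose to the cancerous tissue.

Therefore, accurately localizing and segmenting the prostate in CT (or MR) images plays an important role in improving the precision and accuracy of treatment.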

Title

An Effective MR-Guided CT Network Training for Segmenting Prostate in CT Images

Abstract

Segmentation of the prostate in medical imaging data (e.g., CT, MRI, TRUS) is often considered a critical yet challenging task for radiotherapy treatment. It is relatively easier to segment the prostate from MR images than from CT images, owing to the better soft-tissue contrast of MR images. For segmenting the prostate from CT images, most previous methods used CT alone, and thus their performance is often limited by the low tissue contrast of CT images. In this article, we explore the possibility of using indirect guidance from MR images to improve prostate segmentation in CT images. In particular, we propose a novel deep transfer learning approach, i.e., MR-guided CT network training (namely MICS-NET), which employs MR images to help learn better features in CT images for prostate segmentation. In MICS-NET, the guidance from MRI consists of two steps: (1) learning informative and transferable features from MRI and then transferring them to CT images in a cascade manner, and (2) adaptively transferring the prostate likelihood of the MRI model (i.e., a well-trained convnet that uses MR images only) with a view consistency constraint. To illustrate the effectiveness of our approach, we evaluate MICS-NET on a real CT prostate image set, with manual delineations available as the ground truth for evaluation. Our methods generate promising segmentation results, achieving (1) a Dice ratio six percentage points higher than that of the CT model using CT images only and (2) performance comparable to that of the MRI model using MR images only.

Index Terms: prostate segmentation, deep transfer learning, fully convolutional network, cascade learning, view consistency constraint.

Methods

方法

In this paper, we aim to borrow MRI information (e.g.,

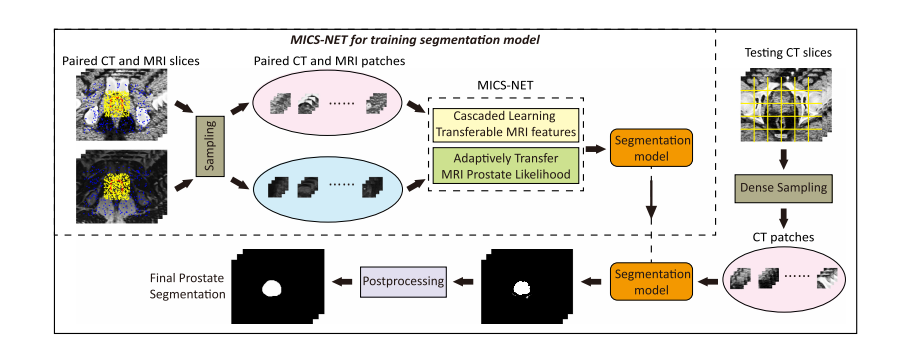

informative features) to benefit prostate segmentation in CT images, by transferring MRI model to help train a better CT model. Due to large intensity difference between CT and MR images, directly transferring the MRI features to CT model is not convenient. Thus, we design a novel strategy to first construct several new integrated images between CT and MR images as the bridge and then apply a cascade manner to transfer feature learning (i.e., transferring MRI features to the integrated images, and then transferring features from the integrated images to CT model). These integrated images could alleviate the loss brought by directly transferring the features from MRI to CT. Also, to sufficiently borrow the advantages of the MRI model to guide the CT model, we jointly optimize the CT and MRI models in the training phase by minimizing the disagreement of their estimated prostate likelihoods. In summary, we propose an effective MR-guided CT network training method, called MICS-NET, for segmenting prostate in CT images. Fig. 2 shows the flowchart of the proposed MICS NET, including both the training and testing phases. Specifically,

in the training phase, patches are sampled from paired 2D CT and MRI slices in the training data set to jointly train a segmentation model by our MICS-NET. In the testing phase, CT patches are first densely sampled from 2D CT slices in the testing data set and then fed into the trained segmentation model to obtain their prostate likelihoods. These prostate likelihoods are combined together to generate the likelihood map with the same size as the 2D CT slices, followed by a postprocessing phase for final segmentation.

在本文中,我们的目标是借助MRI信息(例如,信息特征)来促进CT图像中前列腺分割的效果,通过将MRI模型转移以帮助训练更好的CT模型。由于CT和MR图像之间存在较大的强度差异,直接将MRI特征转移到CT模型并不方便。因此,我们设计了一种新颖的策略,首先构建CT和MR图像之间的几个新的综合图像作为桥梁,然后以级联方式传递特征学习(即,将MRI特征转移到综合图像,然后将特征从综合图像转移到CT模型)。这些综合图像可以减轻直接将特征从MRI转移到CT所带来的损失。此外,为了充分借助MRI模型的优势来指导CT模型,我们在训练阶段联合优化CT和MRI模型,通过最小化它们估计的前列腺可能性的不一致性。
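The joint optimization described above can be pictured as a loss with three terms: a segmentation loss for each of the two models plus a penalty on the disagreement between their estimated prostate likelihoods. The following is only a minimal sketch under stated assumptions: binary cross-entropy for the segmentation terms, a mean squared difference for the disagreement term, and a hypothetical weight `lam` are illustrative choices that the text above does not specify, and the function names are made up for this example.

```python
import math


def bce(probs, labels, eps=1e-7):
    """Mean binary cross-entropy between likelihoods in (0, 1) and 0/1 labels."""
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)  # clamp so log() stays finite
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(probs)


def disagreement(ct_probs, mr_probs):
    """Mean squared difference between the CT and MRI likelihoods (assumed form)."""
    return sum((c - m) ** 2 for c, m in zip(ct_probs, mr_probs)) / len(ct_probs)


def joint_loss(ct_probs, mr_probs, labels, lam=1.0):
    """Segmentation loss of both models plus the weighted disagreement term."""
    return (bce(ct_probs, labels) + bce(mr_probs, labels)
            + lam * disagreement(ct_probs, mr_probs))


# Toy likelihoods for four pixels (made-up numbers, not results from the paper).
labels = [1, 1, 0, 0]
mr = [0.9, 0.8, 0.1, 0.2]           # MRI model: confident and correct
ct_far = [0.6, 0.5, 0.4, 0.5]       # CT model that disagrees with the MRI model
ct_near = [0.85, 0.8, 0.15, 0.2]    # CT model that mostly agrees with it
print(joint_loss(ct_far, mr, labels), joint_loss(ct_near, mr, labels))
```

In this toy setting the CT predictions that sit closer to the MRI predictions receive the lower joint loss, which is the behavior the disagreement term is meant to encourage.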

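In summary, we propose an effective MR-guided CT network training method, called MICS-NET, for segmenting the prostate in CT images. Fig. 2 shows the flowchart of the proposed MICS-NET, including both the training and testing phases. Specifically, in the training phase, patches are sampled from paired 2D CT and MRI slices in the training data set to jointly train a segmentation model with MICS-NET. In the testing phase, CT patches are first densely sampled from 2D CT slices in the testing data set and then fed into the trained segmentation model to obtain their prostate likelihoods. These prostate likelihoods are combined to generate a likelihood map of the same size as the 2D CT slices, followed by a postprocessing phase for the final segmentation.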
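The testing phase described above (dense patch sampling, per-patch likelihoods, and a slice-sized likelihood map) might look roughly like the sketch below. It rests on several assumptions that the text does not state: overlapping predictions are combined by averaging, the trained model is replaced by a hypothetical `predict` callable that returns a likelihood patch of the same size as its input, and postprocessing is reduced to a plain 0.5 threshold.

```python
def dense_likelihood_map(image, patch, stride, predict):
    """Slide a patch x patch window over a 2D slice and average the predictions."""
    h, w = len(image), len(image[0])
    acc = [[0.0] * w for _ in range(h)]   # summed likelihoods per pixel
    cnt = [[0] * w for _ in range(h)]     # number of patches covering each pixel
    rows = list(range(0, h - patch + 1, stride))
    cols = list(range(0, w - patch + 1, stride))
    if rows[-1] != h - patch:             # make sure the bottom border is covered
        rows.append(h - patch)
    if cols[-1] != w - patch:             # make sure the right border is covered
        cols.append(w - patch)
    for r in rows:
        for c in cols:
            sub = [row[c:c + patch] for row in image[r:r + patch]]
            pred = predict(sub)           # likelihood patch, same size as sub
            for i in range(patch):
                for j in range(patch):
                    acc[r + i][c + j] += pred[i][j]
                    cnt[r + i][c + j] += 1
    return [[acc[i][j] / cnt[i][j] for j in range(w)] for i in range(h)]


def binarize(likelihood_map, threshold=0.5):
    """Stand-in for postprocessing: label pixels at or above the threshold as prostate."""
    return [[1 if v >= threshold else 0 for v in row] for row in likelihood_map]


def toy_model(sub):
    """Hypothetical placeholder for the trained CT model: bright pixels score high."""
    return [[1.0 if v > 0.5 else 0.0 for v in row] for row in sub]


slice_2d = [[0.0, 0.0, 0.0, 0.0],
            [0.0, 0.9, 0.8, 0.0],
            [0.0, 0.7, 0.9, 0.0],
            [0.0, 0.0, 0.0, 0.0]]
print(binarize(dense_likelihood_map(slice_2d, patch=3, stride=1, predict=toy_model)))
```

Averaging is only one way to merge overlapping patches; the count map `cnt` keeps border pixels, which are covered by fewer patches, on the same scale as interior pixels.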

Conclusions

Most previous prostate CT segmentation methods focus on learning sophisticated models or extracting informative features from CT images alone. Their performance might be largely influenced by the low tissue contrast of CT images. In this paper, we demonstrate that MR images can help improve segmentation performance on CT images. To realize this goal, we have presented a novel model, called MICS-NET, which learns a better model for prostate segmentation in CT images with guidance transferred from MR images. Specifically, our proposed MICS-NET first learns informative and transferable features via a cascaded learning paradigm, and then imposes a view consistency constraint to fuse the models across the CT and MR images. The experimental results show that our method generates promising prostate segmentation results in CT images, which indicates that MR images can indeed help prostate segmentation in CT images.

Figure

Fig. 1. Two typical examples of the intensity distributions of CT and MR images of the same patient. The blue curves represent the intensity distributions of prostate voxels, while the magenta curves represent the intensity distributions of non-prostate voxels. In the CT (right) and MR (left) images, the red curves denote the true prostate boundaries manually delineated by a physician. It is worth noting that the intensity distributions of prostate and non-prostate voxels are easier to separate in MR images than in CT images.

Fig. 2. The flowchart of the proposed MICS-NET for prostate segmentation in CT images. The dashed line with the arrow indicates that we train the segmentation model in the training stage and then use it to segment the prostate in the testing stage.

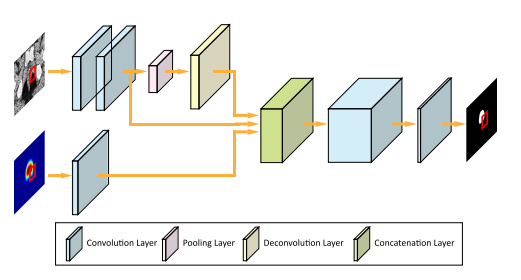

Fig. 3. Illustration of the structure of the patchFCN network. There are two input channels: (1) patches sampled from the training/testing images and (2) the location prior of the patches, obtained by averaging all segmentation labels in the training set after alignment to generate a rough prostate-location heat map.
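The location prior in the Fig. 3 caption can be illustrated with a few lines of code: averaging aligned binary label masks gives, for each pixel, the fraction of training cases in which that pixel is prostate. The sketch below assumes the masks are already aligned and equally sized, and the helper names (`location_prior`, `crop`, `two_channel_patch`) are hypothetical, not taken from the paper.

```python
def location_prior(aligned_masks):
    """Pixel-wise mean of aligned 0/1 label masks: a rough prostate-location heat map."""
    n = len(aligned_masks)
    height, width = len(aligned_masks[0]), len(aligned_masks[0][0])
    return [[sum(mask[i][j] for mask in aligned_masks) / n for j in range(width)]
            for i in range(height)]


def crop(image, top, left, size):
    """Cut a size x size patch whose top-left corner is (top, left)."""
    return [row[left:left + size] for row in image[top:top + size]]


def two_channel_patch(image, prior, top, left, size):
    """Stack the intensity patch and the matching prior patch as two input channels."""
    return [crop(image, top, left, size), crop(prior, top, left, size)]


# Three tiny made-up training masks, assumed to be aligned already.
masks = [
    [[0, 1, 0], [0, 1, 0], [0, 0, 0]],
    [[0, 1, 0], [0, 1, 1], [0, 0, 0]],
    [[0, 0, 0], [0, 1, 1], [0, 0, 0]],
]
prior = location_prior(masks)
ct_slice = [[10, 20, 30], [40, 50, 60], [70, 80, 90]]
print(two_channel_patch(ct_slice, prior, top=0, left=1, size=2))
```

Because the prior depends only on the training labels, it can be computed once and then cropped at the same coordinates as every image patch.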

Fig. 4. A sequence of continuous changes from MRI to CT, along the direction of the magenta arrows. The first row shows the paired CT and MR images, and the second row shows the integrated images obtained by averaging the aligned CT and MR images with different ratios.
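The integrated images in the Fig. 4 caption are weighted averages of aligned CT and MR images. A minimal sketch of such a bridge sequence is given below, assuming that both images have been normalized to a common intensity range, that the weighting is linear, and that the ratios are evenly spaced; the caption does not give the actual ratios, and the function names are hypothetical.

```python
def blend(ct, mr, ratio):
    """Weighted average of aligned images; ratio is the CT weight (0 gives MR, 1 gives CT)."""
    return [[ratio * c + (1.0 - ratio) * m for c, m in zip(ct_row, mr_row)]
            for ct_row, mr_row in zip(ct, mr)]


def bridge_sequence(ct, mr, steps):
    """Images moving from pure MR to pure CT in evenly spaced ratios (steps >= 2)."""
    return [blend(ct, mr, k / (steps - 1)) for k in range(steps)]


# Tiny made-up, pre-normalized, aligned image pair.
ct = [[1.0, 0.5], [0.0, 0.25]]
mr = [[0.0, 0.5], [1.0, 0.75]]
for image in bridge_sequence(ct, mr, steps=3):
    print(image)
```

In the cascade described in the paper, features would be transferred along such a sequence, from the MR end through the integrated images to the CT end.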

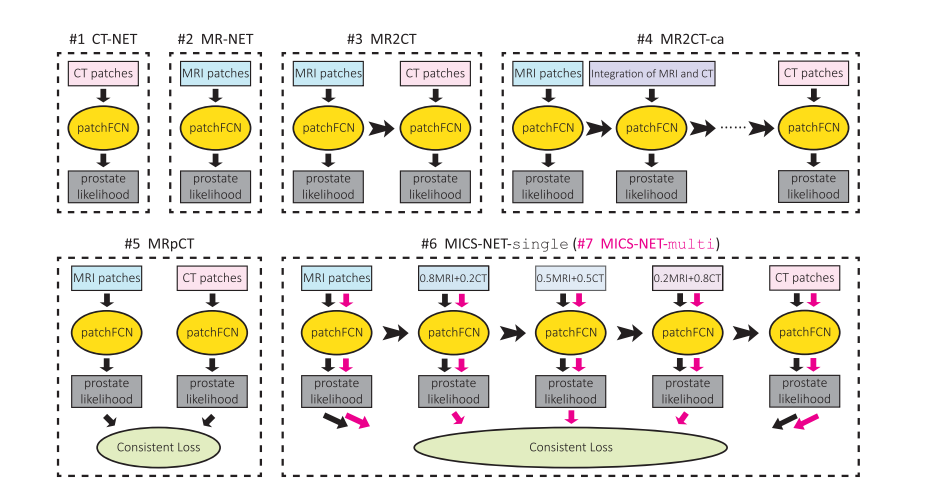

Fig. 5. Illustration of the related prostate segmentation models on CT or MRI patches. In the subfigures, all vertical arrows denote the flow of data input or output, and all horizontal arrows indicate the flow of model parameters. In the last subfigure, the vertical black arrows and the vertical magenta arrows represent the data flow of MICS-NET-single and MICS-NET-multi, respectively. In our methods (MICS-NET-single and MICS-NET-multi), the mixed patches from MR and CT images are used only in the training phase, to train a two-pipeline/multi-pipeline segmentation model. In the testing phase, the CT pipeline of the learned segmentation model is used to segment the CT images, and the MR images are not required.

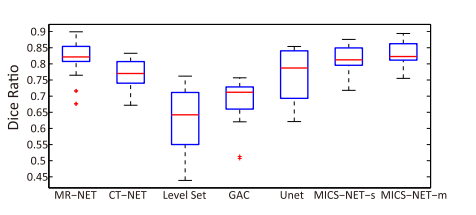

Fig. 6. Comparison of mean Dice ratio on all 22 patients. Each column represents the box plot of the respective method, in which the bottom and top of the box are the first and third quartiles, and the band inside the box is the second quartile (the median). Any data not included by the box is viewed as an outlier and marked with a red '+'. MICS-NET-single and MICS-NET-multi are abbreviated as MICS-NET-s and MICS-NET-m, respectively.
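The box plot elements named in the Fig. 6 caption (first quartile, median, third quartile, outliers) can be computed as in the sketch below. The caption does not state the exact outlier rule, so the common 1.5 × IQR fence is used here purely as an assumption, and the Dice values in the example are made up for illustration, not taken from the paper.

```python
import statistics


def box_stats(values, whisker=1.5):
    """Quartiles plus outliers under the (assumed) 1.5 x IQR fence rule."""
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - whisker * iqr, q3 + whisker * iqr
    outliers = [v for v in values if v < low or v > high]
    return {"q1": q1, "median": median, "q3": q3, "outliers": outliers}


# Hypothetical per-patient Dice ratios for one method.
dice_values = [0.86, 0.88, 0.87, 0.85, 0.89, 0.60, 0.88, 0.86]
print(box_stats(dice_values))
```

Other quartile conventions (for example the `"exclusive"` method of `statistics.quantiles`) shift the box edges slightly, so plots from different tools may not match exactly.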

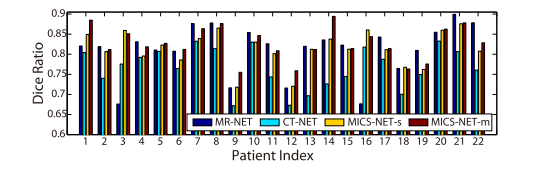

Fig. 7. Comparison of Dice ratio for each individual.
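For reference, the Dice ratio between a predicted mask A and a ground-truth mask B is 2|A ∩ B| / (|A| + |B|). The sketch below computes it for binary 2D masks stored as nested lists; returning 1.0 when both masks are empty is a convention chosen for this example, not something the source specifies.

```python
def dice_ratio(pred, truth):
    """Dice ratio 2|A and B| / (|A| + |B|) for two binary 2D masks of equal shape."""
    intersection = 0
    size_pred = 0
    size_truth = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            size_pred += 1 if p else 0
            size_truth += 1 if t else 0
            intersection += 1 if (p and t) else 0
    if size_pred + size_truth == 0:
        return 1.0  # assumed convention: two empty masks agree perfectly
    return 2.0 * intersection / (size_pred + size_truth)


# Made-up masks: the prediction covers the truth plus one extra pixel.
truth = [[0, 1, 0],
         [0, 1, 0],
         [0, 0, 0]]
pred = [[0, 1, 0],
        [0, 1, 1],
        [0, 0, 0]]
print(dice_ratio(pred, truth))  # 2 * 2 / (3 + 2) = 0.8
```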

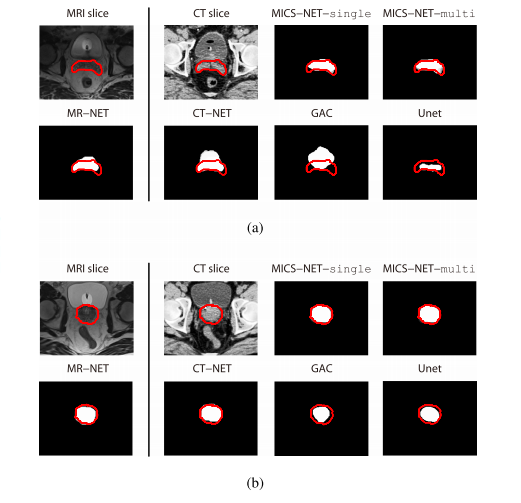

Fig. 8. Visual segmentation results in MRI and CT. The manually delineated prostate boundaries are shown in red.

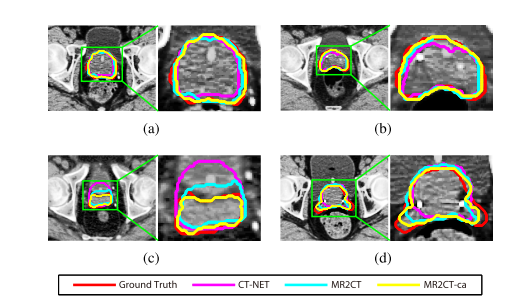

Fig. 9. Visual results of prostate segmentation in CT images by three methods. Different colors denote the segmentation results of different methods.

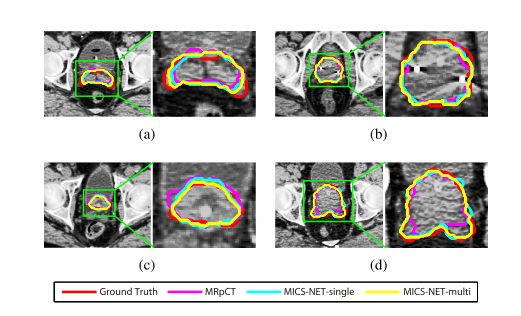

Fig. 10. Visual results of prostate segmentation in CT images by three other methods. Different colors denote the segmentation results of different methods.

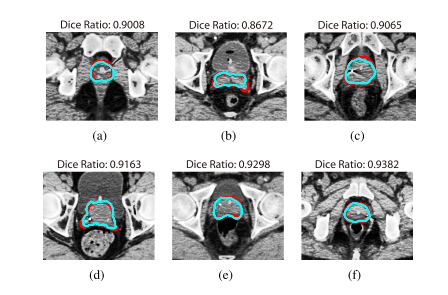

Fig. 11. Typical segmentation results in CT slices. The red curves indicate the manual segmentation by an experienced physician, and the light blue curves indicate the segmentation results of our proposed MICS-NET-single. The Dice ratio values are measured on the current 2D slices.

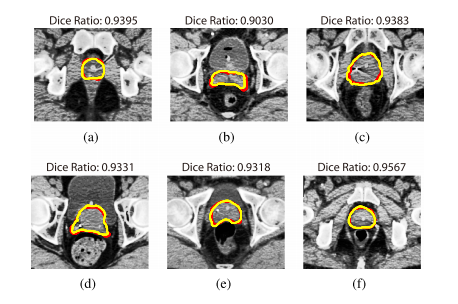

Fig. 12. Typical segmentation results in CT slices (which are respectively paired with the slices in Fig. 11). The red curves indicate the manual segmentation by an experienced physician, and the yellow curves indicate the segmentation results of our proposed MICS-NET-multi. The Dice ratio values are measured on the current 2D slices.

Table

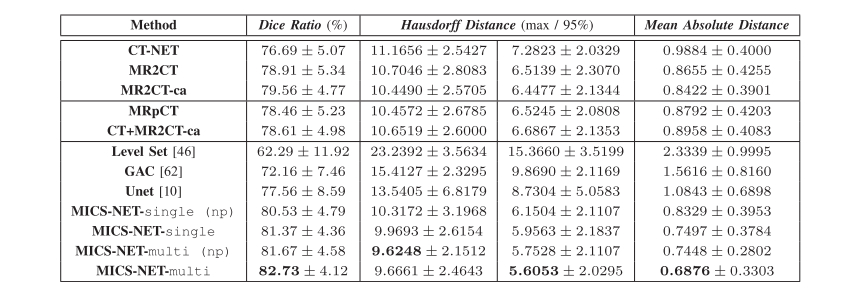

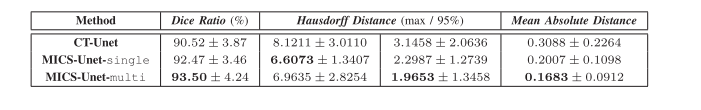

TABLE I. Comparison of different segmentation methods in CT images. The top mean results are in bold for each column. "NP" denotes our methods without postprocessing.

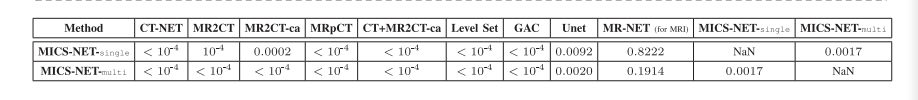

TABLE II. Statistical comparison of different segmentation methods in Dice ratio. When the p-value is less than 0.05, the two compared methods are considered to be significantly different.

TABLE III. Performance of image-based prostate segmentation in CT images with the augmented training data.

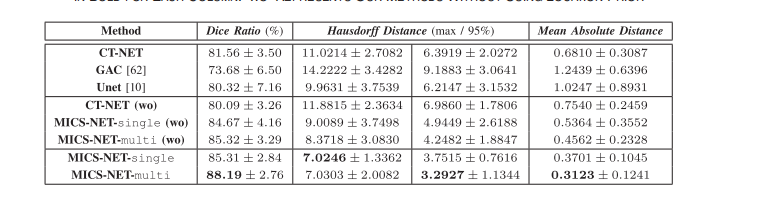

TABLE IV. Performance comparison of different segmentation methods in CT images after data generation. The top mean results are in bold for each column. "WO" represents our methods without using the location prior.
