Compiling FFmpeg on Ubuntu and using the resulting .so libraries for encoding and decoding in C++

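I have a requirement on Android: encoding and decoding must run in the native layer. My current plan has two steps:

1. Cross-compile FFmpeg with the NDK to get .so libraries for the target platform.

2. First write the sample code on Ubuntu. Once it is tested, compile the header and .cpp files directly into a .so for the Android side to use.

Because of step 2, I also need FFmpeg .so libraries for the x86 platform.

Below are my notes on building on x86 and the problems I ran into.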

1. Download the FFmpeg source. You can get it from GitHub or from the official website. I pulled the latest source directly from GitHub.

https://github.com/FFmpeg/FFmpeg

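2. I want libraries that can both encode and decode, so I also need libx264. FFmpeg ships its own H.264 decoder, but H.264 encoding requires the external x264 library.

Download page: https://www.videolan.org/developers/x264.html

After downloading, cd into the x264 directory and run the following commands: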

```shell
./configure --prefix=/home/wei/ffmpeg --enable-shared --disable-asm
make -j8
make install
```

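`--prefix` is the install location for the built x264. I used /home/wei/ffmpeg. After `make install`, /home/wei/ffmpeg contains `include` and `lib` directories with the headers and the .so. Because the prefix is inside the home directory, `sudo` is not needed. `--disable-asm` removes the dependency on the nasm assembler, but x264 becomes much slower. For real use, install nasm and drop that flag.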

If you need other modules, see this blog post:

https://blog.csdn.net/yellowyz/article/details/134547483

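3. With libx264 built, cd into the FFmpeg directory. I want shared libraries with both encoding and decoding, so I run the commands below. x264 was installed into /home/wei/ffmpeg rather than a system directory, so `PKG_CONFIG_PATH` first has to point at its `x264.pc`. Otherwise configure cannot find libx264.

```shell
export PKG_CONFIG_PATH=/home/wei/ffmpeg/lib/pkgconfig:$PKG_CONFIG_PATH
./configure --prefix="/home/wei/WorkShape/ffmpeg" \
  --enable-gpl \
  --enable-nonfree \
  --enable-shared \
  --enable-libmp3lame \
  --enable-libx264 \
  --enable-decoder=h264 \
  --enable-filter=delogo \
  --enable-debug \
  --disable-optimizations \
  --enable-libspeex
make -j8
make install
```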

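The script also enables some modules I don't actually need; I was too lazy to remove them. They are not entirely harmless, though. `--enable-libmp3lame` and `--enable-libspeex` require the libmp3lame and libspeex development packages (`libmp3lame-dev`, `libspeex-dev` on Ubuntu), and configure stops with an error if they are missing. Drop those flags if you don't need MP3 or Speex. `--enable-nonfree` is not required for libx264 either, because libx264 is GPL and `--enable-gpl` covers it.

The flags that matter for encoding and decoding are:
- `--enable-libx264` enables the H.264 encoder.
- `--enable-decoder=h264` makes the native H.264 decoder explicit (it is enabled by default anyway).
- `--enable-shared` builds shared libraries.

Other features can be enabled the same way.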

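With my build options, the following directories are generated in the `include` directory under the install prefix (/home/wei/WorkShape/ffmpeg/include for the configure command above):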

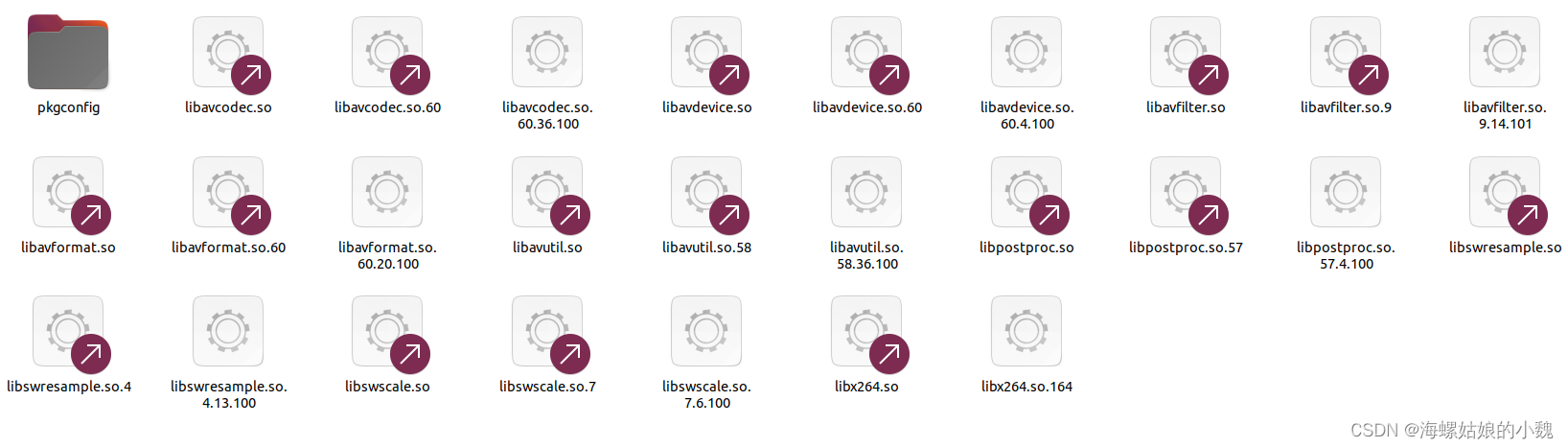

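The `lib` directory next to it contains the corresponding shared libraries (libavcodec.so, libavformat.so, libavutil.so, libswscale.so and so on), which your own programs can link against later. These libraries are not in a system library path. When running the test programs, add this directory (and /home/wei/ffmpeg/lib for libx264.so) to `LD_LIBRARY_PATH`, or set an rpath, so that the loader can find them.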

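With the libraries built, we can write the test code. The code below is adapted from FFmpeg's doc/examples/encode_video.c. It reads one NV12 image, copies it into an AVFrame, and encodes it 25 times, which gives one second of video at 25 fps. The only things to change are the input file name in `const char *inputFile = "1280_1024.nv12";` and the image size:

```cpp
#define image_width 1280
#define image_height 1024
```

Running it produces test.mp4. The packets are written straight to the file, so despite the extension, test.mp4 is a raw H.264 elementary stream, not an MP4 container. FFmpeg detects the format by probing the file contents, which is why the decoder below can still open it.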

Encoding

```cpp
#include <cinttypes>
#include <cstdio>
#include <cstdlib>
#include <cstring>

extern "C"
{
#include <libavcodec/avcodec.h>
#include <libavutil/error.h>
#include <libavutil/imgutils.h>
#include <libavutil/opt.h>
}

#define image_width 1280
#define image_height 1024

static void encode(AVCodecContext *enc_ctx, AVFrame *frame, AVPacket *pkt,
                   FILE *outfile)
{
    int ret;

    /* send the frame to the encoder; frame == NULL starts flushing */
    if (frame)
        printf("Send frame %3" PRId64 "\n", frame->pts);

    ret = avcodec_send_frame(enc_ctx, frame);
    if (ret < 0)
    {
        fprintf(stderr, "Error sending a frame for encoding\n");
        exit(1);
    }

    /* one frame can produce zero or more packets */
    while (ret >= 0)
    {
        ret = avcodec_receive_packet(enc_ctx, pkt);
        if (ret == AVERROR(EAGAIN) || ret == AVERROR_EOF)
            return;
        else if (ret < 0)
        {
            fprintf(stderr, "Error during encoding\n");
            exit(1);
        }

        printf("Write packet %3" PRId64 " (size=%5d)\n", pkt->pts, pkt->size);
        fwrite(pkt->data, 1, pkt->size, outfile);
        av_packet_unref(pkt);
    }
}

int main(int argc, char **argv)
{
    const char *filename = "test.mp4", *codec_name = "libx264";
    const AVCodec *codec;
    AVCodecContext *c = NULL;
    int i, ret;
    FILE *f;
    AVFrame *frame;
    AVPacket *pkt;
    uint8_t endcode[] = {0, 0, 1, 0xb7};

    /* find the libx264 encoder (only present if FFmpeg was built with --enable-libx264) */
    codec = avcodec_find_encoder_by_name(codec_name);
    if (!codec)
    {
        fprintf(stderr, "Codec '%s' not found\n", codec_name);
        exit(1);
    }

    c = avcodec_alloc_context3(codec);
    if (!c)
    {
        fprintf(stderr, "Could not allocate video codec context\n");
        exit(1);
    }

    pkt = av_packet_alloc();
    if (!pkt)
        exit(1);

    /* put sample parameters */
    c->bit_rate = 400000;
    /* resolution must be a multiple of two */
    c->width = image_width;
    c->height = image_height;
    /* frames per second; (AVRational){1, 25} is a C compound literal, not valid C++ */
    c->time_base = AVRational{1, 25};
    c->framerate = AVRational{25, 1};

    /* emit one intra frame every ten frames
     * check frame pict_type before passing frame
     * to encoder, if frame->pict_type is AV_PICTURE_TYPE_I
     * then gop_size is ignored and the output of encoder
     * will always be I frame irrespective to gop_size
     */
    c->gop_size = 10;
    c->max_b_frames = 1;
    c->pix_fmt = AV_PIX_FMT_NV12; /* libx264 accepts NV12 input directly */

    if (codec->id == AV_CODEC_ID_H264)
        av_opt_set(c->priv_data, "preset", "slow", 0);

    /* open it */
    ret = avcodec_open2(c, codec, NULL);
    if (ret < 0)
    {
        char errbuf[AV_ERROR_MAX_STRING_SIZE];
        av_strerror(ret, errbuf, sizeof(errbuf));
        fprintf(stderr, "Could not open codec: %s\n", errbuf);
        exit(1);
    }

    f = fopen(filename, "wb");
    if (!f)
    {
        fprintf(stderr, "Could not open %s\n", filename);
        exit(1);
    }

    /* read one NV12 image: Y plane (w*h bytes) + interleaved UV plane (w*h/2 bytes) */
    const char *inputFile = "1280_1024.nv12";
    const size_t y_size = (size_t)image_width * image_height;
    const size_t image_size = y_size * 3 / 2;
    uint8_t *buffer = (uint8_t *)malloc(image_size);
    if (!buffer)
    {
        fprintf(stderr, "Could not allocate the input buffer\n");
        exit(1);
    }
    FILE *file = fopen(inputFile, "rb");
    if (file == nullptr)
    {
        fprintf(stderr, "Could not open file %s\n", inputFile);
        exit(1);
    }
    size_t nread = fread(buffer, 1, image_size, file);
    fclose(file);
    if (nread != image_size)
    {
        fprintf(stderr, "%s: expected %zu bytes, read %zu\n", inputFile, image_size, nread);
        exit(1);
    }

    frame = av_frame_alloc();
    if (!frame)
    {
        fprintf(stderr, "Could not allocate video frame\n");
        exit(1);
    }
    frame->format = c->pix_fmt;
    frame->width = c->width;
    frame->height = c->height;

    ret = av_frame_get_buffer(frame, 0);
    if (ret < 0)
    {
        fprintf(stderr, "Could not allocate the video frame data\n");
        exit(1);
    }

    for (i = 0; i < 25; i++)
    {
        fflush(stdout);

        /* Make sure the frame data is writable. After the first round the
           encoder may still hold a reference to the buffer passed via
           avcodec_send_frame(); av_frame_make_writable() allocates a new
           buffer only if necessary. */
        ret = av_frame_make_writable(frame);
        if (ret < 0)
            exit(1);

        /* Fill the frame with the NV12 image. Copy row by row per plane,
           because frame->linesize[] may be larger than the image width. */
        av_image_copy_plane(frame->data[0], frame->linesize[0],             /* Y */
                            buffer, image_width, image_width, image_height);
        av_image_copy_plane(frame->data[1], frame->linesize[1],             /* UV */
                            buffer + y_size, image_width, image_width, image_height / 2);

        frame->pts = i;

        /* encode the image */
        encode(c, frame, pkt, f);
    }

    /* flush the encoder */
    encode(c, NULL, pkt, f);

    /* Packets are written directly, i.e. as an "elementary stream". The
       sequence end code below only makes sense for MPEG-1/2; nothing is
       appended for libx264. A proper container (real MP4) needs a muxer,
       see mux.c in the FFmpeg examples. */
    if (codec->id == AV_CODEC_ID_MPEG1VIDEO || codec->id == AV_CODEC_ID_MPEG2VIDEO)
        fwrite(endcode, 1, sizeof(endcode), f);
    fclose(f);

    free(buffer);
    avcodec_free_context(&c);
    av_frame_free(&frame);
    av_packet_free(&pkt);

    return 0;
}
```

Decoding

Next comes the decoding side: decode the test.mp4 generated above back into YUV data.

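```cpp
// VideoDecoder.h
#pragma once

#include <atomic>
#include <mutex>

extern "C"
{
#include <libavformat/avformat.h>
#include <libavcodec/avcodec.h>
}

class VideoDecoder
{
private:
    AVFormatContext *formatContext;
    AVCodecContext *codecContext;
    const AVCodec *codec;
    int videoStreamIndex;
    AVFrame *frame;
    AVPacket *packet;              // allocated with av_packet_alloc()
    std::mutex data_mutex_;
    const char *inputFilePath;
    std::atomic<bool> exit_;       // set from another thread via set_thread_exit()
    bool video_decoded_;
    int H264_frame;                // number of frames written so far
    bool isFirstFrameReady;

    int receiveFrames();           // pulls every frame the decoder can output now
    void writeFrame();             // dumps the current frame to frame<N>.yuv

public:
    VideoDecoder();
    ~VideoDecoder();
    void init(const char *input);
    int decode(char *display_buffer);
    void set_thread_exit();
    bool get_video_decoded_();
    bool getIsFirstFrameReady();
};
```

```cpp
// VideoDecoder.cpp
#include "VideoDecoder.h"

#include <cstdio>
#include <iostream>
#include <stdexcept>
#include <string>

VideoDecoder::VideoDecoder() : formatContext(nullptr),
                               codecContext(nullptr),
                               codec(nullptr),
                               videoStreamIndex(-1),
                               frame(av_frame_alloc()),
                               packet(av_packet_alloc()),
                               inputFilePath(nullptr),
                               exit_(false),
                               video_decoded_(false),
                               H264_frame(0),
                               isFirstFrameReady(false)
{
    if (!frame || !packet)
    {
        av_frame_free(&frame);
        av_packet_free(&packet);
        throw std::runtime_error("Could not allocate frame or packet");
    }
}

VideoDecoder::~VideoDecoder()
{
    // all of these free functions are safe to call on a NULL pointer
    av_frame_free(&frame);
    av_packet_free(&packet);
    avcodec_free_context(&codecContext);
    avformat_close_input(&formatContext);
}

void VideoDecoder::init(const char *input)
{
    inputFilePath = input;
    if (avformat_open_input(&formatContext, inputFilePath, nullptr, nullptr) != 0)
        throw std::runtime_error("Could not open the video file");
    if (avformat_find_stream_info(formatContext, nullptr) < 0)
        throw std::runtime_error("Could not read stream information");

    for (unsigned int i = 0; i < formatContext->nb_streams; i++)
    {
        if (formatContext->streams[i]->codecpar->codec_type == AVMEDIA_TYPE_VIDEO)
        {
            videoStreamIndex = i;
            break;
        }
    }
    if (videoStreamIndex == -1)
        throw std::runtime_error("Could not find a video stream");

    AVCodecParameters *codecParams = formatContext->streams[videoStreamIndex]->codecpar;
    codec = avcodec_find_decoder(codecParams->codec_id);
    if (!codec)
        throw std::runtime_error("Could not find a video decoder");
    codecContext = avcodec_alloc_context3(codec);
    if (!codecContext)
        throw std::runtime_error("Could not allocate the decoder context");
    if (avcodec_parameters_to_context(codecContext, codecParams) < 0)
        throw std::runtime_error("Could not initialize the decoder context");
    if (avcodec_open2(codecContext, codec, nullptr) < 0)
        throw std::runtime_error("Could not open the decoder");
}

bool VideoDecoder::get_video_decoded_()
{
    std::unique_lock<std::mutex> lock(data_mutex_);
    return video_decoded_;
}

bool VideoDecoder::getIsFirstFrameReady()
{
    std::unique_lock<std::mutex> lock(data_mutex_);
    return isFirstFrameReady;
}

void VideoDecoder::set_thread_exit()
{
    exit_ = true;
}

// Writes the current frame as planar YUV 4:2:0 without linesize padding.
// FFmpeg's native H.264 decoder outputs AV_PIX_FMT_YUV420P for 8-bit 4:2:0 streams.
void VideoDecoder::writeFrame()
{
    std::unique_lock<std::mutex> lock(data_mutex_);
    if (frame->format != AV_PIX_FMT_YUV420P && frame->format != AV_PIX_FMT_YUVJ420P)
    {
        std::cerr << "Unexpected pixel format " << frame->format << ", frame skipped" << std::endl;
        return;
    }
    const std::string name = "frame" + std::to_string(H264_frame) + ".yuv";
    FILE *file = fopen(name.c_str(), "wb");
    if (file != nullptr)
    {
        const int cw = (frame->width + 1) / 2, ch = (frame->height + 1) / 2;
        for (int y = 0; y < frame->height; y++) // Y plane
            fwrite(frame->data[0] + y * frame->linesize[0], 1, frame->width, file);
        for (int y = 0; y < ch; y++)            // U plane
            fwrite(frame->data[1] + y * frame->linesize[1], 1, cw, file);
        for (int y = 0; y < ch; y++)            // V plane
            fwrite(frame->data[2] + y * frame->linesize[2], 1, cw, file);
        fclose(file);
    }
    printf("filename :%s H264_frame%d\n", inputFilePath, H264_frame);
    H264_frame++;
    isFirstFrameReady = true;
}

// Returns 0 when the decoder needs more input or is fully drained, <0 on error.
int VideoDecoder::receiveFrames()
{
    while (true)
    {
        int ret = avcodec_receive_frame(codecContext, frame);
        if (ret == AVERROR(EAGAIN) || ret == AVERROR_EOF)
            return 0;
        if (ret < 0)
            return ret;
        writeFrame();
        av_frame_unref(frame);
    }
}

int VideoDecoder::decode(char *display_buffer)
{
    (void)display_buffer; // not used yet: frames are written to frame<N>.yuv instead
    try
    {
        {
            std::unique_lock<std::mutex> lock(data_mutex_);
            video_decoded_ = false;
        }

        while (av_read_frame(formatContext, packet) >= 0)
        {
            if (exit_)
            {
                printf("exit_:%d\n", exit_.load());
                av_packet_unref(packet);
                break;
            }

            if (packet->stream_index == videoStreamIndex)
            {
                // one packet can yield zero or more frames, so receive in a loop
                if (avcodec_send_packet(codecContext, packet) < 0 || receiveFrames() < 0)
                    std::cerr << "Error while decoding a packet, skipped" << std::endl;
            }
            av_packet_unref(packet);
        }

        // Drain: a NULL packet makes the decoder return the frames it is still
        // holding back (the test stream has B-frames) until AVERROR_EOF.
        if (!exit_ && avcodec_send_packet(codecContext, nullptr) >= 0)
            receiveFrames();

        printf("filename :%s decode finished\n", inputFilePath);
        std::unique_lock<std::mutex> lock(data_mutex_);
        video_decoded_ = true;
    }
    catch (const std::exception &ex)
    {
        std::cerr << "Error: " << ex.what() << std::endl;
    }
    return 0;
}
```

```cpp
// main program
#include "VideoDecoder.h"

#include <cstdlib>
#include <exception>
#include <iostream>

int main()
{
    char *display_buffer = (char *)malloc(1280 * 1024 * 3 / 2);
    const char *filename = "test.mp4";
    int ret = 0;
    try
    {
        VideoDecoder videoDecoder;
        videoDecoder.init(filename); // throws std::runtime_error on failure
        videoDecoder.decode(display_buffer);
    }
    catch (const std::exception &ex)
    {
        std::cerr << "Error: " << ex.what() << std::endl;
        ret = 1;
    }
    free(display_buffer);
    return ret;
}
```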

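Above are the decoder's header file, its .cpp file, and the main function. Build them yourself with a CMakeLists.txt, linking against libavformat, libavcodec and libavutil.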
