非线性约束的优化问题_序列二次规划算法代码

1. 理论部分

2. 序列二次规划算法代码及解析

3.完整代码

1.理论部分

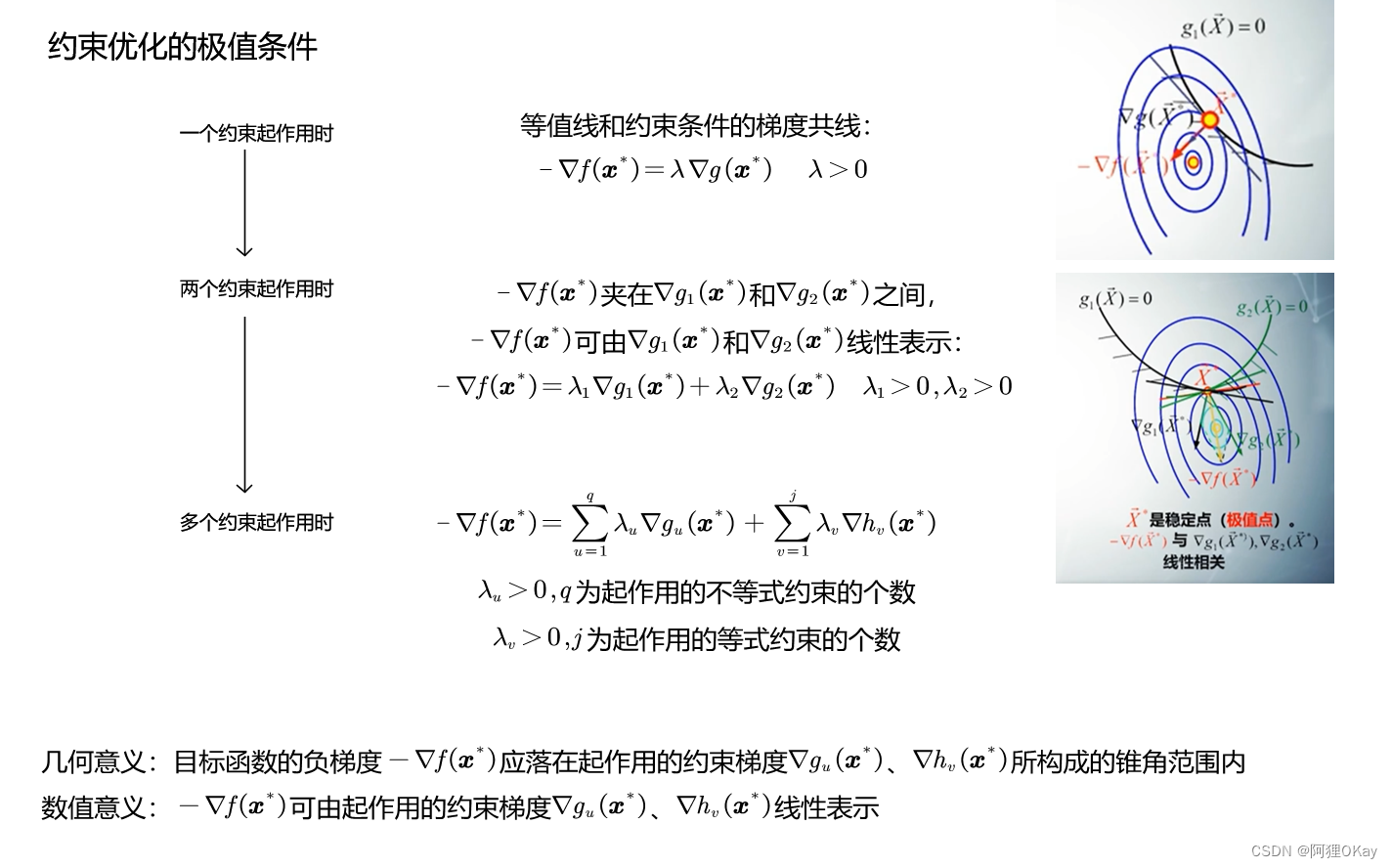

a.约束优化问题的极值条件

库恩塔克条件(Kuhn-Tucker conditions,KT条件)是确定某点为极值点的必要条件。如果所讨论的规划是凸规划,那么库恩-塔克条件也是充分条件。

(1)判断数值x处起作用的约束,计算x处各约束的取值是否为0,找出起作用的约束条件。并非所有约束条件都起作用,即并非落在所有约束条件的边界交集处。对于起作用的边界条件,满足边界函数g(x)或h(x)为0;

(2)数值x处,目标函数导数f'(x)和起作用的约束导数g'(x)的数值;

(3)代入KT条件(ex. ),求解拉格朗日乘子λ的大小,判断λ是否大于0,若大于零,则为极值点。

(4)若不满足条件,继续迭代。

具体做法如下:

其中λ为拉格朗日乘子,当某一点x使λ > 0存在时,称满足KT条件,则该点x是极值点。

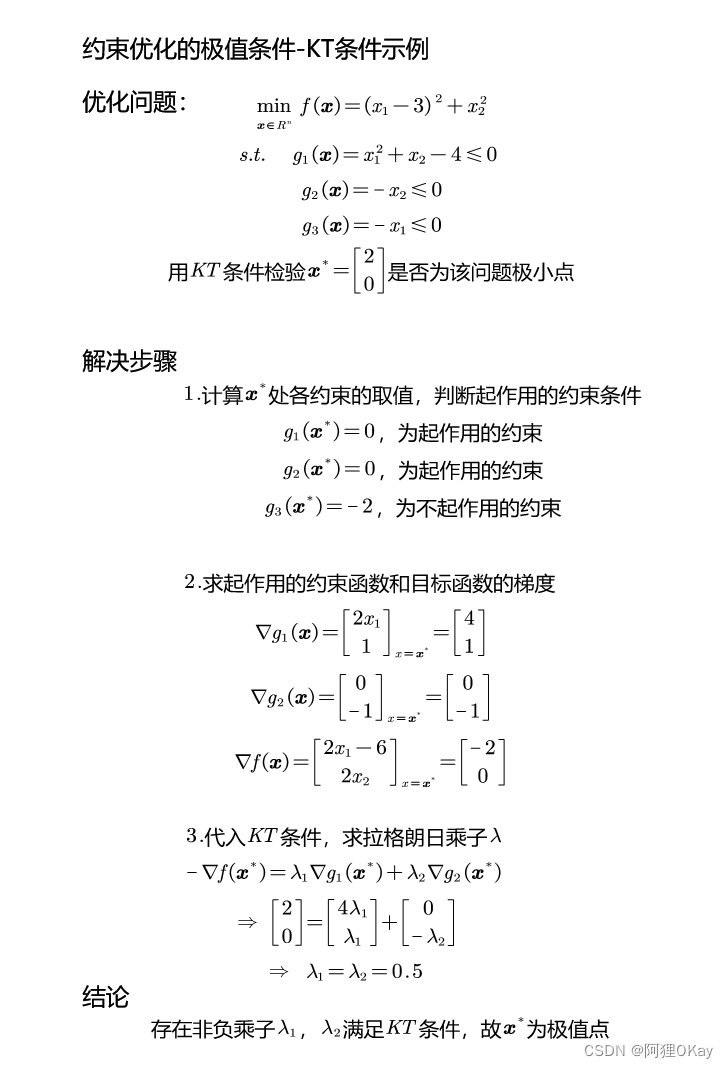

约束优化问题的应用示例如下图:

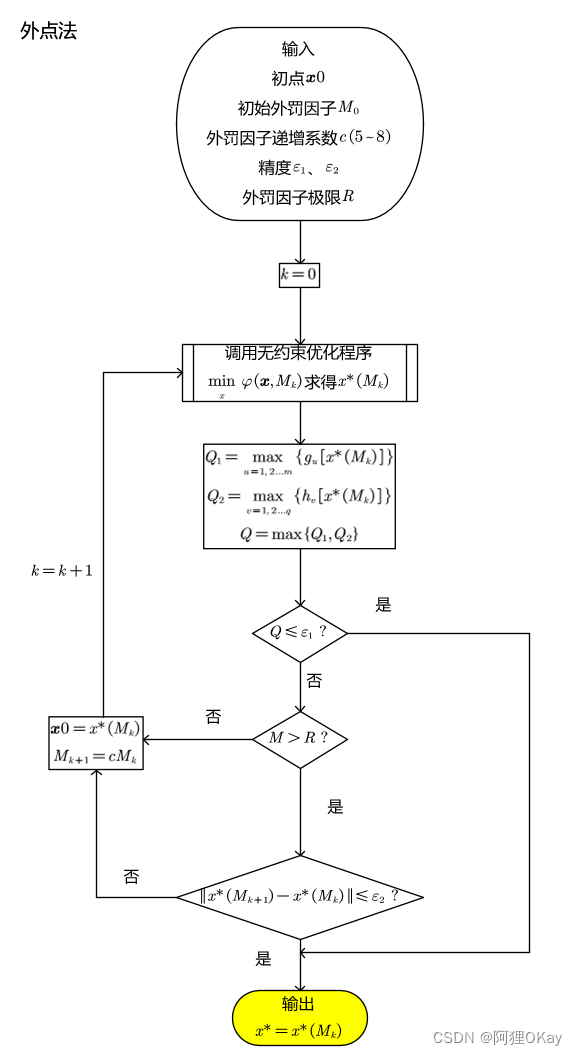

b.约束优化方法及迭代

此处介绍和使用外惩罚函数法/外点法

外点法是一种从随机一点(一般在可行域外)出发,逐渐移动到可行区域的方法。

对于约束优化问题:

构造外惩罚函数:

即,当g(x)不满足条件时,该项有大于0的值,h(x)不满足条件时,该项也有值,加和的越大代表该数值点距离距离约束的可行域越远,惩罚项的值越大,惩罚作用越明显。。其中Mk为外罚因子,M 0 < M 1 < M 2 . . . < M k?为递增的正数序列,一般M0=1,递增系数c=5~8。

迭代终止满足两个判断条件中一个即可:

1. ,Q为当前数值点多个约束函数中最大的值,即满足了所有的约束函数条件。

2.??且?

,R为外罚因子控制量,M超出外罚因子的极限R

外点法的迭代图如下:

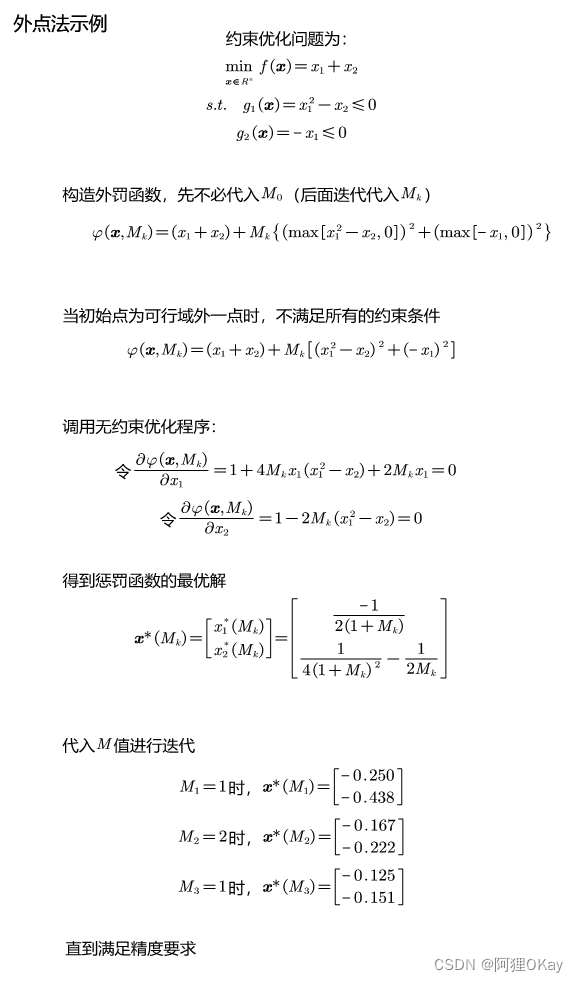

外点法的示例如下图:

a. 定义变量

vars = 2; % 自变量数量

cons = 1; % 约束数量

maxIter=100; % 最大迭代次数

x = [-1;4]; % 初始猜测值

l =0; % 拉格朗日乘子

H = eye(vars,vars); % Hessian 矩阵,假设为I矩阵目标函数为

及目标函数的导数为

function y = f(x)

y = x(1)^4 - 2*x(2)*x(1)^2 + x(2)^2 + x(1)^2 - 2*x(1)+5;

end

function y = gradf(x)

y(1) = 2*x(1)-4*x(1)*x(2)+4*x(1)^3-2;

y(2) = -2*x(1)^2 + 2*x(2);

end约束项不等式为

以及其导数为

function y = g(x)

y(1) = -(x(1)+0.25)^2+0.75*x(2);

end

function y = gradg(x)

y(1,1) = -2*x(1)-1/2;

y(1,2) = 3/4;

end求解KKT条件

function y = SolveKKT(gradfEval,gradgEval,gEval,Hessian)

A = [Hessian -gradgEval';gradgEval 0]; %对称矩阵,约束函数的梯度矩阵

b = [-gradfEval -gEval]';

y = A\b; %方程 Ay = b 解出y值,分别对应最优的x值和最优的拉格朗日乘子的值

end判断数值点x是否满足约束条件

function [gViol,lViol] = Viols(gEval,l)%约束条件函数值,拉格朗日乘子l

gViol=[];

lViol=[];

for i = 1:length(gEval)

if gEval(i)<0 %如果约束条件函数值小于零,则不满足约束

gViol(i)=gEval(i); %将约束条件函数值和拉格朗日乘子l,放入列表

lViol(i)=l(i);

end

end

end处理不满足约束的情况,构造惩罚函数,lViol对应Mk,累加得到惩罚函数

function y = Penalty(fEval,gViol,lViol)

%代入该点处的函数值,不满足约束的约束函数值,以及拉格朗日乘子l

sum = 0;

y = fEval;

for i = 1:length(gViol) %找出不满足约束条件的约束函数值

sum = sum + lViol(i)*abs(gViol(i)); %约束函数值g(x)求反,再乘一个系数,即,||l_1*g(x1)+l2*g(x2) ||

end

y = y + sum;

end在初始点首先进行初始计算,找出不满足约束的约束,计算其损失函数。

% EVALUATE AT STARTING POINT

fEval= f(x);

gEval = g(x);

[gViol,lViol] = Viols(gEval,l);

gradfEval = gradf(x);

gradgEval = gradg(x);

P = Penalty(fEval,gViol,lViol);开始进入SQP算法主体,求解kkt条件,得到xSol为函数x的解,lSol为拉格朗日乘子的解。

for i=1:maxIter %开始循环迭代

% SOLVE KKT CONDITIONS FOR THE OPTIMUM OF THE QUADRATIC APPROXIMATION

%求解二次优化的KKT条件

sol = SolveKKT(gradfEval,gradgEval,gEval,H);%代入导数f'(x),g'(x),约束函数值g(x),Hessian矩阵,

xSol = sol(1:vars);

lSol = sol(vars+1:vars+cons);

如果拉格朗日乘子有负的,即,不满足约束条件,则将其设为0,并重新求解方程

for j = 1:length(lSol)

if lSol(j)<0 %如果拉格朗日乘子有一个是负的

sol= H\(-gradfEval)'; %将结果重新求解H*sol = -gradfEval

xSol = sol(1:vars);

lSol(j)=0; %该约束的拉格朗日乘子置为0

end

end计算新的数值点情况

xNew = x + xSol; %更新得到新的数值点

lNew = lSol; % 得到新的拉格朗日乘子

fEvalNew = f(xNew);%计算新点目标函数的数值

gEvalNew = g(xNew);%计算新点的约束函数数值

gradfEvalNew = gradf(xNew);%计算该点目标函数梯度

gradgEvalNew = gradg(xNew);%计算该点约束函数梯度

[gViolNew,lViolNew] = Viols(gEvalNew,lNew);%找出不满足的约束条件

PNew = Penalty(fEvalNew,gViolNew,lViolNew);%代入惩罚函数如果惩罚函数增加,减小步长到原来的一半

% IF PENALTY FUNCTION INCREASED, LOWER THE STEP BY 0.5

while PNew-P>1e-4

xSol = 0.5*xSol;

xNew = x + xSol;

fEvalNew = f(xNew);

gEvalNew = g(xNew);

gradfEvalNew = gradf(xNew);

gradgEvalNew = gradg(xNew);

[gViolNew,lViolNew] = Viols(gEvalNew,lNew);

PNew = Penalty(fEvalNew,gViolNew,lViolNew);

end如果x数值变化很小,停止迭代,找到最优,结束迭代

% STOPPING CRITERION

if norm(xNew(1:vars)-x(1:vars))<=1e-2

break

end更新Hessian矩阵,Q为Mk+1- Mk的值

% UPDATE THE HESSIAN

gradLEval = gradLagr(gradfEval,gradgEval,lNew,vars); % lnew not l!!!

gradLEvalNew = gradLagr(gradfEvalNew,gradgEvalNew,lNew,vars);

Q = gradLEvalNew-gradLEval;

dx = xNew-x;

HNew = UpdateH(H,dx,Q);计算拉格朗日乘子梯度的函数

function y = gradLagr(gradfEval,gradgEval,l,n)

y = gradfEval';

sum = zeros(n,1);

for i = 1:length(l)

sum = sum -l(i)*gradgEval(i:n)';

end

y = y + sum;

end更新Hessian矩阵,gamma为Mk+1- Mk的值

function y = UpdateH(H,dx,gamma)

term1=(gamma*gamma') / (gamma'*dx);

term2 = ((H*dx)*(dx'*H)) / (dx'*(H*dx));

y = H + term1-term2;

end更新所有的变量,用于下次的迭代,包括Hessian矩阵H,数值点x,惩罚函数值P,目标函数值fEval,目标函数值梯度gradEval,约束函数值gEval,约束函数值梯度gradgEval

% UPDATE ALL VALUES FOR NEXT ITERATION

H = HNew;

fEval = fEvalNew;

gEval = gEvalNew;

gradfEval = gradfEvalNew;

gradgEval = gradgEvalNew;

P = PNew;

x = xNew;3.可以运行的完整代码如下:

% EXAMPLE OF SQP ALGORITHM

clear variables; close all; clc;

fprintf("---------------------------------------------------------------\n")

fprintf("An implementation of Sequential Quadratic Programming method\nin a nonlinear constrained optimization problem\n")

fprintf("---------------------------------------------------------------\n")

% INITIAL VALUES - INPUT

vars = 2; % number of variables

cons = 1; % number of constraints

maxIter=100; % max iterations

x = [-1;4]; % initial guess point

l =0; % LagrangeMultipliers vector

H = eye(vars,vars); % Hessian matrix assumed to be identity

% EVALUATE AT STARTING POINT

fEval= f(x);

gEval = g(x);

[gViol,lViol] = Viols(gEval,l);

gradfEval = gradf(x);

gradgEval = gradg(x);

P = Penalty(fEval,gViol,lViol);

% SQP ALGORITHM

for i=1:maxIter

% SOLVE KKT CONDITIONS FOR THE OPTIMUM OF THE QUADRATIC APPROXIMATION

sol = SolveKKT(gradfEval,gradgEval,gEval,H);

xSol = sol(1:vars);

lSol = sol(vars+1:vars+cons);

% IF THE LAGRANGE MULTIPLIER IS NEGATIVE SET IT TO ZERO

for j = 1:length(lSol)

if lSol(j)<0

sol= H\(-gradfEval)';

xSol = sol(1:vars);

lSol(j)=0;

end

end

% EVALUATE AT NEW CANDIDATE POINT

xNew = x + xSol;

lNew = lSol;

fEvalNew = f(xNew);

gEvalNew = g(xNew);

gradfEvalNew = gradf(xNew);

gradgEvalNew = gradg(xNew);

[gViolNew,lViolNew] = Viols(gEvalNew,lNew);

PNew = Penalty(fEvalNew,gViolNew,lViolNew);

% IF PENALTY FUNCTION INCREASED, LOWER THE STEP BY 0.5

while PNew-P>1e-4

xSol = 0.5*xSol;

xNew = x + xSol;

fEvalNew = f(xNew);

gEvalNew = g(xNew);

gradfEvalNew = gradf(xNew);

gradgEvalNew = gradg(xNew);

[gViolNew,lViolNew] = Viols(gEvalNew,lNew);

PNew = Penalty(fEvalNew,gViolNew,lViolNew);

end

% STOPPING CRITERION

if norm(xNew(1:vars)-x(1:vars))<=1e-2

break

end

% UPDATE THE HESSIAN

gradLEval = gradLagr(gradfEval,gradgEval,lNew,vars); % lnew not l!!!

gradLEvalNew = gradLagr(gradfEvalNew,gradgEvalNew,lNew,vars);

Q = gradLEvalNew-gradLEval;

dx = xNew-x;

HNew = UpdateH(H,dx,Q);

% UPDATE ALL VALUES FOR NEXT ITERATION

H = HNew;

fEval = fEvalNew;

gEval = gEvalNew;

gradfEval = gradfEvalNew;

gradgEval = gradgEvalNew;

P = PNew;

x = xNew;

end

fprintf('SQP: Optimum point:\n x1=%10.4f\n x2=%10.4f\n iterations =%10.0f \n', x(1), x(2), i)

% FUNCTIONS NEEDED

function y = SolveKKT(gradfEval,gradgEval,gEval,Hessian)

A = [Hessian -gradgEval';gradgEval 0];

b = [-gradfEval -gEval]';

y = A\b;

end

function y = f(x)

y = x(1)^4 - 2*x(2)*x(1)^2 + x(2)^2 + x(1)^2 - 2*x(1)+5;

end

function y = gradf(x)

y(1) = 2*x(1)-4*x(1)*x(2)+4*x(1)^3-2;

y(2) = -2*x(1)^2 + 2*x(2);

end

function y = gradLagr(gradfEval,gradgEval,l,n)

y = gradfEval';

sum = zeros(n,1);

for i = 1:length(l)

sum = sum -l(i)*gradgEval(i:n)';

end

y = y + sum;

end

function y = gradg(x)

y(1,1) = -2*x(1)-1/2;

y(1,2) = 3/4;

end

function y = g(x)

y(1) = -(x(1)+0.25)^2+0.75*x(2);

end

function [gViol,lViol] = Viols(gEval,l)

gViol=[];

lViol=[];

for i = 1:length(gEval)

if gEval(i)<0

gViol(i)=gEval(i);

lViol(i)=l(i);

end

end

end

function y = Penalty(fEval,gViol,lViol)

sum = 0;

y = fEval;

for i = 1:length(gViol)

sum = sum + lViol(i)*abs(gViol(i));

end

y = y + sum;

end

function y = UpdateH(H,dx,gamma)

term1=(gamma*gamma') / (gamma'*dx);

term2 = ((H*dx)*(dx'*H)) / (dx'*(H*dx));

y = H + term1-term2;

end

最后输出结果为:

---------------------------------------------------------------

An implementation of Sequential Quadratic Programming method

in a nonlinear constrained optimization problem

---------------------------------------------------------------

SQP: Optimum point:

x1= 0.4999

x2= 0.7498

iterations = 9 本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。 如若内容造成侵权/违法违规/事实不符,请联系我的编程经验分享网邮箱:veading@qq.com进行投诉反馈,一经查实,立即删除!