hab_virtio: hypervisor virtualization


Virtio: the Linux I/O virtualization framework

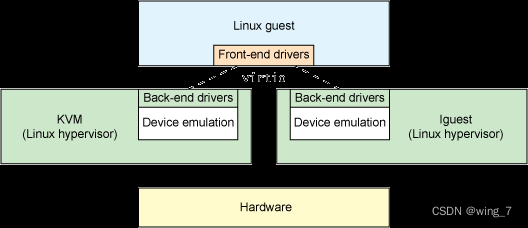

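In short, virtio is an abstraction layer over devices in a paravirtualized hypervisor. virtio was developed by Rusty Russell to support his own virtualization solution, lguest. This article starts with an introduction to paravirtualization and emulated devices, then explores the details of virtio. The focus is on the virtio framework in the 2.6.30 kernel release.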

Linux is a hypervisor platform. As I showed in my article on Linux as a hypervisor, Linux offers a variety of hypervisor solutions, each with different attributes and advantages.

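Examples include the Kernel-based Virtual Machine (KVM), lguest, and User-mode Linux. Having these different hypervisor solutions on Linux can tax the operating system according to their independent needs. One of those taxes is device virtualization. Rather than having a variety of device emulation mechanisms (for network, block, and other drivers), virtio provides a common front end for these device emulations, which standardizes the interface and increases code reuse across platforms.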
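To make the "common front end" idea concrete: each virtio driver publishes a table of device IDs, and the virtio bus binds the driver to any device whose ID matches an entry. The userspace sketch below models that matching rule, including the VIRTIO_DEV_ANY_ID wildcard that the HAB id_table later uses for the vendor field. The helper names id_match and table_match are illustrative, not kernel API; device IDs 1 (net) and 2 (block) and the vendor values in the test are only sample inputs.

```c
#include <assert.h>
#include <stdint.h>

/* Same value as the kernel's VIRTIO_DEV_ANY_ID wildcard. */
#define VIRTIO_DEV_ANY_ID 0xffffffffu

struct virtio_device_id {
    uint32_t device;
    uint32_t vendor;
};

/* One table entry matches if the device IDs are equal (or the entry uses
 * the wildcard) and the vendor is equal or the entry's vendor is a wildcard. */
static int id_match(const struct virtio_device_id *id,
                    const struct virtio_device_id *dev)
{
    if (id->device != dev->device && id->device != VIRTIO_DEV_ANY_ID)
        return 0;
    return id->vendor == VIRTIO_DEV_ANY_ID || id->vendor == dev->vendor;
}

/* Walk a table terminated by a zero device ID, as the bus does per driver. */
static int table_match(const struct virtio_device_id *ids,
                       const struct virtio_device_id *dev)
{
    for (int i = 0; ids[i].device; i++)
        if (id_match(&ids[i], dev))
            return 1;
    return 0;
}
```

Because the table is zero-terminated, a driver such as the HAB one can list all of its device IDs with `{ 0 }` as the last entry and let the bus do the matching.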

Full virtualization vs. paravirtualization

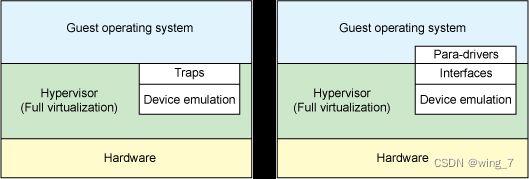

Let's quickly discuss two different types of virtualization schemes: full virtualization and paravirtualization.

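- In full virtualization, the guest operating system runs on top of a hypervisor that sits on the bare metal. The guest is unaware that it is being virtualized and requires no changes to work in this configuration.

- In paravirtualization, the guest operating system is not only aware that it is running on a hypervisor but also includes code that makes guest-to-hypervisor transitions more efficient (see Figure 1).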

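In the full virtualization scheme, the hypervisor must emulate device hardware, that is, emulate at the lowest level of the conversation (for example, down to the network driver). Although emulation is clean at this abstraction, it is also the least efficient and the most complex. In the paravirtualization scheme, the guest and the hypervisor can cooperate to make this emulation efficient. The downside of paravirtualization is that the operating system knows it is being virtualized and must be modified to work.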


Linux virtualization KVM-Qemu analysis (part 11): virtqueue_virtqueue_add_outbuf (CSDN blog)

lagvm/LINUX/android/kernel/msm-5.4/drivers/soc/qcom/hab/hab_virtio.c

    static struct virtio_device_tbl {
        int32_t mmid;
        __u32 device;
        struct virtio_device *vdev;
    } vdev_tbl[] = {
        { HAB_MMID_ALL_AREA, HAB_VIRTIO_DEVICE_ID_HAB, NULL }, /* hab */
        { MM_BUFFERQ_1, HAB_VIRTIO_DEVICE_ID_BUFFERQ, NULL },
        { MM_MISC, HAB_VIRTIO_DEVICE_ID_MISC, NULL },
        { MM_AUD_1, HAB_VIRTIO_DEVICE_ID_AUDIO, NULL },
        { MM_CAM_1, HAB_VIRTIO_DEVICE_ID_CAMERA, NULL },
        { MM_DISP_1, HAB_VIRTIO_DEVICE_ID_DISPLAY, NULL },
        { MM_GFX, HAB_VIRTIO_DEVICE_ID_GRAPHICS, NULL },
        { MM_VID, HAB_VIRTIO_DEVICE_ID_VIDEO, NULL },
    };
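vdev_tbl records which HAB virtio device ID serves which first mmid, while virthab_probe() hard-codes, in an if/else chain, how many consecutive mmids each device covers. A table-driven userspace sketch of that mapping follows. Only the start/range pairs come from the probe code; the numeric values of the device IDs and mmids are assumptions for illustration, since their definitions are not shown here.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical values; the real HAB_VIRTIO_DEVICE_ID_* constants are not shown. */
enum {
    DEV_HAB = 1, DEV_BUFFERQ, DEV_MISC, DEV_AUDIO,
    DEV_CAMERA, DEV_DISPLAY, DEV_GRAPHICS, DEV_VIDEO
};

/* Assumed mmid values, for illustration only. */
enum { MM_AUD_1 = 101, MM_CAM_1 = 201, MM_DISP_1 = 301, MM_GFX = 401,
       MM_VID = 501, MM_MISC = 601, MM_BUFFERQ_1 = 1001 };

struct mmid_span {
    unsigned int device;  /* virtio device ID reported by the backend */
    unsigned int start;   /* first mmid served by that device */
    int range;            /* number of consecutive mmids */
};

/* start/range pairs copied from the if/else chain in virthab_probe() */
static const struct mmid_span spans[] = {
    { DEV_BUFFERQ,  MM_BUFFERQ_1, 1 },
    { DEV_MISC,     MM_MISC,      1 },
    { DEV_AUDIO,    MM_AUD_1,     4 },
    { DEV_CAMERA,   MM_CAM_1,     2 },
    { DEV_DISPLAY,  MM_DISP_1,    5 },
    { DEV_GRAPHICS, MM_GFX,       1 },
    { DEV_VIDEO,    MM_VID,       2 },
};

/* Returns 0 and fills start/range for a known device, -1 otherwise.
 * DEV_HAB (all mmids) is left to the caller, which uses the whole
 * hab_devices[] table instead of a fixed span. */
static int lookup_span(unsigned int device, unsigned int *start, int *range)
{
    for (size_t i = 0; i < sizeof(spans) / sizeof(spans[0]); i++) {
        if (spans[i].device == device) {
            *start = spans[i].start;
            *range = spans[i].range;
            return 0;
        }
    }
    return -1;
}
```

Keeping the ranges in one table means adding a device type touches a single line instead of another `else if` branch.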

    static struct virtio_device_id id_table[] = {
        { HAB_VIRTIO_DEVICE_ID_HAB, VIRTIO_DEV_ANY_ID },      /* virtio hab with all mmids */
        { HAB_VIRTIO_DEVICE_ID_BUFFERQ, VIRTIO_DEV_ANY_ID },  /* virtio bufferq only */
        { HAB_VIRTIO_DEVICE_ID_MISC, VIRTIO_DEV_ANY_ID },     /* virtio misc */
        { HAB_VIRTIO_DEVICE_ID_AUDIO, VIRTIO_DEV_ANY_ID },    /* virtio audio */
        { HAB_VIRTIO_DEVICE_ID_CAMERA, VIRTIO_DEV_ANY_ID },   /* virtio camera */
        { HAB_VIRTIO_DEVICE_ID_DISPLAY, VIRTIO_DEV_ANY_ID },  /* virtio display */
        { HAB_VIRTIO_DEVICE_ID_GRAPHICS, VIRTIO_DEV_ANY_ID }, /* virtio graphics */
        { HAB_VIRTIO_DEVICE_ID_VIDEO, VIRTIO_DEV_ANY_ID },    /* virtio video */
        { 0 },
    };

    static struct virtio_driver virtio_hab_driver = {
        .driver.name = KBUILD_MODNAME,
        .driver.owner = THIS_MODULE,
        .feature_table = features,
        .feature_table_size = ARRAY_SIZE(features),
        .id_table = id_table,
        .probe = virthab_probe,
        .remove = virthab_remove,
    #ifdef CONFIG_PM_SLEEP
        .freeze = virthab_freeze,
        .restore = virthab_restore,
    #endif
    };

lagvm/LINUX/android/kernel/msm-5.4/drivers/soc/qcom/hab/hab.c

    /*
     * The following has to match habmm definitions, order does not matter if
     * hab config does not care either. When hab config is not present, the default
     * is as guest VM all pchans are pchan opener (FE)
     */
    static struct hab_device hab_devices[] = {
        HAB_DEVICE_CNSTR(DEVICE_AUD1_NAME, MM_AUD_1, 0),
        HAB_DEVICE_CNSTR(DEVICE_AUD2_NAME, MM_AUD_2, 1),
        HAB_DEVICE_CNSTR(DEVICE_AUD3_NAME, MM_AUD_3, 2),
        HAB_DEVICE_CNSTR(DEVICE_AUD4_NAME, MM_AUD_4, 3),
        HAB_DEVICE_CNSTR(DEVICE_CAM1_NAME, MM_CAM_1, 4),
        HAB_DEVICE_CNSTR(DEVICE_CAM2_NAME, MM_CAM_2, 5),
        HAB_DEVICE_CNSTR(DEVICE_DISP1_NAME, MM_DISP_1, 6),
        HAB_DEVICE_CNSTR(DEVICE_DISP2_NAME, MM_DISP_2, 7),
        HAB_DEVICE_CNSTR(DEVICE_DISP3_NAME, MM_DISP_3, 8),
        HAB_DEVICE_CNSTR(DEVICE_DISP4_NAME, MM_DISP_4, 9),
        HAB_DEVICE_CNSTR(DEVICE_DISP5_NAME, MM_DISP_5, 10),
        HAB_DEVICE_CNSTR(DEVICE_GFX_NAME, MM_GFX, 11),
        HAB_DEVICE_CNSTR(DEVICE_VID_NAME, MM_VID, 12),
        HAB_DEVICE_CNSTR(DEVICE_VID2_NAME, MM_VID_2, 13),
        HAB_DEVICE_CNSTR(DEVICE_VID3_NAME, MM_VID_3, 14),
        HAB_DEVICE_CNSTR(DEVICE_MISC_NAME, MM_MISC, 15),
        HAB_DEVICE_CNSTR(DEVICE_QCPE1_NAME, MM_QCPE_VM1, 16),
        HAB_DEVICE_CNSTR(DEVICE_CLK1_NAME, MM_CLK_VM1, 17),
        HAB_DEVICE_CNSTR(DEVICE_CLK2_NAME, MM_CLK_VM2, 18),
        HAB_DEVICE_CNSTR(DEVICE_FDE1_NAME, MM_FDE_1, 19),
        HAB_DEVICE_CNSTR(DEVICE_BUFFERQ1_NAME, MM_BUFFERQ_1, 20),
        HAB_DEVICE_CNSTR(DEVICE_DATA1_NAME, MM_DATA_NETWORK_1, 21),
        HAB_DEVICE_CNSTR(DEVICE_DATA2_NAME, MM_DATA_NETWORK_2, 22),
        HAB_DEVICE_CNSTR(DEVICE_HSI2S1_NAME, MM_HSI2S_1, 23),
        HAB_DEVICE_CNSTR(DEVICE_XVM1_NAME, MM_XVM_1, 24),
        HAB_DEVICE_CNSTR(DEVICE_XVM2_NAME, MM_XVM_2, 25),
        HAB_DEVICE_CNSTR(DEVICE_XVM3_NAME, MM_XVM_3, 26),
        HAB_DEVICE_CNSTR(DEVICE_VNW1_NAME, MM_VNW_1, 27),
        HAB_DEVICE_CNSTR(DEVICE_EXT1_NAME, MM_EXT_1, 28),
    };
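This table is the lookup key for everything that follows: find_hab_device(), listed later, scans it linearly and compares each entry's id with HAB_MMID_GET_MAJOR(mm_id). A userspace sketch of that lookup follows. The 16-bit major mask, the device names, and the ids are assumptions for illustration, because HAB_MMID_GET_MAJOR and the DEVICE_*_NAME strings are not defined in this document.

```c
#include <assert.h>
#include <stddef.h>

/* Assumption: the major id is the low 16 bits of an mmid. */
#define MMID_GET_MAJOR(mmid) ((mmid) & 0xFFFFu)

struct dev_entry {
    const char *name;
    unsigned int id;
};

/* A few entries in the style of hab_devices[]; names and ids are illustrative. */
static const struct dev_entry devices[] = {
    { "hab_aud1", 101 }, { "hab_aud2", 102 },
    { "hab_cam1", 201 }, { "hab_disp1", 301 },
};

/* Linear search by major id, in the same way as find_hab_device(). */
static const struct dev_entry *find_device(unsigned int mmid)
{
    for (size_t i = 0; i < sizeof(devices) / sizeof(devices[0]); i++)
        if (devices[i].id == MMID_GET_MAJOR(mmid))
            return &devices[i];
    return NULL;
}
```

With only 29 entries in hab_devices[], a linear scan is simple and cheap; any bits above the major part of the mmid are ignored by the comparison.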

    struct hab_driver hab_driver = {
        .ndevices = ARRAY_SIZE(hab_devices),
        .devp = hab_devices,
        .uctx_list = LIST_HEAD_INIT(hab_driver.uctx_list),
        .drvlock = __SPIN_LOCK_UNLOCKED(hab_driver.drvlock),
        .imp_list = LIST_HEAD_INIT(hab_driver.imp_list),
        .imp_lock = __SPIN_LOCK_UNLOCKED(hab_driver.imp_lock),
    };

lagvm/LINUX/android/kernel/msm-5.4/drivers/soc/qcom/hab/hab_virtio.c

    /* probe is called when GVM detects virtio device from devtree */
    static int virthab_probe(struct virtio_device *vdev)
    {
        struct virtio_hab *vh = NULL;
        int err = 0, ret = 0;
        int mmid_range = hab_driver.ndevices;        /* 29 with hab_devices[] above */
        uint32_t mmid_start = hab_driver.devp[0].id; /* first entry: MM_AUD_1 */

        if (!virtio_has_feature(vdev, VIRTIO_F_VERSION_1)) {
            pr_info("virtio has feature missing\n");
            return -ENODEV;
        }
        pr_info("virtio has feature %llX virtio devid %X vid %d empty %d\n",
                vdev->features, vdev->id.device, vdev->id.vendor,
                list_empty(&vhab_list));

        /* find out which virtio device is calling us.
         * if this is hab's own virtio device, all the pchans are available
         */
        if (vdev->id.device == HAB_VIRTIO_DEVICE_ID_HAB) {
            /* all MMIDs are taken cannot co-exist with others */
            mmid_start = hab_driver.devp[0].id;
            mmid_range = hab_driver.ndevices;
            virthab_store_vdev(HAB_MMID_ALL_AREA, vdev);
        } else if (vdev->id.device == HAB_VIRTIO_DEVICE_ID_BUFFERQ) {
            mmid_start = MM_BUFFERQ_1;
            mmid_range = 1;
            virthab_store_vdev(MM_BUFFERQ_1, vdev);
        } else if (vdev->id.device == HAB_VIRTIO_DEVICE_ID_MISC) {
            mmid_start = MM_MISC;
            mmid_range = 1;
            virthab_store_vdev(MM_MISC, vdev);
        } else if (vdev->id.device == HAB_VIRTIO_DEVICE_ID_AUDIO) {
            mmid_start = MM_AUD_1;
            mmid_range = 4;
            virthab_store_vdev(MM_AUD_1, vdev);
        } else if (vdev->id.device == HAB_VIRTIO_DEVICE_ID_CAMERA) {
            mmid_start = MM_CAM_1;
            mmid_range = 2;
            virthab_store_vdev(MM_CAM_1, vdev);
        } else if (vdev->id.device == HAB_VIRTIO_DEVICE_ID_DISPLAY) {
            mmid_start = MM_DISP_1;
            mmid_range = 5;
            virthab_store_vdev(MM_DISP_1, vdev);
        } else if (vdev->id.device == HAB_VIRTIO_DEVICE_ID_GRAPHICS) {
            mmid_start = MM_GFX;
            mmid_range = 1;
            virthab_store_vdev(MM_GFX, vdev);
        } else if (vdev->id.device == HAB_VIRTIO_DEVICE_ID_VIDEO) {
            mmid_start = MM_VID;
            mmid_range = 2;
            virthab_store_vdev(MM_VID, vdev);
        } else {
            pr_err("unknown virtio device is detected %d\n",
                   vdev->id.device);
            mmid_start = 0;
            mmid_range = 0;
        }
        pr_info("virtio device id %d mmid %d range %d\n",
                vdev->id.device, mmid_start, mmid_range);

        if (!virthab_pchan_avail_check(vdev->id.device, mmid_start, mmid_range))
            return -EINVAL;

        ret = virthab_alloc(vdev, &vh, mmid_start, mmid_range);
        if (!ret)
            pr_info("alloc done %d mmid %d range %d\n",
                    ret, mmid_start, mmid_range);
        else {
            pr_err("probe failed mmid %d range %d\n",
                   mmid_start, mmid_range);
            return ret;
        }

        err = virthab_init_vqs(vh);
        if (err)
            goto err_init_vq;

        /* set the device status to DRIVER_OK so the vqs can be used in probe */
        virtio_device_ready(vdev);
        pr_info("virto device ready\n");

        vh->ready = true;
        pr_info("store virto device %pK empty %d\n", vh, list_empty(&vhab_list));

        /* post RX buffers on every vq and kick (notify) the QNX BE */
        ret = virthab_queue_inbufs(vh, 1);
        if (ret)
            return ret;

        return 0;

    err_init_vq:
        /* Caution: vh is still on vhab_list (added in virthab_alloc()) and its
         * vqs/cbs/names/vqpchans arrays are not freed on this path. */
        kfree(vh);
        pr_err("virtio input probe failed %d\n", err);
        return err;
    }

Allocating memory for the virtqueues (virthab_alloc)
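Both allocators listed next size every per-virtqueue array as mmid_range * HAB_PCHAN_VQ_MAX entries, and virthab_alloc_mmid_device() returns -ENOMEM without freeing the arrays it already allocated. The userspace sketch below shows the same allocation pattern with a single unwind path, using calloc/free in place of kzalloc/kfree. The struct, PCHAN_VQ_MAX = 2 (one TX and one RX vq per pchan), and the name size are assumptions, not the driver's definitions.

```c
#include <assert.h>
#include <stdlib.h>

#define PCHAN_VQ_MAX 2        /* assumed: one TX and one RX vq per pchan */
#define NAME_SIZE    (16 + 2) /* assumed MAX_VMID_NAME_SIZE + 2 */

typedef void (*vq_cb)(void *vq);

struct vh_arrays {
    void **vqs;
    vq_cb *cbs;
    char **names;
};

/* Free everything. Safe on a partially filled struct because calloc zeroes
 * the arrays and free(NULL) is a no-op. */
static void vh_arrays_free(struct vh_arrays *a, int nvqs)
{
    if (a->names)
        for (int i = 0; i < nvqs; i++)
            free(a->names[i]);
    free(a->names);
    free(a->cbs);
    free(a->vqs);
    a->vqs = NULL;
    a->cbs = NULL;
    a->names = NULL;
}

/* Allocate the per-vq arrays for mmid_range pchans; on any failure undo
 * all earlier allocations instead of leaking them. */
static int vh_arrays_alloc(struct vh_arrays *a, int mmid_range)
{
    int nvqs = mmid_range * PCHAN_VQ_MAX;

    a->vqs = calloc(nvqs, sizeof(*a->vqs));
    a->cbs = calloc(nvqs, sizeof(*a->cbs));
    a->names = calloc(nvqs, sizeof(*a->names));
    if (!a->vqs || !a->cbs || !a->names)
        goto fail;
    for (int i = 0; i < nvqs; i++) {
        a->names[i] = calloc(1, NAME_SIZE);
        if (!a->names[i])
            goto fail;
    }
    return 0;
fail:
    vh_arrays_free(a, nvqs);
    return -1;
}
```

The single `fail` label is the usual kernel idiom as well; in the driver it would free with kfree() and also release the struct itself.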

    int virthab_alloc(struct virtio_device *vdev, struct virtio_hab **pvh,
                      uint32_t mmid_start, int mmid_range)
    {
        struct virtio_hab *vh;
        int ret;
        unsigned long flags;

        vh = kzalloc(sizeof(*vh), GFP_KERNEL);
        if (!vh)
            return -ENOMEM;

        ret = virthab_alloc_mmid_device(vh, mmid_start, mmid_range);

        if (!ret)
            pr_info("alloc done mmid %d range %d\n",
                    mmid_start, mmid_range);
        else
            return ret; /* vh is not freed on this path */

        vh->vdev = vdev; /* store virtio device locally */

        *pvh = vh;
        spin_lock_irqsave(&vh_lock, flags);
        list_add_tail(&vh->node, &vhab_list);
        spin_unlock_irqrestore(&vh_lock, flags);

        spin_lock_init(&vh->mlock);
        pr_info("start vqs init vh list empty %d\n", list_empty(&vhab_list));

        return 0;
    }
    EXPORT_SYMBOL(virthab_alloc);

    static int virthab_alloc_mmid_device(struct virtio_hab *vh,
                                         uint32_t mmid_start, int mmid_range)
    {
        int i;

        /* array of virtqueue pointers: one TX and one RX vq per mmid */
        vh->vqs = kzalloc(sizeof(struct virtqueue *) * mmid_range *
                          HAB_PCHAN_VQ_MAX, GFP_KERNEL);
        if (!vh->vqs)
            return -ENOMEM;
        /* array of virtqueue callbacks, same layout as vh->vqs */
        vh->cbs = kzalloc(sizeof(vq_callback_t *) * mmid_range *
                          HAB_PCHAN_VQ_MAX, GFP_KERNEL);
        if (!vh->cbs)
            return -ENOMEM;

        vh->names = kzalloc(sizeof(char *) * mmid_range *
                            HAB_PCHAN_VQ_MAX, GFP_KERNEL);
        if (!vh->names)
            return -ENOMEM;
        /* one vq_pchan per HAB device channel (sized by ndevices) */
        vh->vqpchans = kcalloc(hab_driver.ndevices, sizeof(struct vq_pchan),
                               GFP_KERNEL);
        if (!vh->vqpchans)
            return -ENOMEM;

        /* loop through all pchans before vq registration for name creation */
        for (i = 0; i < mmid_range * HAB_PCHAN_VQ_MAX; i++) {
            vh->names[i] = kzalloc(MAX_VMID_NAME_SIZE + 2, GFP_KERNEL);
            if (!vh->names[i])
                return -ENOMEM;
        }

        vh->mmid_start = mmid_start;
        vh->mmid_range = mmid_range;

        return 0;
    }

Initializing the virtqueues (virthab_init_vqs)

    static int virthab_init_vqs(struct virtio_hab *vh)
    {
        int ret;
        vq_callback_t **cbs = vh->cbs;
        char **names = vh->names;

        /* fill in the callbacks, vq names and pchans of virtio_hab */
        ret = virthab_init_vqs_pre(vh);
        if (ret)
            return ret;

        pr_info("mmid %d request %d vqs\n", vh->mmid_start,
                vh->mmid_range * HAB_PCHAN_VQ_MAX);

        ret = virtio_find_vqs(vh->vdev, vh->mmid_range * HAB_PCHAN_VQ_MAX,
                              vh->vqs, cbs, (const char * const *)names, NULL);
        if (ret) {
            pr_err("failed to find vqs %d\n", ret);
            return ret;
        }

        pr_info("find vqs OK %d\n", ret);
        vh->vqs_offset = 0; /* this virtio device has all the vqs to itself */

        ret = virthab_init_vqs_post(vh);
        if (ret)
            return ret;

        return 0;
    }

Parsing struct hab_device to initialize virtio_hab (virthab_init_vqs_pre)
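virthab_init_vqs_pre(), listed next, fills flat arrays in which the i-th pchan owns slots i * HAB_PCHAN_VQ_MAX + HAB_PCHAN_TX_VQ and i * HAB_PCHAN_VQ_MAX + HAB_PCHAN_RX_VQ, and it derives each vq name by copying the device name and overwriting its first three characters with "txq" or "rxq". A userspace sketch of both rules follows; TX = 0, RX = 1, VQ_MAX = 2, the buffer size, and the sample name "hab_aud1" are assumptions.

```c
#include <assert.h>
#include <string.h>

#define PCHAN_TX_VQ  0   /* assumed values of HAB_PCHAN_TX_VQ / RX_VQ / VQ_MAX */
#define PCHAN_RX_VQ  1
#define PCHAN_VQ_MAX 2
#define NAME_SIZE    18  /* assumed per-name buffer size (must be >= 4) */

/* Index of the TX or RX virtqueue of the i-th pchan in the flat arrays. */
static int vq_slot(int i, int dir)
{
    return i * PCHAN_VQ_MAX + dir;
}

/* Copy the device name, then overwrite its first three characters with the
 * 3-character prefix. strncpy zero-pads short names, so the result stays
 * NUL-terminated even if devname is shorter than three characters. */
static void make_vq_name(char *dst, size_t size, const char *devname,
                         const char *prefix)
{
    strncpy(dst, devname, size - 1);
    dst[size - 1] = '\0';
    memcpy(dst, prefix, 3);
}
```

If the device names do share a 3-character prefix such as "hab", each pchan ends up with a readable "txq_..."/"rxq_..." pair, which is what the pr_info lines in the driver print.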


    int virthab_init_vqs_pre(struct virtio_hab *vh)
    {
        struct vq_pchan *vpchans = vh->vqpchans;
        vq_callback_t **cbs = vh->cbs;
        char **names = vh->names;
        char *temp;
        int i, idx = 0;
        struct hab_device *habdev = NULL;

        pr_debug("2 callbacks %pK %pK\n", (void *)virthab_recv_txq,
                 (void *)virthab_recv_rxq);

        habdev = find_hab_device(vh->mmid_start);
        if (!habdev) {
            pr_err("failed to locate mmid %d range %d\n",
                   vh->mmid_start, vh->mmid_range);
            return -ENODEV;
        }

        /* do sanity check */
        for (i = 0; i < hab_driver.ndevices; i++)
            if (habdev == &hab_driver.devp[i])
                break;
        if (i + vh->mmid_range > hab_driver.ndevices) {
            pr_err("invalid mmid %d range %d total %d\n",
                   vh->mmid_start, vh->mmid_range,
                   hab_driver.ndevices);
            return -EINVAL;
        }

        idx = i;

        for (i = 0; i < vh->mmid_range; i++) {
            habdev = &hab_driver.devp[idx + i];
            /* set the vq callback functions */
            /* ToDo: each cb should only apply to one vq */
            cbs[i * HAB_PCHAN_VQ_MAX + HAB_PCHAN_TX_VQ] = virthab_recv_txq;
            cbs[i * HAB_PCHAN_VQ_MAX + HAB_PCHAN_RX_VQ] = virthab_recv_rxq_task;
            /* name the vqs: copy the device name, then overwrite its first
             * three characters with "txq"/"rxq". The copy is bounded by
             * sizeof(habdev->name), so each names[] buffer
             * (MAX_VMID_NAME_SIZE + 2 bytes) must be at least that large. */
            strlcpy(names[i * HAB_PCHAN_VQ_MAX + HAB_PCHAN_TX_VQ],
                    habdev->name, sizeof(habdev->name));
            temp = names[i * HAB_PCHAN_VQ_MAX + HAB_PCHAN_TX_VQ];
            temp[0] = 't'; temp[1] = 'x'; temp[2] = 'q';

            strlcpy(names[i * HAB_PCHAN_VQ_MAX + HAB_PCHAN_RX_VQ],
                    habdev->name, sizeof(habdev->name));
            temp = names[i * HAB_PCHAN_VQ_MAX + HAB_PCHAN_RX_VQ];
            temp[0] = 'r'; temp[1] = 'x'; temp[2] = 'q';
            /* record the mmid served by this vq_pchan */
            vpchans[i].mmid = habdev->id;
            if (list_empty(&habdev->pchannels))
                pr_err("pchan is not initialized %s slot %d mmid %d\n",
                       habdev->name, i, habdev->id);
            else
                /* GVM only has one instance of the pchan for each mmid
                 * (no multi VMs)
                 */
                vpchans[i].pchan = list_first_entry(&habdev->pchannels,
                                                    struct physical_channel,
                                                    node);

            pr_info("txq %d %s cb %pK\n", i,
                    names[i * HAB_PCHAN_VQ_MAX + HAB_PCHAN_TX_VQ],
                    (void *)cbs[i * HAB_PCHAN_VQ_MAX + HAB_PCHAN_TX_VQ]);
            pr_info("rxq %d %s cb %pK\n", i,
                    names[i * HAB_PCHAN_VQ_MAX + HAB_PCHAN_RX_VQ],
                    (void *)cbs[i * HAB_PCHAN_VQ_MAX + HAB_PCHAN_RX_VQ]);
        }

        return 0;
    }
    EXPORT_SYMBOL(virthab_init_vqs_pre);

Matching the mmid to get its hab_driver.devp entry (find_hab_device)

    struct hab_device *find_hab_device(unsigned int mm_id)
    {
        int i;

        for (i = 0; i < hab_driver.ndevices; i++) {
            if (hab_driver.devp[i].id == HAB_MMID_GET_MAJOR(mm_id))
                return &hab_driver.devp[i];
        }

        pr_err("%s: id=%d\n", __func__, mm_id);
        return NULL;
    }

    static inline
    int virtio_find_vqs(struct virtio_device *vdev, unsigned nvqs,
                        struct virtqueue *vqs[], vq_callback_t *callbacks[],
                        const char * const names[],
                        struct irq_affinity *desc)
    {
        /* For this device, config->find_vqs is rproc_virtio_find_vqs(),
         * registered in remoteproc_virtio.c. It also installs
         * rproc_virtio_notify() as the vq notify hook, which is how the QNX
         * BE gets notified once virthab_queue_inbufs() has queued buffers. */
        return vdev->config->find_vqs(vdev, nvqs, vqs, callbacks, names, NULL, desc);
    }

Setting vdev to the VIRTIO_CONFIG_S_DRIVER_OK state (virtio_device_ready)
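The device status is a small bit mask defined by the virtio specification (ACKNOWLEDGE = 1, DRIVER = 2, DRIVER_OK = 4, FEATURES_OK = 8, FAILED = 128). By the time probe runs, the virtio core has normally set ACKNOWLEDGE, DRIVER and, for VERSION_1 devices, FEATURES_OK already, so virtio_device_ready() only adds DRIVER_OK. A userspace stand-in follows; struct fake_dev is hypothetical, and it returns -1 where the kernel uses BUG_ON.

```c
#include <assert.h>
#include <stdint.h>

/* Device status bits from the virtio specification. */
#define S_ACKNOWLEDGE 1u
#define S_DRIVER      2u
#define S_DRIVER_OK   4u
#define S_FEATURES_OK 8u
#define S_FAILED      128u

struct fake_dev { uint8_t status; };

/* Read the status, refuse to set DRIVER_OK twice, then write it back
 * with DRIVER_OK added. */
static int device_ready(struct fake_dev *d)
{
    uint8_t status = d->status;          /* config->get_status() */
    if (status & S_DRIVER_OK)
        return -1;                       /* kernel: BUG_ON() */
    d->status = status | S_DRIVER_OK;    /* config->set_status() */
    return 0;
}
```

Because set_status() writes the whole mask, reading it first and OR-ing in the new bit preserves the bits the core has already negotiated.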

    /**
     * virtio_device_ready - enable vq use in probe function
     * @vdev: the device
     *
     * Driver must call this to use vqs in the probe function.
     *
     * Note: vqs are enabled automatically after probe returns.
     */
    static inline
    void virtio_device_ready(struct virtio_device *dev)
    {
        unsigned status = dev->config->get_status(dev);

        BUG_ON(status & VIRTIO_CONFIG_S_DRIVER_OK);
        /* for this device get_status/set_status are rproc_virtio_get_status()
         * and rproc_virtio_set_status() */
        dev->config->set_status(dev, status | VIRTIO_CONFIG_S_DRIVER_OK);
    }

Queuing receive buffers and notifying QNX (virthab_queue_inbufs)
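virthab_queue_inbufs(), listed next, carves each kmalloc'd pool into fixed-size buffers with init_pool_list() (not shown), then hands every RX buffer to the device with virtqueue_add_inbuf() and issues one virtqueue_kick(). A userspace sketch of that carve-then-post pattern follows. The header layout, the function names, and the plain array standing in for the virtqueue are hypothetical.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical buffer header, similar in spirit to vh_buf_header. */
struct buf_hdr {
    struct buf_hdr *next;
    unsigned char *buf;
};

/* Carve 'num' slots of 'size' payload bytes out of one pool and push each
 * header onto a singly linked free list. Payloads are rounded up to 16
 * bytes so every header stays pointer-aligned. Returns the slot count. */
static int init_pool(unsigned char *pool, size_t size, int num,
                     struct buf_hdr **list)
{
    size_t slot = sizeof(struct buf_hdr) + ((size + 15) & ~(size_t)15);

    for (int i = 0; i < num; i++) {
        struct buf_hdr *hd = (struct buf_hdr *)(pool + (size_t)i * slot);
        hd->buf = (unsigned char *)(hd + 1);
        hd->next = *list;
        *list = hd;
    }
    return num;
}

/* Move free buffers onto a fixed-size ring until it is full; buffers that
 * do not fit stay on the free list. */
static int post_all(struct buf_hdr **list, struct buf_hdr **ring, int ring_size)
{
    int posted = 0;

    while (*list && posted < ring_size) {
        struct buf_hdr *hd = *list;
        *list = hd->next;
        ring[posted++] = hd;
    }
    return posted;
}
```

One design difference is worth noting: the driver loop logs and keeps going when virtqueue_add_inbuf() fails (the buffer has already been unlinked), whereas this sketch stops at a full ring and leaves the remaining buffers on the free list.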

    /* queue all the inbufs on all pchans/vqs */
    int virthab_queue_inbufs(struct virtio_hab *vh, int alloc)
    {
        int ret, size;
        int i;
        struct scatterlist sg[1];
        struct vh_buf_header *hd, *hd_tmp;

        for (i = 0; i < vh->mmid_range; i++) {
            /* the TX/RX vq pair of this pchan */
            struct vq_pchan *vpc = &vh->vqpchans[i];

            if (alloc) {
                vpc->in_cnt = 0;
                vpc->s_cnt = 0;
                vpc->m_cnt = 0;
                vpc->l_cnt = 0;

                vpc->in_pool = kmalloc(IN_POOL_SIZE, GFP_KERNEL);
                vpc->s_pool = kmalloc(OUT_SMALL_POOL_SIZE, GFP_KERNEL);
                vpc->m_pool = kmalloc(OUT_MEDIUM_POOL_SIZE, GFP_KERNEL);
                vpc->l_pool = kmalloc(OUT_LARGE_POOL_SIZE, GFP_KERNEL);
                if (!vpc->in_pool || !vpc->s_pool || !vpc->m_pool ||
                    !vpc->l_pool) {
                    pr_err("failed to alloc buf %d %pK %d %pK %d %pK %d %pK\n",
                           IN_POOL_SIZE, vpc->in_pool,
                           OUT_SMALL_POOL_SIZE, vpc->s_pool,
                           OUT_MEDIUM_POOL_SIZE, vpc->m_pool,
                           OUT_LARGE_POOL_SIZE, vpc->l_pool);
                    return -ENOMEM;
                }

                init_waitqueue_head(&vpc->out_wq);
                init_pool_list(vpc->in_pool, IN_BUF_SIZE,
                               IN_BUF_NUM, PT_IN,
                               &vpc->in_list, NULL,
                               &vpc->in_cnt);
                init_pool_list(vpc->s_pool, OUT_SMALL_BUF_SIZE,
                               OUT_SMALL_BUF_NUM, PT_OUT_SMALL,
                               &vpc->s_list, NULL,
                               &vpc->s_cnt);
                init_pool_list(vpc->m_pool, OUT_MEDIUM_BUF_SIZE,
                               OUT_MEDIUM_BUF_NUM, PT_OUT_MEDIUM,
                               &vpc->m_list, NULL,
                               &vpc->m_cnt);
                init_pool_list(vpc->l_pool, OUT_LARGE_BUF_SIZE,
                               OUT_LARGE_BUF_NUM, PT_OUT_LARGE,
                               &vpc->l_list, NULL,
                               &vpc->l_cnt);

                /* the lists are struct list_head, so pass their addresses to %pK */
                pr_debug("VQ buf allocated %s %d %d %d %d %d %d %d %d %pK %pK %pK %pK\n",
                         vpc->vq[HAB_PCHAN_RX_VQ]->name,
                         IN_POOL_SIZE, OUT_SMALL_POOL_SIZE,
                         OUT_MEDIUM_POOL_SIZE, OUT_LARGE_POOL_SIZE,
                         vpc->in_cnt, vpc->s_cnt, vpc->m_cnt,
                         vpc->l_cnt, &vpc->in_list, &vpc->s_list,
                         &vpc->m_list, &vpc->l_list);
            }

            spin_lock(&vpc->lock[HAB_PCHAN_RX_VQ]);
            size = virtqueue_get_vring_size(vpc->vq[HAB_PCHAN_RX_VQ]);
            pr_info("vq %s vring index %d num %d pchan %s\n",
                    vpc->vq[HAB_PCHAN_RX_VQ]->name,
                    vpc->vq[HAB_PCHAN_RX_VQ]->index, size,
                    vpc->pchan->name);

            list_for_each_entry_safe(hd, hd_tmp, &vpc->in_list, node) {
                list_del(&hd->node);
                sg_init_one(sg, hd->buf, IN_BUF_SIZE);
                /* add the buffer described by sg to the RX virtqueue as a
                 * device-writable (in) buffer; hd is the token that
                 * virtqueue_get_buf() returns later */
                ret = virtqueue_add_inbuf(vpc->vq[HAB_PCHAN_RX_VQ], sg,
                                          1, hd, GFP_ATOMIC);
                if (ret) {
                    pr_err("failed to queue %s inbuf %d to PVM %d\n",
                           vpc->vq[HAB_PCHAN_RX_VQ]->name,
                           vpc->in_cnt, ret);
                }
                vpc->in_cnt--;
            }
            /* notify the backend that RX buffers are available;
             * virtqueue_kick() returns false on failure */
            ret = virtqueue_kick(vpc->vq[HAB_PCHAN_RX_VQ]);
            if (!ret)
                pr_err("failed to kick %d %s ret %d cnt %d\n", i,
                       vpc->vq[HAB_PCHAN_RX_VQ]->name, ret,
                       vpc->in_cnt);
            spin_unlock(&vpc->lock[HAB_PCHAN_RX_VQ]);
        }
        return 0;
    }
    EXPORT_SYMBOL(virthab_queue_inbufs);

lagvm/LINUX/android/kernel/msm-5.4/drivers/remoteproc/remoteproc_virtio.c

    static const struct virtio_config_ops rproc_virtio_config_ops = {
        .get_features = rproc_virtio_get_features,
        .finalize_features = rproc_virtio_finalize_features,
        .find_vqs = rproc_virtio_find_vqs,
        .del_vqs = rproc_virtio_del_vqs,
        .reset = rproc_virtio_reset,
        .set_status = rproc_virtio_set_status,
        .get_status = rproc_virtio_get_status,
        .get = rproc_virtio_get,
        .set = rproc_virtio_set,
    };

lagvm/LINUX/android/kernel/msm-5.4/drivers/remoteproc/remoteproc_virtio.c
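rp_find_vq(), listed next, zeroes vring_size(len, rvring->align) bytes of the carveout before creating the virtqueue. That size follows the split-ring layout: a descriptor table and avail ring, padded to the alignment, followed by the used ring. The sketch below reproduces the arithmetic of vring_size() from <linux/virtio_ring.h> in userspace, with the structure sizes written out as constants; the function name vring_bytes is illustrative.

```c
#include <assert.h>
#include <stddef.h>

/* Split-ring structure sizes: struct vring_desc is 16 bytes, ring
 * flags/indices are 16-bit, struct vring_used_elem is 8 bytes. */
#define DESC_SIZE      16u
#define U16_SIZE       2u
#define USED_ELEM_SIZE 8u

/* Descriptor table + avail ring (flags, idx, num entries, used_event),
 * rounded up to 'align' (a power of two), then the used ring
 * (flags, idx, avail_event, num elements). */
static size_t vring_bytes(unsigned int num, unsigned long align)
{
    size_t first = DESC_SIZE * num + U16_SIZE * (3 + num);

    first = (first + align - 1) & ~(align - 1);
    return first + U16_SIZE * 3 + USED_ELEM_SIZE * num;
}
```

For a 256-entry ring with 4096-byte alignment, the first part is 4614 bytes, padded to 8192, and the used ring adds 2054, for 10246 bytes in total.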

    static struct virtqueue *rp_find_vq(struct virtio_device *vdev,
                                        unsigned int id,
                                        void (*callback)(struct virtqueue *vq),
                                        const char *name, bool ctx)
    {
        struct rproc_vdev *rvdev = vdev_to_rvdev(vdev);
        struct rproc *rproc = vdev_to_rproc(vdev);
        struct device *dev = &rproc->dev;
        struct rproc_mem_entry *mem;
        struct rproc_vring *rvring;
        struct fw_rsc_vdev *rsc;
        struct virtqueue *vq;
        void *addr;
        int len, size;

        /* we're temporarily limited to two virtqueues per rvdev */
        if (id >= ARRAY_SIZE(rvdev->vring))
            return ERR_PTR(-EINVAL);

        if (!name)
            return NULL;

        /* Search allocated memory region by name */
        mem = rproc_find_carveout_by_name(rproc, "vdev%dvring%d", rvdev->index,
                                          id);
        if (!mem || !mem->va)
            return ERR_PTR(-ENOMEM);

        rvring = &rvdev->vring[id];
        addr = mem->va;
        len = rvring->len;

        /* zero vring */
        size = vring_size(len, rvring->align);
        memset(addr, 0, size);

        dev_dbg(dev, "vring%d: va %pK qsz %d notifyid %d\n",
                id, addr, len, rvring->notifyid);

        /*
         * Create the new vq, and tell virtio we're not interested in
         * the 'weak' smp barriers, since we're talking with a real device.
         */
        vq = vring_new_virtqueue(id, len, rvring->align, vdev, false, ctx,
                                 addr, rproc_virtio_notify, callback, name);
        if (!vq) {
            dev_err(dev, "vring_new_virtqueue %s failed\n", name);
            rproc_free_vring(rvring);
            return ERR_PTR(-ENOMEM);
        }

        rvring->vq = vq;
        vq->priv = rvring;

        /* Update vring in resource table */
        rsc = (void *)rproc->table_ptr + rvdev->rsc_offset;
        rsc->vring[id].da = mem->da;

        return vq;
    }

KHAB interfaces exported to other kernel modules for creating HAB channels (habmm_socket_xxx = hab_vchan_xxxx)

hab open
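hab_vchan_open(), listed next, is essentially a role switch: backend_listen() when this VM is the backend of the pchan, frontend_open() when it is the front end, and in loopback mode the context (ctx->lb_be) decides instead of the pchan. A userspace sketch of that decision follows; the enum, struct and function names are illustrative. Since every mmid group in the dtsi shown later is configured with role = "fe", the CONNECT (frontend_open) branch should be the one taken on this GVM.

```c
#include <assert.h>

/* Which side of the channel hab_vchan_open() ends up acting as. */
enum open_action { OPEN_LISTEN, OPEN_CONNECT, OPEN_NODEV };

struct pchan_info {
    int exists;  /* a pchan was found for the mmid */
    int is_be;   /* this VM is the backend for that pchan */
};

/* Loopback: the context decides. Otherwise: no pchan means -ENODEV,
 * a BE pchan listens and an FE pchan opens (connects). */
static enum open_action choose_open(int loopback, int ctx_lb_be,
                                    const struct pchan_info *p)
{
    if (loopback)
        return ctx_lb_be ? OPEN_LISTEN : OPEN_CONNECT;
    if (!p || !p->exists)
        return OPEN_NODEV;
    return p->is_be ? OPEN_LISTEN : OPEN_CONNECT;
}
```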

    int hab_vchan_open(struct uhab_context *ctx,
                       unsigned int mmid,
                       int32_t *vcid,
                       int32_t timeout,
                       uint32_t flags)
    {
        struct virtual_channel *vchan = NULL;
        struct hab_device *dev;

        pr_debug("Open mmid=%d, loopback mode=%d, loopback be ctx %d\n",
                 mmid, hab_driver.b_loopback, ctx->lb_be);

        if (!vcid)
            return -EINVAL;

        if (hab_is_loopback()) {
            if (ctx->lb_be)
                vchan = backend_listen(ctx, mmid, timeout);
            else
                vchan = frontend_open(ctx, mmid, LOOPBACK_DOM);
        } else {
            dev = find_hab_device(mmid);

            if (dev) {
                struct physical_channel *pchan =
                    hab_pchan_find_domid(dev,
                                         HABCFG_VMID_DONT_CARE);
                if (pchan) {
                    if (pchan->is_be)
                        vchan = backend_listen(ctx, mmid,
                                               timeout);
                    else
                        vchan = frontend_open(ctx, mmid,
                                              HABCFG_VMID_DONT_CARE);
                } else {
                    pr_err("open on nonexistent pchan (mmid %x)\n",
                           mmid);
                    return -ENODEV;
                }
            } else {
                pr_err("failed to find device, mmid %d\n", mmid);
                return -ENODEV;
            }
        }

        if (IS_ERR(vchan)) {
            if (-ETIMEDOUT != PTR_ERR(vchan) && -EAGAIN != PTR_ERR(vchan))
                pr_err("vchan open failed mmid=%d\n", mmid);
            return PTR_ERR(vchan);
        }

        pr_debug("vchan id %x remote id %x session %d\n", vchan->id,
                 vchan->otherend_id, vchan->session_id);

        write_lock(&ctx->ctx_lock);
        list_add_tail(&vchan->node, &ctx->vchannels);
        ctx->vcnt++;
        write_unlock(&ctx->ctx_lock);

        *vcid = vchan->id;

        return 0;
    }

Hab send
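hab_vchan_send(), listed next, builds a header and then loops on physical_channel_send(): it keeps retrying only while the channel reports -EAGAIN, the peer is still open, and the caller did not ask for a non-blocking send. A userspace sketch of that retry policy follows, with a hypothetical fake channel that is busy for a fixed number of attempts. The kernel calls schedule() between attempts; the sketch simply loops.

```c
#include <assert.h>
#include <errno.h>

/* Hypothetical channel: returns -EAGAIN 'busy_left' times, then succeeds. */
struct fake_pchan {
    int busy_left;
    int sent;
};

static int fake_send(struct fake_pchan *p)
{
    if (p->busy_left > 0) {
        p->busy_left--;
        return -EAGAIN;
    }
    p->sent++;
    return 0;
}

/* Same exit conditions as the while (1) loop in hab_vchan_send().
 * '*otherend_closed' is re-read on every pass; '*attempts' counts calls. */
static int send_with_retry(struct fake_pchan *p, int nonblocking,
                           const int *otherend_closed, int *attempts)
{
    int ret;

    *attempts = 0;
    for (;;) {
        ret = fake_send(p);
        (*attempts)++;
        if (*otherend_closed || nonblocking || ret != -EAGAIN)
            break;
    }
    return ret;
}
```

A non-blocking caller therefore sees -EAGAIN immediately and must retry itself, while a blocking caller only returns once the ring has room, the send fails for another reason, or the peer closes.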

    long hab_vchan_send(struct uhab_context *ctx,
                        int vcid,
                        size_t sizebytes,
                        void *data,
                        unsigned int flags)
    {
        struct virtual_channel *vchan;
        int ret;
        struct hab_header header = HAB_HEADER_INITIALIZER;
        int nonblocking_flag = flags & HABMM_SOCKET_SEND_FLAGS_NON_BLOCKING;

        if (sizebytes > (size_t)HAB_HEADER_SIZE_MAX) {
            pr_err("Message too large, %zu bytes, max is %d\n",
                   sizebytes, HAB_HEADER_SIZE_MAX);
            return -EINVAL;
        }

        vchan = hab_get_vchan_fromvcid(vcid, ctx, 0);
        if (!vchan || vchan->otherend_closed) {
            ret = -ENODEV;
            goto err;
        }

        /* log msg send timestamp: enter hab_vchan_send */
        trace_hab_vchan_send_start(vchan);

        HAB_HEADER_SET_SIZE(header, sizebytes);
        if (flags & HABMM_SOCKET_SEND_FLAGS_XING_VM_STAT) {
            HAB_HEADER_SET_TYPE(header, HAB_PAYLOAD_TYPE_PROFILE);
            if (sizebytes < sizeof(struct habmm_xing_vm_stat)) {
                pr_err("wrong profiling buffer size %zu, expect %zu\n",
                       sizebytes,
                       sizeof(struct habmm_xing_vm_stat));
                ret = -EINVAL;
                goto err; /* release the vchan reference taken above */
            }
        } else if (flags & HABMM_SOCKET_XVM_SCHE_TEST) {
            HAB_HEADER_SET_TYPE(header, HAB_PAYLOAD_TYPE_SCHE_MSG);
        } else if (flags & HABMM_SOCKET_XVM_SCHE_TEST_ACK) {
            HAB_HEADER_SET_TYPE(header, HAB_PAYLOAD_TYPE_SCHE_MSG_ACK);
        } else if (flags & HABMM_SOCKET_XVM_SCHE_RESULT_REQ) {
            if (sizebytes < sizeof(unsigned long long)) {
                pr_err("Message buffer too small, %zu bytes, expect %zu\n",
                       sizebytes,
                       sizeof(unsigned long long));
                ret = -EINVAL;
                goto err;
            }
            HAB_HEADER_SET_TYPE(header, HAB_PAYLOAD_TYPE_SCHE_RESULT_REQ);
        } else if (flags & HABMM_SOCKET_XVM_SCHE_RESULT_RSP) {
            if (sizebytes < 3 * sizeof(unsigned long long)) {
                pr_err("Message buffer too small, %zu bytes, expect %zu\n",
                       sizebytes,
                       3 * sizeof(unsigned long long));
                ret = -EINVAL;
                goto err;
            }
            HAB_HEADER_SET_TYPE(header, HAB_PAYLOAD_TYPE_SCHE_RESULT_RSP);
        } else {
            HAB_HEADER_SET_TYPE(header, HAB_PAYLOAD_TYPE_MSG);
        }
        HAB_HEADER_SET_ID(header, vchan->otherend_id);
        HAB_HEADER_SET_SESSION_ID(header, vchan->session_id);

        while (1) {
            /* the FE sends the data over the physical channel */
            ret = physical_channel_send(vchan->pchan, &header, data);

            if (vchan->otherend_closed || nonblocking_flag ||
                ret != -EAGAIN)
                break;

            schedule();
        }

        /*
         * The ret here as 0 indicates the message was already sent out
         * from the hab_vchan_send()'s perspective.
         */
        if (!ret)
            vchan->tx_cnt++;
    err:

        /* log msg send timestamp: exit hab_vchan_send */
        trace_hab_vchan_send_done(vchan);

        if (vchan)
            hab_vchan_put(vchan);

        return ret;
    }

Hab recv

    int hab_vchan_recv(struct uhab_context *ctx,
                       struct hab_message **message,
                       int vcid,
                       int *rsize,
                       unsigned int timeout,
                       unsigned int flags)
    {
        struct virtual_channel *vchan;
        int ret = 0;
        /* flags is 0 in the calls discussed here, so this is a blocking recv */
        int nonblocking_flag = flags & HABMM_SOCKET_RECV_FLAGS_NON_BLOCKING;

        vchan = hab_get_vchan_fromvcid(vcid, ctx, 1);
        if (!vchan) {
            pr_err("vcid %X vchan 0x%pK ctx %pK\n", vcid, vchan, ctx);
            *message = NULL;
            return -ENODEV;
        }

        vchan->rx_inflight = 1;

        if (nonblocking_flag) {
            /*
             * Try to pull data from the ring in this context instead of
             * IRQ handler. Any available messages will be copied and queued
             * internally, then fetched by hab_msg_dequeue()
             */
            /* messages are enqueued via hab_msg_enqueue */
            physical_channel_rx_dispatch((unsigned long) vchan->pchan);
        }

        ret = hab_msg_dequeue(vchan, message, rsize, timeout, flags);
        if (!ret && *message) {
            /* log msg recv timestamp: exit hab_vchan_recv */
            trace_hab_vchan_recv_done(vchan, *message);

            /*
             * Here, it is for sure that a message was received from the
             * hab_vchan_recv()'s view w/ the ret as 0 and *message as
             * non-zero.
             */
            vchan->rx_cnt++;
        }

        vchan->rx_inflight = 0;

        hab_vchan_put(vchan);
        return ret;
    }

Because flags is 0 in all of these calls, nonblocking_flag is 0 and physical_channel_rx_dispatch() is never called here to perform the enqueue. For reference, that call and the function behind it:

    physical_channel_rx_dispatch((unsigned long) vchan->pchan);

    /* called by hab recv() to act like ISR to poll msg from remote VM */
    /* ToDo: need to change the callback to here */
    void physical_channel_rx_dispatch(unsigned long data)
    {
        struct physical_channel *pchan = (struct physical_channel *)data;
        struct virtio_pchan_link *link =
            (struct virtio_pchan_link *)pchan->hyp_data;
        struct vq_pchan *vpc = link->vpc;

        if (link->vpc == NULL) {
            pr_info("%s: %s link->vpc not ready\n", __func__, pchan->name);
            return;
        }

        virthab_recv_rxq_task(vpc->vq[HAB_PCHAN_RX_VQ]);
    }

    static void virthab_recv_rxq_task(struct virtqueue *vq)
    {
        struct virtio_hab *vh = get_vh(vq->vdev);
        struct vq_pchan *vpc = get_virtio_pchan(vh, vq);

        tasklet_schedule(&vpc->task);
    }
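virthab_recv_rxq_task() is also the RX virtqueue callback registered in virthab_init_vqs_pre(), so in the normal path it runs from the vq interrupt and only calls tasklet_schedule(); the actual reading happens later in the tasklet. The toy userspace model below shows why that is cheap: scheduling a tasklet that is already pending does not queue it twice, so a burst of callbacks collapses into one drain. All fake_* names and drain_rx are hypothetical; real tasklets are run by the kernel's softirq machinery.

```c
#include <assert.h>

/* Minimal tasklet model: schedule sets a pending flag; the deferred
 * context runs the function once per pending period. */
struct fake_tasklet {
    int pending;
    int runs;
    void (*func)(void *data);
    void *data;
};

/* Like tasklet_schedule(): a second call while pending is a no-op. */
static void fake_tasklet_schedule(struct fake_tasklet *t)
{
    t->pending = 1;
}

/* Stand-in for the softirq pass that runs pending tasklets. */
static void fake_softirq(struct fake_tasklet *t)
{
    if (!t->pending)
        return;
    t->pending = 0;
    t->runs++;
    t->func(t->data);
}

/* Stand-in for the RX tasklet body: consume every used buffer. */
static void drain_rx(void *data)
{
    int *used = data;
    *used = 0;
}
```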

Tasklet call stack for fetching data from the BE (QNX):

        -> hab_msg_queue
            -> physical_channel_read

Next, let's look at when the enqueue is called.

    static void hab_msg_queue(struct virtual_channel *vchan,
                              struct hab_message *message)
    {
        int irqs_disabled = irqs_disabled();

        hab_spin_lock(&vchan->rx_lock, irqs_disabled);
        list_add_tail(&message->node, &vchan->rx_list);
        hab_spin_unlock(&vchan->rx_lock, irqs_disabled);

        wake_up(&vchan->rx_queue);
    }
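hab_msg_queue() is the producer half of a FIFO: it appends each message to vchan->rx_list under rx_lock and wakes readers sleeping on rx_queue, presumably in hab_msg_dequeue(), which is not shown here. The userspace sketch below models only the ordering behavior. The struct and function names are hypothetical, and locking and wake-ups are deliberately left out.

```c
#include <assert.h>
#include <stddef.h>

/* Singly linked FIFO standing in for vchan->rx_list (no locking). */
struct msg {
    struct msg *next;
    int id;
};

struct rx_fifo {
    struct msg *head;
    struct msg **tail;  /* points at the last 'next' field (or at head) */
};

static void fifo_init(struct rx_fifo *q)
{
    q->head = NULL;
    q->tail = &q->head;
}

/* Append at the tail, like list_add_tail() in hab_msg_queue(). */
static void fifo_push(struct rx_fifo *q, struct msg *m)
{
    m->next = NULL;
    *q->tail = m;
    q->tail = &m->next;
}

/* Take from the head; NULL when empty (a blocking reader would sleep). */
static struct msg *fifo_pop(struct rx_fifo *q)
{
    struct msg *m = q->head;

    if (m) {
        q->head = m->next;
        if (!q->head)
            q->tail = &q->head;
    }
    return m;
}
```

Appending at the tail and removing from the head keeps messages in arrival order, which is the property a HAB client relies on when it calls recv repeatedly.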

hab_init

    static int __init hab_init(void)
    {
        int result;
        dev_t dev;

        result = alloc_chrdev_region(&hab_driver.major, 0, 1, "hab");

        if (result < 0) {
            pr_err("alloc_chrdev_region failed: %d\n", result);
            return result;
        }

        cdev_init(&hab_driver.cdev, &hab_fops);
        hab_driver.cdev.owner = THIS_MODULE;
        hab_driver.cdev.ops = &hab_fops;
        dev = MKDEV(MAJOR(hab_driver.major), 0);

        result = cdev_add(&hab_driver.cdev, dev, 1);

        if (result < 0) {
            unregister_chrdev_region(dev, 1);
            pr_err("cdev_add failed: %d\n", result);
            return result;
        }

        hab_driver.class = class_create(THIS_MODULE, "hab");

        if (IS_ERR(hab_driver.class)) {
            result = PTR_ERR(hab_driver.class);
            pr_err("class_create failed: %d\n", result);
            goto err;
        }

        hab_driver.dev = device_create(hab_driver.class, NULL,
                                       dev, &hab_driver, "hab");

        if (IS_ERR(hab_driver.dev)) {
            result = PTR_ERR(hab_driver.dev);
            pr_err("device_create failed: %d\n", result);
            goto err;
        }

        result = register_reboot_notifier(&hab_reboot_notifier);
        if (result)
            pr_err("failed to register reboot notifier %d\n", result);

        /* read in hab config, then configure pchans */
        result = do_hab_parse();

        if (!result) {
            hab_driver.kctx = hab_ctx_alloc(1);
            if (!hab_driver.kctx) {
                pr_err("hab_ctx_alloc failed\n");
                result = -ENOMEM;
                hab_hypervisor_unregister();
                goto err;
            } else {
                /* First, try to configure system dma_ops */
                result = dma_coerce_mask_and_coherent(
                        hab_driver.dev,
                        DMA_BIT_MASK(64));

                /* System dma_ops failed, fallback to dma_ops of hab */
                if (result) {
                    pr_warn("config system dma_ops failed %d, fallback to hab\n",
                            result);
                    hab_driver.dev->bus = NULL;
                    set_dma_ops(hab_driver.dev, &hab_dma_ops);
                    /* the hab fallback is in place; don't fail module init */
                    result = 0;
                }
            }
        }
        hab_stat_init(&hab_driver);
        return result;

    err:
        if (!IS_ERR_OR_NULL(hab_driver.dev))
            device_destroy(hab_driver.class, dev);
        if (!IS_ERR_OR_NULL(hab_driver.class))
            class_destroy(hab_driver.class);
        cdev_del(&hab_driver.cdev);
        unregister_chrdev_region(dev, 1);

        pr_err("Error in hab init, result %d\n", result);
        return result;
    }

Parsing quin-vm-common.dtsi to get the vmid/mmid configuration

quin-vm-common.dtsi

    hab: qcom,hab {
        compatible = "qcom,hab";
        vmid = <2>;

        mmidgrp100: mmidgrp100 {
            grp-start-id = <100>;
            role = "fe";
            remote-vmids = <0>;
        };

        mmidgrp200: mmidgrp200 {
            grp-start-id = <200>;
            role = "fe";
            remote-vmids = <0>;
        };

        mmidgrp300: mmidgrp300 {
            grp-start-id = <300>;
            role = "fe";
            remote-vmids = <0>;
        };

        mmidgrp400: mmidgrp400 {
            grp-start-id = <400>;
            role = "fe";
            remote-vmids = <0>;
        };

        mmidgrp500: mmidgrp500 {
            grp-start-id = <500>;
            role = "fe";
            remote-vmids = <0>;
        };

        mmidgrp600: mmidgrp600 {
            grp-start-id = <600>;
            role = "fe";
            remote-vmids = <0>;
        };

        mmidgrp700: mmidgrp700 {
            grp-start-id = <700>;
            role = "fe";
            remote-vmids = <0>;
        };

        mmidgrp900: mmidgrp900 {
            grp-start-id = <900>;
            role = "fe";
            remote-vmids = <0>;
        };

        mmidgrp1000: mmidgrp1000 {
            grp-start-id = <1000>;
            role = "fe";
            remote-vmids = <0>;
        };

        mmidgrp1100: mmidgrp1100 {
            grp-start-id = <1100>;
            role = "fe";
            remote-vmids = <0>;
        };

        mmidgrp1200: mmidgrp1200 {
            grp-start-id = <1200>;
            role = "fe";
            remote-vmids = <0>;
        };

        mmidgrp1400: mmidgrp1400 {
            grp-start-id = <1400>;
            role = "fe";
            remote-vmids = <0>;
        };

        mmidgrp1500: mmidgrp1500 {
            grp-start-id = <1500>;
            role = "fe";
            remote-vmids = <0>;
        };
    };
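Every group above is a front end (role = "fe") talking to VM 0, and each grp-start-id is a multiple of 100. hab_generate_pchan(), listed later, switches on values such as MM_AUD_START/100, which suggests that the parsed settings hold each group's start id divided by 100. The sketch below performs that conversion for the groups in this dtsi. That interpretation is an assumption drawn from the switch statement; the parser itself (hab_parse) is not shown, and the helper names are illustrative.

```c
#include <assert.h>

/* Area code of a group: grp-start-id / 100 (1 for 100, 2 for 200, ...).
 * Returns -1 for ids that are not a positive multiple of 100. */
static int group_area(int grp_start_id)
{
    if (grp_start_id <= 0 || grp_start_id % 100 != 0)
        return -1;
    return grp_start_id / 100;
}

/* Convert one remote VM's list of grp-start-ids into area codes.
 * Returns the number converted, or -1 at the first malformed id. */
static int groups_to_areas(const int *ids, int n, int *areas)
{
    for (int i = 0; i < n; i++) {
        areas[i] = group_area(ids[i]);
        if (areas[i] < 0)
            return -1;
    }
    return n;
}
```

With the 13 groups above, the resulting area codes are 1 to 7, 9 to 12, 14 and 15; areas 8 and 13 are simply not configured on this VM.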

    int do_hab_parse(void)
    {
        int result;
        int i;
        struct hab_device *device;

        /* single GVM is 2, multigvm is 2 or 3. GHS LV-GVM 2, LA-GVM 3 */
        int default_gvmid = DEFAULT_GVMID; /* DEFAULT_GVMID is 2 */

        pr_debug("hab parse starts for %s\n", hab_info_str);

        /* first check if hypervisor plug-in is ready */
        result = hab_hypervisor_register();
        if (result) {
            pr_err("register HYP plug-in failed, ret %d\n", result);
            return result;
        }

        /*
         * Initialize open Q before first pchan starts.
         * Each is for one pchan list
         */
        for (i = 0; i < hab_driver.ndevices; i++) {
            device = &hab_driver.devp[i];
            init_waitqueue_head(&device->openq);
        }

        /* read in hab config and create pchans*/
        memset(&hab_driver.settings, HABCFG_VMID_INVALID,
               sizeof(hab_driver.settings));
        /* parse the dtsi (vmid = 2 here) and initialize
         * settings->vmid_mmid_list[vmid].vmid */
        result = hab_parse(&hab_driver.settings);
        if (result) {
            pr_err("hab config open failed, prepare default gvm %d settings\n",
                   default_gvmid);
            fill_default_gvm_settings(&hab_driver.settings, default_gvmid,
                                      MM_AUD_START, MM_ID_MAX);
        }

        /* now generate hab pchan list */
        result = hab_generate_pchan_list(&hab_driver.settings);
        if (result) {
            pr_err("generate pchan list failed, ret %d\n", result);
        } else {
            int pchan_total = 0;

            for (i = 0; i < hab_driver.ndevices; i++) {
                device = &hab_driver.devp[i];
                pchan_total += device->pchan_cnt;
            }
            pr_debug("ret %d, total %d pchans added, ndevices %d\n",
                     result, pchan_total, hab_driver.ndevices);
        }

        return result;
    }

Check mmid

```c
/*
 * generate pchan list based on hab settings table.
 * return status 0: success, otherwise failure
 */
static int hab_generate_pchan_list(struct local_vmid *settings)
{
	int i, j, ret = 0;

	/* scan by valid VMs, then mmid */
	pr_debug("self vmid is %d\n", settings->self);
	for (i = 0; i < HABCFG_VMID_MAX; i++) {
		if (HABCFG_GET_VMID(settings, i) != HABCFG_VMID_INVALID &&
			HABCFG_GET_VMID(settings, i) != settings->self) {
			pr_debug("create pchans for vm %d\n", i);

			for (j = 1; j <= HABCFG_MMID_AREA_MAX; j++) {
				if (HABCFG_GET_MMID(settings, i, j)
						!= HABCFG_VMID_INVALID)
					ret = hab_generate_pchan(settings,
								i, j);
			}
		}
	}
	return ret;
}
```

Creating the pchans: `hab_generate_pchan()` selects the mmid range that belongs to the group id stored in each table entry.
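The long switch in `hab_generate_pchan()` maps a group id to the (MM_xxx_START, MM_xxx_END) range of its group, using MM_xxx_START/100 as the key. The same mapping can be written as a lookup table, shown below. This is a hypothetical restructuring, not the driver's code. The ranges are invented placeholders, not the real MM_* values from the HAB headers.

```c
#include <assert.h>
#include <stddef.h>

struct mmid_range {
	const char *name;
	int start; /* exclusive lower bound, like MM_xxx_START */
	int end;   /* exclusive upper bound, like MM_xxx_END */
};

/* Placeholder values for illustration only. */
static const struct mmid_range ranges[] = {
	{ "aud",   100,  105 },
	{ "cam",   200,  205 },
	{ "misc",  600,  605 },
	{ "ext",  1500, 1505 },
};

/* Return the range whose start / 100 equals grp, or NULL (the switch's default case). */
static const struct mmid_range *find_range(int grp)
{
	for (size_t n = 0; n < sizeof(ranges) / sizeof(ranges[0]); n++)
		if (ranges[n].start / 100 == grp)
			return &ranges[n];
	return NULL;
}
```

With a table, adding a group means adding one row instead of another `case`. The error path (`pr_err` in the default branch) becomes a single NULL check in the caller.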

```c
/*
 * generate pchan list based on hab settings table.
 * return status 0: success, otherwise failure
 */
static int hab_generate_pchan(struct local_vmid *settings, int i, int j)
{
	int ret = 0;

	pr_debug("%d as mmid %d in vmid %d\n",
		HABCFG_GET_MMID(settings, i, j), j, i);

	switch (HABCFG_GET_MMID(settings, i, j)) {
	case MM_AUD_START/100:
		ret = hab_generate_pchan_group(settings, i, j, MM_AUD_START, MM_AUD_END);
		break;
	case MM_CAM_START/100:
		ret = hab_generate_pchan_group(settings, i, j, MM_CAM_START, MM_CAM_END);
		break;
	case MM_DISP_START/100:
		ret = hab_generate_pchan_group(settings, i, j, MM_DISP_START, MM_DISP_END);
		break;
	case MM_GFX_START/100:
		ret = hab_generate_pchan_group(settings, i, j, MM_GFX_START, MM_GFX_END);
		break;
	case MM_VID_START/100:
		ret = hab_generate_pchan_group(settings, i, j, MM_VID_START, MM_VID_END);
		break;
	case MM_MISC_START/100:
		ret = hab_generate_pchan_group(settings, i, j, MM_MISC_START, MM_MISC_END);
		break;
	case MM_QCPE_START/100:
		ret = hab_generate_pchan_group(settings, i, j, MM_QCPE_START, MM_QCPE_END);
		break;
	case MM_CLK_START/100:
		ret = hab_generate_pchan_group(settings, i, j, MM_CLK_START, MM_CLK_END);
		break;
	case MM_FDE_START/100:
		ret = hab_generate_pchan_group(settings, i, j, MM_FDE_START, MM_FDE_END);
		break;
	case MM_BUFFERQ_START/100:
		ret = hab_generate_pchan_group(settings, i, j, MM_BUFFERQ_START, MM_BUFFERQ_END);
		break;
	case MM_DATA_START/100:
		ret = hab_generate_pchan_group(settings, i, j, MM_DATA_START, MM_DATA_END);
		break;
	case MM_HSI2S_START/100:
		ret = hab_generate_pchan_group(settings, i, j, MM_HSI2S_START, MM_HSI2S_END);
		break;
	case MM_XVM_START/100:
		ret = hab_generate_pchan_group(settings, i, j, MM_XVM_START, MM_XVM_END);
		break;
	case MM_VNW_START/100:
		ret = hab_generate_pchan_group(settings, i, j, MM_VNW_START, MM_VNW_END);
		break;
	case MM_EXT_START/100:
		ret = hab_generate_pchan_group(settings, i, j, MM_EXT_START, MM_EXT_END);
		break;
	default:
		pr_err("failed to find mmid %d, i %d, j %d\n",
			HABCFG_GET_MMID(settings, i, j), i, j);

		break;
	}
	return ret;
}
```

Initializing the HAB virtual devices one by one: `hab_generate_pchan_group()` walks every mmid in the group's range.
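`hab_generate_pchan_group()` calls `hab_initialize_pchan_entry()` for every mmid strictly between `start` and `end` and adds up the return codes. The small model below reproduces that loop. A hypothetical `record_entry()` stands in for the entry initializer and fails with -EINVAL for one chosen mmid, as a NULL `find_hab_device()` result does. It also shows that a nonzero sum signals failure, but once more than one entry fails the sum no longer identifies a single error code. `for_each_mmid()` and `struct recorder` are illustrative names, not HAB APIs.

```c
#include <assert.h>
#include <errno.h>

#define MAX_SEEN 16

struct recorder {
	int seen[MAX_SEEN]; /* mmids visited, in order */
	int count;
	int missing;        /* mmid treated as having no device */
};

/* Stand-in for hab_initialize_pchan_entry(): record the mmid, fail for "missing". */
static int record_entry(int mmid, void *ctx)
{
	struct recorder *r = ctx;

	if (r->count < MAX_SEEN)
		r->seen[r->count++] = mmid;
	return mmid == r->missing ? -EINVAL : 0;
}

/* Visit every mmid in (start, end) and sum the return codes, as the kernel loop does. */
static int for_each_mmid(int start, int end,
			 int (*init_entry)(int mmid, void *ctx), void *ctx)
{
	int ret = 0;

	for (int k = start + 1; k < end; k++)
		ret += init_entry(k, ctx);
	return ret;
}
```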

```c
static int hab_generate_pchan_group(struct local_vmid *settings,
		int i, int j, int start, int end)
{
	int k, ret = 0;

	for (k = start + 1; k < end; k++) {
		/*
		 * if this local pchan end is BE, then use
		 * remote FE's vmid. If local end is FE, then
		 * use self vmid
		 */
		ret += hab_initialize_pchan_entry(
				find_hab_device(k),
				settings->self,
				HABCFG_GET_VMID(settings, i),
				HABCFG_GET_BE(settings, i, j));
	}

	return ret;
}
```

Every role parsed from the dtsi is `fe`, so `hab_initialize_pchan_entry()` is called with is_be = 0 and vmid_local = 2.
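`hab_initialize_pchan_entry()` names each pchan "vm<id>-<device name>". It uses the remote vmid when the local end is BE and the local vmid when it is FE. Below is a user-space sketch of only that naming rule. `make_pchan_name()`, `NAME_SIZE` and the device name "hab_aud1" are hypothetical. A single `snprintf()` replaces the kernel's `snprintf()` plus `strlcat()` pair, because `strlcat()` is not available in every C library.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

#define NAME_SIZE 32 /* hypothetical stand-in for MAX_VMID_NAME_SIZE */

/*
 * Build "vm<id>-<dev_name>": id is the remote vmid for a BE end and the
 * local vmid for an FE end. Returns 0 on success, -1 if the name was truncated.
 */
static int make_pchan_name(char *buf, size_t size, const char *dev_name,
			   int vmid_local, int vmid_remote, int is_be)
{
	int vmid = is_be ? vmid_remote : vmid_local;
	int n = snprintf(buf, size, "vm%d-%s", vmid, dev_name);

	return (n < 0 || (size_t)n >= size) ? -1 : 0;
}
```

With the dtsi settings here (role "fe", vmid_local = 2), every pchan name produced this way starts with "vm2-".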

```c
/*
 * To name the pchan - the pchan has two ends, either FE or BE locally.
 * if is_be is true, then this is listener for BE. pchan name use remote
 * FE's vmid from the table.
 * if is_be is false, then local is FE as opener. pchan name use local FE's
 * vmid (self)
 */
static int hab_initialize_pchan_entry(struct hab_device *mmid_device,
				int vmid_local, int vmid_remote, int is_be)
{
	char pchan_name[MAX_VMID_NAME_SIZE];
	struct physical_channel *pchan = NULL;
	int ret;
	int vmid = is_be ? vmid_remote : vmid_local; /* used for naming only */

	if (!mmid_device) {
		pr_err("habdev %pK, vmid local %d, remote %d, is be %d\n",
			mmid_device, vmid_local, vmid_remote, is_be);
		return -EINVAL;
	}

	snprintf(pchan_name, MAX_VMID_NAME_SIZE, "vm%d-", vmid);
	strlcat(pchan_name, mmid_device->name, MAX_VMID_NAME_SIZE);
	// vmid_remote = grp-start-id/100
	ret = habhyp_commdev_alloc((void **)&pchan, is_be, pchan_name,
				vmid_remote, mmid_device);
	if (ret) {
		pr_err("failed %d to allocate pchan %s, vmid local %d, remote %d, is_be %d, total %d\n",
			ret, pchan_name, vmid_local, vmid_remote,
			is_be, mmid_device->pchan_cnt);
	} else {
		/* local/remote id setting should be kept in lower level */
		pchan->vmid_local = vmid_local;
		pchan->vmid_remote = vmid_remote;
		pr_debug("pchan %s mmid %s local %d remote %d role %d\n",
			pchan_name, mmid_device->name,
			pchan->vmid_local, pchan->vmid_remote,
			pchan->dom_id);
	}

	return ret;
}
```

Initializing the loopback device and the pchan: `habhyp_commdev_alloc()`.
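`habhyp_commdev_alloc()` looks up the new pchan with `hab_pchan_find_domid()`, stores the pointer, and then immediately calls `hab_pchan_put()`. That pattern is safe if, as assumed here, the find call takes a reference and the device's pchan list keeps its own. The source does not show either function's body. Below is a user-space sketch of that get/put discipline using C11 atomics. `struct chan`, `chan_alloc()`, `chan_find()`, `chan_put()` and `DOM_DONT_CARE` are hypothetical.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stddef.h>
#include <stdlib.h>

#define DOM_DONT_CARE (-1) /* hypothetical stand-in for HABCFG_VMID_DONT_CARE */

struct chan {
	atomic_int refcount;
	int dom_id;
};

/* A new object starts with one reference, owned by the list that stores it. */
static struct chan *chan_alloc(int dom_id)
{
	struct chan *c = malloc(sizeof(*c));

	if (!c)
		return NULL;
	atomic_init(&c->refcount, 1);
	c->dom_id = dom_id;
	return c;
}

/* Drop one reference; frees the object and returns 1 when the last one goes. */
static int chan_put(struct chan *c)
{
	if (atomic_fetch_sub(&c->refcount, 1) == 1) {
		free(c);
		return 1;
	}
	return 0;
}

/* Look up by dom_id (DOM_DONT_CARE matches anything) and take a reference. */
static struct chan *chan_find(struct chan **list, size_t n, int dom_id)
{
	for (size_t i = 0; i < n; i++) {
		if (dom_id == DOM_DONT_CARE || list[i]->dom_id == dom_id) {
			atomic_fetch_add(&list[i]->refcount, 1);
			return list[i];
		}
	}
	return NULL;
}
```

After `chan_put()` the caller still holds a valid pointer only because the list's own reference keeps the object alive, which mirrors how `*commdev` is used after `hab_pchan_put()`.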

```c
int habhyp_commdev_alloc(void **commdev, int is_be, char *name,
		int vmid_remote, struct hab_device *mmid_device)
{
	struct physical_channel *pchan;
	// create the virtual device (mmid_device, name = mmid) and the
	// recv/enqueue thread that receives messages from QNX
	int ret = loopback_pchan_create(mmid_device, name);

	if (ret) {
		pr_err("failed to create %s pchan in mmid device %s, ret %d, pchan cnt %d\n",
			name, mmid_device->name, ret, mmid_device->pchan_cnt);
		*commdev = NULL;
	} else {
		pr_debug("loopback physical channel on %s return %d, loopback mode(%d), total pchan %d\n",
			name, ret, hab_driver.b_loopback,
			mmid_device->pchan_cnt);
		pchan = hab_pchan_find_domid(mmid_device,
				HABCFG_VMID_DONT_CARE);
		*commdev = pchan;
		hab_pchan_put(pchan);

		pr_debug("pchan %s vchans %d refcnt %d\n",
			pchan->name, pchan->vcnt, get_refcnt(pchan->refcount));
	}

	return ret;
}
```

Creating the recv/enqueue thread that receives QNX messages: `loopback_pchan_create()`.
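`loopback_pchan_create()` uses the usual kernel "goto err" cleanup. Every failure jumps to one label that frees whatever was allocated, relying on `kfree(NULL)` being a no-op. The user-space sketch below shows the same shape with a failure-injection parameter so each error path can be exercised. `struct chan_sketch`, `struct lb_dev_sketch`, `chan_create()` and `start_worker()` (a stand-in for `kthread_run()`) are hypothetical.

```c
#include <assert.h>
#include <errno.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

struct lb_dev_sketch { int msg_cnt; };

struct chan_sketch {
	char name[32];
	int closed;
	struct lb_dev_sketch *hyp_data;
};

/* Hypothetical stand-in for kthread_run(): fails when asked to. */
static int start_worker(struct chan_sketch *c, int fail)
{
	(void)c;
	return fail ? -EAGAIN : 0;
}

/* fail_step: 0 = succeed, 1 = chan alloc fails, 2 = dev alloc fails, 3 = worker fails. */
static int chan_create(const char *name, int fail_step, struct chan_sketch **out)
{
	struct chan_sketch *c = NULL;
	struct lb_dev_sketch *d = NULL;
	int result;

	*out = NULL;
	c = (fail_step == 1) ? NULL : calloc(1, sizeof(*c));
	if (!c) {
		result = -ENOMEM;
		goto err;
	}
	c->closed = 0;
	snprintf(c->name, sizeof(c->name), "%s", name);

	d = (fail_step == 2) ? NULL : calloc(1, sizeof(*d));
	if (!d) {
		result = -ENOMEM;
		goto err;
	}

	result = start_worker(c, fail_step == 3);
	if (result)
		goto err;

	c->hyp_data = d;
	*out = c;
	return 0;
err:
	free(d); /* free(NULL) is a no-op, just like kfree(NULL) */
	free(c);
	return result;
}
```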

```c
/* pchan is directly added into the hab_device */
int loopback_pchan_create(struct hab_device *dev, char *pchan_name)
{
	int result;
	struct physical_channel *pchan = NULL;
	struct loopback_dev *lb_dev = NULL;

	pchan = hab_pchan_alloc(dev, LOOPBACK_DOM);
	if (!pchan) {
		result = -ENOMEM;
		goto err;
	}

	pchan->closed = 0;
	strlcpy(pchan->name, pchan_name, sizeof(pchan->name));

	lb_dev = kzalloc(sizeof(*lb_dev), GFP_KERNEL);
	if (!lb_dev) {
		result = -ENOMEM;
		goto err;
	}

	spin_lock_init(&lb_dev->io_lock);
	INIT_LIST_HEAD(&lb_dev->msg_list);

	init_waitqueue_head(&lb_dev->thread_queue);

	// create the recv/enqueue thread that receives messages from QNX
	lb_dev->thread_data.data = pchan;
	lb_dev->kthread = kthread_run(lb_kthread, &lb_dev->thread_data,
			pchan->name);
	if (IS_ERR(lb_dev->kthread)) {
		result = PTR_ERR(lb_dev->kthread);
		pr_err("failed to create kthread for %s, ret %d\n",
			pchan->name, result);
		goto err;
	}

	pchan->hyp_data = lb_dev;

	return 0;
err:
	kfree(lb_dev);
	kfree(pchan);

	return result;
}
```

Receiving QNX messages: each message is enqueued onto rx_list (hab_msg_queue), and hab_msg_dequeue finally fetches the data and hands it to the LA side, which completes the processing.
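The rx_list path is a FIFO. The enqueue side appends at the tail (the `list_add_tail(&message->node, &vchan->rx_list)` call quoted in `lb_kthread()`), and the dequeue side takes the oldest entry from the head. Below is a minimal single-threaded sketch with a circular, sentinel-headed list in the spirit of the kernel's `list_head`. `rx_enqueue()`, `rx_dequeue()` and `struct msg` are hypothetical analogues of hab_msg_queue / hab_msg_dequeue. Unlike the real path, this sketch neither locks nor blocks.

```c
#include <assert.h>
#include <stddef.h>

/* Circular doubly linked list with a sentinel head. */
struct list_node {
	struct list_node *prev, *next;
};

struct msg {
	int seq;
	struct list_node node; /* embedded link, like message->node */
};

static void list_init(struct list_node *h) { h->prev = h->next = h; }
static int list_is_empty(const struct list_node *h) { return h->next == h; }

static void list_push_tail(struct list_node *h, struct list_node *n)
{
	n->prev = h->prev;
	n->next = h;
	h->prev->next = n;
	h->prev = n;
}

static void list_unlink(struct list_node *n)
{
	n->prev->next = n->next;
	n->next->prev = n->prev;
	n->prev = n->next = n;
}

/* Enqueue side: the newest message goes to the tail. */
static void rx_enqueue(struct list_node *rx, struct msg *m)
{
	list_push_tail(rx, &m->node);
}

/* Dequeue side: return the oldest message, or NULL if the list is empty. */
static struct msg *rx_dequeue(struct list_node *rx)
{
	struct list_node *first;

	if (list_is_empty(rx))
		return NULL;
	first = rx->next;
	list_unlink(first);
	/* container_of(): step back from the embedded node to its struct msg */
	return (struct msg *)((char *)first - offsetof(struct msg, node));
}
```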

```c
int lb_kthread(void *d)
{
	struct lb_thread_struct *p = (struct lb_thread_struct *)d;
	struct physical_channel *pchan = (struct physical_channel *)p->data;
	struct loopback_dev *dev = pchan->hyp_data;
	int ret = 0;

	while (!p->stop) {
		schedule();
		// wait until msg_list is non-empty, which means QNX has sent
		// a message to the LA side
		ret = wait_event_interruptible(dev->thread_queue,
				!lb_thread_queue_empty(dev) ||
				p->stop);

		spin_lock_bh(&dev->io_lock);

		while (!list_empty(&dev->msg_list)) {
			struct loopback_msg *msg = NULL;

			msg = list_first_entry(&dev->msg_list,
					struct loopback_msg, node);
			dev->current_msg = msg;
			list_del(&msg->node);
			dev->msg_cnt--;
			// hab_msg_recv() receives the message and hangs it on rx_list:
			// list_add_tail(&message->node, &vchan->rx_list);
			ret = hab_msg_recv(pchan, &msg->header);
			if (ret) {
				pr_err("failed %d msg handling sz %d header %d %d %d, %d %X %d, total %d\n",
					ret, msg->payload_size,
					HAB_HEADER_GET_ID(msg->header),
					HAB_HEADER_GET_TYPE(msg->header),
					HAB_HEADER_GET_SIZE(msg->header),
					msg->header.session_id,
					msg->header.signature,
					msg->header.sequence, dev->msg_cnt);
			}

			kfree(msg);
			dev->current_msg = NULL;
		}

		spin_unlock_bh(&dev->io_lock);
	}
	p->bexited = 1;
	pr_debug("exit kthread\n");
	return 0;
}
```
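`lb_kthread()` is a classic consumer loop. It sleeps until msg_list is non-empty or a stop flag is set, then drains the whole list under `io_lock`, handing each message to `hab_msg_recv()`. Below is a user-space analogue with POSIX threads. A mutex plays the role of `io_lock` and a condition variable plays the role of `thread_queue`. `pthread_cond_wait()` may wake spuriously, so the wait sits in a `while` loop that re-checks the condition, much as `wait_event_interruptible()` re-evaluates its condition. `struct lb_sketch`, `lb_send()`, `lb_worker()` and `lb_stop()` are hypothetical, and the `handled` counter stands in for `hab_msg_recv()`. Build with `-pthread`.

```c
#include <assert.h>
#include <pthread.h>
#include <stdbool.h>
#include <stdlib.h>

struct lb_msg {
	int seq;
	struct lb_msg *next;
};

struct lb_sketch {
	pthread_mutex_t lock;       /* role of io_lock */
	pthread_cond_t cond;        /* role of thread_queue */
	struct lb_msg *head, *tail; /* FIFO, role of msg_list */
	bool stop;
	int handled;                /* messages "received" by the worker */
	int last_seq;
};

static void lb_init(struct lb_sketch *d)
{
	pthread_mutex_init(&d->lock, NULL);
	pthread_cond_init(&d->cond, NULL);
	d->head = d->tail = NULL;
	d->stop = false;
	d->handled = 0;
	d->last_seq = 0;
}

/* Producer side: append a message and wake the worker. */
static int lb_send(struct lb_sketch *d, int seq)
{
	struct lb_msg *m = malloc(sizeof(*m));

	if (!m)
		return -1;
	m->seq = seq;
	m->next = NULL;
	pthread_mutex_lock(&d->lock);
	if (d->tail)
		d->tail->next = m;
	else
		d->head = m;
	d->tail = m;
	pthread_cond_signal(&d->cond);
	pthread_mutex_unlock(&d->lock);
	return 0;
}

/* Worker: sleep until work or stop, drain everything queued, exit after stop. */
static void *lb_worker(void *arg)
{
	struct lb_sketch *d = arg;

	pthread_mutex_lock(&d->lock);
	for (;;) {
		while (!d->head && !d->stop)
			pthread_cond_wait(&d->cond, &d->lock);
		while (d->head) {
			struct lb_msg *m = d->head;

			d->head = m->next;
			if (!d->head)
				d->tail = NULL;
			d->handled++; /* stands in for hab_msg_recv() */
			d->last_seq = m->seq;
			free(m);
		}
		if (d->stop)
			break;
	}
	pthread_mutex_unlock(&d->lock);
	return NULL;
}

/* Ask the worker to exit once the queue is empty. */
static void lb_stop(struct lb_sketch *d)
{
	pthread_mutex_lock(&d->lock);
	d->stop = true;
	pthread_cond_signal(&d->cond);
	pthread_mutex_unlock(&d->lock);
}
```

Here the list is drained before the stop flag is checked, so messages queued before `lb_stop()` are still handled. In the kernel loop, the outer `while (!p->stop)` is checked before waiting again instead.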