Implementing the FGSM, PGD, and BIM Adversarial Attack Algorithms

2023-12-16 16:34:49


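This article is a study note the author wrote while learning about AI, UAVs, reinforcement learning, and related fields. It is intended for personal study, research, or appreciation, and records excerpts and notes based on the author's own understanding of artificial intelligence and related areas. If anything is inaccurate or infringes on someone's rights, it will be corrected as soon as it is pointed out; thank you for your understanding. The article is filed under AI Learning:

AI Learning Notes (6): "Implementing the FGSM, PGD, and BIM Adversarial Attack Algorithms"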


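1 Introduction

PGD and BIM do not have to be written from scratch: both can be used directly by importing the torchattacks library, which implements many commonly used adversarial attack algorithms.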

pip install torchattacks

Then add the following code to call the attack directly:

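import torchattacks

# Build the attack object first, then call it on a batch to get adversarial inputs.
attack = torchattacks.PGD(model, eps=epsilon, alpha=0.2, steps=4)

# attack = torchattacks.BIM(model, eps=epsilon, alpha=0.2, steps=4)

perturbed_data = attack(data, target)

For the FGSM adversarial-example code, see this article: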

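This article mainly shares an implementation of the FGSM, PGD, and BIM adversarial attacks applied to handwritten digit recognition. The project code is available at the link below:

If the CSDN download does not work, the code can be obtained for free by following the WeChat official account 小趴菜只卖红薯.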
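Since PGD and BIM both repeat the FGSM step, it may help to see that single step in isolation. The following is a minimal, dependency-free sketch rather than the project's code: it assumes a hypothetical three-feature logistic-regression model (`w`, `b`) in place of the MNIST network, and it uses the analytic input gradient instead of autograd. The helper names `sign`, `clamp`, `bce`, `input_gradient`, and `fgsm` are illustrative.

```python
import math


def sign(v):
    """Return -1, 0 or 1, mirroring torch.sign for a single value."""
    return (v > 0) - (v < 0)


def clamp(v, lo, hi):
    """Limit v to the closed interval [lo, hi]."""
    return max(lo, min(hi, v))


def predict_proba(x, w, b):
    """Probability of class 1 under a logistic model (assumed toy model)."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def bce(x, y, w, b):
    """Binary cross-entropy loss for a single sample with label y in {0, 1}."""
    p = predict_proba(x, w, b)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def input_gradient(x, y, w, b):
    """Gradient of the loss w.r.t. the input: d(loss)/dx_i = (p - y) * w_i."""
    p = predict_proba(x, w, b)
    return [(p - y) * wi for wi in w]


def fgsm(x, y, w, b, eps):
    """One FGSM step: shift every feature by eps in the loss-increasing direction."""
    grad = input_gradient(x, y, w, b)
    return [clamp(xi + eps * sign(g), 0.0, 1.0) for xi, g in zip(x, grad)]


# Hypothetical toy model and input, not taken from the article's project.
w, b = [2.0, -1.0, 0.5], 0.0
x, y = [0.5, 0.5, 0.5], 1
x_adv = fgsm(x, y, w, b, eps=0.1)
```

Every feature moves by exactly `eps` (unless the `[0, 1]` clamp cuts it short), which is why FGSM is usually described as a single-step attack under an L-infinity budget.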

2 Generating Adversarial Examples with PGD

2.1 PGD adversarial example generation code

class PGD(Attack):

def __init__(self, model, eps=8 / 255, alpha=2 / 255, steps=10, random_start=True):

super().__init__("PGD", model)

self.eps = eps

self.alpha = alpha

self.steps = steps

self.random_start = random_start

self.supported_mode = ["default", "targeted"]

def forward(self, images, labels):

r"""

Overridden.

"""

images = images.clone().detach().to(self.device)

labels = labels.clone().detach().to(self.device)

if self.targeted:

target_labels = self.get_target_label(images, labels)

loss = nn.CrossEntropyLoss()

adv_images = images.clone().detach()

if self.random_start:

# Starting at a uniformly random point

adv_images = adv_images + torch.empty_like(adv_images).uniform_(

-self.eps, self.eps

)

adv_images = torch.clamp(adv_images, min=0, max=1).detach()

for _ in range(self.steps):

adv_images.requires_grad = True

outputs = self.get_logits(adv_images)

# Calculate loss

if self.targeted:

cost = -loss(outputs, target_labels)

else:

cost = loss(outputs, labels)

# Update adversarial images

grad = torch.autograd.grad(

cost, adv_images, retain_graph=False, create_graph=False

)[0]

adv_images = adv_images.detach() + self.alpha * grad.sign()

delta = torch.clamp(adv_images - images, min=-self.eps, max=self.eps)

adv_images = torch.clamp(images + delta, min=0, max=1).detach()

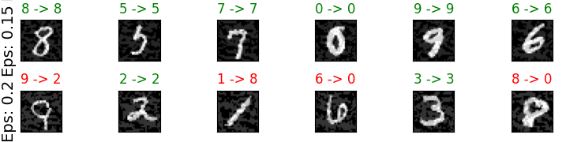

return adv_images2.2 PGD对抗样本生成测试结果
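When checking PGD results, it is worth confirming that every adversarial example stays inside the L-infinity budget and the valid pixel range, since those are the two constraints the loop in `forward` enforces. The sketch below replays the same loop (random start, signed gradient step, projection, clamp) in plain Python. It is only an illustration under stated assumptions: the logistic model `w`, `b`, the analytic gradient, and the `seed` argument are hypothetical stand-ins for the network, autograd, and PyTorch's random generator.

```python
import math
import random


def sign(v):
    """Return -1, 0 or 1, mirroring torch.sign for a single value."""
    return (v > 0) - (v < 0)


def clamp(v, lo, hi):
    """Limit v to the closed interval [lo, hi]."""
    return max(lo, min(hi, v))


def input_gradient(x, y, w, b):
    """Analytic input gradient of binary cross-entropy for a logistic model."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    p = 1.0 / (1.0 + math.exp(-z))
    return [(p - y) * wi for wi in w]


def pgd(x, y, w, b, eps, alpha, steps, random_start=True, seed=0):
    """Untargeted PGD on a toy model, following the structure of the class above."""
    rng = random.Random(seed)
    x_adv = list(x)
    if random_start:
        # Start at a uniformly random point inside the eps-ball, kept within [0, 1]
        x_adv = [clamp(xi + rng.uniform(-eps, eps), 0.0, 1.0) for xi in x_adv]
    for _ in range(steps):
        grad = input_gradient(x_adv, y, w, b)
        stepped = [xa + alpha * sign(g) for xa, g in zip(x_adv, grad)]
        # Project onto the eps-ball around the clean input, then clamp to [0, 1]
        delta = [clamp(s - xi, -eps, eps) for s, xi in zip(stepped, x)]
        x_adv = [clamp(xi + d, 0.0, 1.0) for xi, d in zip(x, delta)]
    return x_adv


# Hypothetical toy model and input, used only to exercise the loop.
w, b = [2.0, -1.0, 0.5], 0.0
x, y = [0.5, 0.5, 0.5], 1
eps = 0.1
x_adv = pgd(x, y, w, b, eps=eps, alpha=0.03, steps=10)

# The two invariants the projection step is meant to guarantee.
within_budget = all(abs(a - xi) <= eps + 1e-12 for a, xi in zip(x_adv, x))
within_range = all(0.0 <= a <= 1.0 for a in x_adv)
```

The same two checks can be applied to a real batch by comparing `adv_images` with `images` element-wise.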

3 Generating Adversarial Examples with BIM

3.1 BIM adversarial example generation code

import torch
import torch.nn as nn
from torchattacks.attack import Attack


class BIM(Attack):
    def __init__(self, model, eps=8 / 255, alpha=2 / 255, steps=10):
        super().__init__("BIM", model)
        self.eps = eps  # maximum L-infinity perturbation
        self.alpha = alpha  # step size per iteration
        if steps == 0:
            # steps=0 selects an iteration count derived from eps
            self.steps = int(min(eps * 255 + 4, 1.25 * eps * 255))
        else:
            self.steps = steps
        self.supported_mode = ["default", "targeted"]

    def forward(self, images, labels):
        r"""
        Overridden.
        """
        images = images.clone().detach().to(self.device)
        labels = labels.clone().detach().to(self.device)

        if self.targeted:
            target_labels = self.get_target_label(images, labels)

        loss = nn.CrossEntropyLoss()
        ori_images = images.clone().detach()

        for _ in range(self.steps):
            images.requires_grad = True
            outputs = self.get_logits(images)

            # Calculate loss (negated in targeted mode so that ascent moves toward the target)
            if self.targeted:
                cost = -loss(outputs, target_labels)
            else:
                cost = loss(outputs, labels)

            # Update adversarial images
            grad = torch.autograd.grad(
                cost, images, retain_graph=False, create_graph=False
            )[0]

            adv_images = images + self.alpha * grad.sign()
            # a: lower bound (ori - eps, floored at 0)
            a = torch.clamp(ori_images - self.eps, min=0)
            # b: raise values that fell below the lower bound
            b = (adv_images >= a).float() * adv_images + (
                adv_images < a
            ).float() * a  # nopep8
            # c: cap values that exceed the upper bound (ori + eps)
            c = (b > ori_images + self.eps).float() * (ori_images + self.eps) + (
                b <= ori_images + self.eps
            ).float() * b  # nopep8
            images = torch.clamp(c, max=1).detach()

        return images

3.2 BIM adversarial example test results
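When comparing BIM results with PGD, note that the `a`, `b`, `c` mask arithmetic in the BIM listing is an element-wise clip to the interval `[max(x - eps, 0), min(x + eps, 1)]`, so, apart from PGD's optional random start, the two loops apply the same kind of projection. The sketch below is a plain-Python illustration of that claim for a single pixel value. The function names `bim_clip` and `plain_clip` are hypothetical, and the equivalence assumes the clean pixel lies in `[0, 1]` and `eps` is non-negative.

```python
def bim_clip(adv, ori, eps):
    """Clip one pixel the way the BIM listing does, step by step (a, b, c)."""
    a = max(ori - eps, 0.0)                  # lower bound, floored at 0
    b = adv if adv >= a else a               # raise values below the lower bound
    c = ori + eps if b > ori + eps else b    # cap values above ori + eps
    return min(c, 1.0)                       # final clamp to the maximum pixel value


def plain_clip(adv, ori, eps):
    """The same rule written as a single clamp to [lo, hi]."""
    lo = max(ori - eps, 0.0)
    hi = min(ori + eps, 1.0)
    return max(lo, min(hi, adv))


# Compare both formulations over a small grid of clean and perturbed values.
eps = 0.1
clean_values = [0.0, 0.05, 0.5, 0.97, 1.0]
perturbed_values = [-0.3, 0.0, 0.02, 0.5, 0.9, 1.0, 1.4]
mismatches = [
    (ori, adv)
    for ori in clean_values
    for adv in perturbed_values
    if bim_clip(adv, ori, eps) != plain_clip(adv, ori, eps)
]
```

An empty `mismatches` list on this grid is consistent with the two forms being interchangeable; it is a spot check, not a proof.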

4 Summary

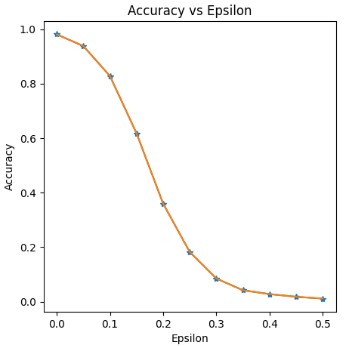

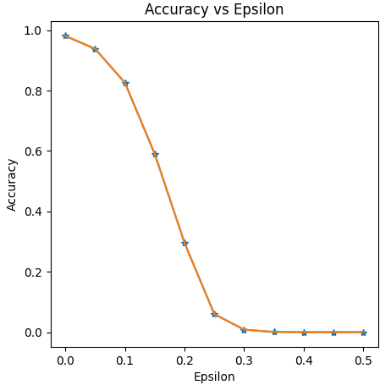

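The experimental results above show that, for the same epsilon, FGSM accuracy > PGD accuracy > BIM accuracy.

The slopes of the curves show that accuracy decays most slowly under FGSM, faster under PGD, and fastest under BIM: FGSM decay rate < PGD decay rate < BIM decay rate.

If the perturbation size is constrained so that it remains hard for the human eye to notice, the strength of the adversarial examples can still be increased by improving the generation method or by switching to a different one.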
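Curves like these come from measuring accuracy on adversarial inputs at several epsilon values and then looking at the slope between neighboring points. The following is a minimal sketch of that bookkeeping only, not the article's evaluation script: `toy_predict`, `toy_attack`, and the five samples are hypothetical one-dimensional stand-ins for the MNIST classifier, the attack, and the test set.

```python
def accuracy_under_attack(predict, attack, samples, eps):
    """Fraction of (x, y) samples still classified correctly after the attack."""
    correct = sum(int(predict(attack(x, y, eps)) == y) for x, y in samples)
    return correct / len(samples)


def decay_rates(eps_values, accuracies):
    """Slope of accuracy between consecutive epsilon values (negative means falling)."""
    points = list(zip(eps_values, accuracies))
    return [(a1 - a0) / (e1 - e0) for (e0, a0), (e1, a1) in zip(points, points[1:])]


def toy_predict(x):
    """Hypothetical classifier: class 1 if the single feature exceeds 0.5."""
    return 1 if x[0] > 0.5 else 0


def toy_attack(x, y, eps):
    """Hypothetical attack: push the feature toward the other class by eps."""
    step = -eps if y == 1 else eps
    return [min(1.0, max(0.0, x[0] + step))]


# Made-up samples, chosen only so that accuracy drops as eps grows.
samples = [([0.9], 1), ([0.7], 1), ([0.55], 1), ([0.1], 0), ([0.3], 0)]
eps_values = [0.0, 0.1, 0.3]
accuracies = [
    accuracy_under_attack(toy_predict, toy_attack, samples, e) for e in eps_values
]
rates = decay_rates(eps_values, accuracies)
```

Running the same two helpers once per attack gives one accuracy list and one slope list per method, which is what a comparison of decay rates needs.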

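If anything in this article is inappropriate or incorrect, please point it out; your understanding is appreciated. Some of the text and images come from the internet and their original sources could not be verified, so if any dispute arises, please contact the author to have the material removed. For errors, questions, or infringement concerns, leave a comment or contact the author through the WeChat official account Rain21321.

Source: https://blog.csdn.net/qq_51399582/article/details/135030590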
