[Paper Reading] Brain Network Transformer

Paper link: [2210.06681] Brain Network Transformer (arxiv.org)

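The English here is typed entirely by hand! It is my summarizing and paraphrasing of the original paper, so some spelling and grammar mistakes are hard to avoid; if you find any, corrections in the comments are welcome! This post leans towards personal notes, so read with caution!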

1. TL;DR

1.1. Takeaways

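(1) The introduction says that regions can be grouped into different functional modules according to whether they co-activate and co-deactivate. If so, it feels like different diseases would need different atlases. But I haven't actually studied these atlases... =.= And it probably comes down to a fair bit of luck anyway.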

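(2) The authors argue that the functional connectivity matrix hinders ① centrality encoding (actually, centrality can be computed... I forget the exact name, but my very first post, something like "a gentle introduction of graph neural network", explains how to compute it. Though that seems to measure centrality by connection strength rather than by position. Hmm... positional centrality probably isn't that important anyway?), ② spatial encoding (in the brain, I'd rather say function matters more than structure...), and ③ edge encoding (I'm not very familiar with this, but I can roughly get the idea. It is indeed a problem, although I haven't considered whether its impact is really that large).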

(3) I had to laugh: the paper even writes down my own doubt. FC really is far too densely connected: 160,000 edges, hahahaha.

1.2. Framework Diagram of the Paper

2. Close Reading, Paragraph by Paragraph

2.1. Abstract

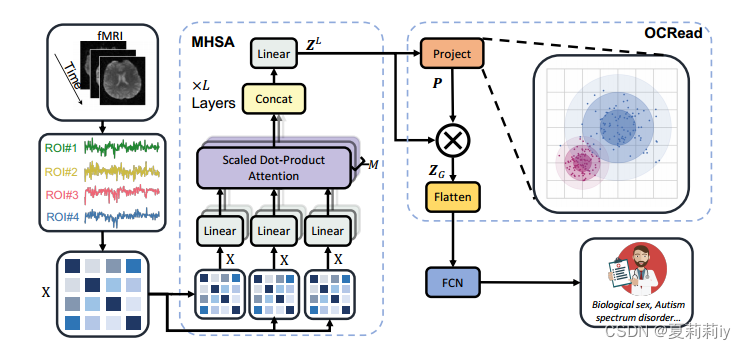

①They aim to capture both positional information and the strength of connections

②They propose an ORTHONORMAL CLUSTERING READOUT operation, which is based on self-supervised soft clustering and orthonormal projection
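The readout in ② can be pictured with a minimal NumPy sketch. It rests on my own assumptions about the details, not on the authors' code: node embeddings Z (V×d) are softly assigned to K cluster centres E (K×d) by a softmax over inner products, the graph embedding is PᵀZ (K×d), and the centres are made orthonormal via a QR decomposition (equivalent to Gram-Schmidt on random vectors). The helper names `orthonormal_centers` and `ocread` are hypothetical, and training of the centres and the final MLP classifier are left out.

```python
import numpy as np

def orthonormal_centers(k: int, d: int, seed: int = 0) -> np.ndarray:
    """Hypothetical helper: K x d cluster centres with orthonormal rows (needs k <= d).

    Reduced QR of a random d x k matrix gives orthonormal columns,
    which amounts to Gram-Schmidt on k random vectors.
    """
    rng = np.random.default_rng(seed)
    q, _ = np.linalg.qr(rng.standard_normal((d, k)))  # q: d x k, orthonormal columns
    return q.T                                         # k x d, orthonormal rows

def ocread(z: np.ndarray, centers: np.ndarray) -> np.ndarray:
    """Assumed form of the readout: soft-assign V nodes to K clusters, then pool.

    z:       V x d node embeddings (e.g. the output of the attention layers)
    centers: K x d cluster centres
    returns: K x d graph-level embedding (flattened before an MLP in practice)
    """
    logits = z @ centers.T                             # V x K inner products
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    p = np.exp(logits)
    p = p / p.sum(axis=1, keepdims=True)               # soft assignment, rows sum to 1
    return p.T @ z                                     # K x d pooled embedding

V, d, K = 200, 16, 4
z = np.random.default_rng(1).standard_normal((V, d))  # placeholder node embeddings
E = orthonormal_centers(K, d)
h = ocread(z, E)
print(h.shape)  # (4, 16)
```

Since the pooled output is an assignment-weighted sum over nodes, shuffling the node order leaves it unchanged, which is the property a graph-level readout needs.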

2.2. Introduction

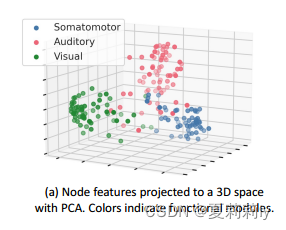

①Medical research has found that some regions tend to activate or deactivate together, so the brain can be divided into different ROIs to better analyse diseases. Unfortunately, such divisions may not be entirely reliable.

②Transformer-based models for graph representation learning have become prevalent in recent years, e.g., GAT with local aggregation, Graph Transformer with edge-information injection, SAN with eigenvalue and eigenvector embeddings, and Graphormer with its centrality and spatial/edge encodings

③However, these centrality, spatial and edge encodings may lose their effect when the input is a functional connectivity (FC) network

④In FC, every node has the same degree (seriously... couldn't you prune a few edges?...), and every pair of nodes is directly connected, so only one-hop information is available

⑤In brain networks, edge information is the strength of connectivity, whereas in biological graphs such as molecules it usually indicates whether two nodes are connected

⑥In molecular graphs, the number of nodes is < 50 and the number of edges < 2,500; in FC networks, the number of nodes is < 400 and the number of edges < 160,000

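⑦Accordingly, the authors propose the BRAIN NETWORK TRANSFORMER (BRAINNETTF), which uses the "effective initial node features of connection profiles" (I don't know what this is; according to the paper, it naturally provides positional features for Transformer-based models and avoids the expensive computation of eigenvalues or eigenvectors)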

⑧To reduce the impact of inaccurate regional division, they design the ORTHONORMAL CLUSTERING READOUT, a global pooling operator

⑨The limited number of open-access datasets is a major challenge in brain network analysis
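Points ④, ⑥ and ⑦ can be made concrete with a toy example. The sketch below assumes FC is the Pearson correlation between ROI time series (a common choice) and takes node i's connection profile to be row i of the FC matrix, which is my reading of the term; the random signals are placeholders, not fMRI data.

```python
import numpy as np

def functional_connectivity(ts: np.ndarray) -> np.ndarray:
    """ts: V x T array (V ROIs, T time points) -> V x V Pearson correlation matrix."""
    return np.corrcoef(ts)

V, T = 6, 100
ts = np.random.default_rng(0).standard_normal((V, T))  # placeholder signals
fc = functional_connectivity(ts)

# Assumed connection profile: node i's feature vector is row i of FC (length V).
node_features = fc.copy()
print(node_features.shape)  # (6, 6)

# Correlations of continuous signals are (almost surely) never exactly 0,
# so the FC graph is complete: same degree everywhere, every pair one hop apart.
adj = (np.abs(fc) > 0) & ~np.eye(V, dtype=bool)
degrees = adj.sum(axis=1)
print(degrees)  # [5 5 5 5 5 5]

# Counting all V * V matrix entries as edges, as in point 6
for n in (50, 400):
    print(n, n * n)  # prints "50 2500", then "400 160000"
```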

unleash  vt. to cause to erupt; to vent; to release suddenly [VN] ~ sth (on/upon sb/sth)

2.3. Background and Related Work

2.3.1. GNNs for Brain Network Analysis

①GroupINN: reduces model size based on grouping

②BrainGNN: uses a GNN with a special pooling operator

③IBGNN: analyzes disorder-specific ROIs and prominent connections

④FBNetGen: introduces learnable generation of brain networks

⑤STAGIN: extracts dynamic brain networks

2.3.2. Graph Transformer

①Graph Transformer: injects edge information and embeds eigenvectors as positional encodings

②SAN: enhances positional embeddings

③Graphormer: designs a fine-grained attention mechanism

④HGT: adopts a special sampling algorithm

⑤EGT: uses edge augmentation

⑥LSPE: uses learnable structural and positional encodings

⑦GRPE: improves relative position encoding
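To see what "embeds eigenvectors as position" involves, here is a simplified sketch of Laplacian eigenvector positional encoding: each node gets its entries in the k eigenvectors of the symmetric normalised Laplacian with the smallest non-trivial eigenvalues. Papers differ in the details (normalisation, random sign flipping, use of eigenvalues), so treat this as an illustration only; `laplacian_pe` is a hypothetical name. The full eigendecomposition costs O(V³), which is the expense the connection-profile features in ⑦ of the Introduction are said to avoid.

```python
import numpy as np

def laplacian_pe(adj: np.ndarray, k: int) -> np.ndarray:
    """Hypothetical helper: V x k positional encodings from L = I - D^-1/2 A D^-1/2.

    adj: V x V symmetric, non-negative adjacency; assumes no isolated nodes.
    """
    deg = adj.sum(axis=1)
    d_inv_sqrt = 1.0 / np.sqrt(deg)
    lap = np.eye(len(adj)) - d_inv_sqrt[:, None] * adj * d_inv_sqrt[None, :]
    eigvals, eigvecs = np.linalg.eigh(lap)  # ascending eigenvalues, O(V^3)
    return eigvecs[:, 1:k + 1]              # drop the trivial first eigenvector

# Toy example: a 6-node cycle graph
V = 6
adj = np.zeros((V, V))
for i in range(V):
    adj[i, (i + 1) % V] = adj[(i + 1) % V, i] = 1.0

pe = laplacian_pe(adj, k=2)
print(pe.shape)  # (6, 2)
```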

2.4. Brain Network Transformer

2.4.1. Problem Definition

2.4.2. Multi-Head Self-Attention Module (MHSA)
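For reference, a generic multi-head self-attention layer with each ROI as one token can be sketched as below. This is standard scaled dot-product attention with random weights standing in for learned ones; layer norm, residual connections and the feed-forward block are omitted, and it is not meant to reproduce BrainNetTF's exact module. The input is a random stand-in for a V×V connection-profile matrix.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax along one axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def mhsa(x: np.ndarray, wq, wk, wv, wo, num_heads: int) -> np.ndarray:
    """Multi-head self-attention over V tokens.

    x: V x d input (one token per ROI); wq, wk, wv, wo: d x d weights.
    Returns V x d. d must be divisible by num_heads.
    """
    n, d = x.shape
    dh = d // num_heads

    def split_heads(m: np.ndarray) -> np.ndarray:
        # V x d -> heads x V x dh
        return m.reshape(n, num_heads, dh).transpose(1, 0, 2)

    q, k, v = split_heads(x @ wq), split_heads(x @ wk), split_heads(x @ wv)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(dh)  # heads x V x V
    attn = softmax(scores, axis=-1)                   # each row sums to 1
    out = attn @ v                                    # heads x V x dh
    out = out.transpose(1, 0, 2).reshape(n, d)        # concatenate the heads
    return out @ wo

V, H = 8, 2
rng = np.random.default_rng(0)
x = rng.standard_normal((V, V))  # stand-in for a V x V connection-profile matrix
wq, wk, wv, wo = (rng.standard_normal((V, V)) / np.sqrt(V) for _ in range(4))
y = mhsa(x, wq, wk, wv, wo, num_heads=H)
print(y.shape)  # (8, 8)
```

No positional encoding is added here, so permuting the rows of `x` only permutes the rows of the output; with connection profiles as input, each row already records which ROIs a node is connected to, which, as I understand it, is where the positional information comes from.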

2.4.3. ORTHONORMAL CLUSTERING READOUT (OCREAD)

(1)Theoretical Justifications

2.4.4. Generalizing OCREAD to Other Graph Tasks and Domains

2.5. Experiments

2.5.1. Experimental Settings

2.5.2. Performance Analysis (RQ1)

2.5.3. Ablation Studies on the OCREAD Module (RQ2)

(1)OCREAD with varying readout functions

(2)OCREAD with varying cluster initializations

2.5.4. In-depth Analysis of Attention Scores and Cluster Assignments (RQ3)

2.6. Discussion and Conclusion

2.7. Appendix

2.7.1. Training Curves of Different Models with or without Stratified Sampling

2.7.2. Transformer Performance with Different Node Features

2.7.3. Statistical Proof of the Goodness with Orthonormal Cluster Centers

(1)Proof of Theorem 3.1

(2)Proof of Theorem 3.2

2.7.4. Running Time

2.7.5. Number of Parameters

2.7.6. Parameter Tuning

2.7.7. Software Version

2.7.8. The Difference between Various Initialization Methods

3. Supplementary Knowledge

3.1. Positional embedding

Reference: How should positional embeddings in Transformers be understood? - Zhihu (zhihu.com)
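As a refresher on the standard case, the original Transformer adds fixed sinusoidal encodings PE(pos, 2i) = sin(pos / 10000^(2i/d)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/d)) to its token embeddings. A minimal NumPy sketch, assuming an even dimension d (`sinusoidal_pe` is a hypothetical helper name):

```python
import numpy as np

def sinusoidal_pe(num_positions: int, d: int) -> np.ndarray:
    """Fixed sinusoidal positional encoding from "Attention Is All You Need" (d even)."""
    pos = np.arange(num_positions)[:, None]       # P x 1
    i = np.arange(d // 2)[None, :]                 # 1 x d/2
    angles = pos / np.power(10000.0, 2 * i / d)    # P x d/2
    pe = np.zeros((num_positions, d))
    pe[:, 0::2] = np.sin(angles)                   # even dimensions
    pe[:, 1::2] = np.cos(angles)                   # odd dimensions
    return pe

pe = sinusoidal_pe(50, 16)
print(pe.shape)   # (50, 16)
print(pe[0, :4])  # [0. 1. 0. 1.]  (position 0: sin 0 = 0, cos 0 = 1)
```

These encodings rely on tokens having a natural order; ROIs only have the arbitrary index order of an atlas, which is one way to see why brain-network Transformers look for other sources of positional information.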

3.2. Centrality

Reference: Degree centrality, eigenvector centrality, betweenness centrality, connection centrality - Zhihu (zhihu.com)
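Related to takeaway (2): on a fully connected FC graph the plain degree is identical for every node, but weighted degree (strength) and eigenvector centrality still differ. A minimal sketch on a made-up 4-node matrix; treating absolute correlations as non-negative edge weights is my assumption, since negative correlations need some such choice.

```python
import numpy as np

def strength_centrality(w: np.ndarray) -> np.ndarray:
    """Weighted degree: sum of each node's edge weights (self-loops ignored)."""
    w = w - np.diag(np.diag(w))
    return w.sum(axis=1)

def eigenvector_centrality(w: np.ndarray, iters: int = 1000, tol: float = 1e-10) -> np.ndarray:
    """Power iteration for the leading eigenvector of a non-negative symmetric matrix."""
    w = w - np.diag(np.diag(w))
    x = np.ones(len(w)) / len(w)
    for _ in range(iters):
        x_new = w @ x
        x_new = x_new / np.linalg.norm(x_new)
        if np.linalg.norm(x_new - x) < tol:
            break
        x = x_new
    return x_new

# Made-up weighted "FC" matrix (assumed: absolute correlations), fully connected
w = np.array([
    [1.0, 0.9, 0.6, 0.1],
    [0.9, 1.0, 0.5, 0.4],
    [0.6, 0.5, 1.0, 0.3],
    [0.1, 0.4, 0.3, 1.0],
])
s = strength_centrality(w)     # strengths 1.6, 1.8, 1.4, 0.8
c = eigenvector_centrality(w)
print(s)
print(c)
```

The unweighted degree here would be 3 for every node, yet both weighted measures single out node 3 as the least central.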

4. Reference List

Kan X. et al. (2022) 'Brain Network Transformer', NeurIPS. doi: https://doi.org/10.48550/arXiv.2210.06681
