PaddleClas Study Notes 3: Recognizing Vehicle Orientation with the PPLCNet Model (C++)

2023-12-14 19:43:39

Recognizing vehicle orientation with the PPLCNet model

1 Preparing the environment

Refer to the previous post: Windows PaddleSeg C++ deployment.

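2 Preparing the model

2.1 Exporting the model

The model trained in the previous post (recognizing vehicle orientation with the PPLCNet model) must first be converted into an inference model. To do so, run the following command: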

python tools\export_model.py -c .\ppcls\configs\PULC\vehicle_attribute\PPLCNet_x1_0.yaml -o Global.pretrained_model=output/PPLCNet_x1_0/best_model -o Global.save_inference_dir=./deploy/models/class_vehicle_attribute_infer

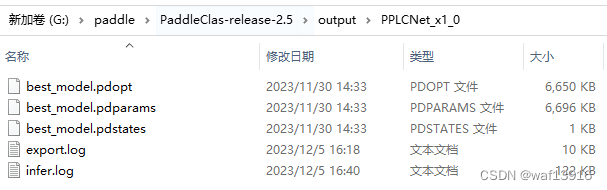

Figure 2.1 The trained model

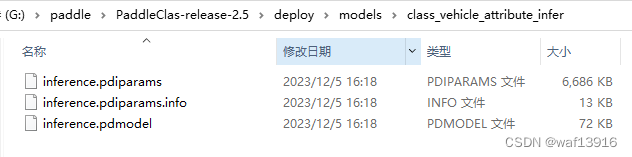

Figure 2.2 The exported model

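2.2 Modifying the configuration file

deploy/configs/PULC/vehicle_attribute/inference_vehicle_attribute.yaml

Under Global, set infer_imgs (the image or image folder to run prediction on) and inference_model_dir (the directory containing the exported inference model).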

Global:
  infer_imgs: "./images/PULC/vehicle_attribute/0002_c002_00030670_0.jpg"
  inference_model_dir: "./models/class_vehicle_attribute_infer"
  batch_size: 1
  use_gpu: True
  enable_mkldnn: True
  cpu_num_threads: 10
  #benchmark: False
  enable_benchmark: False
  use_fp16: False
  ir_optim: True
  use_tensorrt: False
  gpu_mem: 8000
  enable_profile: False

3 Building

The overall project directory layout is as follows:

G:/paddle/c++
└── paddle_inference

G:/paddle
└── PaddleClas-release-2.5

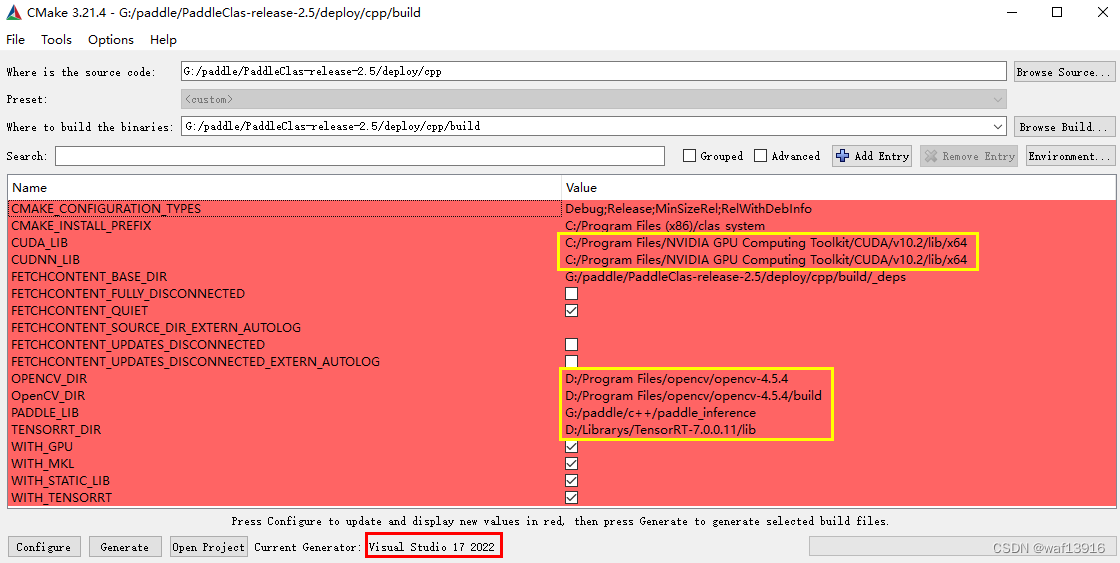

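3.1 Generating the project files with CMake

3.2 Building

Open cpp\build\clas_system.sln in Visual Studio 2022, set the build configuration to Release, and choose Build -> Build Solution. This produces clas_system.exe in the cpp\build\Release folder.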

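3.3 Running

Go to the build/Release directory and copy the prepared model, test image, and configuration file next to clas_system.exe. The build/Release directory should then look like this: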

Release
├── clas_system.exe                          # executable
├── images                                   # test images
│   └── PULC
│       └── vehicle_attribute
│           └── 0002_c002_00030670_0.jpg
├── configs                                  # configuration files
│   └── PULC
│       └── vehicle_attribute
│           └── inference_vehicle_attribute.yaml
├── models                                   # models used for inference
│   └── class_vehicle_attribute_infer
│       ├── inference.pdmodel                # model topology
│       ├── inference.pdiparams              # model weights
│       └── inference.pdiparams.info         # extra parameter info, usually safe to ignore
└── *.dll                                    # DLL files
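A missing or misnamed model file in this layout is easy to overlook when copying files into Release. As an optional pre-flight check, here is a minimal sketch using C++17 std::filesystem. missing_model_files is a hypothetical helper, not part of the PaddleClas demo, and it assumes (as in the tree above) that the model directory must contain inference.pdmodel and inference.pdiparams.

```cpp
#include <cassert>
#include <filesystem>
#include <string>
#include <vector>

// Hypothetical pre-flight check: returns the names of required inference
// files that are missing from model_dir. inference.pdiparams.info is treated
// as optional and is therefore not checked.
std::vector<std::string> missing_model_files(const std::string& model_dir) {
    const std::vector<std::string> required = {"inference.pdmodel", "inference.pdiparams"};
    std::vector<std::string> missing;
    for (const auto& name : required) {
        // is_regular_file returns false (without throwing) for nonexistent paths
        if (!std::filesystem::is_regular_file(std::filesystem::path(model_dir) / name)) {
            missing.push_back(name);
        }
    }
    return missing;
}
```

You could call it with the value of Global.inference_model_dir before the Classifier is constructed and print anything it returns.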

3.4 Adding the post-processing code

3.4.1 postprocess.h

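// postprocess.h
#pragma once

#include <iostream>
#include <string>
#include <vector>

namespace PaddleClas {

// Decodes the 22 outputs of the vehicle attribute model:
// [0, 10) color, [10, 19) type, [19, 22) direction.
class VehicleAttribute {
public:
    // A label is reported only if its score reaches the threshold of its group
    float color_threshold = 0.5;
    float type_threshold = 0.5;
    float direction_threshold = 0.5;

    std::vector<std::string> color_list = { "yellow", "orange", "green", "gray", "red", "blue", "white",
                                            "golden", "brown", "black" };
    std::vector<std::string> type_list = { "sedan", "suv", "van", "hatchback", "mpv", "pickup", "bus",
                                           "truck", "estate" };
    std::vector<std::string> direction_list = { "forward", "sideward", "backward" };

    // Builds a human-readable result string for one image
    std::string run(std::vector<float>& pred_data);
};

}  // namespace PaddleClas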
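The three label lists fix how the model's flat output vector is split: 10 colors, then 9 types, then 3 directions, which is the order VehicleAttribute::run() assumes. The sketch below derives the group boundaries from those sizes instead of hard-coding 10 and 19, and maps a flat index back to its group. AttrGroup, OutputSlot and locate_output are hypothetical names for illustration, not part of PaddleClas.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <string>

// Group sizes mirror color_list, type_list and direction_list.
constexpr std::size_t kColorNum = 10;
constexpr std::size_t kTypeNum = 9;
constexpr std::size_t kDirectionNum = 3;

// Offsets of each group inside the flat output vector.
constexpr std::size_t kTypeBegin = kColorNum;                        // 10
constexpr std::size_t kDirectionBegin = kColorNum + kTypeNum;        // 19
constexpr std::size_t kOutputNum = kDirectionBegin + kDirectionNum;  // 22
static_assert(kOutputNum == 22, "expected 22 outputs (10 + 9 + 3)");

enum class AttrGroup { Color, Type, Direction };

struct OutputSlot {
    AttrGroup group;
    std::size_t local_index;  // index into the matching *_list
};

// Maps a position in the flat output vector to (group, index within group).
OutputSlot locate_output(std::size_t i) {
    if (i < kTypeBegin) return {AttrGroup::Color, i};
    if (i < kDirectionBegin) return {AttrGroup::Type, i - kTypeBegin};
    if (i < kOutputNum) return {AttrGroup::Direction, i - kDirectionBegin};
    throw std::out_of_range("output index " + std::to_string(i) + " is out of range");
}
```

Keeping the offsets as constexpr values means a change to one label list shows up as a static_assert failure rather than as silently shifted indices.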

3.4.2 postprocess.cpp

// postprocess.cpp
#include "include/postprocess.h"

#include <algorithm>  // std::max_element
#include <iterator>   // std::distance
#include <string>

namespace PaddleClas {

std::string VehicleAttribute::run(std::vector<float>& pred_data) {
    // Output layout: [0, 10) color, [10, 19) type, [19, 22) direction
    const int color_num = 10;
    const int type_num = 9;
    const int direction_num = 3;
    const int type_begin = color_num;                       // 10
    const int direction_begin = color_num + type_num;       // 19
    const int total_num = direction_begin + direction_num;  // 22
    if (static_cast<int>(pred_data.size()) < total_num) {
        return "Unexpected output size: " + std::to_string(pred_data.size());
    }

    // Argmax inside each group; ranges are half-open [first, last)
    auto first = pred_data.begin();
    int index_color = std::distance(first, std::max_element(first, first + type_begin));
    int index_type = std::distance(first + type_begin,
                                   std::max_element(first + type_begin, first + direction_begin));
    int index_direction = std::distance(first + direction_begin,
                                        std::max_element(first + direction_begin, first + total_num));

    std::string color_info, type_info, direction_info;
    if (pred_data[index_color] >= this->color_threshold) {
        color_info = "Color: (" + color_list[index_color] + ", pro: " +
                     std::to_string(pred_data[index_color]) + ")";
    }
    if (pred_data[type_begin + index_type] >= this->type_threshold) {
        type_info = "Type: (" + type_list[index_type] + ", pro: " +
                    std::to_string(pred_data[type_begin + index_type]) + ")";
    }
    if (pred_data[direction_begin + index_direction] >= this->direction_threshold) {
        direction_info = "Direction: (" + direction_list[index_direction] + ", pro: " +
                         std::to_string(pred_data[direction_begin + index_direction]) + ")";
    }

    std::string pred_res = color_info + type_info + direction_info;

    // Append the binarized prediction: 1 if the score exceeds its group's threshold
    pred_res += "pred: ";
    for (int i = 0; i < static_cast<int>(pred_data.size()); ++i) {
        float threshold = i < type_begin        ? color_threshold
                          : i < direction_begin ? type_threshold
                                                : direction_threshold;
        pred_res += pred_data[i] > threshold ? "1, " : "0, ";
    }
    return pred_res;
}

}  // namespace PaddleClas
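To check the decoding scheme (argmax within each group, then a threshold) without building the whole project, it can be exercised on a hand-written score vector. The following is a simplified, self-contained re-implementation for illustration only; argmax_in_range and decode_group are hypothetical names, and the scores used in the test are made up, not real model output.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <iterator>
#include <stdexcept>
#include <string>
#include <vector>

// Index of the largest score in [begin, end), relative to begin.
std::size_t argmax_in_range(const std::vector<float>& scores, std::size_t begin, std::size_t end) {
    if (begin >= end || end > scores.size()) {
        throw std::invalid_argument("invalid score range");
    }
    auto first = scores.begin() + static_cast<std::ptrdiff_t>(begin);
    auto last = scores.begin() + static_cast<std::ptrdiff_t>(end);  // half-open, like std::max_element
    return static_cast<std::size_t>(std::distance(first, std::max_element(first, last)));
}

// Best label of one attribute group starting at offset `begin`,
// or an empty string if its score is below the threshold.
std::string decode_group(const std::vector<float>& scores, std::size_t begin,
                         const std::vector<std::string>& labels, float threshold) {
    std::size_t idx = argmax_in_range(scores, begin, begin + labels.size());
    return scores[begin + idx] >= threshold ? labels[idx] : std::string();
}
```

Passing the group offset explicitly (0, 10 and 19 for this model) keeps the helper independent of how many attribute groups the head has.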

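3.4.3 Add the function declaration to cls.h

Run() in cls.cpp also calls this->vehicle_attribute_op, so cls.h must include postprocess.h and declare that member of Classifier as well:

// At the top of cls.h
#include "include/postprocess.h"

// Inside class Classifier:
// Run predictor for vehicle attribute
void Run(cv::Mat& img, std::vector<float>& out_data, std::string& pred_res,
         std::vector<double>& times);

// Inside class Classifier: post-processor used by Run()
VehicleAttribute vehicle_attribute_op;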

3.4.4 Add the function definition to cls.cpp

// Needs <chrono>, <functional> and <numeric> (std::accumulate, std::multiplies) in cls.cpp.
// times must hold 3 entries: [preprocess, inference, postprocess] in ms.
void Classifier::Run(cv::Mat& img, std::vector<float>& out_data, std::string& pred_res,
                     std::vector<double>& times) {
    cv::Mat srcimg;
    cv::Mat resize_img;
    img.copyTo(srcimg);

    // ---- Preprocessing ----
    auto preprocess_start = std::chrono::system_clock::now();
    // Resize to a fixed size (resize_size_) instead of the default
    // resize-short + center-crop; the two original calls are kept commented out.
    this->resize_op_.Run(img, resize_img, this->resize_size_);
    //this->resize_op_.Run(img, resize_img, this->resize_short_size_);
    //this->crop_op_.Run(resize_img, this->crop_size_);
    this->normalize_op_.Run(&resize_img, this->mean_, this->std_, this->scale_);
    // HWC image -> CHW float buffer for a batch of 1
    std::vector<float> input(1 * 3 * resize_img.rows * resize_img.cols, 0.0f);
    this->permute_op_.Run(&resize_img, input.data());

    auto input_names = this->predictor_->GetInputNames();
    auto input_t = this->predictor_->GetInputHandle(input_names[0]);
    input_t->Reshape({ 1, 3, resize_img.rows, resize_img.cols });
    auto preprocess_end = std::chrono::system_clock::now();

    // ---- Inference ----
    auto infer_start = std::chrono::system_clock::now();
    input_t->CopyFromCpu(input.data());
    this->predictor_->Run();

    auto output_names = this->predictor_->GetOutputNames();
    auto output_t = this->predictor_->GetOutputHandle(output_names[0]);
    std::vector<int> output_shape = output_t->shape();
    // Number of output elements = product of all dimensions (1 x 22 here)
    int out_num = std::accumulate(output_shape.begin(), output_shape.end(), 1,
                                  std::multiplies<int>());
    out_data.resize(out_num);
    output_t->CopyToCpu(out_data.data());
    auto infer_end = std::chrono::system_clock::now();

    // ---- Postprocessing ----
    auto postprocess_start = std::chrono::system_clock::now();
    pred_res = this->vehicle_attribute_op.run(out_data);
    auto postprocess_end = std::chrono::system_clock::now();

    // Elapsed times converted from seconds to milliseconds
    std::chrono::duration<float> preprocess_diff = preprocess_end - preprocess_start;
    times[0] = double(preprocess_diff.count() * 1000);
    std::chrono::duration<float> inference_diff = infer_end - infer_start;
    times[1] = double(inference_diff.count() * 1000);
    std::chrono::duration<float> postprocess_diff = postprocess_end - postprocess_start;
    times[2] = double(postprocess_diff.count() * 1000);
}
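Run() relies on normalize_op_ and permute_op_ to turn the resized image into the flat buffer passed to CopyFromCpu. As far as I can tell from the PaddleClas C++ demo, normalization computes (pixel * scale - mean) / std per channel, and the permute step copies each channel into its own contiguous plane (HWC to CHW). The dependency-free sketch below reproduces those two steps on a raw interleaved byte buffer so the index arithmetic is visible; hwc_to_normalized_chw is a hypothetical name and OpenCV is deliberately left out.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <vector>

// Converts an interleaved HWC image (e.g. already-resized RGB bytes) into a
// normalized CHW float buffer of size channels * height * width.
std::vector<float> hwc_to_normalized_chw(const std::vector<unsigned char>& hwc,
                                         int height, int width, int channels,
                                         const std::vector<float>& mean,
                                         const std::vector<float>& stdv,
                                         float scale) {
    const std::size_t plane = static_cast<std::size_t>(height) * width;
    const std::size_t c_count = static_cast<std::size_t>(channels);
    if (hwc.size() != plane * c_count || mean.size() != c_count || stdv.size() != c_count) {
        throw std::invalid_argument("buffer size or mean/std length does not match the image shape");
    }
    std::vector<float> chw(plane * c_count);
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            const std::size_t pixel = static_cast<std::size_t>(y) * width + x;
            for (std::size_t c = 0; c < c_count; ++c) {
                // scale is typically 1/255 so that pixel values fall into [0, 1]
                float v = hwc[pixel * c_count + c] * scale;
                // Channel c lives in plane c; within a plane, pixels stay in row-major order
                chw[c * plane + pixel] = (v - mean[c]) / stdv[c];
            }
        }
    }
    return chw;
}
```

Because the output is laid out plane by plane, its size matches the 1 * 3 * rows * cols buffer allocated in Run() for a 3-channel image.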

3.4.5 Calling it from main.cpp

// main.cpp: include paths follow the PaddleClas deploy/cpp demo; adjust them to your project
#include <cstdlib>
#include <iostream>
#include <string>
#include <vector>

#include <auto_log/autolog.h>
#include <gflags/gflags.h>
#include <opencv2/core.hpp>
#include <opencv2/core/utils/filesystem.hpp>  // cv::utils::fs::isDirectory
#include <opencv2/imgcodecs.hpp>
#include <opencv2/imgproc.hpp>

#include "include/cls.h"
#include "include/cls_config.h"

using namespace PaddleClas;

DEFINE_string(config,
              "./configs/PULC/vehicle_attribute/inference_vehicle_attribute.yaml",
              "Path of yaml file");
DEFINE_string(c, "", "Path of yaml file");

int main(int argc, char** argv) {
    google::ParseCommandLineFlags(&argc, &argv, true);

    // -config takes precedence over -c
    std::string yaml_path = "";
    if (FLAGS_config == "" && FLAGS_c == "") {
        std::cerr << "[ERROR] usage: " << std::endl
                  << argv[0] << " -c $yaml_path" << std::endl
                  << "or:" << std::endl
                  << argv[0] << " -config $yaml_path" << std::endl;
        exit(1);
    } else if (FLAGS_config != "") {
        yaml_path = FLAGS_config;
    } else {
        yaml_path = FLAGS_c;
    }

    ClsConfig config(yaml_path);
    config.PrintConfigInfo();

    // infer_imgs may be a single image or a directory of images
    std::string path(config.infer_imgs);
    std::vector<std::string> img_files_list;
    if (cv::utils::fs::isDirectory(path)) {
        std::vector<cv::String> filenames;
        cv::glob(path, filenames);
        for (auto f : filenames) {
            img_files_list.push_back(f);
        }
    } else {
        img_files_list.push_back(path);
    }
    std::cout << "img_file_list length: " << img_files_list.size() << std::endl;

    Classifier classifier(config);

    // [preprocess, inference, postprocess] times in ms
    std::vector<double> cls_times = { 0, 0, 0 };
    std::vector<double> cls_times_total = { 0, 0, 0 };
    double infer_time;
    std::vector<float> out_data;
    std::string result;
    int warmup_iter = 5;  // the first 5 images are excluded from the average

    for (int idx = 0; idx < static_cast<int>(img_files_list.size()); ++idx) {
        std::string img_path = img_files_list[idx];
        cv::Mat srcimg = cv::imread(img_path, cv::IMREAD_COLOR);
        if (!srcimg.data) {
            std::cerr << "[ERROR] image read failed! image path: " << img_path << "\n";
            exit(-1);
        }
        // OpenCV loads BGR; the model expects RGB
        cv::cvtColor(srcimg, srcimg, cv::COLOR_BGR2RGB);

        classifier.Run(srcimg, out_data, result, cls_times);

        std::cout << "Current image path: " << img_path << std::endl;
        infer_time = cls_times[0] + cls_times[1] + cls_times[2];
        std::cout << "Current total inferen time cost: " << infer_time << " ms." << std::endl;
        std::cout << "Current inferen result: " << result << " ." << std::endl;

        if (idx >= warmup_iter) {
            for (int i = 0; i < static_cast<int>(cls_times.size()); ++i)
                cls_times_total[i] += cls_times[i];
        }
    }

    if (static_cast<int>(img_files_list.size()) > warmup_iter) {
        infer_time = cls_times_total[0] + cls_times_total[1] + cls_times_total[2];
        std::cout << "average time cost in all: "
                  << infer_time / (img_files_list.size() - warmup_iter) << " ms." << std::endl;
    }

    std::string precision = "fp32";
    if (config.use_fp16)
        precision = "fp16";

    if (config.benchmark) {
        // "1, 3, 224, 224" is only a label for the benchmark log;
        // change it if your model uses a different input size.
        AutoLogger autolog("Classification", config.use_gpu, config.use_tensorrt,
                           config.use_mkldnn, config.cpu_threads, 1,
                           "1, 3, 224, 224", precision, cls_times_total,
                           img_files_list.size());
        autolog.report();
    }
    return 0;
}
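main() uses cv::utils::fs::isDirectory and cv::glob so that infer_imgs can name either a single image or a folder. If you would rather not depend on OpenCV's utils header, the same branching can be written with C++17 std::filesystem. This is an alternative I am suggesting, not what the upstream demo does, and the extension filter in the hypothetical list_images helper is my own assumption (the original hands whatever cv::glob returns to cv::imread).

```cpp
#include <algorithm>
#include <cassert>
#include <cctype>
#include <filesystem>
#include <string>
#include <vector>

namespace fs = std::filesystem;

// Returns the images to process: every .jpg/.jpeg/.png/.bmp regular file in a
// directory (sorted for a stable order), or the path itself if it is not a directory.
std::vector<std::string> list_images(const std::string& path) {
    std::vector<std::string> files;
    if (!fs::is_directory(path)) {
        files.push_back(path);
        return files;
    }
    for (const auto& entry : fs::directory_iterator(path)) {
        if (!entry.is_regular_file()) continue;  // skip sub-directories and special files
        std::string ext = entry.path().extension().string();
        std::transform(ext.begin(), ext.end(), ext.begin(),
                       [](unsigned char ch) { return static_cast<char>(std::tolower(ch)); });
        if (ext == ".jpg" || ext == ".jpeg" || ext == ".png" || ext == ".bmp") {
            files.push_back(entry.path().string());
        }
    }
    std::sort(files.begin(), files.end());
    return files;
}
```

Sorting the result gives the same processing order on every run, which makes the warm-up exclusion and the averaged timings easier to compare between runs.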

4 模型预测

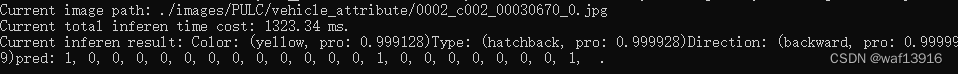

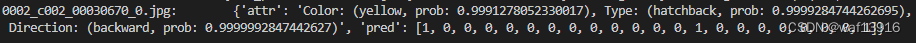

4.1 测试结果

图 4.1 输入图像

图4.2 预测结果

4.2 Comparison with the Python prediction

python deploy\python\predict_cls.py -c .\deploy\configs\PULC\vehicle_attribute\inference_vehicle_attribute.yaml -o Global.pretrained_model=output/PPLCNet_x1_0/best_model
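To compare the two implementations numerically rather than by eye, you can dump the raw output vector from both sides and check the largest element-wise difference. This sketch assumes both outputs are available as std::vector<float> in the same order (how you export them from the Python side is up to you). The 1e-4 default tolerance is an arbitrary example, and small differences may appear if the two preprocessing pipelines resize with different interpolation.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <stdexcept>
#include <vector>

// Largest absolute element-wise difference between two output vectors.
float max_abs_diff(const std::vector<float>& a, const std::vector<float>& b) {
    if (a.size() != b.size()) {
        throw std::invalid_argument("output vectors have different lengths");
    }
    float worst = 0.0f;
    for (std::size_t i = 0; i < a.size(); ++i) {
        worst = std::max(worst, std::fabs(a[i] - b[i]));
    }
    return worst;
}

// True if every element of the two outputs agrees within tol.
bool outputs_match(const std::vector<float>& a, const std::vector<float>& b, float tol = 1e-4f) {
    return max_abs_diff(a, b) <= tol;
}
```

Reporting the maximum difference, not just a pass/fail flag, helps tell a preprocessing mismatch (large differences everywhere) apart from ordinary floating-point noise.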

Source: https://blog.csdn.net/u014377655/article/details/134859690

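This article was submitted by an internet user. The views expressed are the author's own and do not represent the position of this site. This site only provides information storage services, does not own the content, and assumes no related legal liability. If any content infringes rights, violates laws or regulations, or is factually incorrect, please send a complaint to the 我的编程经验分享网 mailbox, veading@qq.com; once verified, it will be removed immediately.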
