Unity Shader Clip-Space Derivation (Using It in a Shader)

2023-12-29 20:34:56


Preface

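In the previous article, we derived the matrix that transforms a perspective camera's view space into clip space.

Here we build on the orthographic-matrix shader and continue testing.

In this article, we plug that matrix into a shader to test it.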

- OpenGL

$$
\begin{bmatrix}
\frac{2n}{w} & 0 & 0 & 0 \\
0 & \frac{2n}{h} & 0 & 0 \\
0 & 0 & \frac{n+f}{n-f} & \frac{2nf}{n-f} \\
0 & 0 & -1 & 0
\end{bmatrix}
$$

- DirectX

$$
\begin{bmatrix}
\frac{2n}{w} & 0 & 0 & 0 \\
0 & \frac{2n}{h} & 0 & 0 \\
0 & 0 & \frac{n}{f-n} & \frac{nf}{f-n} \\
0 & 0 & -1 & 0
\end{bmatrix}
$$

Here n and f are the distances to the near and far planes. w and h are the width and height of the near plane.
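You can check the third and fourth rows by substituting a view-space point on the near plane (z = -n) and one on the far plane (z = -f). This assumes the right-handed view space used in this series, where the camera looks down -Z. The fourth row gives w_clip = -z, so NDC depth is z_clip / w_clip:

$$
\begin{aligned}
\text{OpenGL:}\quad & z=-n:\ \frac{-n\frac{n+f}{n-f}+\frac{2nf}{n-f}}{n}=\frac{n(f-n)}{n(n-f)}=-1,
\qquad z=-f:\ \frac{-f\frac{n+f}{n-f}+\frac{2nf}{n-f}}{f}=\frac{f(n-f)}{f(n-f)}=1 \\
\text{DirectX:}\quad & z=-n:\ \frac{-n\frac{n}{f-n}+\frac{nf}{f-n}}{n}=\frac{n(f-n)}{n(f-n)}=1,
\qquad z=-f:\ \frac{-f\frac{n}{f-n}+\frac{nf}{f-n}}{f}=0
\end{aligned}
$$

The OpenGL matrix therefore maps depth into [-1, 1]. The DirectX matrix maps the near plane to 1 and the far plane to 0, which is the reversed-Z depth convention Unity uses on Direct3D.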

I. Using the transformation matrix in a shader

1. Define the transformation matrix in the vertex shader

- OpenGL:

```hlsl
M_clipP = float4x4(
    2*n/w, 0,     0,           0,
    0,     2*n/h, 0,           0,
    0,     0,     (n+f)/(n-f), (2*n*f)/(n-f),
    0,     0,     -1,          0
);
```

- DirectX:

```hlsl
M_clipP = float4x4(
    2*n/w, 0,     0,       0,
    0,     2*n/h, 0,       0,
    0,     0,     n/(f-n), (n*f)/(f-n),
    0,     0,     -1,      0
);
```
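In these matrices, w and h are the width and height of the near plane, not of the screen. Unity's perspective Camera is described by a vertical field of view, and the near-plane size follows from it by basic trigonometry. The helper below is a hypothetical addition: its name and the `fovYDeg` parameter are not part of the original shader. It is a sketch that assumes a vertical FOV in degrees and an aspect ratio of width / height:

```hlsl
// Hypothetical helper (not part of the original shader): computes the near-plane
// width w and height h from a vertical field of view given in degrees.
void NearPlaneSizeFromFov(float fovYDeg, float aspect, float n, out float w, out float h)
{
    // Half the near-plane height is n * tan(fovY / 2)
    h = 2.0 * n * tan(radians(fovYDeg) * 0.5);
    // aspect = width / height
    w = h * aspect;
}
```

With this h, the 2n/h entry reduces to 1/tan(fovY/2). In the final shader below, `_CameraParams.x` (Size) would then correspond to h / 2.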

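2. Use UNITY_NEAR_CLIP_VALUE to tell the platforms apart

- `-1`: OpenGL (the near plane maps to NDC z = -1)

- `1`: DirectX (reversed Z; the near plane maps to NDC z = 1)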
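The platform selection can be wrapped in a small helper. The sketch below is hypothetical: `BuildPerspectiveClip` is not a Unity function. It assumes URP's Core.hlsl is included so that `UNITY_NEAR_CLIP_VALUE` is defined. Because that macro is a compile-time constant, the compiler should be able to drop the unused branch.

```hlsl
// Hypothetical helper (not a Unity API): builds the perspective view -> clip matrix
// for the current graphics API. Requires URP's Core.hlsl for UNITY_NEAR_CLIP_VALUE.
// n, f: near/far plane distances; w, h: near-plane width/height.
float4x4 BuildPerspectiveClip(float n, float f, float w, float h)
{
    float4x4 m;
    if (UNITY_NEAR_CLIP_VALUE == -1.0)
    {
        // OpenGL: view-space z in [-n, -f] -> NDC z in [-1, 1]
        m = float4x4(
            2*n/w, 0,     0,           0,
            0,     2*n/h, 0,           0,
            0,     0,     (n+f)/(n-f), (2*n*f)/(n-f),
            0,     0,     -1,          0);
    }
    else
    {
        // DirectX (reversed Z): view-space z in [-n, -f] -> NDC z in [1, 0]
        m = float4x4(
            2*n/w, 0,     0,       0,
            0,     2*n/h, 0,       0,
            0,     0,     n/(f-n), (n*f)/(f-n),
            0,     0,     -1,      0);
    }
    return m;
}
```

Inside `vert` this would be called as `float4x4 M_clipP = BuildPerspectiveClip(n, f, w, h);`. An alternative is to branch with `#if UNITY_REVERSED_Z`. `UNITY_NEAR_CLIP_VALUE` is a float, so it cannot be tested in a preprocessor `#if`.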

3. Define an enum property to select the camera type

```hlsl
[Enum(OrthoGraphic,0,Perspective,1)]_CameraType("CameraType",Float) = 0
```

- When transforming manually, use the ternary operator to choose which matrix to apply:

```hlsl
float4x4 M_clip = _CameraType ? M_clipP : M_clipO;
o.vertexCS = mul(M_clip, float4(vertexVS, 1));
```
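After the vertex shader returns `o.vertexCS`, the GPU performs the perspective divide by w_clip. With the perspective matrix, w_clip = -z_view, so for x the result is as follows (w is the near-plane width, not w_clip):

$$
x_{ndc} = \frac{x_{clip}}{w_{clip}} = \frac{\frac{2n}{w}\,x}{-z},
\qquad x = \frac{w}{2},\; z = -n \;\Rightarrow\; x_{ndc} = \frac{\frac{2n}{w}\cdot\frac{w}{2}}{n} = 1
$$

The same reasoning with h gives y_ndc = 1 at the top edge of the near plane. With the orthographic matrix w_clip = 1, so x_ndc = 2x/w regardless of depth.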

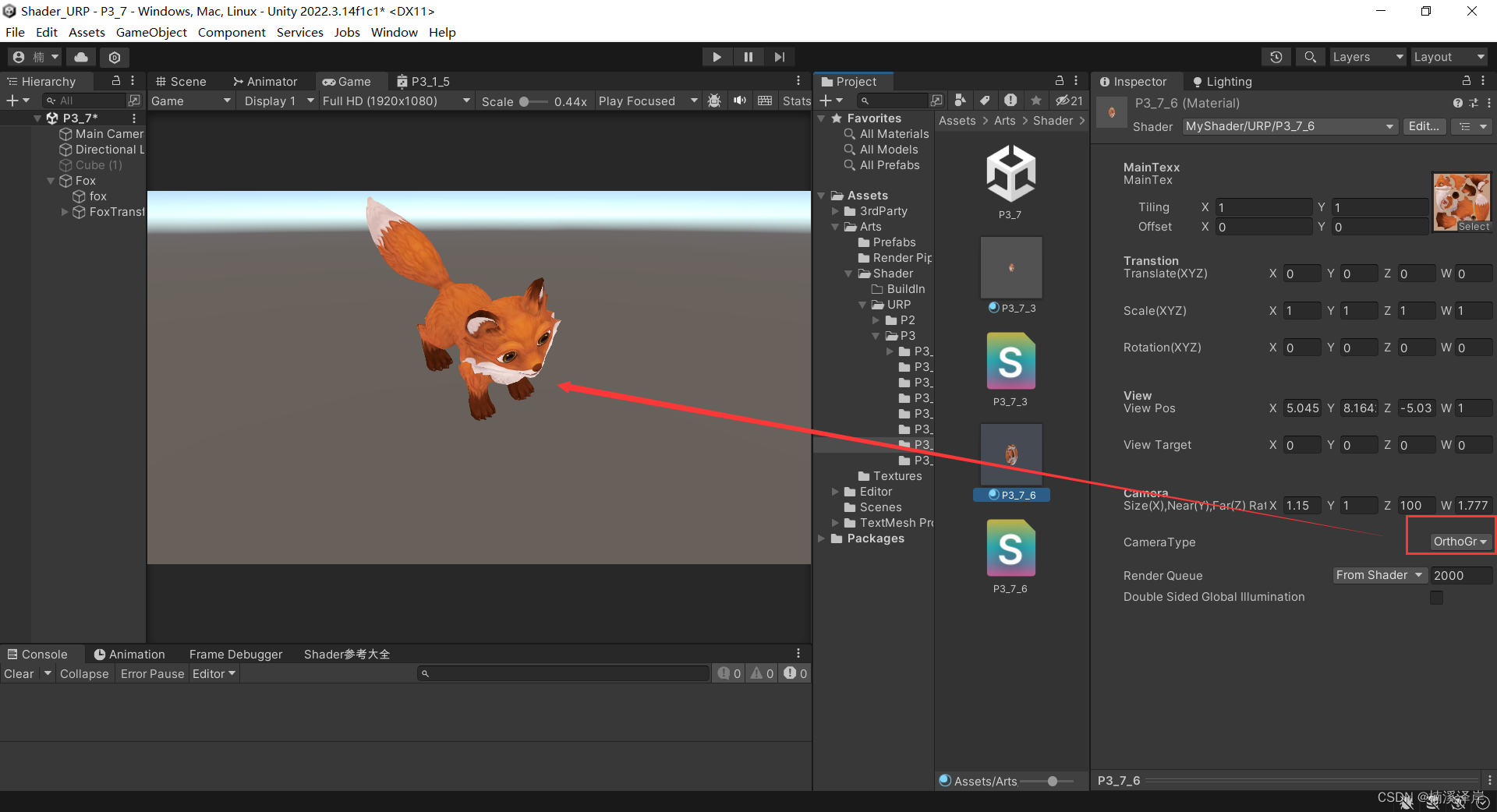

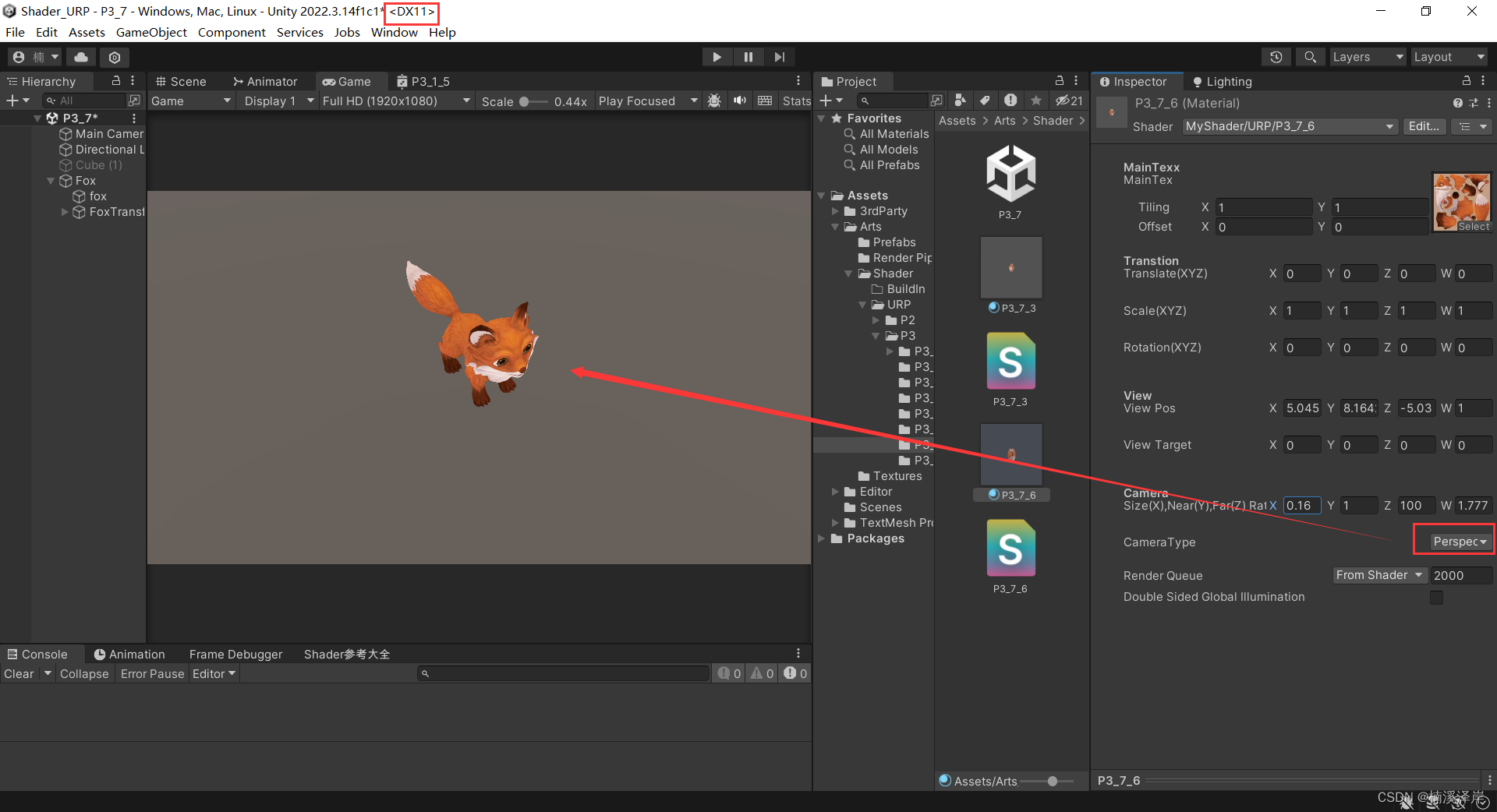

II. Checking the result on DirectX

1. Orthographic camera

2. Perspective camera

3. Final code

```hlsl
// Translation transform
// Scale transform
// Rotation transform (4D homogeneous)
// View-space matrix
// Orthographic camera: view space -> clip space

Shader "MyShader/URP/P3_7_6"
{
    Properties
    {
        [Header(MainTex)]
        _MainTex("MainTex",2D) = "white"{}
        [Header(Transform)]
        _Translate("Translate(XYZ)",Vector) = (0,0,0,0)
        _Scale("Scale(XYZ)",Vector) = (1,1,1,1)
        _Rotation("Rotation(XYZ)",Vector) = (0,0,0,0)
        [Header(View)]
        _ViewPos("View Pos",Vector) = (0,0,0,0)
        _ViewTarget("View Target",Vector) = (0,0,0,0)
        [Header(Camera)]
        _CameraParams("Size(X),Near(Y),Far(Z),Ratio(W)",Vector) = (0,0,0,1.777)
        [Enum(OrthoGraphic,0,Perspective,1)]_CameraType("CameraType",Float) = 0
    }
    SubShader
    {
        Tags
        {
            "RenderPipeline"="UniversalPipeline"
            "RenderType"="Opaque"
            "Queue"="Geometry"
        }
        Pass
        {
            HLSLPROGRAM
            #pragma vertex vert
            #pragma fragment frag
            #include "Packages/com.unity.render-pipelines.core/ShaderLibrary/Color.hlsl"
            #include "Packages/com.unity.render-pipelines.universal/ShaderLibrary/Core.hlsl"
            #include "Packages/com.unity.render-pipelines.universal/ShaderLibrary/Lighting.hlsl"

            struct Attribute
            {
                float4 vertexOS : POSITION;
                float2 uv : TEXCOORD0;
            };

            struct Varying
            {
                float4 vertexCS : SV_POSITION;
                float2 uv : TEXCOORD0;
            };

            CBUFFER_START(UnityPerMaterial)
            float4 _Translate;
            float4 _Scale;
            float4 _Rotation;
            float4 _ViewPos;
            float4 _ViewTarget;
            float4 _CameraParams;
            float _CameraType;
            CBUFFER_END

            TEXTURE2D(_MainTex);
            SAMPLER(sampler_MainTex);

            Varying vert (Attribute v)
            {
                Varying o;
                o.uv = v.uv;

                // Translation
                float4x4 M_Translate = float4x4(
                    1,0,0,_Translate.x,
                    0,1,0,_Translate.y,
                    0,0,1,_Translate.z,
                    0,0,0,1);
                v.vertexOS = mul(M_Translate,v.vertexOS);

                // Scale
                float4x4 M_Scale = float4x4(
                    _Scale.x,0,0,0,
                    0,_Scale.y,0,0,
                    0,0,_Scale.z,0,
                    0,0,0,1);
                v.vertexOS = mul(M_Scale,v.vertexOS);

                // Rotation about X, Y and Z (cos/sin take radians)
                float4x4 M_rotateX = float4x4(
                    1,0,0,0,
                    0,cos(_Rotation.x),sin(_Rotation.x),0,
                    0,-sin(_Rotation.x),cos(_Rotation.x),0,
                    0,0,0,1);
                float4x4 M_rotateY = float4x4(
                    cos(_Rotation.y),0,sin(_Rotation.y),0,
                    0,1,0,0,
                    -sin(_Rotation.y),0,cos(_Rotation.y),0,
                    0,0,0,1);
                float4x4 M_rotateZ = float4x4(
                    cos(_Rotation.z),sin(_Rotation.z),0,0,
                    -sin(_Rotation.z),cos(_Rotation.z),0,0,
                    0,0,1,0,
                    0,0,0,1);
                v.vertexOS = mul(M_rotateX,v.vertexOS);
                v.vertexOS = mul(M_rotateY,v.vertexOS);
                v.vertexOS = mul(M_rotateZ,v.vertexOS);

                // View matrix derivation
                // P_view = [W_view] * P_world
                // P_view = [V_world]^-1 * P_world
                // P_view = [V_world]^T * P_world  (the rotation part is orthonormal, so its inverse is its transpose)
                float3 ViewZ = normalize(_ViewPos.xyz - _ViewTarget.xyz);
                float3 ViewY = float3(0,1,0);
                // Normalize X: cross(ViewZ, up) is only unit length when the view direction is horizontal
                float3 ViewX = normalize(cross(ViewZ,ViewY));
                ViewY = cross(ViewX,ViewZ);
                float4x4 M_viewTemp = float4x4(
                    ViewX.x,ViewX.y,ViewX.z,0,
                    ViewY.x,ViewY.y,ViewY.z,0,
                    ViewZ.x,ViewZ.y,ViewZ.z,0,
                    0,0,0,1);
                float4x4 M_viewTranslate = float4x4(
                    1,0,0,-_ViewPos.x,
                    0,1,0,-_ViewPos.y,
                    0,0,1,-_ViewPos.z,
                    0,0,0,1);
                float4x4 M_view = mul(M_viewTemp,M_viewTranslate);

                float3 vertexWS = TransformObjectToWorld(v.vertexOS.xyz);
                // World space -> view space
                float3 vertexVS = mul(M_view,float4(vertexWS,1)).xyz;

                // Camera parameters
                float h = _CameraParams.x * 2;   // Size is half the height (near-plane height for perspective)
                float w = h * _CameraParams.w;   // width = height * aspect ratio
                float n = _CameraParams.y;       // near plane distance
                float f = _CameraParams.z;       // far plane distance

                // Orthographic projection matrix
                // P_Clip = [M_Clip] * P_view
                float4x4 M_clipO;
                if(UNITY_NEAR_CLIP_VALUE==-1)
                {
                    // OpenGL
                    M_clipO = float4x4(
                        2/w,0,0,0,
                        0,2/h,0,0,
                        0,0,2/(n - f),(n + f) / (n - f),
                        0,0,0,1);
                }
                else
                {
                    // DirectX (UNITY_NEAR_CLIP_VALUE == 1, reversed Z)
                    M_clipO = float4x4(
                        2/w,0,0,0,
                        0,2/h,0,0,
                        0,0,1/(f-n),f/(f-n),
                        0,0,0,1);
                }

                // Perspective projection matrix
                float4x4 M_clipP;
                if(UNITY_NEAR_CLIP_VALUE==-1)
                {
                    // OpenGL
                    M_clipP = float4x4(
                        2*n/w,0,0,0,
                        0,2*n/h,0,0,
                        0,0,(n+f)/(n-f),(2*n*f)/(n-f),
                        0,0,-1,0);
                }
                else
                {
                    // DirectX (UNITY_NEAR_CLIP_VALUE == 1, reversed Z)
                    M_clipP = float4x4(
                        2*n/w,0,0,0,
                        0,2*n/h,0,0,
                        0,0,n/(f-n),(n*f)/(f-n),
                        0,0,-1,0);
                }

                // Manually transform the view-space position into clip space
                float4x4 M_clip = _CameraType ? M_clipP : M_clipO;
                o.vertexCS = mul(M_clip,float4(vertexVS,1));

                // Built-in alternatives: view space -> homogeneous clip space
                //o.vertexCS = TransformWViewToHClip(vertexVS);
                //o.vertexCS = TransformObjectToHClip(v.vertexOS.xyz);
                return o;
            }

            half4 frag (Varying i) : SV_Target
            {
                float4 mainTex = SAMPLE_TEXTURE2D(_MainTex,sampler_MainTex,i.uv);
                return mainTex;
            }
            ENDHLSL
        }
    }
}
```
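The same substitution check works for the orthographic matrices in the final code. Their fourth row is (0, 0, 0, 1), so w_clip = 1 and NDC depth equals z_clip directly:

$$
\begin{aligned}
\text{OpenGL:}\quad & z_{ndc} = \frac{2z}{n-f} + \frac{n+f}{n-f}:\qquad z=-n \Rightarrow \frac{f-n}{n-f} = -1,\qquad z=-f \Rightarrow \frac{n-f}{n-f} = 1 \\
\text{DirectX:}\quad & z_{ndc} = \frac{z}{f-n} + \frac{f}{f-n}:\qquad z=-n \Rightarrow \frac{f-n}{f-n} = 1,\qquad z=-f \Rightarrow 0
\end{aligned}
$$

On each platform the orthographic matrix produces the same depth range as its perspective counterpart, which is what lets `_CameraType` switch between the two without any other change.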

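Source: https://blog.csdn.net/qq_51603875/article/details/135295341

This article was submitted by an internet user. Its views are the author's alone and do not represent this site's position. This site only provides information storage space, does not own the rights, and bears no related legal responsibility. If the content infringes rights, violates laws or regulations, or is factually incorrect, please contact My Programming Experience Sharing Network (我的编程经验分享网) at veading@qq.com to file a complaint. Once verified, it will be removed immediately.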
