大三上实训内容

2023-12-14 02:14:50

项目一:爬取天气预报数据

【内容】

在中国天气网(http://www.weather.com.cn)中输入城市的名称,例如输入信阳,进入http://www.weather.com.cn/weather1d/101180601.shtml#input

的网页显示信阳的天气预报,其中101180601是信阳的代码,每个城市或者地区都有一个代码。如下图所示,请爬取河南所有城市15天的天气预报数据。

1到7天代码

import requests

from bs4 import BeautifulSoup

import csv

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/107.0.0.0 Safari/537.36',

'Accept-Encoding': 'gzip, deflate'

}

city_list = [101180101,101180901,101180801,101180301,101180501,101181101,101180201,101181201,101181501,101180701,101180601,101181401,101181001,101180401,101181701,101181601,101181301]

city_name_dict = {

101180101: "郑州市",

101180901: "洛阳市",

101180801: "开封市",

101180301: "新乡市",

101180501: "平顶山市",

101181101: "焦作市",

101180201: "安阳市",

101181201: "鹤壁市",

101181501: "漯河市",

101180701: "南阳市",

101180601: "信阳市",

101181401: "周口市",

101181001: "商丘市",

101180401: "许昌市",

101181701: "三门峡市",

101181601: "驻马店市",

101181301: "濮阳"

}

# 创建csv文件

with open('河南地级市7天天气情况.csv', 'w', newline='', encoding='utf-8') as csvfile:

csv_writer = csv.writer(csvfile)

# 写入表头

csv_writer.writerow(['City ID', 'City Name', 'Weather Info'])

for city in city_list:

city_id = city

city_name = city_name_dict.get(city_id, "未知城市")

print(f"City ID: {city_id}, City Name: {city_name}")

url = f'http://www.weather.com.cn/weather/{city}.shtml'

response = requests.get(headers=headers, url=url)

soup = BeautifulSoup(response.content.decode('utf-8'), 'html.parser')

# 找到v<div id="7d" class="c7d">标签

v_div = soup.find('div', {'id': '7d'})

# 提取v<div id="7d" class="c7d">下的天气相关的网页信息

weather_info = v_div.find('ul', {'class': 't clearfix'})

# 提取li标签下的内容,每个标签下的分行打印,移除打印结果之间的空格

weather_list = []

for li in weather_info.find_all('li'):

weather_list.append(li.text.strip().replace('\n', ''))

# 将城市ID、城市名称和天气信息写入csv文件

csv_writer.writerow([city_id, city_name, ', '.join(weather_list)])

8到15天的代码

import requests

from bs4 import BeautifulSoup

import csv

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/107.0.0.0 Safari/537.36',

'Accept-Encoding': 'gzip, deflate'

}

city_list = [101180101, 101180901, 101180801, 101180301, 101180501, 101181101, 101180201, 101181201, 101181501,

101180701, 101180601, 101181401, 101181001, 101180401, 101181701, 101181601, 101181301]

city_name_dict = {

101180101: "郑州市",

101180901: "洛阳市",

101180801: "开封市",

101180301: "新乡市",

101180501: "平顶山市",

101181101: "焦作市",

101180201: "安阳市",

101181201: "鹤壁市",

101181501: "漯河市",

101180701: "南阳市",

101180601: "信阳市",

101181401: "周口市",

101181001: "商丘市",

101180401: "许昌市",

101181701: "三门峡市",

101181601: "驻马店市",

101181301: "濮阳"

}

# 创建csv文件

with open('河南地级市8-15天天气情况.csv', 'w', newline='', encoding='utf-8') as csvfile:

csv_writer = csv.writer(csvfile)

# 写入表头

csv_writer.writerow(['City ID', 'City Name', 'Weather Info'])

for city in city_list:

city_id = city

city_name = city_name_dict.get(city_id, "未知城市")

print(f"City ID: {city_id}, City Name: {city_name}")

url = f'http://www.weather.com.cn/weather15d/{city}.shtml'

response = requests.get(headers=headers, url=url)

soup = BeautifulSoup(response.content.decode('utf-8'), 'html.parser')

# 找到v<div id="15d" class="c15d">标签

v_div = soup.find('div', {'id': '15d'})

# 提取v<div id="15d" class="c15d">下的天气相关的网页信息

weather_info = v_div.find('ul', {'class': 't clearfix'})

# 提取li标签下的信息

for li in weather_info.find_all('li'):

time = li.find('span', {'class': 'time'}).text

wea = li.find('span', {'class': 'wea'}).text

tem = li.find('span', {'class': 'tem'}).text

wind = li.find('span', {'class': 'wind'}).text

wind1 = li.find('span', {'class': 'wind1'}).text

csv_writer.writerow([city_id, city_name, f"时间:{time},天气:{wea},温度:{tem},风向:{wind},风力:{wind1}"])

15天代码

import requests

from bs4 import BeautifulSoup

import csv

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/107.0.0.0 Safari/537.36',

'Accept-Encoding': 'gzip, deflate'

}

city_list = [101180101, 101180901, 101180801, 101180301, 101180501, 101181101, 101180201, 101181201, 101181501,

101180701, 101180601, 101181401, 101181001, 101180401, 101181701, 101181601, 101181301]

city_name_dict = {

101180101: "郑州市",

101180901: "洛阳市",

101180801: "开封市",

101180301: "新乡市",

101180501: "平顶山市",

101181101: "焦作市",

101180201: "安阳市",

101181201: "鹤壁市",

101181501: "漯河市",

101180701: "南阳市",

101180601: "信阳市",

101181401: "周口市",

101181001: "商丘市",

101180401: "许昌市",

101181701: "三门峡市",

101181601: "驻马店市",

101181301: "濮阳"

}

# 创建csv文件

with open('河南地级市1-15天天气情况.csv', 'w', newline='', encoding='utf-8') as csvfile:

csv_writer = csv.writer(csvfile)

# 写入表头

csv_writer.writerow(['City ID', 'City Name', 'Weather Info'])

for city in city_list:

city_id = city

city_name = city_name_dict.get(city_id, "未知城市")

print(f"City ID: {city_id}, City Name: {city_name}")

# 爬取1-7天天气情况

url_7d = f'http://www.weather.com.cn/weather/{city}.shtml'

response_7d = requests.get(headers=headers, url=url_7d)

soup_7d = BeautifulSoup(response_7d.content.decode('utf-8'), 'html.parser')

v_div_7d = soup_7d.find('div', {'id': '7d'})

weather_info_7d = v_div_7d.find('ul', {'class': 't clearfix'})

weather_list_7d = []

for li in weather_info_7d.find_all('li'):

weather_list_7d.append(li.text.strip().replace('\n', ''))

# 爬取8-15天天气情况

url_15d = f'http://www.weather.com.cn/weather15d/{city}.shtml'

response_15d = requests.get(headers=headers, url=url_15d)

soup_15d = BeautifulSoup(response_15d.content.decode('utf-8'), 'html.parser')

v_div_15d = soup_15d.find('div', {'id': '15d'})

weather_info_15d = v_div_15d.find('ul', {'class': 't clearfix'})

weather_list_15d = []

for li in weather_info_15d.find_all('li'):

time = li.find('span', {'class': 'time'}).text

wea = li.find('span', {'class': 'wea'}).text

tem = li.find('span', {'class': 'tem'}).text

wind = li.find('span', {'class': 'wind'}).text

wind1 = li.find('span', {'class': 'wind1'}).text

weather_list_15d.append(f"时间:{time},天气:{wea},温度:{tem},风向:{wind},风力:{wind1}")

# 将城市ID、城市名称和天气信息写入csv文件

csv_writer.writerow([city_id, city_name, ', '.join(weather_list_7d+weather_list_15d)])项目二:爬取红色旅游数据

【内容】

?? 信阳是大别山革命根据地,红色旅游资源非常丰富,爬取http://www.bytravel.cn/view/red/index441_list.html 网页的红色旅游景点,并在地图上标注出来。

相关代码

import requests # 导入requests库,用于发送HTTP请求

import csv # 导入csv库,用于处理CSV文件

from bs4 import BeautifulSoup # 导入BeautifulSoup库,用于解析HTML文档

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/107.0.0 Safari/537.36',

'Accept-Encoding': 'gzip, deflate' # 设置请求头,模拟浏览器访问

}

# 创建csv文件并写入表头

csv_file = open('信阳红色景点.csv', 'w', newline='', encoding='utf-8') # 打开csv文件,以写入模式

csv_writer = csv.writer(csv_file) # 创建csv写入对象

csv_writer.writerow(['景点名称', '景点简介', '星级', '图片链接']) # 写入表头

# 爬取第一页

url = 'http://www.bytravel.cn/view/red/index441_list.html' # 定义要爬取的网页URL

response = requests.get(headers=headers, url=url) # 发送GET请求,获取网页内容

soup = BeautifulSoup(response.content.decode('gbk'), 'html.parser') # 使用BeautifulSoup解析网页内容

target_div = soup.find('div', {'style': 'margin:5px 10px 0 10px'}) # 在解析后的HTML中查找目标div

for div in target_div.find_all('div', {'style': 'margin:2px 10px 0 7px;padding:3px 0 0 0'}): # 在目标div中查找所有符合条件的子div

title_element = div.find('a', {'class': 'blue14b'}) # 在子div中查找标题元素

if title_element: # 如果找到了标题元素

title = title_element.text # 获取标题文本

else:

title = "未找到标题" # 如果没有找到标题元素,设置默认值

Introduction_element = div.find('div', id='tctitletop102') # 在子div中查找简介元素

if Introduction_element: # 如果找到了简介元素

intro = Introduction_element.text.strip().replace("[详细]", "") # 获取简介文本,去除首尾空格和"[详细]"标记

else:

intro = "无简介" # 如果没有找到简介元素,设置默认值

star_element = div.find('font', {'class': 'f14'}) # 在子div中查找星级元素

if star_element: # 如果找到了星级元素

star = star_element.text # 获取星级文本

else:

star = "无星级" # 如果没有找到星级元素,设置默认值

img_url_element = div.find('img', {'class': 'hpic'}) # 在子div中查找图片链接元素

if img_url_element: # 如果找到了图片链接元素

img_url = img_url_element['src'] # 获取图片链接

else:

img_url = "无图片链接" # 如果没有找到图片链接元素,设置默认值

print('景点名称:', title) # 打印景点名称

print('景点简介:', intro) # 打印景点简介

print('星级:', star) # 打印星级

print('图片链接:', img_url) # 打印图片链接

# 将数据写入csv文件

csv_writer.writerow([title, intro, star, img_url]) # 将景点名称、简介、星级和图片链接写入csv文件

# 爬取第二页到第五页

for page in range(1, 5): # 遍历第二页到第五页

url = f'http://www.bytravel.cn/view/red/index441_list{page}.html' # 构造每一页的URL

response = requests.get(headers=headers, url=url) # 发送GET请求,获取网页内容

soup = BeautifulSoup(response.content.decode('gbk'), 'html.parser') # 使用BeautifulSoup解析网页内容

target_div = soup.find('div', {'style': 'margin:5px 10px 0 10px'}) # 在解析后的HTML中查找目标div

for div in target_div.find_all('div', {'style': 'margin:2px 10px 0 7px;padding:3px 0 0 0'}): # 在目标div中查找所有符合条件的子div

title_element = div.find('a', {'class': 'blue14b'}) # 在子div中查找标题元素

if title_element: # 如果找到了标题元素

title = title_element.text # 获取标题文本

else:

title = "未找到标题" # 如果没有找到标题元素,设置默认值

Introduction_element = div.find('div', id='tctitletop102') # 在子div中查找简介元素

if Introduction_element: # 如果找到了简介元素

intro = Introduction_element.text.strip().replace("[详细]", "") # 获取简介文本,去除首尾空格和"[详细]"标记

else:

intro = "无简介" # 如果没有找到简介元素,设置默认值

star_element = div.find('font', {'class': 'f14'}) # 在子div中查找星级元素

if star_element: # 如果找到了星级元素

star = star_element.text # 获取星级文本

else:

star = "无星级" # 如果没有找到星级元素,设置默认值

img_url_element = div.find('img', {'class': 'hpic'}) # 在子div中查找图片链接元素

if img_url_element: # 如果找到了图片链接元素

img_url = img_url_element['src'] # 获取图片链接

else:

img_url = "无图片链接" # 如果没有找到图片链接元素,设置默认值

print('景点名称:', title) # 打印景点名称

print('景点简介:', intro) # 打印景点简介

print('星级:', star) # 打印星级

print('图片链接:', img_url) # 打印图片链接

# 将数据写入csv文件

csv_writer.writerow([title, intro, star, img_url]) # 将景点名称、简介、星级和图片链接写入csv文件

# 关闭csv文件

csv_file.close()

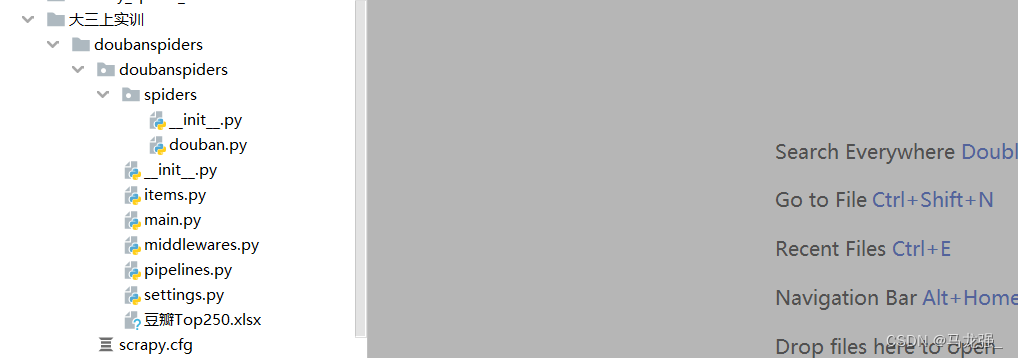

项目三:豆瓣网爬取top250电影数据

【内容】

运用scrapy框架从豆瓣电影top250网站爬取全部上榜的电影信息,并将电影的名称、评分、排名、一句影评、剧情简介分别保存都mysql 和mongodb 库里面。

douban.py

import scrapy # 导入scrapy库

from scrapy import Selector, Request # 从scrapy库中导入Selector和Request类

from scrapy.http import HtmlResponse # 从scrapy库中导入HtmlResponse类

from ..items import DoubanspidersItem # 从当前目录下的items模块中导入DoubanspidersItem类

class DoubanSpider(scrapy.Spider): # 定义一个名为DoubanSpider的爬虫类,继承自scrapy.Spider

name = 'douban' # 设置爬虫的名称为'douban'

allowed_domains = ['movie.douban.com'] # 设置允许爬取的域名为'movie.douban.com'

# start_urls = ['http://movie.douban.com/top250'] # 设置起始URL,但注释掉了,所以不会自动开始爬取

def start_requests(self): # 定义start_requests方法,用于生成初始请求

for page in range(10): # 循环10次,每次生成一个请求,爬取豆瓣电影Top250的前10页数据

yield Request(url=f'https://movie.douban.com/top250?start={page * 25}&filt=') # 使用yield关键字返回请求对象,Scrapy会自动处理请求并调用回调函数

def parse(self, response: HtmlResponse, **kwargs): # 定义parse方法,用于解析响应数据

sel = Selector(response) # 使用Selector类解析响应数据

list_items = sel.css('#content > div > div.article > ol > li') # 使用CSS选择器提取电影列表项

for list_item in list_items: # 遍历电影列表项

detail_url = list_item.css('div.info > div.hd > a::attr(href)').extract_first() # 提取电影详情页的URL

movie_item = DoubanspidersItem() # 创建一个DoubanspidersItem实例

movie_item['name'] = list_item.css('span.title::text').extract_first() # 提取电影名称

movie_item['score'] = list_item.css('span.rating_num::text').extract_first() # 提取电影评分

movie_item['top'] = list_item.css('div.pic em ::text').extract_first() # 提取电影排名

yield Request( # 使用yield关键字返回请求对象,Scrapy会自动处理请求并调用回调函数

url=detail_url, callback=self.parse_movie_info, cb_kwargs={'item': movie_item})

def parse_movie_info(self, response, **kwargs): # 定义parse_movie_info方法,用于解析电影详情页数据

movie_item = kwargs['item'] # 获取传入的DoubanspidersItem实例

sel = Selector(response) # 使用Selector类解析响应数据

movie_item['comment'] = sel.css('div.comment p.comment-content span.short::text').extract_first() # 提取电影评论

movie_item['introduction'] = sel.css('span[property="v:summary"]::text').extract_first().strip() or '' # 提取电影简介

yield movie_item # 返回处理后的DoubanspidersItem实例,Scrapy会自动处理并保存结果

items.py

import scrapy

class DoubanspidersItem(scrapy.Item):

# define the fields for your item here like:

# name = scrapy.Field()

# pass

top = scrapy.Field()

name = scrapy.Field()

score = scrapy.Field()

introduction = scrapy.Field()

comment = scrapy.Field()pipelines.py

from itemadapter import ItemAdapter

import openpyxl

import pymysql

class DoubanspidersPipeline:

def __init__(self):

self.conn = pymysql.connect(

host='localhost',

port=3306,

user='root',

password='789456MLq',

db='sx_douban250',

charset='utf8mb4'

)

self.cursor = self.conn.cursor()

self.data = []

def close_spider(self,spider):

if len(self.data) > 0:

self._write_to_db()

self.conn.close()

def process_item(self, item, spider):

self.data.append(

(item['top'],item['name'],item['score'],item['introduction'],item['comment'])

)

if len(self.data) == 100:

self._writer_to_db()

self.data.clear()

return item

def _writer_to_db(self):

self.cursor.executemany(

'insert into doubantop250 (top,name,score,introduction,comment)'

'values (%s,%s,%s,%s,%s)',

self.data

)

self.conn.commit()

from pymongo import MongoClient

class MyMongoDBPipeline:

def __init__(self):

self.client = MongoClient('mongodb://localhost:27017/')

self.db = self.client['sx_douban250']

self.collection = self.db['doubantop250']

self.data = []

def close_spider(self, spider):

if len(self.data) > 0:

self._write_to_db()

self.client.close()

def process_item(self, item, spider):

self.data.append({

'top': item['top'],

'name': item['name'],

'score': item['score'],

'introduction': item['introduction'],

'comment': item['comment']

})

if len(self.data) == 100:

self._write_to_db()

self.data.clear()

return item

def _write_to_db(self):

self.collection.insert_many(self.data)

self.data.clear()

class ExcelPipeline:

def __init__(self):

self.wb = openpyxl.Workbook()

self.ws = self.wb.active

self.ws.title = 'Top250'

self.ws.append(('排名','评分','主题','简介','评论'))

def open_spider(self,spider):

pass

def close_spider(self,spider):

self.wb.save('豆瓣Top250.xlsx')

def process_item(self,item,spider):

self.ws.append(

(item['top'], item['name'], item['score'], item['introduction'], item['comment'])

)

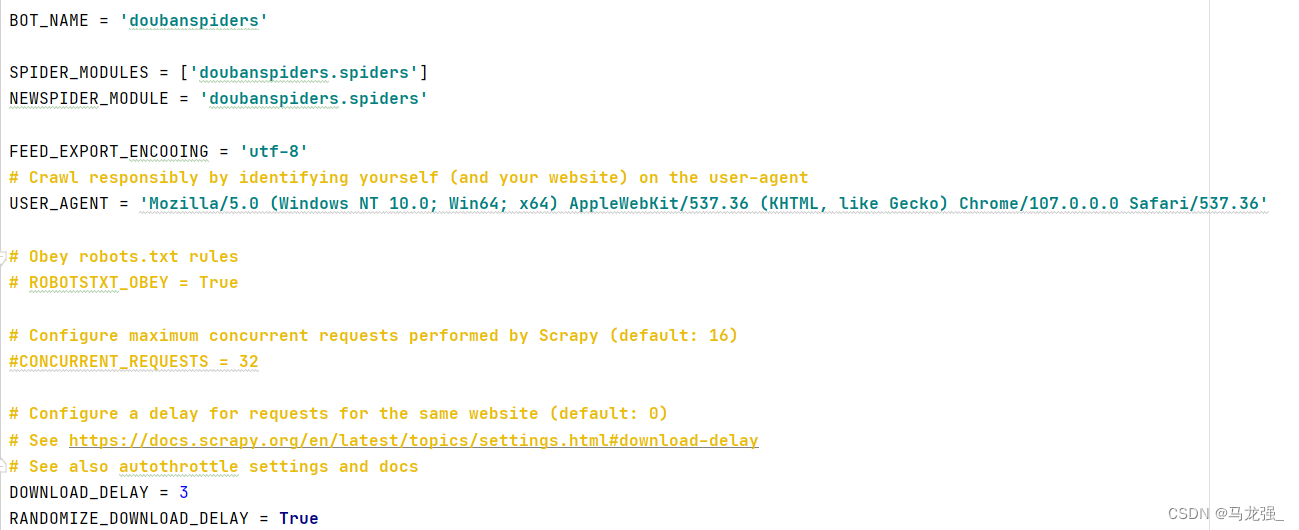

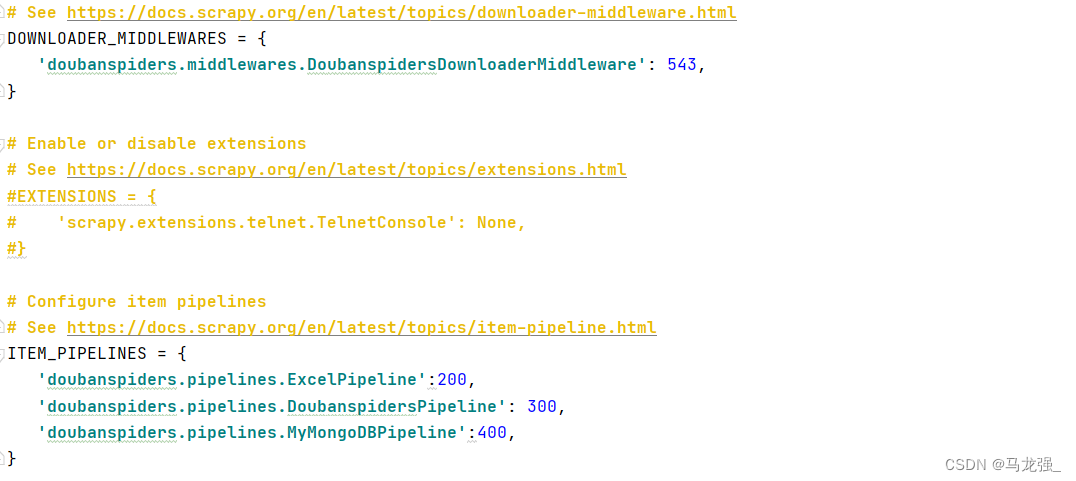

return itemsettings.py相关内容修改

文章来源:https://blog.csdn.net/m0_74972727/article/details/134884967

本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。 如若内容造成侵权/违法违规/事实不符,请联系我的编程经验分享网邮箱:veading@qq.com进行投诉反馈,一经查实,立即删除!

本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。 如若内容造成侵权/违法违规/事实不符,请联系我的编程经验分享网邮箱:veading@qq.com进行投诉反馈,一经查实,立即删除!