Literature Digest: Multimodal Radiomics Paper Sharing: Multimodal Graph Attention Network for COVID-19 Outcome Prediction


01

Paper Overview

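When dealing with a newly emerging disease such as COVID-19, the impact of patient- and disease-specific factors (e.g., body weight or known comorbidities) on the immediate course of the disease is largely unknown. An accurate prediction of the most likely individual disease progression can improve the planning of limited resources and help find the optimal treatment for patients. In the case of COVID-19, whether a pneumonia patient needs admission to the intensive care unit (ICU) can often only be determined on short notice from acute indicators (e.g., breathing rate, blood oxygen levels), whereas statistical analysis and decision support systems that integrate all available data could enable an earlier prognosis. To this end, the authors propose a holistic, multimodal, graph-based approach that combines imaging and non-imaging information. Specifically, they introduce a multimodal similarity metric to build a population graph that shows a clustering of patients. For each patient in the graph, radiomic features are extracted from a segmentation network that also serves as a latent image feature encoder. These features are combined with clinical patient data (such as vital signs, demographics, and laboratory results) into a multimodal representation of each patient. The feature extraction is trained end-to-end with an image-based Graph Attention Network that processes the population graph and predicts the outcomes of COVID-19 patients: ICU admission, need for ventilation, and mortality. To combine multiple modalities, radiomic features are extracted from chest CTs using a segmentation neural network. Results on a dataset collected at Klinikum rechts der Isar in Munich, Germany, and on the publicly available iCTCF dataset show that the approach outperforms single-modality and non-graph baselines. Moreover, the clustering and graph attention improve the understanding of patient relationships within the population graph and provide insight into the network's decision-making process.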

Title

Multimodal graph attention network for COVID-19 outcome prediction

Methods

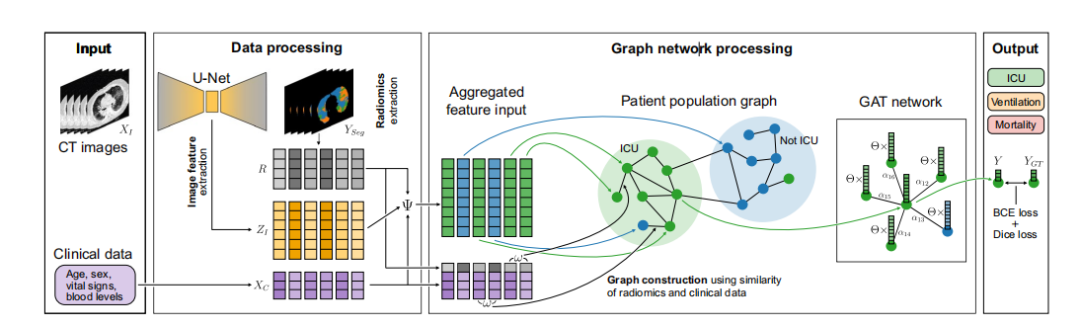

Our proposed method provides an effective way to process multimodal patient information, such as CT images X_I combined with clinical data X_C, for disease outcome prediction, as shown in Fig. 1. For a COVID-19 patient admitted to the hospital, we predict three outcomes on our in-house dataset: the need for ICU admission, the need for mechanical ventilation, and the survival of the patient. For the iCTCF dataset, we predict severity. Additionally, we use the segmentation of COVID-19 pathologies as an auxiliary target to improve training. From the segmentation output, we calculate radiomic features R that represent the relative burden of the lung for each pathology class. To effectively incorporate the different modalities, we introduce a new framework that combines the segmentation capabilities of U-Net with the analytic strengths of GCNs. This network uses a population graph, constructed from the similarity of clinical patient data X_C and radiomic features R, to refine the image features of each patient. The proposed method operates end-to-end to combine image feature representation learning, U-Net image segmentation, and graph data processing. The graph is pre-computed before training. At test time, patients are dynamically connected to the graph of patients in the training set, which ensures that no data leaks during training and allows flexible use in a clinical setting.
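The "relative burden of the lung for each pathology class" can be illustrated with a short NumPy sketch. It is a minimal example under stated assumptions rather than the authors' implementation: the function name `pathology_burden`, the label convention (0 for background or healthy tissue, 1 to `num_classes` for pathology classes), and the separate boolean lung mask are all hypothetical.

```python
import numpy as np

def pathology_burden(seg, lung_mask, num_classes):
    """Fraction of lung voxels assigned to each pathology class.

    seg        : integer label volume; 0 = background/healthy and
                 1..num_classes = pathology classes (assumed convention)
    lung_mask  : boolean volume of the same shape marking lung voxels
    """
    lung_voxels = int(lung_mask.sum())
    if lung_voxels == 0:
        # No lung found: report zero burden instead of dividing by zero.
        return np.zeros(num_classes)
    labels_in_lung = seg[lung_mask]
    # Count voxels per label, then drop label 0 (not a pathology).
    counts = np.bincount(labels_in_lung, minlength=num_classes + 1)
    return counts[1:num_classes + 1] / lung_voxels

# Toy volume: 7 lung voxels, two voxels of class 1 and one of class 2.
seg = np.zeros((2, 2, 2), dtype=int)
seg[0, 0, 0] = 1
seg[0, 0, 1] = 1
seg[1, 1, 1] = 2
lung_mask = np.ones((2, 2, 2), dtype=bool)
lung_mask[1, 1, 0] = False
R = pathology_burden(seg, lung_mask, num_classes=2)
```

Each entry of `R` lies between 0 and 1, so a vector of this kind can sit next to normalized clinical variables in a distance computation without further scaling.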

Conclusions

In this work, we developed and evaluated a method to effectively leverage multimodal information for the outcome prediction of COVID-19 patients. This information, in the form of CT lung scans, clinical data, and radiomics, was incorporated into a graph structure and processed within a GAT to stabilize and support the prediction based on data similarity. With U-GAT, we propose an end-to-end methodology that segments patient pathologies in medical images and uses a combination of imaging and non-imaging data to predict clinical outcomes. We explicitly incorporate automatically extracted lung radiomics in our architecture and demonstrate increased performance. We show that the auxiliary segmentation of COVID-19 pathologies indeed improves outcome prediction. To create the patient population graph, we propose a novel graph construction based on feature weighting with mutual information, which effectively clusters relevant patients. Our attention analysis adds a layer of transparency, potentially increasing clinicians' confidence in our predictive approach. This added clarity can help identify comparable patients from previous cases, thus informing and guiding the treatment trajectory for the patient currently under consideration. This study underscores the potential of graph-based, data-driven strategies that use multiple modalities to improve patient care and decision-making in challenging clinical settings.

Figure

Figure 1. U-GAT is an end-to-end model that integrates learned image and radiomic features (Z_I and R) with clinical metadata X_C (such as age, sex, vital signs, and blood levels) for disease outcome prediction. Segmentation Y_Seg of the disease-affected area in CT images X_I aids in extracting the radiomic features R and regularizes the extraction of the image features Z_I. These features are combined into a multimodal vector by a function. Test patients cluster with training patients in a graph based on the radiomic and clinical data feature distance ω. A Graph Attention Network (GAT) then refines the features to predict the most probable outcome Y, using a learned linear transformation and patient attention coefficients α_ij. The prediction is compared to the outcome ground truth Y_GT with binary cross-entropy (BCE), while the Dice loss supports the auxiliary segmentation task with manual ground truth. In the COVID-19 context, we segment lung CT image pathologies and predict patient ICU admission, ventilation need, and survival for the KRI dataset, and severity for the iCTCF dataset (not shown here).
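The two loss terms named in the Figure 1 caption, BCE for the outcome and Dice for the auxiliary segmentation, can be sketched in NumPy as follows. This is an illustrative sketch only: the function names, the soft (probability-based) Dice formulation, and the `seg_weight` balancing factor are assumptions, since the excerpt does not say how the two terms are weighted.

```python
import numpy as np

def bce_loss(prob, target, eps=1e-7):
    """Binary cross-entropy between predicted probabilities and 0/1 targets."""
    prob = np.clip(prob, eps, 1.0 - eps)  # avoid log(0)
    return float(-np.mean(target * np.log(prob) + (1 - target) * np.log(1 - prob)))

def soft_dice_loss(prob, target, eps=1e-7):
    """1 - Dice coefficient, computed on soft predictions for one class."""
    intersection = np.sum(prob * target)
    denom = np.sum(prob) + np.sum(target)
    return float(1.0 - (2.0 * intersection + eps) / (denom + eps))

def joint_loss(outcome_prob, outcome_gt, seg_prob, seg_gt, seg_weight=1.0):
    """Outcome BCE plus weighted segmentation Dice loss.

    seg_weight is a hypothetical hyperparameter, not taken from the paper.
    """
    return bce_loss(outcome_prob, outcome_gt) + seg_weight * soft_dice_loss(seg_prob, seg_gt)

# Toy batch: two patients with one outcome each, and a 2x2 segmentation map.
outcome_prob = np.array([0.9, 0.2])
outcome_gt = np.array([1.0, 0.0])
seg_prob = np.array([[0.8, 0.1], [0.7, 0.0]])
seg_gt = np.array([[1.0, 0.0], [1.0, 0.0]])
loss = joint_loss(outcome_prob, outcome_gt, seg_prob, seg_gt)
```

In an end-to-end setup, the gradient of the Dice term flows through the same encoder that produces the image features, which is one way to read the caption's statement that segmentation "regularizes" feature extraction.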

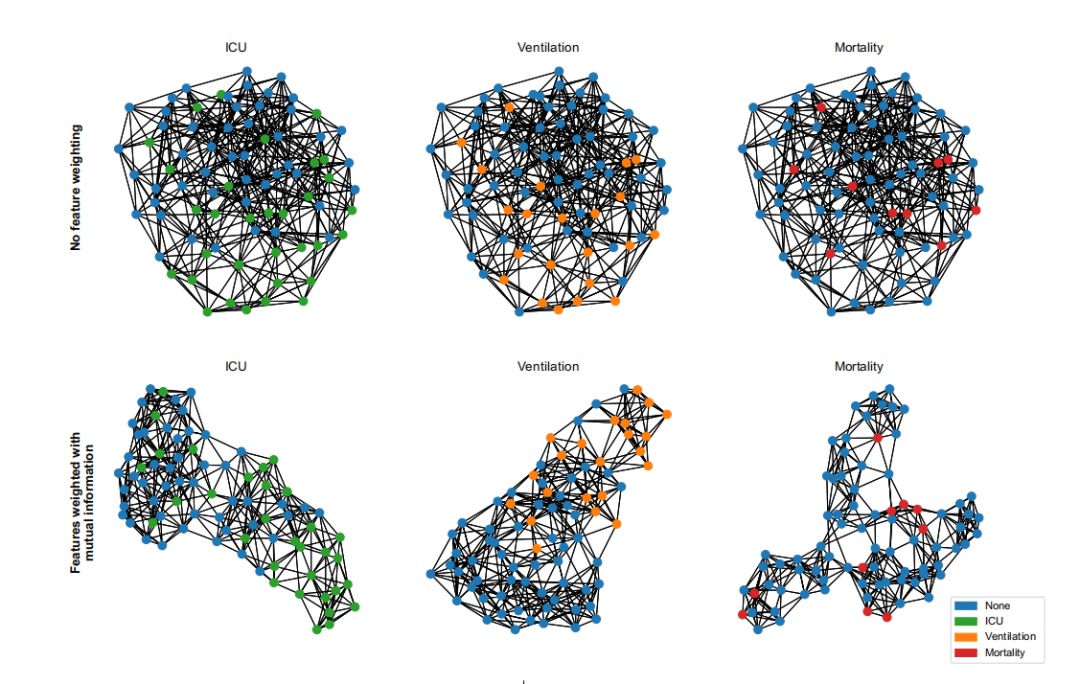

Figure 2. The initial patient clustering, visualized for the KRI dataset, is based on clinical and radiomic feature similarity. The top row displays graphs created by linking each node to its seven nearest neighbors based on Euclidean distance. To optimize this graph construction for the task at hand, we propose weighting the features in the distance calculation by their task-specific mutual information [56] (bottom row). This prioritizes essential features in the clustering and tailors the graph to specific tasks without needing feature selection or prior knowledge.
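The graph construction in Figure 2 (seven nearest neighbors under a mutual-information-weighted distance) can be sketched with NumPy alone. Several details here are assumptions and not taken from the paper: the histogram-based mutual information estimate, the normalization of the weights to sum to one, the way the weights scale squared feature differences, and all function names. The same neighbor search is reused to attach an unseen test patient to training patients only, as the Methods section describes.

```python
import numpy as np

def binned_mutual_information(feature, labels, bins=8):
    """Plug-in MI estimate (nats) between a continuous feature and discrete labels."""
    feature = np.asarray(feature, dtype=float).ravel()
    labels = np.asarray(labels).ravel()
    edges = np.histogram_bin_edges(feature, bins=bins)
    f_idx = np.digitize(feature, edges[1:-1])          # bin index in 0..bins-1
    classes, y_idx = np.unique(labels, return_inverse=True)
    joint = np.zeros((bins, len(classes)))
    np.add.at(joint, (f_idx, y_idx), 1.0)              # joint histogram
    joint /= joint.sum()
    pf = joint.sum(axis=1, keepdims=True)              # marginal of the feature
    py = joint.sum(axis=0, keepdims=True)              # marginal of the label
    nz = joint > 0
    return float(np.sum(joint[nz] * np.log(joint[nz] / (pf @ py)[nz])))

def mi_feature_weights(X, y, bins=8):
    """One non-negative weight per feature column, normalized to sum to 1."""
    mi = np.array([binned_mutual_information(X[:, j], y, bins) for j in range(X.shape[1])])
    total = mi.sum()
    if total <= 0:
        return np.full(X.shape[1], 1.0 / X.shape[1])   # fall back to uniform weights
    return mi / total

def weighted_knn_edges(X_query, X_ref, weights, k=7, exclude_self=False):
    """Indices of the k nearest reference rows for every query row."""
    diff = X_query[:, None, :] - X_ref[None, :, :]
    dist = np.sqrt(np.sum(weights * diff ** 2, axis=2))
    if exclude_self:                                   # only valid when X_query is X_ref
        np.fill_diagonal(dist, np.inf)
    return np.argsort(dist, axis=1)[:, :k]

# Toy data: feature 0 follows the label, feature 1 is noise.
rng = np.random.default_rng(0)
y_train = rng.integers(0, 2, size=60)
X_train = np.column_stack([y_train + 0.1 * rng.normal(size=60), rng.normal(size=60)])
w = mi_feature_weights(X_train, y_train)
train_edges = weighted_knn_edges(X_train, X_train, w, k=7, exclude_self=True)
# A test patient is linked to training patients only, never to other test patients.
x_test = np.array([[1.0, 0.0]])
test_edges = weighted_knn_edges(x_test, X_train, w, k=7)
```

Computing the weights from training labels only, as in this sketch, keeps label information from the test set out of the graph. Features are assumed to be standardized beforehand so that the weights, and not the raw units, determine each feature's influence.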

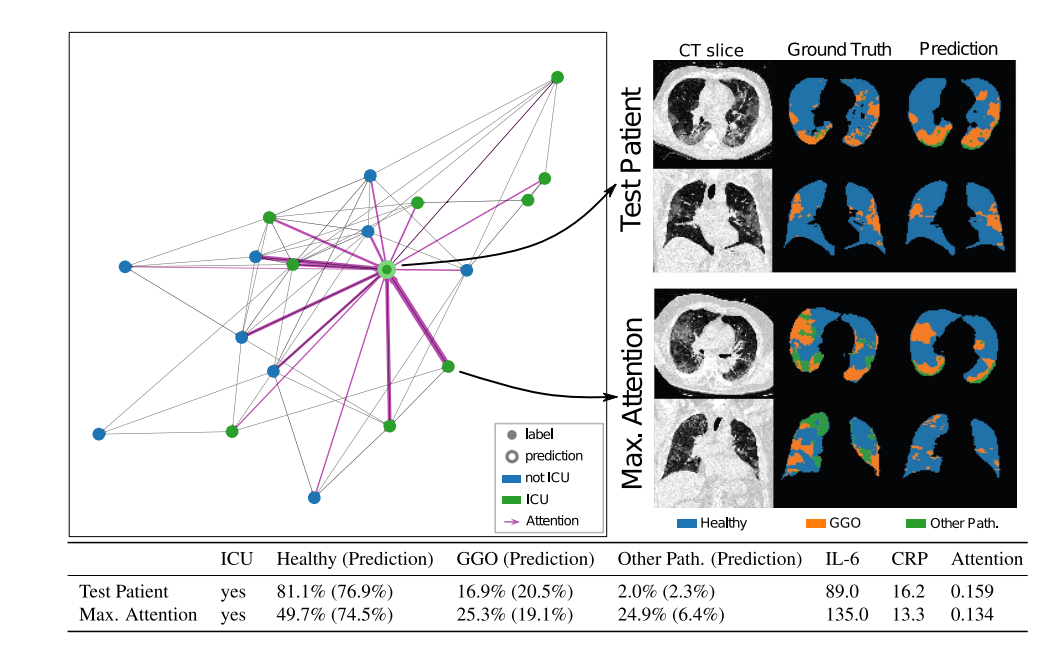

Figure 3. KRI dataset. Left: Batch graph showing the attention scores of a single test patient. The line thickness corresponds to the respective neighbor's attention score after two hops. Right: CT images, segmentation ground truth, and predicted segmentation of a single axial and coronal slice from the test patient and the neighbor with maximum attention. Bottom: Most important features for the test patient and the neighbor with maximum attention. In brackets, the radiomics predicted by the pretrained U-Net are shown.
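The attention scores visualized in Figure 3 can be related to the standard single-head GAT formulation, in which a score for each edge is computed from the linearly transformed features of both endpoints, passed through a LeakyReLU, and normalized with a softmax over each node's neighbors. The NumPy sketch below follows that general formulation; it is not the authors' code, and the names, shapes, and random parameters are illustrative. Multiplying the attention matrices of two layers is shown as one possible reading of "attention score after two hops"; the excerpt does not state how the paper aggregates attention across layers.

```python
import numpy as np

def gat_attention(H, adj, W, a, negative_slope=0.2):
    """Single-head GAT attention; alpha[i, j] is how much node i attends to node j.

    H   : (N, F) node features
    adj : (N, N) boolean adjacency; every row needs at least one True entry
          (self-loops are assumed here)
    W   : (F, F_out) linear transformation (random here, learned in practice)
    a   : (2 * F_out,) attention vector (random here, learned in practice)
    """
    Z = H @ W
    f_out = Z.shape[1]
    src = Z @ a[:f_out]                    # term depending on the attending node i
    dst = Z @ a[f_out:]                    # term depending on the neighbor j
    e = src[:, None] + dst[None, :]        # equals a^T [Z_i || Z_j]
    e = np.where(e > 0, e, negative_slope * e)        # LeakyReLU
    e = np.where(adj, e, -np.inf)          # mask out non-neighbors
    e = e - e.max(axis=1, keepdims=True)   # numerically stable softmax
    weights = np.exp(e)
    return weights / weights.sum(axis=1, keepdims=True)

# Toy population graph: 4 patients on a ring, with self-loops.
rng = np.random.default_rng(0)
n, f_in, f_out = 4, 5, 3
adj = np.eye(n, dtype=bool)
for i in range(n):
    adj[i, (i + 1) % n] = True
    adj[i, (i - 1) % n] = True
H = rng.normal(size=(n, f_in))
alpha_1 = gat_attention(H, adj, rng.normal(size=(f_in, f_out)), rng.normal(size=2 * f_out))
alpha_2 = gat_attention(H, adj, rng.normal(size=(f_in, f_out)), rng.normal(size=2 * f_out))
# Assumed aggregation: entry [i, k] sums attention over all two-step paths i -> j -> k.
two_hop = alpha_2 @ alpha_1
```

For simplicity the second layer here reuses the input features `H`; in a real two-layer GAT it would operate on the output of the first layer. Each row of `alpha_1`, `alpha_2`, and `two_hop` sums to one, so the entries of a row can be read as relative edge weights for that patient.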

Table

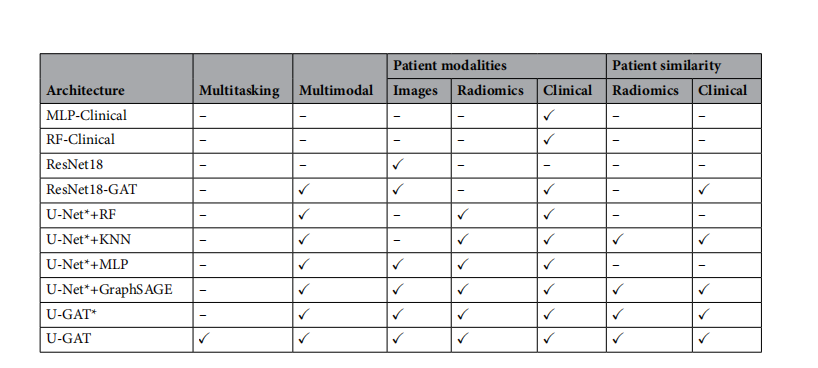

Table 1. Backbones and classifiers used for evaluation, with the respective patient features and the distance metric (similarity). "Images" denotes the latent image features extracted with an image encoder. "Radiomics" stands for the radiomics extracted from the segmentation networks. "Clinical data" includes vital signs, blood values, and demographic information. We compare U-GAT to other end-to-end trained methods: one using only clinical data (MLP-Clinical), one using only image data (ResNet18), and a GAT with a CNN backbone without an auxiliary segmentation task (ResNet18-GAT). In addition, we compare the performance of different classifiers on the image features extracted from a frozen U-Net (marked with a dedicated symbol in the table). KNN is a k-nearest neighbors classifier. GraphSAGE is a graph convolutional method without an attention mechanism [57]. Multi-task refers to the joint training of classification and segmentation.

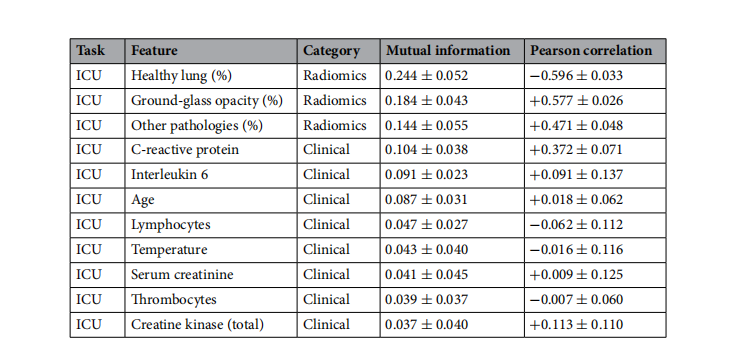

Table 2. Top 10 features for each task in the KRI dataset, sorted by mutual information and listed with their Pearson correlation. The averages are calculated on the training sets of all repetitions.

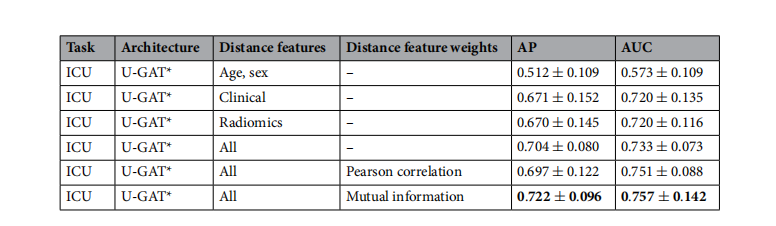

Table 3. Evaluation of the edge features and their weighting used for distance calculation on the validation set of the KRI dataset. Highest values are in bold.

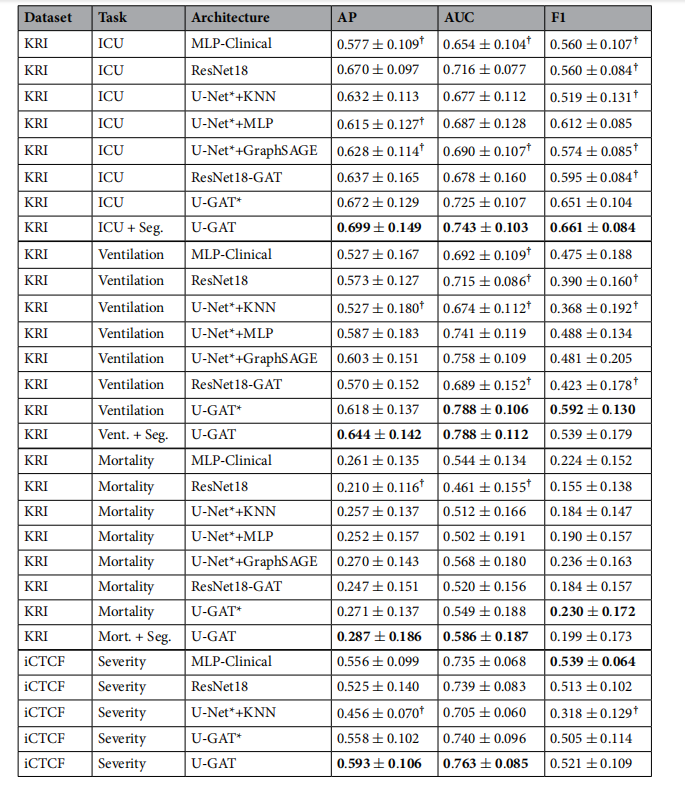

Table 4. Ablative testing and comparison with an MLP using only clinical data and a ResNet18 using only image data as input, on all tasks. Highest values per task are in bold. U-GAT* refers to the proposed method using image and radiomic features extracted from a frozen U-Net trained on the same annotations as the end-to-end U-GAT. Marked values indicate statistical significance with p < 0.05, based on the Wilcoxon rank test comparing the proposed method with every other baseline.

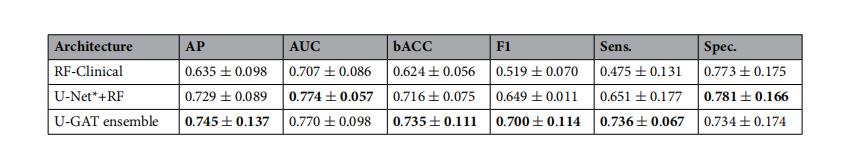

Table 5. Comparative analysis of ICU outcome prediction on the KRI dataset: U-GAT vs. its cross-validation ensemble, a random forest model using only clinical data, and another random forest model incorporating all available tabular data, including radiomics extracted with a pretrained U-Net. Highest values are in bold.
