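[Ops Knowledge, Expert Series] Kubernetes Tutorial 2: The Ops World's Super Tool (Writing Resource Manifests + Image Pull Policies + Container Restart Policies + Resource Limits in Detail + Port Mapping in Detail + K8s Troubleshooting Techniques)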

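This article continues the Kubernetes series and focuses on more advanced Pod topics: writing resource manifests, image pull policies, container restart policies, resource limits, port mapping, and five K8s troubleshooting techniques.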


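Writing Resource Manifests

Make good use of kubectl explain to look up field documentation, and consult the official documentation as well.

For passing environment variables and running two projects from a single resource manifest, see the hands-on project in the previous article.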

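Image Pull Policy

The image pull policy determines how K8s obtains the image when it starts a container. There are three policies.

Always: every time the container starts, the kubelet asks the registry for the image's current digest. If an image with exactly that digest is already cached locally it is reused; otherwise the image is pulled and replaces the local copy with the same tag.

Never: the kubelet never tries to pull the image. If the image exists locally, it is used to start the container; if it does not, the container fails to start.

IfNotPresent: the image is pulled only if it is not already present locally. If a local image with the same name and tag exists, it is used as-is without contacting the registry, even when the registry holds a different image under that tag (the IfNotPresent test below confirms this).

When imagePullPolicy is omitted, the default is Always if the image tag is latest (or no tag is given), and IfNotPresent for any other tag.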
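The defaulting rule above can be sketched in a few lines of Python. This is only an illustration of the rule as described, not Kubernetes code; the function name `default_image_pull_policy` is made up for this example.

```python
def default_image_pull_policy(image: str) -> str:
    """Policy Kubernetes falls back to when imagePullPolicy is omitted (sketch)."""
    # A digest reference (name@sha256:...) pins exact content, so no re-pull is needed.
    if "@" in image:
        return "IfNotPresent"
    # The tag follows a ':' in the LAST path component; a ':' earlier in the
    # string belongs to a registry port such as localhost:5000/app.
    last_component = image.rsplit("/", 1)[-1]
    if ":" not in last_component:
        return "Always"  # no tag means :latest is implied
    tag = last_component.rsplit(":", 1)[1]
    return "Always" if tag == "latest" else "IfNotPresent"


if __name__ == "__main__":
    for ref in ("harbor.koten.com/koten-linux/alpine:latest",
                "harbor.koten.com/koten-tools/stress:v0.1"):
        print(ref, "->", default_image_pull_policy(ref))
```

Both image names come from this article; for the first the sketch yields Always, for the second IfNotPresent.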

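We will push an image to the registry in advance, prepare a local image with the same name, then change the policy in the resource manifest and check whether the image was pulled from the registry.

1. On worker232, build an image that creates both the koten-linux1 and koten-linux2 directories and push it to the registry, delete the local copy, then build another image with the same name that creates only koten-linux1

# Write the Dockerfile for the image to be pushed to the registry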

[root@Master232 dockerfile]# cat dockerfile

FROM alpine:latest

RUN mkdir /koten-linux1

RUN mkdir /koten-linux2

CMD ["tail","-f","/etc/hosts"]

# Build the image from the Dockerfile

[root@Master232 dockerfile]# docker build -t harbor.koten.com/koten-linux/alpine:latest .

# Push the image to the private registry

[root@Master232 dockerfile]# docker push harbor.koten.com/koten-linux/alpine:latest

# Delete the local image; the node now has no local copy

[root@Master232 dockerfile]# docker rmi harbor.koten.com/koten-linux/alpine:latest

Untagged: harbor.koten.com/koten-linux/alpine:latest

Untagged: harbor.koten.com/koten-linux/alpine@sha256:64e6642cba7dae211c954797d59e42368dcb3e12ee03d4a443660382548b827c

Deleted: sha256:46b6cef27ac0c2db9e67e6242986f4bf5eb229cc94adece6071ae9792985c6bf

Deleted: sha256:9838d1c75c25086c17bdfd0f7ae5d8b7fe10260797065e0e6670b6c7f4de3e37

Deleted: sha256:ebddcc1cca4e837f6c4a3242d887d07aae17266cd02a41333571a504bc726bb6

# Change the Dockerfile so it creates only one directory

[root@Master232 dockerfile]# cat dockerfile

FROM alpine:latest

RUN mkdir /koten-linux1

CMD ["tail","-f","/etc/hosts"]

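# Build the image again

[root@Master232 dockerfile]# docker build -t harbor.koten.com/koten-linux/alpine:latest .

2. Write the resource manifest and test the image

At this point the registry image creates two directories, while the local image creates only one. We now run it through the resource manifest.

Test the IfNotPresent pull policy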

[root@Master231 pod]# cat 05-pods-download.yaml

apiVersion: v1
kind: Pod
metadata:
  name: image-pull-policy
spec:
  # nodeName: worker233
  nodeName: worker232
  containers:
  - name: image-pull-policy
    image: harbor.koten.com/koten-linux/alpine:latest
    # Image pull policy
    # imagePullPolicy: Always
    # imagePullPolicy: Never
    imagePullPolicy: IfNotPresent

[root@Master231 pod]# kubectl apply -f 05-pods-download.yaml

[root@Master231 pod]# kubectl get pods

NAME READY STATUS RESTARTS AGE

image-pull-policy 1/1 Running 0 47s

# Only koten-linux1 exists: the local image was used, so nothing was pulled

[root@Master231 pod]# kubectl exec -it image-pull-policy -- ls /

bin koten-linux1 opt sbin usr

dev lib proc srv var

etc media root sys

home mnt run tmp

Test the Always pull policy

[root@Master231 pod]# cat 05-pods-download.yaml

apiVersion: v1
kind: Pod
metadata:
  name: image-pull-policy
spec:
  # nodeName: worker233
  nodeName: worker232
  containers:
  - name: db
    image: harbor.koten.com/koten-linux/alpine:latest
    # Image pull policy
    imagePullPolicy: Always
    # imagePullPolicy: Never
    # imagePullPolicy: IfNotPresent

[root@Master231 pod]# kubectl delete pods --all

[root@Master231 pod]# kubectl apply -f 05-pods-download.yaml

pod/image-pull-policy created

# Both directories exist, so the image was pulled from the registry

[root@Master231 pod]# kubectl exec -it image-pull-policy -- ls /

bin koten-linux1 mnt run tmp

dev koten-linux2 opt sbin usr

etc lib proc srv var

home media root sys

Test the Never pull policy

[root@Master231 pod]# cat 05-pods-download.yaml

apiVersion: v1
kind: Pod
metadata:
  name: image-pull-policy
spec:
  # nodeName: worker233
  nodeName: worker232
  containers:
  - name: db
    image: harbor.koten.com/koten-linux/alpine:latest
    # Image pull policy
    # imagePullPolicy: Always
    imagePullPolicy: Never
    # imagePullPolicy: IfNotPresent

[root@Master231 pod]# kubectl apply -f 05-pods-download.yaml

pod/image-pull-policy created

# With Never, the local image is used; the previous pull overwrote it, so it now contains both directories

[root@Master231 pod]# kubectl exec -it image-pull-policy -- ls /

bin koten-linux1 mnt run tmp

dev koten-linux2 opt sbin usr

etc lib proc srv var

home media root sys

# Delete the local image and apply the manifest again

[root@Worker232 dockerfile]# docker image rm -f harbor.koten.com/koten-linux/alpine:latest

Untagged: harbor.koten.com/koten-linux/alpine:latest

Untagged: harbor.koten.com/koten-linux/alpine@sha256:a5b9fbd47d6897edcf1f6a8d6df13bbc1005c54241256069653499c53e66bc2c

# No pull is attempted, and the container fails to start

[root@Master231 pod]# kubectl apply -f 05-pods-download.yaml

pod/image-pull-policy created

[root@Master231 pod]# kubectl exec -it image-pull-policy -- ls /

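error: unable to upgrade connection: container not found ("db")

Container Restart Policy

The restart policy determines whether a new container is created when a container exits. There are three values.

Always: always restart the container (create a new one) when it exits. This is the default restart policy.

Never: do not restart the container (do not create a new one) when it exits.

OnFailure: restart the container (create a new one) only when it exits abnormally, that is, with a non-zero exit code (after kill -9 the exit code is 137, not 0). When the container exits normally with exit code 0, it is not restarted. The tests below use docker stop to produce a normal exit; the actual exit code depends on how the main process handles the stop signal.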
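The three policies boil down to a small decision on the exit code. The following is a minimal sketch of that decision as described above, not kubelet source code; `should_restart` and `exit_code_for_signal` are names invented for this illustration.

```python
def exit_code_for_signal(signum: int) -> int:
    """Exit status reported for a process killed by a signal: 128 + signal number."""
    return 128 + signum


def should_restart(policy: str, exit_code: int) -> bool:
    """Decide whether an exited container is restarted under the given restartPolicy."""
    if policy == "Always":
        return True
    if policy == "OnFailure":
        return exit_code != 0  # only abnormal exits trigger a restart
    if policy == "Never":
        return False
    raise ValueError(f"unknown restartPolicy: {policy!r}")


if __name__ == "__main__":
    killed = exit_code_for_signal(9)  # kill -9 is SIGKILL, giving 137
    for policy in ("Always", "OnFailure", "Never"):
        print(policy, "after kill -9:", should_restart(policy, killed),
              "| after exit 0:", should_restart(policy, 0))
```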

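When a container in a Pod exits, the kubelet restarts it with an exponential back-off delay (10s, 20s, 40s, ...) capped at 5 minutes. Once a container has run for 10 minutes without problems, the kubelet resets the back-off delay for that container.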
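To make the back-off sequence concrete, here is a small sketch that reproduces the delays quoted above (doubling from 10 seconds, capped at 300 seconds). It models only the arithmetic; the function name and defaults are assumptions for this example, and the real kubelet timing may differ in detail.

```python
from typing import List


def restart_delays(restarts: int, base: int = 10, cap: int = 300) -> List[int]:
    """Delay in seconds before each of the first `restarts` restarts."""
    # Double the delay each time, but never exceed the 5-minute cap.
    return [min(base * 2 ** i, cap) for i in range(restarts)]


if __name__ == "__main__":
    print(restart_delays(7))
```

After the fifth restart the computed delay (320s) would exceed the cap, so every later restart waits the full 300 seconds until the back-off is reset.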

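Notes:

1. Whatever the restart policy is, when we manually remove the container with docker, K8s brings it back up automatically and does not record a restart;

2. When a container exits abnormally (this can be simulated with kill -9), both Always and OnFailure restart the container and record the restart;

3. When the task exits normally (docker stop, docker rm), only Always restarts it and records the restart. In the author's tests, however, Always sometimes failed to record a normal exit: the container was restarted after being stopped, but the count did not increase;

4. Deleting Pods with kubectl delete pods --all does not trigger the restart policy.

Write a resource manifest and run it with each of the three restart policies in turn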

[root@Master231 pod]# cat 06-pods-restartPolicy.yaml

apiVersion: v1
kind: Pod
metadata:
  name: image-restart-policy-01
spec:
  nodeName: worker232
  # Container restart policy
  restartPolicy: Always
  # restartPolicy: Never
  # restartPolicy: OnFailure
  containers:
  - name: db
    image: harbor.koten.com/koten-linux/alpine:latest
    imagePullPolicy: Always
    # Override the command the container runs
    command: ["sleep","60"]

Test the Always restart policy

[root@Master231 pod]# kubectl apply -f 06-pods-restartPolicy.yaml

pod/image-restart-policy-01 created

[root@Master231 pod]# kubectl get pods

NAME READY STATUS RESTARTS AGE

image-restart-policy-01 1/1 Running 0 6s

[root@Worker232 dockerfile]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

91da1ebbe971 harbor.koten.com/koten-linux/alpine "sleep 60" 18 seconds ago Up 17 seconds k8s_db_image-restart-policy-01_default_428e644e-f9c4-41a2-8460-afd16bf8d5bf_0

# Stop the container with docker; it is restarted immediately

[root@Worker232 dockerfile]# docker stop 91da1ebbe971

91da1ebbe971

[root@Worker232 dockerfile]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

4c5515f0c9ed harbor.koten.com/koten-linux/alpine "sleep 60" 4 seconds ago Up 3 seconds k8s_db_image-restart-policy-01_default_428e644e-f9c4-41a2-8460-afd16bf8d5bf_1

[root@Worker232 dockerfile]# ps -axu|grep sleep

root 74726 0.0 0.0 1568 252 ? Ss 20:36 0:00 sleep 60

root 74902 0.0 0.0 112808 968 pts/0 S+ 20:37 0:00 grep --color=auto sleep

[root@Worker232 dockerfile]# kill -9 74726

[root@Worker232 dockerfile]# ps -axu|grep sleep

root 75004 0.0 0.0 112808 968 pts/0 S+ 20:37 0:00 grep --color=auto sleep

# After a short wait, the container is back

[root@Worker232 dockerfile]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

15ccf66844ca harbor.koten.com/koten-linux/alpine "sleep 60" 9 seconds ago Up 8 seconds k8s_db_image-restart-policy-01_default_428e644e-f9c4-41a2-8460-afd16bf8d5bf_2

# The restart count is recorded and can be seen from the master node

[root@Master231 pod]# kubectl get pods

NAME READY STATUS RESTARTS AGE

image-restart-policy-01 0/1 CrashLoopBackOff 2 (20s ago) 2m59s

Test the Never restart policy

[root@Master231 pod]# cat 06-pods-restartPolicy.yaml

apiVersion: v1
kind: Pod
metadata:
  name: image-restart-policy-01
spec:
  nodeName: worker232
  # Container restart policy
  # restartPolicy: Always
  restartPolicy: Never
  # restartPolicy: OnFailure
  containers:
  - name: db
    image: harbor.koten.com/koten-linux/alpine:latest
    imagePullPolicy: Always
    # Override the command the container runs
    command: ["sleep","60"]

[root@Master231 pod]# kubectl delete pods --all

pod "image-restart-policy-01" deleted

[root@Master231 pod]# kubectl apply -f 06-pods-restartPolicy.yaml

pod/image-restart-policy-01 created

[root@Worker232 dockerfile]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

e817061abc25 harbor.koten.com/koten-linux/alpine "sleep 60" 12 seconds ago Up 10 seconds k8s_db_image-restart-policy-01_default_0c9b4e80-3fd7-475a-85c0-9ddca90b7860_0

[root@Worker232 dockerfile]# docker stop e817061abc25

# After the stop, the Pod status becomes Error (the container exited abnormally) and it is not started again

[root@Master231 pod]# kubectl get pods

NAME READY STATUS RESTARTS AGE

image-restart-policy-01 0/1 Error 0 58s

Test the OnFailure restart policy

[root@Master231 pod]# cat 06-pods-restartPolicy.yaml

apiVersion: v1
kind: Pod
metadata:
  name: image-restart-policy-01
spec:
  nodeName: worker232
  # Container restart policy
  # restartPolicy: Always
  # restartPolicy: Never
  restartPolicy: OnFailure
  containers:
  - name: db
    image: harbor.koten.com/koten-linux/alpine:latest
    imagePullPolicy: Always
    # Override the command the container runs
    command: ["sleep","60"]

[root@Master231 pod]# kubectl delete pods --all

pod "image-restart-policy-01" deleted

[root@Master231 pod]# kubectl apply -f 06-pods-restartPolicy.yaml

pod/image-restart-policy-01 created

[root@Worker232 dockerfile]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

c3d84673ec3a harbor.koten.com/koten-linux/alpine "sleep 60" 10 seconds ago Up 10 seconds k8s_db_image-restart-policy-01_default_80ba68c5-18b0-4529-9cd0-a8baa7bb370c_0

# After an abnormal exit, the container is restarted

[root@Worker232 dockerfile]# ps -axu|grep sleep

root 78357 0.0 0.0 1568 252 ? Ss 20:43 0:00 sleep 60

root 78710 0.0 0.0 112808 964 pts/0 S+ 20:44 0:00 grep --color=auto sleep

[root@Worker232 dockerfile]# kill -9 78357

[root@Worker232 dockerfile]# ps -axu|grep sleep

root 78813 0.0 0.0 112808 968 pts/0 S+ 20:44 0:00 grep --color=auto sleep

[root@Worker232 dockerfile]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

dce4aa08b442 harbor.koten.com/koten-linux/alpine "sleep 60" 32 seconds ago Up 32 seconds k8s_db_image-restart-policy-01_default_80ba68c5-18b0-4529-9cd0-a8baa7bb370c_2

# After a normal exit, the container is not restarted

[root@Worker232 dockerfile]# docker stop dce4aa08b442

dce4aa08b442

# The status is now Completed (the container exited normally)

[root@Master231 pod]# kubectl get pods

NAME READY STATUS RESTARTS AGE

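image-restart-policy-01 0/1 Completed 2 4m6s

Resource Limits in Detail

Parameters written in the resource manifest can limit the resources of Pods and Namespaces, for example how much CPU and memory a container inside a Pod may use.

Pull the stress-testing tool image

1. Pull the test image, tag it, and push it to the private Harbor registry

First create a project named koten-tools in Harbor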

docker pull bosskoten/stress-tools:v1.0

docker tag bosskoten/stress-tools:v1.0 harbor.koten.com/koten-tools/stress:v0.1

docker push harbor.koten.com/koten-tools/stress:v0.1

2. Write the resource manifest

[root@Master231 pod]# cat 07-pods-resources.yaml

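apiVersion: v1
kind: Pod
metadata:
  name: image-resources-stress-04
spec:
  # nodeName: worker232
  restartPolicy: Always
  containers:
  - name: stress
    # image: bosskoten/stress-tools:v1.0
    image: harbor.koten.com/koten-tools/stress:v0.1
    imagePullPolicy: Always
    command: ["tail","-f","/etc/hosts"]
    # Configure resource requests and limits
    resources:
      # requests: the resources the container expects. If they cannot be satisfied,
      # the Pod cannot be scheduled and its status shows an abnormal state.
      # The container will not necessarily use this much; it is an estimate of what the workload needs.
      # If the request exceeds what is available, the status is shown as OutOfmemory.
      requests:
        # Memory request
        # memory: "2G"
        memory: "200M"
        # 1 core = 1000m
        cpu: "250m"
      # limits: the upper bound on resources. A container that exceeds its memory limit
      # is terminated (OOM-killed); CPU usage above the limit is throttled, which slows the workload.
      limits:
        memory: "500M"
        # 1.5 cores, i.e. 1500m
        cpu: 1.5
        # cpu: 0.5

3. Run the resource manifest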

[root@Master231 pod]# kubectl apply -f 07-pods-resources.yaml

pod/image-resources-stress-04 created

[root@Master231 pod]# kubectl get pods

NAME READY STATUS RESTARTS AGE

image-resources-stress-04 1/1 Running 0 10s

[root@Master231 pod]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

image-resources-stress-04 1/1 Running 0 24s 10.100.1.10 worker232 <none> <none>

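4. Run a stress test and check whether usage exceeds the limits

# With the manifest applied, find the container on the worker node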

[root@Worker232 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

1123a7e941a2 harbor.koten.com/koten-tools/stress "tail -f /etc/hosts" About a minute ago Up About a minute k8s_stress_image-resources-stress-04_default_7c870e99-76e7-42a1-807b-8b81bdca5645_0

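# CPU stress test: start four CPU workers and stop after 1 minute

[root@Master231 pod]# kubectl exec -it image-resources-stress-04 -- sh

/usr/local/stress # stress -c 4 --verbose --timeout 1m

# Although four CPU workers were started, CPU usage holds at about 150%, matching the 1.5-core limit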

[root@Worker232 ~]# docker stats 1123a7e941a2

CONTAINER ID NAME CPU % MEM USAGE / LIMIT MEM % NET I/O BLOCK I/O PIDS

1123a7e941a2 k8s_stress_image-resources-stress-04_default_7c870e99-76e7-42a1-807b-8b81bdca5645_0 149.25% 316KiB / 476.8MiB 0.06% 0B / 0B 1.52MB / 0B 7
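The numbers in the docker stats output line up with the manifest once the units are converted. The sketch below is a simplified parser written for this article (it handles only a subset of Kubernetes quantity suffixes, and the function names are made up); it shows why a 500M limit appears as 476.8MiB and why cpu: 1.5 corresponds to about 150%.

```python
from decimal import Decimal

# Decimal suffixes are powers of 1000, binary suffixes are powers of 1024.
_FACTORS = {
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40,
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12,
}


def memory_bytes(quantity: str) -> int:
    """Convert a memory quantity such as '500M' or '500Mi' to bytes."""
    for suffix, factor in _FACTORS.items():
        if quantity.endswith(suffix):
            return int(Decimal(quantity[: -len(suffix)]) * factor)
    return int(Decimal(quantity))  # plain number of bytes


def cpu_millicores(quantity: str) -> int:
    """Convert a CPU quantity such as '250m' or '1.5' to millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return int(Decimal(quantity) * 1000)


if __name__ == "__main__":
    print(round(memory_bytes("500M") / 2**20, 1), "MiB memory limit")
    print(cpu_millicores("1.5") / 10, "% CPU ceiling in docker stats terms")
    print(round(2 * 200_000_000 / 2**20, 1), "MiB for two 200000000-byte workers")
```

Note that M means 10^6 bytes while Mi means 2^20 bytes, so "500M" and "500Mi" are different limits.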

# Start 2 worker processes, each allocating 200000000 bytes (200 MB) and holding it without release; total usage will not exceed 400 MB.

/usr/local/stress # stress -m 2 --vm-bytes 200000000 --vm-keep --verbose

# Memory usage is about 400 MB, and the limit column shows roughly 500 MB

CONTAINER ID NAME CPU % MEM USAGE / LIMIT MEM % NET I/O BLOCK I/O PIDS

1123a7e941a2 k8s_stress_image-resources-stress-04_default_7c870e99-76e7-42a1-807b-8b81bdca5645_0 150.69% 383.1MiB / 476.8MiB 80.34% 0B / 0B 4.39MB / 0B 5

Port Mapping in Detail

There are six ways to reach a Pod's service from outside the K8s cluster: hostNetwork; hostIP; a svc of type nodePort; the apiServer's reverse proxy, which also goes through the svc's ClusterIP; kube-proxy; and ingress.

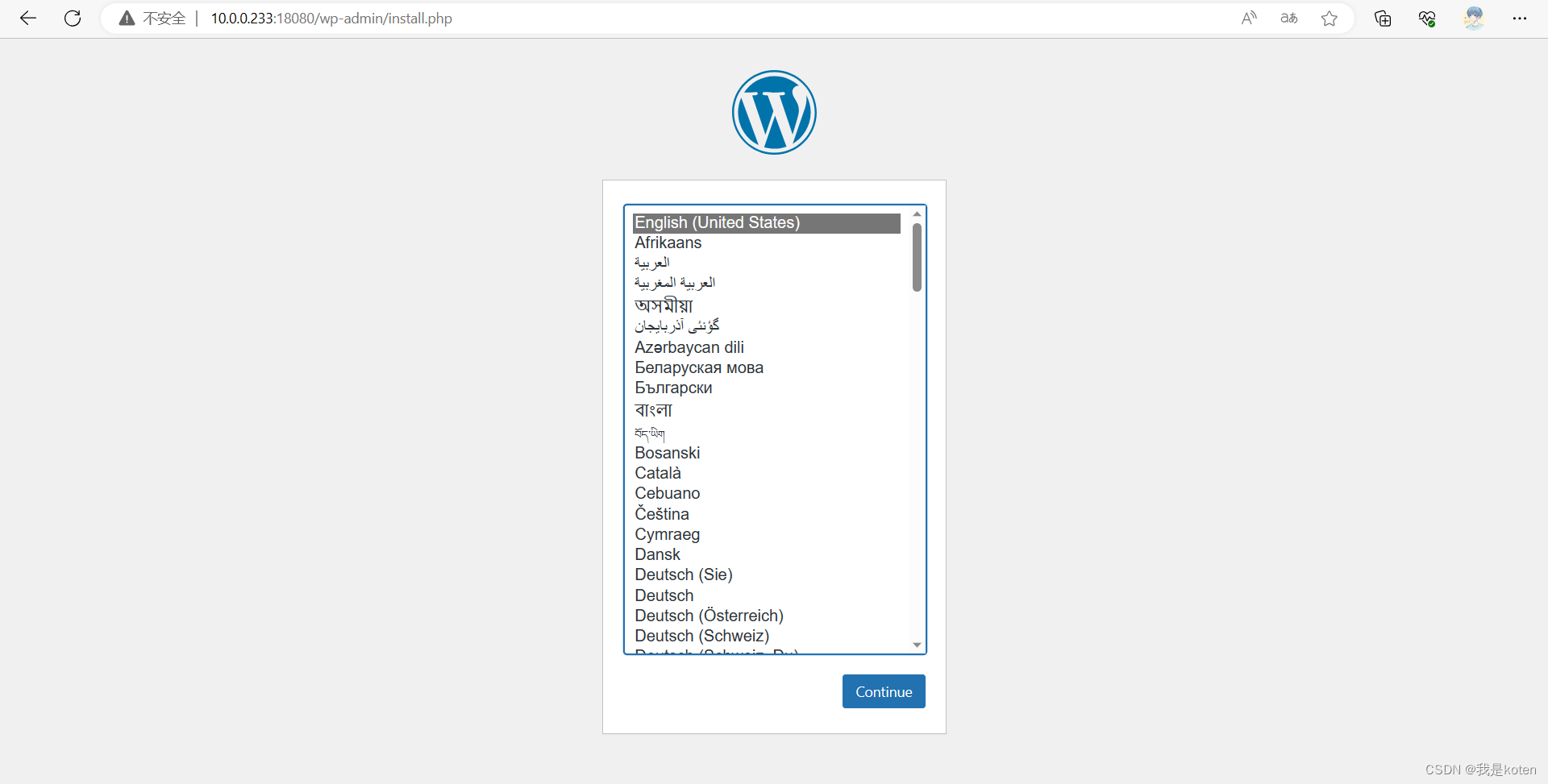

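The previous article demonstrated hostNetwork and port mapping in its hands-on project. Here we use WordPress as an example to explain hostIP port mapping in detail.

1. First write the resource manifest. The port mappings can all be declared on one container or split across the containers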

[root@Master231 pod]# cat 08-pods-ports.yaml

apiVersion: v1
kind: Pod
metadata:
  name: wordpress
spec:
  #hostNetwork: true
  nodeName: worker233
  containers:
  - name: db
    image: harbor.koten.com/koten-db/mysql:5.7
    # Pass environment variables to the container
    env:
    # Variable name
    - name: MYSQL_ALLOW_EMPTY_PASSWORD
      # Variable value
      value: "yes"
    - name: MYSQL_DATABASE
      value: "wordpress"
    - name: MYSQL_USER
      value: "admin"
    - name: MYSQL_PASSWORD
      value: "123"
    ports:
    # Container port
    - containerPort: 3306
      # Host IP address the port is bound to; "0.0.0.0" if omitted
      hostIP: 10.0.0.233
      # Host port to map to
      hostPort: 13306
      # Name for the port; must be unique within the Pod
      name: db
      # Protocol
      protocol: TCP
    #- containerPort: 80
    #  hostIP: 10.0.0.233
    #  hostPort: 18080
    #  name: wp
  - name: wordpress
    image: harbor.koten.com/koten-web/wordpress:v1.0
    env:
    - name: WORDPRESS_DB_HOST
      # Variable value
      value: "127.0.0.1"
    - name: WORDPRESS_DB_NAME
      value: "wordpress"
    - name: WORDPRESS_DB_USER
      value: "admin"
    - name: WORDPRESS_DB_PASSWORD
      value: "123"
    ports:
    - containerPort: 80
      hostIP: 10.0.0.233
      hostPort: 18080
      name: wp

2. Run the resource manifest

[root@Master231 pod]# kubectl apply -f 08-pods-ports.yaml

pod/wordpress created

[root@Master231 pod]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

wordpress 2/2 Running 0 5s 10.100.2.9 worker233 <none> <none>

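3. Checking the listening ports shows no socket for the mapped port, but the forwarding rule can be found in the iptables NAT table, and curling the Pod IP directly also works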

[root@Worker233 ~]# ss -ntl|grep 18080

[root@Worker233 ~]# iptables -t nat -vnL|grep 18080

0 0 CNI-HOSTPORT-SETMARK tcp -- * * 10.100.2.0/24 10.0.0.233 tcp dpt:18080

0 0 CNI-HOSTPORT-SETMARK tcp -- * * 127.0.0.1 10.0.0.233 tcp dpt:18080

0 0 DNAT tcp -- * * 0.0.0.0/0 10.0.0.233 tcp dpt:18080 to:10.100.2.9:80

0 0 CNI-DN-7391794342a07a3e211cd tcp -- * * 0.0.0.0/0 0.0.0.0/0 /* dnat name: "cbr0" id: "33057eacf048ab12986c65024b0928476c1d1473cd280bda7be9d50ef8321942" */ multiport dports 13306,18080

[root@Master231 pod]# curl -I 10.100.2.9

HTTP/1.1 302 Found

Date: Thu, 15 Jun 2023 09:13:18 GMT

Server: Apache/2.4.51 (Debian)

X-Powered-By: PHP/7.4.27

Expires: Wed, 11 Jan 1984 05:00:00 GMT

Cache-Control: no-cache, must-revalidate, max-age=0

X-Redirect-By: WordPress

Location: http://10.100.2.9/wp-admin/install.php

Content-Type: text/html; charset=UTF-8

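4. Split the WordPress example across worker nodes: the two containers that shared one Pod now run as two Pods on two different nodes. Expose them with the ports field, place WordPress on node 232 and the database on node 233, and do not use the host network.

Write the resource manifest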

[root@Master231 pod]# cat 09-pods-ports-split.yaml

apiVersion: v1
kind: Pod
metadata:
  name: koten-mysql
spec:
  nodeName: worker233
  containers:
  - name: mysql
    image: harbor.koten.com/koten-db/mysql:5.7
    # Pass environment variables to the container
    env:
    # Variable name
    - name: MYSQL_ALLOW_EMPTY_PASSWORD
      # Variable value
      value: "yes"
    - name: MYSQL_DATABASE
      value: "wordpress"
    - name: MYSQL_USER
      value: "admin"
    - name: MYSQL_PASSWORD
      value: "123"
    ports:
    - containerPort: 3306
      name: db
---
apiVersion: v1
kind: Pod
metadata:
  name: koten-wordpress
spec:
  nodeName: worker232
  containers:
  - name: wordpress
    image: harbor.koten.com/koten-web/wordpress:v1.0
    env:
    - name: WORDPRESS_DB_HOST
      # Variable value
      value: "127.0.0.1"
    - name: WORDPRESS_DB_NAME
      value: "wordpress"
    - name: WORDPRESS_DB_USER
      value: "admin"
    - name: WORDPRESS_DB_PASSWORD
      value: "123"
    ports:
    - containerPort: 80
      hostIP: 10.0.0.232
      hostPort: 18080
      name: wp

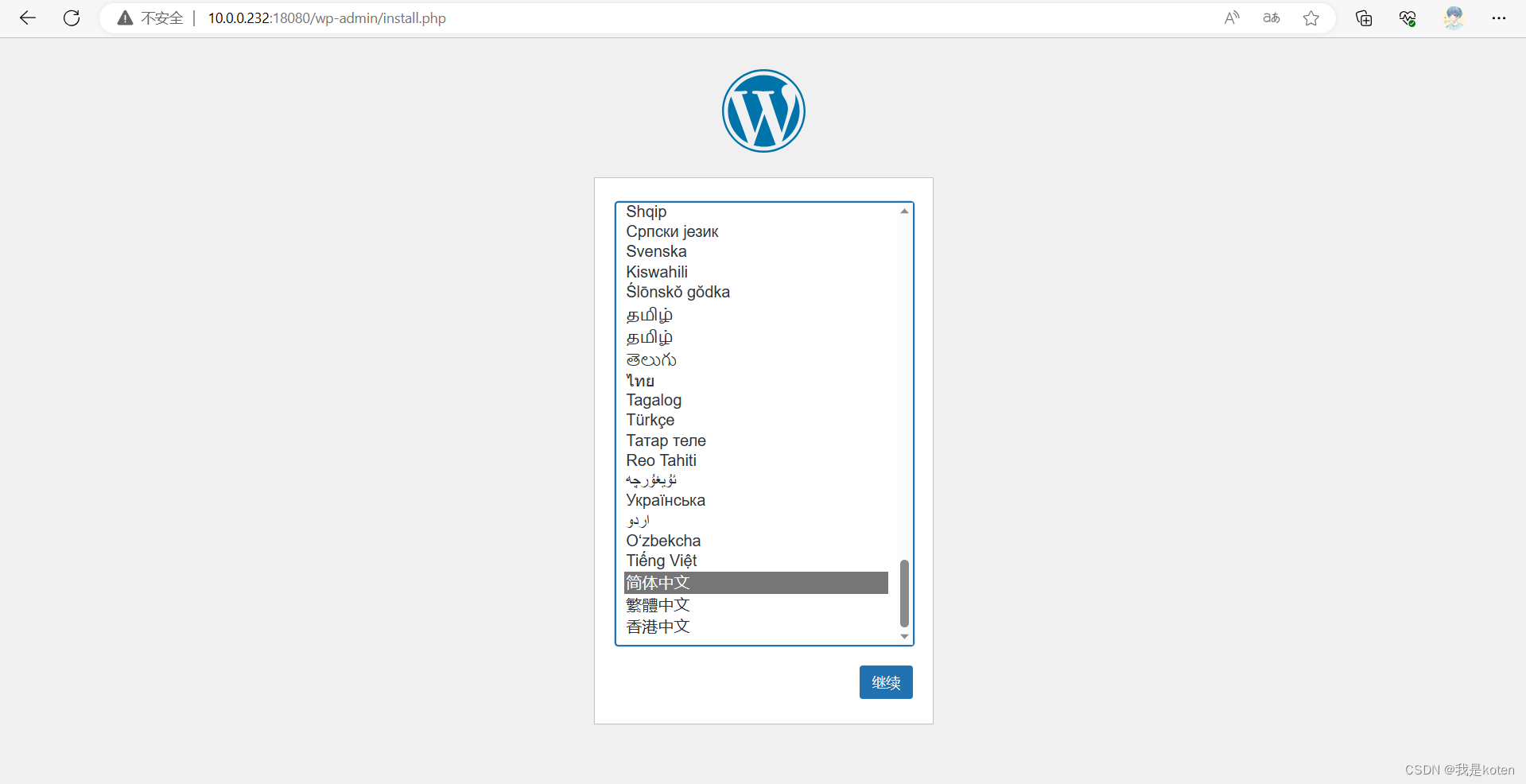

Run the resource manifest and look up the MySQL Pod's IP

[root@Master231 pod]# kubectl apply -f 09-pods-ports-split.yaml

pod/koten-mysql created

pod/koten-wordpress created

[root@Master231 pod]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

koten-mysql 1/1 Running 0 40s 10.100.2.10 worker233 <none> <none>

koten-wordpress 1/1 Running 0 40s 10.100.1.32 worker232 <none> <none>

After obtaining the IP, update the resource manifest, delete only the WordPress Pod, then apply again

[root@Master231 pod]# cat 09-pods-ports-split.yaml

......

    - name: WORDPRESS_DB_HOST
      # Variable value
      value: "10.100.2.10"

......

[root@Master231 pod]# kubectl delete pod koten-wordpress

pod "koten-wordpress" deleted

[root@Master231 pod]# kubectl apply -f 09-pods-ports-split.yaml

pod/koten-mysql unchanged

pod/koten-wordpress created

[root@Master231 pod]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

koten-mysql 1/1 Running 0 8m35s 10.100.2.10 worker233 <none> <none>

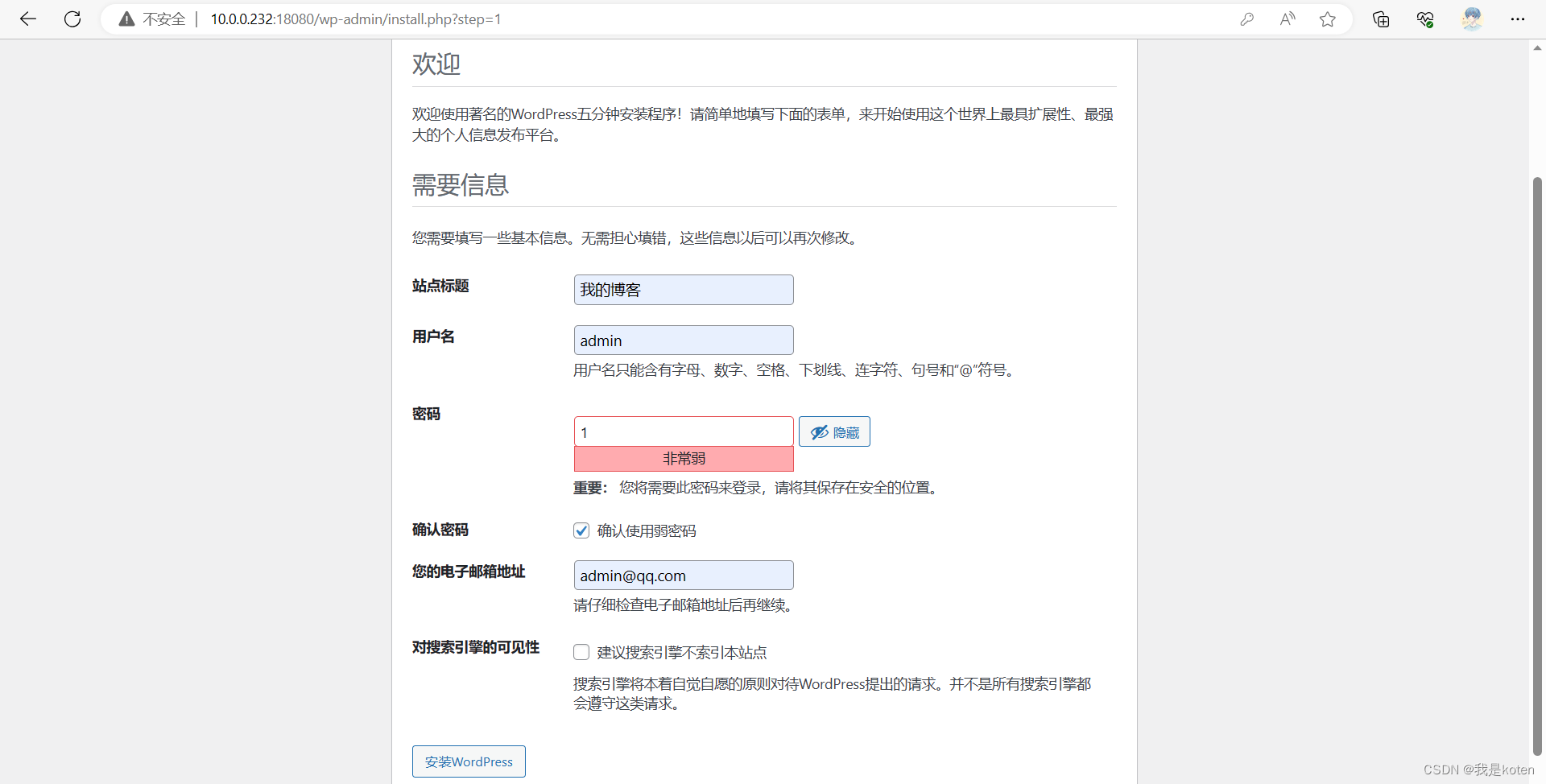

koten-wordpress 1/1 Running 0 17s 10.100.1.33 worker232 <none> <none>可以curl通,浏览器访问正常,部署正常

由于是80的端口映射在宿主机的18080端口上,所以我们curl容器的ip不带端口,默认80,浏览器访问宿主机的IP需要带18080端口

[root@Master231 pod]# curl -I 10.100.1.33

HTTP/1.1 302 Found

Date: Thu, 15 Jun 2023 13:22:14 GMT

Server: Apache/2.4.51 (Debian)

X-Powered-By: PHP/7.4.27

Expires: Wed, 11 Jan 1984 05:00:00 GMT

Cache-Control: no-cache, must-revalidate, max-age=0

X-Redirect-By: WordPress

Location: http://10.100.1.33/wp-admin/install.php

Content-Type: text/html; charset=UTF-8

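Being redirected straight to the installation page shows that the settings passed in took effect.

K8s Troubleshooting Techniques

I. kubectl describe

View the detailed information of a resource. The event messages show the resource's current state and point toward a fix

Three ways to invoke it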

[root@Master231 pod]# kubectl get pods

NAME READY STATUS RESTARTS AGE

koten-mysql 1/1 Running 0 14m

koten-wordpress 1/1 Running 0 5m43s

[root@Master231 pod]# kubectl describe -f 09-pods-ports-split.yaml

[root@Master231 pod]# kubectl describe pod koten-wordpress

[root@Master231 pod]# kubectl describe pod/koten-wordpress

......

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Pulled 33m kubelet Container image "harbor.koten.com/koten-web/wordpress:v1.0" already present on machine

Normal Created 33m kubelet Created container wordpress

Normal Started 33m kubelet Started container wordpress

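In most cases it is enough to look at the Events section, which contains the error messages. If the Pod is Pending and there are no Events, check whether the Node field in the describe output matches a name in the node list. In the example below, nodeName was written as work232, which matches no node in the cluster, so the Pod is never started.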

[root@Master231 pod]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

koten-mysql 1/1 Running 0 17s 10.100.2.11 worker233 <none> <none>

koten-wordpress 0/1 Pending 0 17s <none> work232 <none> <none>

[root@Master231 pod]# kubectl describe pod koten-wordpress


Name: koten-wordpress

Namespace: default

Priority: 0

Node: work232/

Labels: <none>

Annotations: <none>

......

Events: <none>

[root@Master231 pod]# kubectl get no

NAME STATUS ROLES AGE VERSION

master231 Ready control-plane,master 29h v1.23.17

worker232 Ready <none> 29h v1.23.17

worker233 Ready <none> 29h v1.23.17
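The manual comparison of the Node field against the node list can also be scripted. The sketch below is a hypothetical helper, not part of kubectl: it takes the nodeName from a manifest and the names printed by kubectl get no, and uses Python's standard difflib module to suggest the closest real node when the name does not exist.

```python
import difflib
from typing import List


def check_node_name(node_name: str, known_nodes: List[str]) -> str:
    """Report whether node_name exists and, if not, suggest the closest match."""
    if node_name in known_nodes:
        return f"{node_name}: ok"
    # get_close_matches returns the best candidates above a similarity cutoff.
    guess = difflib.get_close_matches(node_name, known_nodes, n=1)
    hint = f"; did you mean {guess[0]}?" if guess else ""
    return f"{node_name}: no such node{hint}"


if __name__ == "__main__":
    nodes = ["master231", "worker232", "worker233"]  # names from `kubectl get no`
    print(check_node_name("work232", nodes))
    print(check_node_name("worker233", nodes))
```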

II. kubectl logs

Inspect the logs the container produces when it starts

Follow a Pod's logs in real time

[root@Master231 pod]# kubectl logs -f games

+ nginx

+ '[' -n ]

+ '[' -n ]

+ echo root:123

+ chpasswd

chpasswd: password for 'root' changed

+ ssh-keygen -A

+ /usr/sbin/sshd -D

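View the logs of a specific container in a Pod

[root@Master231 pod]# kubectl logs -f koten-wp -c wordpress

View the logs a container in a Pod produced in the last 2 minutes

[root@Master231 pod]# kubectl logs --since=2m -f koten-wp -c wordpress

View the logs of the previously terminated container. If the container has been restarted, this shows the logs of the most recently terminated instance, provided that container has not been deleted

kubectl logs -p -f games

III. kubectl exec

Connect to a specific container to inspect it

Write a resource manifest for a Pod that has two containers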

[root@Master231 pod]# cat 09-pods-ports-split.yaml

apiVersion: v1
kind: Pod
metadata:
  name: koten-wp
spec:
  nodeName: worker233
  containers:
  - name: mysql
    image: harbor.koten.com/koten-db/mysql:5.7
    # Pass environment variables to the container
    env:
    # Variable name
    - name: MYSQL_ALLOW_EMPTY_PASSWORD
      # Variable value
      value: "yes"
    - name: MYSQL_DATABASE
      value: "wordpress"
    - name: MYSQL_USER
      value: "admin"
    - name: MYSQL_PASSWORD
      value: "123"
    ports:
    - containerPort: 3306
      name: db
  - name: wordpress
    image: harbor.koten.com/koten-web/wordpress:v1.0
    env:
    - name: WORDPRESS_DB_HOST
      # Variable value
      value: "127.0.0.1"
    - name: WORDPRESS_DB_NAME
      value: "wordpress"
    - name: WORDPRESS_DB_USER
      value: "admin"
    - name: WORDPRESS_DB_PASSWORD
      value: "123"
    ports:
    - containerPort: 80
      hostIP: 10.0.0.232
      hostPort: 18080
      name: wp

Run the resource manifest. The single Pod shows two containers. Query the Pod

[root@Master231 pod]# kubectl apply -f 09-pods-ports-split.yaml

pod/koten-wp created

[root@Master231 pod]# kubectl get pods

NAME READY STATUS RESTARTS AGE

koten-wp 2/2 Running 0 7s

# When a Pod has only one container, you can enter it directly

[root@Master231 pod]# kubectl exec -it koten-mysql -- sh

# When a Pod has several containers, use -c to choose which one to enter

[root@Master231 pod]# kubectl exec -it koten-wp -c wordpress -- bash

root@koten-wp:/var/www/html#

# If none is specified, the first container in the resource manifest is used by default

[root@Master231 pod]# kubectl exec -it koten-wp -- bash

Defaulted container "mysql" out of: mysql, wordpress

root@koten-wp:/#

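IV. kubectl get

-o yaml: outputs the resource's manifest, which helps when the original manifest file has been lost

-o wide: shows resource information in wide format

Watch the output columns: READY shows whether the containers are ready, STATUS shows the running state, and RESTARTS shows whether the containers have restarted and how many times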

[root@Master231 pod]# kubectl get pods

NAME READY STATUS RESTARTS AGE

koten-wp 2/2 Running 0 5m38s

[root@Master231 pod]# kubectl get pods -o yaml

[root@Master231 pod]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

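koten-wp 2/2 Running 0 6m50s 10.100.2.15 worker233 <none> <none>

V. kubectl cp

Used to copy data

Copy the local /etc/hosts to the /haha path in the Pod's db container

kubectl cp /etc/hosts koten-wp:/haha

Copy a local directory to the specified path in the container

kubectl cp /etc koten-wp:/haha -c db

Copy the /haha directory from the Pod's db container to the host, renaming it to xixi

kubectl cp koten-wp:/haha xixi -c db

Pack all files in the current directory and unpack them at the specified path in the specified container of the Pod

tar cf - * | kubectl exec -i koten-wp -c db -- tar xf - -C /tmp/

VI. Difference between command and args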

[root@Master231 pod]# cat 14-pods-command-args.yaml

apiVersion: v1
kind: Pod
metadata:
  name: linux-command-args
spec:
  # hostNetwork: true
  containers:
  - name: web
    image: harbor.koten.com/koten-web/nginx:1.25.1-alpine
    # Similar to the ENTRYPOINT instruction in a Dockerfile.
    # command: ["tail","-f","/etc/hosts"]
    # Similar to the CMD instruction in a Dockerfile.
    # args: ["sleep","3600"]
    command:
    - "tail"
    - "-f"
    args:
    - "/etc/hosts"

[root@Master231 pod]# kubectl apply -f 14-pods-command-args.yaml

pod/linux-command-args created

[root@Master231 pod]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

linux-command-args 1/1 Running 0 53s 10.100.1.43 worker232 <none> <none>

Check the command the container is actually running

[root@Worker232 ~]# docker ps -l

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

71d7ce418ecd 4a20372d4940 "tail -f /etc/hosts" About a minute ago Up About a minute k8s_web_linux-command-args_default_1ad12102-4fe3-4e78-96e4-e560cd51dcd1_0
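How command and args combine with the image's own ENTRYPOINT and CMD can be summarized in a few lines. This is a sketch of the documented rules, not Kubernetes code; the function name is invented, and the ENTRYPOINT/CMD values used for the nginx image are assumptions for illustration only.

```python
from typing import List, Optional


def effective_argv(image_entrypoint: List[str], image_cmd: List[str],
                   command: Optional[List[str]] = None,
                   args: Optional[List[str]] = None) -> List[str]:
    """Return the argv the container ends up running."""
    if command is not None:
        # command replaces ENTRYPOINT; the image's CMD is ignored as well.
        return list(command) + list(args or [])
    if args is not None:
        # args alone replaces only CMD and is passed to the image ENTRYPOINT.
        return list(image_entrypoint) + list(args)
    return list(image_entrypoint) + list(image_cmd)


if __name__ == "__main__":
    entrypoint = ["/docker-entrypoint.sh"]     # assumed image ENTRYPOINT
    cmd = ["nginx", "-g", "daemon off;"]       # assumed image CMD
    print(effective_argv(entrypoint, cmd, ["tail", "-f"], ["/etc/hosts"]))
```

With the manifest above (command: tail -f, args: /etc/hosts) the result is tail -f /etc/hosts, which matches the COMMAND column in the docker ps output.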

I'm koten, with 10 years of ops experience, continually sharing practical ops content. Thanks for reading and following.
