问题的出现与解决思路

之前写过大致一个线预测与跟踪的代码,大致是这个样子。

1.最初测试版本

使用的是kalman滤波进行跟踪,下面的代码是我自己写的最初测试代码。

kalman_lines.h:我这里只写了一个函数,直接将将这个函数进行一个引入便可以

#pragma once

#include <opencv2/opencv.hpp>

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/imgproc/imgproc.hpp>

#include <vector>

using namespace cv;

using namespace std;

void kalman_line(VideoCapture video, Mat frame, vector<Vec2f> lines, float slope, float intercept);kalman_lines.cpp:函数的实现

#include "kalman_lines.h"

#include <vector>

using namespace std;

void kalman_line(VideoCapture video, Mat frame, vector<Vec2f> lines, float slope, float intercept)

{

// 创建Kalman滤波器对象

KalmanFilter kf(4, 2, 0);

Mat state(4, 1, CV_32F); // x, y, vx, vy

Mat measurement = Mat::zeros(2, 1, CV_32F); // mx, my

// 设置Kalman滤波器初始状态

kf.transitionMatrix = (Mat_<float>(4, 4) << 1, 0, 1, 0, 0, 1, 0, 1, 0, 0, 1, 0, 0, 0, 0, 1);

setIdentity(kf.measurementMatrix);

setIdentity(kf.processNoiseCov, Scalar::all(1e-4));

setIdentity(kf.measurementNoiseCov, Scalar::all(1e-1));

setIdentity(kf.errorCovPost, Scalar::all(1));

// 选择特定斜率与截距的直线进行追踪

for (size_t i = 0; i < lines.size(); i++)

{

float rho = lines[i][0];

float theta = lines[i][1];

if (abs(theta) < CV_PI / 4 || abs(theta - CV_PI / 2) < CV_PI / 4)

{

float a = cos(theta);

float b = sin(theta);

float x0 = a * rho;

float y0 = b * rho;

Point pt1(cvRound(x0 + 1000 * (-b)), cvRound(y0 + 1000 * (a)));

Point pt2(cvRound(x0 - 1000 * (-b)), cvRound(y0 - 1000 * (a)));

float current_slope = (pt2.y - pt1.y) / (float)(pt2.x - pt1.x);

float current_intercept = pt1.y - current_slope * pt1.x;

if (abs(current_slope - slope) < 0.2 && abs(current_intercept - intercept) < 100)

{

slope = current_slope;

intercept = current_intercept;

// 更新Kalman滤波器初始状态

state.at<float>(0) = intercept;

state.at<float>(1) = slope;

kf.statePre.at<float>(0) = intercept;

kf.statePre.at<float>(1) = slope;

}

}

}

Mat gray, edges;

// 视频帧循环处理

while (true)

{

// 读取下一帧图像

video >> frame;

// 检查是否到达视频末尾

if (frame.empty())

break;

// 预测下一状态

Mat prediction = kf.predict();

// 提取当前帧直线

cvtColor(frame, gray, COLOR_BGR2GRAY);

Canny(gray, edges, 50, 150, 3);

std::vector<Vec2f> lines;

HoughLines(edges, lines, 1, CV_PI / 180, 100);

// 选择最接近预测斜率与截距的直线进行追踪

float min_distance = FLT_MAX;

float closest_slope = 0.0;

float closest_intercept = 0.0;

for (size_t i = 0; i < lines.size(); i++)

{

float rho = lines[i][0];

float theta = lines[i][1];

if (abs(theta) < CV_PI / 4 || abs(theta - CV_PI / 2) < CV_PI / 4)

{

float a = cos(theta);

float b = sin(theta);

float x0 = a * rho;

float y0 = b * rho;

Point pt1(cvRound(x0 + 1000 * (-b)), cvRound(y0 + 1000 * (a)));

Point pt2(cvRound(x0 - 1000 * (-b)), cvRound(y0 - 1000 * (a)));

float current_slope = (pt2.y - pt1.y) / (float)(pt2.x - pt1.x);

float current_intercept = pt1.y - current_slope * pt1.x;

float distance = sqrt(pow(current_slope - prediction.at<float>(1), 2) + pow(current_intercept - prediction.at<float>(0), 2));

if (distance < min_distance)

{

min_distance = distance;

closest_slope = current_slope;

closest_intercept = current_intercept;

}

}

}

// 更新Kalman滤波器状态

Mat measurement(2, 1, CV_32F);

measurement.at<float>(0) = closest_intercept;

measurement.at<float>(1) = closest_slope;

kf.correct(measurement);

// 绘制预测线和测量线

float slope_pred = kf.statePost.at<float>(1);

float intercept_pred = kf.statePost.at<float>(0);

float slope_meas = closest_slope;

float intercept_meas = closest_intercept;

Point pt1_pred(0, intercept_pred);

Point pt2_pred(frame.cols, slope_pred * frame.cols + intercept_pred);

Point pt1_meas(0, intercept_meas);

Point pt2_meas(frame.cols, slope_meas * frame.cols + intercept_meas);

line(frame, pt1_pred, pt2_pred, Scalar(0, 255, 0), 2);

line(frame, pt1_meas, pt2_meas, Scalar(0, 0, 255), 2);

imshow("Video", frame);

// 检测键盘按下

if (waitKey(30) == 27)

{

break;

}

}

}main主函数:

#include <opencv2/opencv.hpp>

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/imgproc/imgproc.hpp>

#include "kalman_lines.h"

using namespace cv;

int main()

{

// 打开视频文件

VideoCapture video("test1.mp4");

// 检查视频是否成功打开

if (!video.isOpened())

{

std::cout << "无法打开视频文件" << std::endl;

return -1;

}

// 创建窗口,用于显示视频帧

namedWindow("Video", WINDOW_NORMAL);

// 获取第一帧图像

Mat frame;

video >> frame;

// 提取第一帧的直线

Mat gray, edges;

cvtColor(frame, gray, COLOR_BGR2GRAY);

Canny(gray, edges, 50, 150, 3);

std::vector<Vec2f> lines;

HoughLines(edges, lines, 1, CV_PI / 180, 100);

kalman_line(video, frame,lines,1.0,0.0);

return 0;

}我是直接打印了一个图纸,图纸上只有一条直线进行预测与跟踪,使用手机拍摄视频。情况如下所示:

20240107test1

问题的出现:可以见到视频之中的跟踪效果并不是特别的好,出现了红色线条是水平的情况。

2.改进版本一:针对斜率与截距的思考

最初的测试版本之中,直线的跟踪效果出现了水平线的情况。我的猜想是在进行预测输入判断的地方出现了错误如下所示:

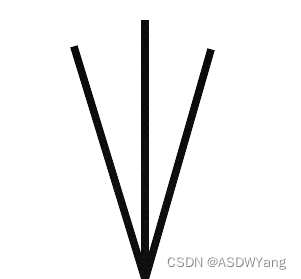

我一开始也没有往这个方向去想,但是后来反复观看测试代码,看到直线是在标准竖直的情况下,就会出现追踪出错的情况,如下图所示的三条直线。

这三条直线的斜率分别为正、无穷、负的,当出现接近竖直情况下就会出现,出现斜率正无穷与负无穷的情况,当直线此刻发生状态变化变出现了跟踪错误的情况。所以这个地方的判断标准之中使用斜率与截距是不合适的。

解决方案:

方案一:使极坐标表示直线便可以规避使用斜率出现问题的影响。

方案二:将原始的坐标轴进行对换,也就是原始的x轴变成了y轴,原始的y轴变成了x轴。由于本次项目的是要测量接近于竖直的情况,因此,第二种方案会更加简便一点。

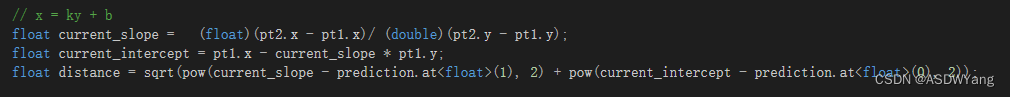

因此原始代码之中需要将y=kx+b换成x=ky+b:

并且需要将这个判断条件进行去除,

![]()

再次运行程序,

矫正1

3.改进版本二:针对跟踪效果的思考

可以见到改进版本一之中的跟踪不会出现错误的线的问题,但是出现的跟踪与预测的效果并不是很好,预测的线条偏离的太多。

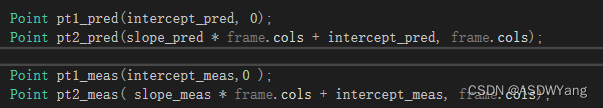

猜想是设定的初始值是出现了问题,参考论文《基于机器视觉的车道线检测与跟踪关键技术研究》之中给出初始矩阵最佳初值进行设定。

再次运行程序,可以见到跟踪与预测的结果好了很多,

矫正2

问题的出现原因分析:

①关于这个地方的直线跟踪的时候,我只是测试了一条直线,这个地方出错的原因是图像运动太快了并且相应的直线太细,导致运动过程之中出现了一点小问题,但是并不影响实际的使用。实际图像之中线条并不是如上所示,所以需要进一步的调优。

②kalman滤波是线性滤波并不是非线性滤波,所以对于处理线性预测是最佳的,而对于这种非线性跟踪并不是最佳方式,这里只是进行一个测试

4.加入干扰直线进行测试

矫正3

5.加入手动选取跟踪过程

#include "kalman_lines.h"

#include <vector>

using namespace std;

bool first_flag = true;

void kalman_line(VideoCapture video, Mat frame, vector<Vec2f> lines, float rho1, float theta1)

{

// 创建Kalman滤波器对象

KalmanFilter kf(4, 2, 0);

Mat state(4, 1, CV_32F); // x, y, vx, vy

Mat measurement = Mat::zeros(2, 1, CV_32F); // mx, my

// 设置Kalman滤波器初始状态

kf.transitionMatrix = (Mat_<float>(4, 4) << 1, 0, 1, 0, 0, 1, 0, 1, 0, 0, 1, 0, 0, 0, 0, 1);

kf.measurementMatrix = (Mat_<float>(2, 4) << 1, 0, 0, 0, 0, 1, 0, 0);

setIdentity(kf.processNoiseCov, Scalar::all(0.409));

setIdentity(kf.measurementNoiseCov, Scalar::all(0.801));

setIdentity(kf.errorCovPost, Scalar::all(1));

Mat gray, edges;

// 视频帧循环处理

while (true)

{

// 读取下一帧图像

video >> frame;

// 检查是否到达视频末尾

if (frame.empty())

break;

// 预测下一状态

Mat prediction = kf.predict();

// 提取当前帧直线

cvtColor(frame, gray, COLOR_BGR2GRAY);

Canny(gray, edges, 50, 150, 3);

std::vector<Vec2f> lines;

HoughLines(edges, lines, 1, CV_PI / 180, 100);

// 选择最接近预测斜率与截距的直线进行追踪

float min_distance = FLT_MAX;

float closest_slope = 0.0;

float closest_intercept = 0.0;

if (first_flag == true)

{

float a = cos(theta1);

float b = sin(theta1);

float x0 = a * rho1;

float y0 = b * rho1;

Point pt1(cvRound(x0 + 1000 * (-b)), cvRound(y0 + 1000 * (a)));

Point pt2(cvRound(x0 - 1000 * (-b)), cvRound(y0 - 1000 * (a)));

float current_slope = (float)(pt2.x - pt1.x) / (float)(pt2.y - pt1.y);

float current_intercept = pt1.x - current_slope * pt1.y;

float distance = sqrt(0.8*pow(current_slope - prediction.at<float>(1), 2) + 0.2*pow(current_intercept - prediction.at<float>(0), 2));

if (distance < min_distance)

{

min_distance = distance;

closest_slope = current_slope;

closest_intercept = current_intercept;

}

first_flag = false;

}

else

{

for (size_t i = 0; i < lines.size(); i++)

{

float rho = lines[i][0];

float theta = lines[i][1];

float a = cos(theta);

float b = sin(theta);

float x0 = a * rho;

float y0 = b * rho;

Point pt1(cvRound(x0 + 1000 * (-b)), cvRound(y0 + 1000 * (a)));

Point pt2(cvRound(x0 - 1000 * (-b)), cvRound(y0 - 1000 * (a)));

//x = ky + b

float current_slope = (float)(pt2.x - pt1.x) / (float)(pt2.y - pt1.y);

float current_intercept = pt1.x - current_slope * pt1.y;

float distance = sqrt(0.8*pow(current_slope - prediction.at<float>(1), 2) + 0.2*pow(current_intercept - prediction.at<float>(0), 2));

if (distance < min_distance)

{

min_distance = distance;

closest_slope = current_slope;

closest_intercept = current_intercept;

}

}

}

// 更新Kalman滤波器状态

Mat measurement(2, 1, CV_32F);

measurement.at<float>(0) = closest_intercept;

measurement.at<float>(1) = closest_slope;

kf.correct(measurement);

float slope_pred = 0.0;

float intercept_pred = 0.0;

/*

if (first_flag == true)

{

slope_pred = closest_slope;

intercept_pred = closest_intercept;

first_flag = false;

}

else

{*/

slope_pred = kf.statePost.at<float>(1);

intercept_pred = kf.statePost.at<float>(0);

// 绘制预测线和测量线

float slope_meas = closest_slope;

float intercept_meas = closest_intercept;

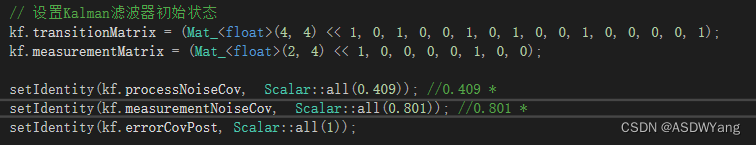

Point pt1_pred(intercept_pred,0);

Point pt2_pred( slope_pred * frame.cols + intercept_pred, frame.cols);

Point pt1_meas(intercept_meas, 0);

Point pt2_meas(slope_meas * frame.cols + intercept_meas, frame.cols);

line(frame, pt1_pred, pt2_pred, Scalar(0, 255, 0), 2);

line(frame, pt1_meas, pt2_meas, Scalar(0, 0, 255), 2);

imshow("Video", frame);

// 检测键盘按下

if (waitKey(30) == 27)

{

break;

}

}

}#include <opencv2/opencv.hpp>

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/imgproc/imgproc.hpp>

#include "kalman_lines.h"

using namespace cv;

float quanju_rho, quanju_theta;

Mat frame;

vector<Vec2f> lines;

//点到直线的距离公式

double distanceToLine(Point a1, Point pt1, Point pt2)

{

double x1 = pt1.x;

double y1 = pt1.y;

double x2 = pt2.x;

double y2 = pt2.y;

double x0 = a1.x;

double y0 = a1.y;

double distance = abs((y2 - y1) * x0 - (x2 - x1) * y0 + x2 * y1 - y2 * x1) / sqrt(pow(y2 - y1, 2) + pow(x2 - x1, 2));

return distance;

}

// 鼠标回调函数

void onMouse(int event, int x, int y, int flags, void* userdata) {

if (event == EVENT_LBUTTONDOWN) {

// 计算每条直线与点击点的距离,并选择最接近的直线

float minDistance = numeric_limits<float>::max();

int closestLine;

for (size_t i = 0; i < lines.size(); i++)

{

float rho = lines[i][0];

float theta = lines[i][1];

double a = cos(theta);

double b = sin(theta);

double x0 = a * rho;

double y0 = b * rho;

Point pt1(cvRound(x0 + 1000 * (-b)), cvRound(y0 + 1000 * (a)));

Point pt2(cvRound(x0 - 1000 * (-b)), cvRound(y0 - 1000 * (a)));

//line(frame, pt1, pt2, Scalar(0, 125, 125), 5, LINE_AA);

float distance = abs((pt1.x + pt2.x)/2.0 - x)+ abs((pt1.y + pt2.y) / 2.0 - y);

if (distance < minDistance) {

minDistance = distance;

closestLine = i;

}

}

for (size_t i = 0; i < lines.size(); i++)

{

if (i == closestLine)

{

float rho = lines[i][0];

float theta = lines[i][1];

quanju_rho = rho;

quanju_theta = theta;

double a = cos(theta);

double b = sin(theta);

double x0 = a * rho;

double y0 = b * rho;

Point pt1(cvRound(x0 + 1000 * (-b)), cvRound(y0 + 1000 * (a)));

Point pt2(cvRound(x0 - 1000 * (-b)), cvRound(y0 - 1000 * (a)));

line(frame, pt1, pt2, Scalar(0, 125, 125), 5, LINE_AA);

}

}

// 显示图像

imshow("Frame", frame);

}

}

int main()

{

// 打开视频文件

VideoCapture video("追踪线.mp4");

// 检查视频是否成功打开

if (!video.isOpened())

{

std::cout << "无法打开视频文件" << std::endl;

return -1;

}

// 创建窗口,用于显示视频帧

//namedWindow("frame", WINDOW_NORMAL);

// 获取第一帧图像

//Mat frame;

video >> frame;

// 提取第一帧的直线

Mat gray, edges;

cvtColor(frame, gray, COLOR_BGR2GRAY);

Canny(gray, edges, 50, 150, 3);

//std::vector<Vec2f> lines;

HoughLines(edges, lines, 1, CV_PI / 180, 100);

/*

// 在图像上绘制检测到的线条

for (size_t i = 0; i < lines.size(); i++)

{

float rho = lines[i][0];

float theta = lines[i][1];

double a = cos(theta);

double b = sin(theta);

double x0 = a * rho;

double y0 = b * rho;

Point pt1(cvRound(x0 + 1000 * (-b)), cvRound(y0 + 1000 * (a)));

Point pt2(cvRound(x0 - 1000 * (-b)), cvRound(y0 - 1000 * (a)));

line(frame, pt1, pt2, Scalar(0, 0, 255), 3, LINE_AA);

}*/

namedWindow("Frame");

imshow("Frame", frame);

setMouseCallback("Frame", onMouse);

waitKey(5000);

namedWindow("Video", WINDOW_NORMAL);

kalman_line(video, frame, lines, quanju_rho, quanju_theta);

return 0;

}追踪1

上述代码不是最优的方式,需要进一步调参。

6.对于跟踪的再度思考

如果之中的杂线过多,需要对于物体进行一个粗跟踪,然后再去跟踪确切的直线,我这里的思路是使用了Staple目标跟踪算法,这是一种结合局部特征HOG和全局特征颜色进行跟踪的代码。

我查到大神的代码:GitHub - xuduo35/STAPLE: C++ implementation of staple algorithm for object tracking.

但是上述的代码是将图像作为一个训练集,并且相应的特征的选取是将已知的特征处使用像素点的方式给定的,我将上述代码的主函数进行了更改,使用拉框的方式进行选取想要跟踪的物体,直接跟踪视频之中的物体,并且将视频之中将来的出现的匹配出作为训练集。相应的主函数如下所示:

#include "staple_tracker.hpp"

#include <iostream>

#include <vector>

#include <string>

#include <fstream>

#include <numeric>

#include <opencv2/opencv.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/imgproc/imgproc.hpp>

cv::Rect_<float> getAxisAlignedBB(std::vector<cv::Point2f> polygon);

std::vector<cv::Rect_<float>> getgroundtruth(std::string txt_file);

void on_MouseHandle(int event, int x, int y, int flags, void* param);

bool drawing_finished = false;

bool drawing_box = false;

cv::Rect groundtruth_rect;

bool mouse_move = false;

int count = 0;

int main(int argc, char * argv[]) {

// 数据定义

STAPLE_TRACKER staple; //创建staple跟踪对象

std::vector<cv::Rect_<float>> result_rects; //创建矩形容器,存储所有算法识别出的跟踪框

cv::VideoCapture capture("追踪.mp4"); //创建VideoCapture类

cv::Mat image; //用来存储每一帧

cv::Mat tempImage; //每一帧的临时变量

int64 tic, toc; //算法运行时间起始点与终止点

double time = 0;

bool show_visualization = true;

bool first_image = true;

// 设置鼠标操作回调函数

cv::namedWindow("STAPLE");

cv::setMouseCallback("STAPLE", on_MouseHandle, (void*)&image);

//注意不加这句话会出现问题

double fps = capture.get(cv::CAP_PROP_FPS); // 获取视频的帧率

int delay = 100000 / fps; // 计算每一帧的延迟时间

while (1) {

count++;

capture.read(image); //逐帧读取视频

if (image.empty()) { //如果视频结束或未检测到摄像头则跳出循环

break;

}

if (drawing_finished == false) {

// 鼠标按下drawing_box=true,在视频画面frame上画矩形

if (drawing_box) {

tempImage.copyTo(image);

cv::rectangle(image, groundtruth_rect.tl(), groundtruth_rect.br(), cv::Scalar(0, 0, 255));// 画框

}

else {

image.copyTo(tempImage);//拷贝源图到临时变量

}

}

else {

// 如果是第一帧图像,则进行staple初始化操作,反之则只更新staple

if (first_image) {

// staple初始化操作

staple.tracker_staple_initialize(image, groundtruth_rect);

// staple目标追踪

staple.tracker_staple_train(image, true);

first_image = false;

}

groundtruth_rect = staple.tracker_staple_update(image);

staple.tracker_staple_train(image, false);

}

//当然时间果断不利于选框,当然也有更好的方式进行选框,可以自己改

if (count < 3)

{

//用于选框

cv::waitKey(delay*1.0);

}

else

{

cv::waitKey(delay / 100);

}

// 可视化部分

if (show_visualization) {

// 显示算法识别的跟踪框

cv::rectangle(image, groundtruth_rect, cv::Scalar(0, 128, 255), 2);

// 输出图像显示结果

cv::imshow("STAPLE", image);

std::cout << "Center: [" << groundtruth_rect.tl().x + groundtruth_rect.width / 2 << ", " << groundtruth_rect.tl().y + groundtruth_rect.height / 2 << "]" << std::endl;

char key = cv::waitKey(10);

if (key == 27 || key == 'q' || key == 'Q')

break;

}

}

cv::destroyAllWindows();

}

// 使轴对齐

cv::Rect_<float> getAxisAlignedBB(std::vector<cv::Point2f> polygon)

{

double cx = double(polygon[0].x + polygon[1].x + polygon[2].x + polygon[3].x) / 4.;

double cy = double(polygon[0].y + polygon[1].y + polygon[2].y + polygon[3].y) / 4.;

double x1 = std::min(std::min(std::min(polygon[0].x, polygon[1].x), polygon[2].x), polygon[3].x);

double x2 = std::max(std::max(std::max(polygon[0].x, polygon[1].x), polygon[2].x), polygon[3].x);

double y1 = std::min(std::min(std::min(polygon[0].y, polygon[1].y), polygon[2].y), polygon[3].y);

double y2 = std::max(std::max(std::max(polygon[0].y, polygon[1].y), polygon[2].y), polygon[3].y);

double A1 = norm(polygon[1] - polygon[2])*norm(polygon[2] - polygon[3]);

double A2 = (x2 - x1) * (y2 - y1);

double s = sqrt(A1 / A2);

double w = s * (x2 - x1) + 1;

double h = s * (y2 - y1) + 1;

cv::Rect_<float> rect(cx - 1 - w / 2.0, cy - 1 - h / 2.0, w, h);

return rect;

}

// 获取groundtruth内的矩形坐标

std::vector<cv::Rect_<float>> getgroundtruth(std::string txt_file)

{

std::vector<cv::Rect_<float>> rects;

std::ifstream gt;

gt.open(txt_file.c_str());

if (!gt.is_open())

std::cout << "Ground truth file " << txt_file

<< " can not be read" << std::endl;

std::string line;

float x1, y1, x2, y2, x3, y3, x4, y4;

while (getline(gt, line)) {

std::replace(line.begin(), line.end(), ',', ' ');

std::stringstream ss;

ss.str(line);

ss >> x1 >> y1 >> x2 >> y2 >> x3 >> y3 >> x4 >> y4;

std::vector<cv::Point2f>polygon;

polygon.push_back(cv::Point2f(x1, y1));

polygon.push_back(cv::Point2f(x2, y2));

polygon.push_back(cv::Point2f(x3, y3));

polygon.push_back(cv::Point2f(x4, y4));

rects.push_back(getAxisAlignedBB(polygon)); //0-index

}

gt.close();

return rects;

}

// 第一帧画框鼠标响应

void on_MouseHandle(int event, int x, int y, int flags, void* param) {

cv::Mat& image = *(cv::Mat*) param;

switch (event)

{

//鼠标移动消息

case cv::EVENT_MOUSEMOVE:

{

if (drawing_box)//如果是否进行绘制的标识符为真,则记录下长和宽到RECT型变量中

{

groundtruth_rect.width = x - groundtruth_rect.x;

groundtruth_rect.height = y - groundtruth_rect.y;

}

}

break;

//左键按下消息

case cv::EVENT_LBUTTONDOWN:

{

mouse_move = true;

drawing_box = true;

groundtruth_rect = cv::Rect(x, y, 0, 0);//记录起始点

}

break;

//左键抬起消息

case cv::EVENT_LBUTTONUP:

{

drawing_box = false;//置标识符为false

drawing_finished = true;

//对宽和高小于0的处理

if (groundtruth_rect.width < 0)

{

groundtruth_rect.x += groundtruth_rect.width;

groundtruth_rect.width *= -1;

}

if (groundtruth_rect.height < 0)

{

groundtruth_rect.y += groundtruth_rect.height;

groundtruth_rect.height *= -1;

}

//调用函数进行绘制

cv::rectangle(image, groundtruth_rect.tl(), groundtruth_rect.br(), cv::Scalar(0, 0, 255));// 画框

}

break;

}

}

可以见到跟踪的过程是比较好的,在实际的跟踪的过程之中可以使用首选粗选取物体,然后再精确跟踪的过程,当然这个过程最适合于背景特别特别复杂、干扰特别多的情况,若是干扰没那么多,后面这个物体跟踪便不用使用。这就是自己的一个大致思路。

跟踪物体

本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。 如若内容造成侵权/违法违规/事实不符,请联系我的编程经验分享网邮箱:veading@qq.com进行投诉反馈,一经查实,立即删除!