FFmpeg Filter

2023-12-27 10:30:31

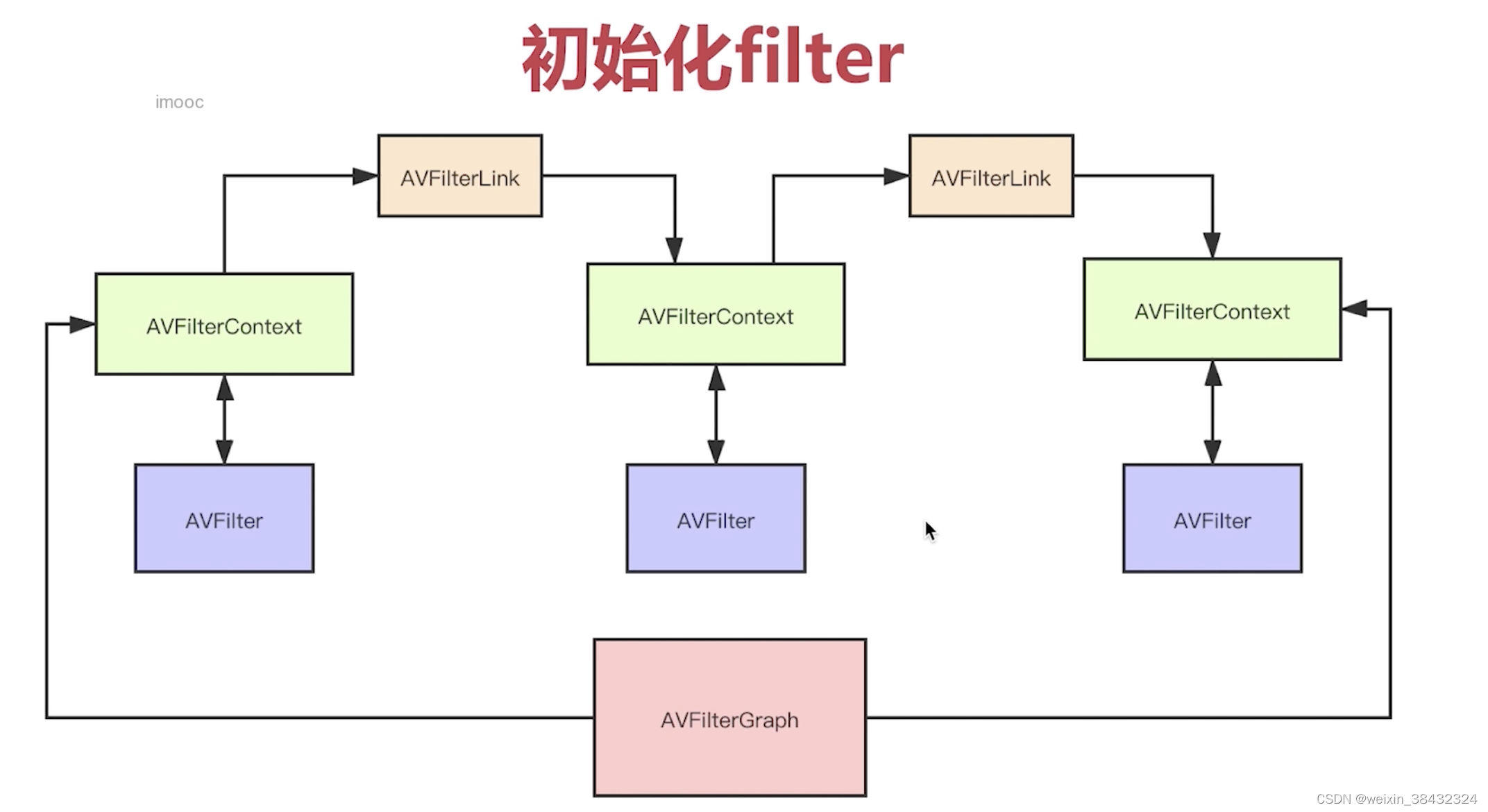

How it works

1. Decode each compressed frame.

2. Run the filter computation on the decoded data.

3. Encode the processed data again.

A simple filter

ffplay -i /Users/king/Desktop/ffmpeg/audio/cut.mp4 -vf "drawbox=x=30:y=30:w=60:h=60:c=red"

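drawbox is the name of the filter.

The parameters follow the filter name after an equals sign and are separated from each other by colons.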

Listing filters

ffmpeg -h filter=drawbox (shows the options of a single filter, here drawbox)

ffmpeg -filters (lists all supported filters)

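Filter syntax

Within one filter, the options are separated by ":". Filters that run one after another in the same chain are separated by ",". Filter chains that are not connected to each other are separated by ";".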
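The three separators can be illustrated with a small string builder. This is only a sketch in plain C with no FFmpeg dependency; the helper names `format_filter` and `join_items` are hypothetical and are not part of any FFmpeg API, and the sketch ignores the quoting and escaping rules that real filter descriptions also support.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Append src to dst (cap is the total capacity including the terminator).
 * Returns 0 on success, -1 if the result would not fit. */
static int append(char *dst, size_t cap, const char *src) {
    size_t used = strlen(dst);
    size_t need = strlen(src);
    if (used + need + 1 > cap) {
        return -1;
    }
    memcpy(dst + used, src, need + 1);
    return 0;
}

/* Build "name=opt1:opt2:..." for one filter: "=" introduces the options,
 * ":" separates them. */
int format_filter(char *dst, size_t cap, const char *name,
                  const char *const *opts, size_t n_opts) {
    assert(dst != NULL && cap > 0);
    dst[0] = '\0';
    if (append(dst, cap, name) < 0) {
        return -1;
    }
    for (size_t i = 0; i < n_opts; i++) {
        if (append(dst, cap, i == 0 ? "=" : ":") < 0 ||
            append(dst, cap, opts[i]) < 0) {
            return -1;
        }
    }
    return 0;
}

/* Join already formatted items with one separator character:
 * ',' links filters into a chain, ';' separates independent chains. */
int join_items(char *dst, size_t cap, const char *const *items,
               size_t n_items, char sep) {
    char sep_str[2] = { sep, '\0' };
    assert(dst != NULL && cap > 0);
    dst[0] = '\0';
    for (size_t i = 0; i < n_items; i++) {
        if (i > 0 && append(dst, cap, sep_str) < 0) {
            return -1;
        }
        if (append(dst, cap, items[i]) < 0) {
            return -1;
        }
    }
    return 0;
}
```

Formatting drawbox with the options x=30, y=30, w=60, h=60, c=red yields the same string used in the ffplay command earlier, `drawbox=x=30:y=30:w=60:h=60:c=red`. Joining that with a second filter such as hflip using ',' gives a two-filter chain that could be passed to -vf.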

ffplay -i /Users/xxxxx/Desktop/ffmpeg/audio/cut.mp4 -vf "

//

// filter_test.c

// ffmpegaudio

//

// Created by KING on 2023/12/23.

//

/**

* Set a binary option to an integer list.

*

* @param obj AVClass object to set options on

* @param name name of the binary option

* @param val pointer to an integer list (must have the correct type with

* regard to the contents of the list)

* @param term list terminator (usually 0 or -1)

* @param flags search flags

*/

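// Play the raw output (pixel format names are lowercase; the frame size must match the source video):
// ffplay -f rawvideo -pixel_format gray -video_size 1920x1080 -i /Users/king/Desktop/ffmpeg/audio/filter.yuv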

#include <stdio.h>
#include "filter_test.h"
#include "libavutil/avutil.h"
#include "libavutil/opt.h"
#include "libavcodec/avcodec.h"
#include "libavformat/avformat.h"
#include "libavfilter/avfilter.h"
#include "libavfilter/buffersrc.h"
#include "libavfilter/buffersink.h"

const char *filter_desc = "drawbox=30:10:64:64:red";

static int init_filters(const char *filter_desc, AVFormatContext *fmt_context, AVCodecContext *codec_context, AVFilterGraph **graph, AVFilterContext **buf_ctx, AVFilterContext **buf_sink_ctx, int video_stream_index) {
    int ret = 0;
    char args[512] = {0};
    AVRational time_base = fmt_context->streams[video_stream_index]->time_base;
    // Pixel formats the sink is allowed to output; the list ends with AV_PIX_FMT_NONE
    enum AVPixelFormat pix_fmts[] = {AV_PIX_FMT_YUV420P, AV_PIX_FMT_GRAY8, AV_PIX_FMT_NONE};
    const AVFilter *bufsrc = NULL;
    const AVFilter *bufsink = NULL;
    AVFilterInOut *inputs = avfilter_inout_alloc();
    AVFilterInOut *output = avfilter_inout_alloc();
    *graph = avfilter_graph_alloc();
    if (!inputs || !output || !*graph) {
        printf("allocating inputs, output or graph failed\n");
        ret = AVERROR(ENOMEM);
        goto __ERROR;
    }
    // The finished graph is equivalent to "[in]drawbox=xxx[out]"
    bufsrc = avfilter_get_by_name("buffer");
    bufsink = avfilter_get_by_name("buffersink");
    if (!bufsrc || !bufsink) {
        printf("buffer or buffersink filter not found\n");
        ret = AVERROR_FILTER_NOT_FOUND;
        goto __ERROR;
    }
    /// Create the input buffer filter
    snprintf(args, sizeof(args),
             "video_size=%dx%d:pix_fmt=%d:time_base=%d/%d:pixel_aspect=%d/%d",
             codec_context->width, codec_context->height, codec_context->pix_fmt,
             time_base.num, time_base.den,
             codec_context->sample_aspect_ratio.num, codec_context->sample_aspect_ratio.den);
    ret = avfilter_graph_create_filter(buf_ctx, bufsrc, "in", args, NULL, *graph);
    if (ret < 0) {
        printf("avfilter_graph_create_filter in failed\n");
        goto __ERROR;
    }
    /// Create the output buffersink filter
    ret = avfilter_graph_create_filter(buf_sink_ctx, bufsink, "out", NULL, NULL, *graph);
    if (ret < 0) {
        printf("avfilter_graph_create_filter out failed\n");
        goto __ERROR;
    }
    ret = av_opt_set_int_list(*buf_sink_ctx, "pix_fmts", pix_fmts, AV_PIX_FMT_NONE, AV_OPT_SEARCH_CHILDREN);
    if (ret < 0) {
        printf("setting pix_fmts failed\n");
        goto __ERROR;
    }
    // Describe the two open ends of the graph. The names look reversed on purpose:
    /// "inputs" is the input pad of the buffersink, where the chain's [out] label ends
    inputs->name = av_strdup("out");
    inputs->filter_ctx = *buf_sink_ctx;
    inputs->pad_idx = 0;
    inputs->next = NULL;
    /// "output" is the output pad of the buffer source, which feeds the chain's [in] label
    output->name = av_strdup("in");
    output->filter_ctx = *buf_ctx;
    output->pad_idx = 0;
    output->next = NULL;
    // Parse the filter description and link it between the two endpoints
    ret = avfilter_graph_parse_ptr(*graph, filter_desc, &inputs, &output, NULL);
    if (ret < 0) {
        printf("avfilter_graph_parse_ptr failed\n");
        goto __ERROR;
    }
    // Check the links and configure the whole graph
    ret = avfilter_graph_config(*graph, NULL);
    if (ret < 0) {
        printf("avfilter_graph_config failed\n");
    }
__ERROR:
    // The graph itself is released by the caller; only the in/out descriptors are freed here
    avfilter_inout_free(&inputs);
    avfilter_inout_free(&output);
    return ret;
}

int create_filter(char *file_name, AVFormatContext **fmt_context, AVCodecContext **codec_context, int *video_stream_index, AVCodec **dec) {
    int ret = 0;
    // Open the container and read the stream information
    ret = avformat_open_input(fmt_context, file_name, NULL, NULL);
    if (ret < 0) {
        printf("open fail\n");
        return ret;
    }
    ret = avformat_find_stream_info(*fmt_context, NULL);
    if (ret < 0) {
        printf("avformat_find_stream_info fail\n");
        return ret;
    }
    // Pick the best video stream and its decoder.
    // Since FFmpeg 5.0 this argument is const AVCodec **, so dec must be declared const there.
    ret = av_find_best_stream(*fmt_context, AVMEDIA_TYPE_VIDEO, -1, -1, dec, 0);
    if (ret < 0) {
        printf("av_find_best_stream fail\n");
        return ret;
    }
    *video_stream_index = ret;
    *codec_context = avcodec_alloc_context3(*dec);
    if (!*codec_context) {
        printf("codec_context failed\n");
        return AVERROR(ENOMEM);
    }
    ret = avcodec_parameters_to_context(*codec_context, (*fmt_context)->streams[*video_stream_index]->codecpar);
    if (ret < 0) {
        printf("avcodec_parameters_to_context fail\n");
        return ret;
    }
    /// Open the decoder
    ret = avcodec_open2(*codec_context, *dec, NULL);
    if (ret < 0) {
        printf("avcodec_open2 fail\n");
    }
    return ret;
}

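static int do_frame(AVFrame *filter_frame, FILE *out) {
    // Write only plane 0 (luma for YUV, the whole image for GRAY8), one row at a time:
    // linesize[0] can be larger than width because rows are padded for alignment.
    for (int y = 0; y < filter_frame->height; y++) {
        const uint8_t *row = filter_frame->data[0] + y * filter_frame->linesize[0];
        if (fwrite(row, 1, filter_frame->width, out) != (size_t)filter_frame->width) {
            return AVERROR(EIO);
        }
    }
    fflush(out);
    return 0;
}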

static int filter_video(AVFilterContext *buf_ctx, AVFilterContext *buf_sink_ctx, AVFrame *frame, AVFrame *filter_frame, FILE *out) {
    int ret = 0;
    // Push the decoded frame into the buffer source
    ret = av_buffersrc_add_frame(buf_ctx, frame);
    if (ret < 0) {
        printf("av_buffersrc_add_frame failed\n");
        av_frame_unref(frame);
        return ret;
    }
    // Pull every frame the buffersink has ready
    while (1) {
        ret = av_buffersink_get_frame(buf_sink_ctx, filter_frame);
        if (ret == AVERROR(EAGAIN) || ret == AVERROR_EOF) {
            ret = 0; // no more output for now; this is not an error
            break;
        }
        if (ret < 0) {
            break;
        }
        ret = do_frame(filter_frame, out);
        av_frame_unref(filter_frame);
        if (ret < 0) {
            break;
        }
    }
    av_frame_unref(frame);
    return ret;
}

static int decode_frame_and_filter(AVFilterContext *buf_ctx, AVFilterContext *buf_sink_ctx, AVCodecContext *codec_context, AVFrame *frame, AVFrame *filter_frame, FILE *out) {
    int ret = 0;
    // One packet can yield zero or more frames, so drain the decoder until it asks for more input
    while (1) {
        ret = avcodec_receive_frame(codec_context, frame);
        if (ret == AVERROR(EAGAIN) || ret == AVERROR_EOF) {
            return 0;
        }
        if (ret < 0) {
            printf("avcodec_receive_frame failed\n");
            return ret;
        }
        ret = filter_video(buf_ctx, buf_sink_ctx, frame, filter_frame, out);
        if (ret < 0 && ret != AVERROR(EAGAIN) && ret != AVERROR_EOF) {
            return ret;
        }
    }
}

void player_filter_test() {

const char *filter_desc="drawbox=30:10:64:64:red";

// const char *filter_desc="movie=/xxx/docs/media/16324057162865/16324083424959.png,scale=64:64[wm];[in][wm]overlay=30:10";

AVFormatContext *fmt_context = NULL;

AVCodec *dec = NULL;

AVCodecContext *codec_context = NULL;

int video_stream_index = 0;

AVFilterContext *buf_ctx = NULL;

AVFilterContext *buf_sink_ctx = NULL;

AVFilterGraph *graph = NULL;

const char *file_name = "/Users/king/Desktop/ffmpeg/audio/cut.mp4";

AVPacket packet;

AVFrame *frame = NULL;

AVFrame *filter_frame = NULL;

FILE *out = NULL;

int ret = 0;

    frame = av_frame_alloc();
    filter_frame = av_frame_alloc();
    if (!frame || !filter_frame) {
        printf("init_frame fail\n");
        goto __ERROR;
    }
    out = fopen("/Users/king/Desktop/ffmpeg/audio/filter.yuv", "wb+");
    if (!out) {
        printf("FILE fail\n");
        goto __ERROR;
    }
    // Stop here if the input or the decoder cannot be opened; the read loop needs both
    if ((ret = create_filter(file_name, &fmt_context, &codec_context, &video_stream_index, &dec)) < 0) {
        printf("create_filter fail\n");
        goto __ERROR;
    }
    if ((ret = init_filters(filter_desc, fmt_context, codec_context, &graph, &buf_ctx, &buf_sink_ctx, video_stream_index)) < 0) {
        printf("init_filters fail\n");
        goto __ERROR;
    }

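    while (1) {
        if ((ret = av_read_frame(fmt_context, &packet)) < 0) {
            break; // end of file or read error
        }
        if (packet.stream_index == video_stream_index) {
            ret = avcodec_send_packet(codec_context, &packet);
            if (ret < 0) {
                printf("avcodec_send_packet failed\n");
            } else {
                ret = decode_frame_and_filter(buf_ctx, buf_sink_ctx, codec_context, frame, filter_frame, out);
                if (ret == AVERROR(EAGAIN) || ret == AVERROR_EOF) {
                    ret = 0; // the decoder only needs more input
                } else if (ret < 0) {
                    printf("decode_frame_and_filter failed\n");
                }
            }
        }
        // Release the packet's buffer on every iteration, otherwise it leaks
        av_packet_unref(&packet);
        if (ret < 0) {
            break;
        }
    }
    if (ret == AVERROR_EOF) {
        // Flush the decoder so the frames it still buffers are filtered too
        if (avcodec_send_packet(codec_context, NULL) >= 0) {
            decode_frame_and_filter(buf_ctx, buf_sink_ctx, codec_context, frame, filter_frame, out);
        }
    }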

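__ERROR:
    if (out) {
        fclose(out);
    }
    av_frame_free(&frame);
    av_frame_free(&filter_frame);
    if (codec_context) {
        avcodec_free_context(&codec_context);
    }
    if (fmt_context) {
        // A context opened with avformat_open_input() must be closed with avformat_close_input()
        avformat_close_input(&fmt_context);
    }
    if (graph) {
        // Freeing the graph also frees buf_ctx and buf_sink_ctx; they must not be freed again
        avfilter_graph_free(&graph);
    }
    // dec points to a codec description owned by libavcodec and must not be freed
    printf("finish\n");
}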

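// Create the graph with avfilter_graph_alloc()
// Create the buffer filter and the buffersink filter
// Parse the filter description and build the AVFilterGraph
// Configure the AVFilterGraph so that it takes effect
/// Using the filter
/// 1. Get the decoded raw data (PCM/YUV)
/// 2. Add the data to the buffer filter
/// 3. Read the processed data from the buffersink
/// Release the resources once all data has been processed
// Implementing your own filter
// Take an existing filter as a template
// Replace every occurrence of the filter name in the code
/// Edit the Makefile in libavfilter to add the new filter
/// Edit allfilters.c to add the new filter, then rebuild FFmpeg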

"

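Using a filter through the API

1. Set the filter description (the string that follows -vf).

2. Initialize the filter graph.

3. Run the filter processing.

4. Release the filter resources.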

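ffmpeg -i input.mp4 -vf "setpts=0.5*PTS" speed2.0.mp4

Halving every presentation timestamp makes the video play twice as fast. The audio stream is not changed by -vf; it needs its own filter, for example -af "atempo=2.0".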
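The arithmetic behind setpts=0.5*PTS can be checked by hand. The sketch below is a simplified integer model only: the real filter evaluates a floating-point expression, and the helper names `scale_pts` and `pts_to_ms` as well as the 1/90000 time base used in the note are assumptions made for illustration.

```c
#include <assert.h>
#include <stdint.h>

/* Timestamp that a factor of num/den would assign to a frame.
 * setpts=0.5*PTS corresponds to num=1, den=2. */
int64_t scale_pts(int64_t pts, int num, int den) {
    assert(den != 0);
    return pts * num / den;
}

/* Convert a timestamp counted in time-base ticks (tb_num/tb_den seconds
 * per tick) to milliseconds. */
int64_t pts_to_ms(int64_t pts, int tb_num, int tb_den) {
    assert(tb_den != 0);
    return pts * 1000 * tb_num / tb_den;
}
```

With a time base of 1/90000, a frame at pts 180000 is shown at 2000 ms. After scaling by 1/2 its pts is 90000, so it is shown at 1000 ms: every frame arrives in half the time, which is 2x playback speed.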

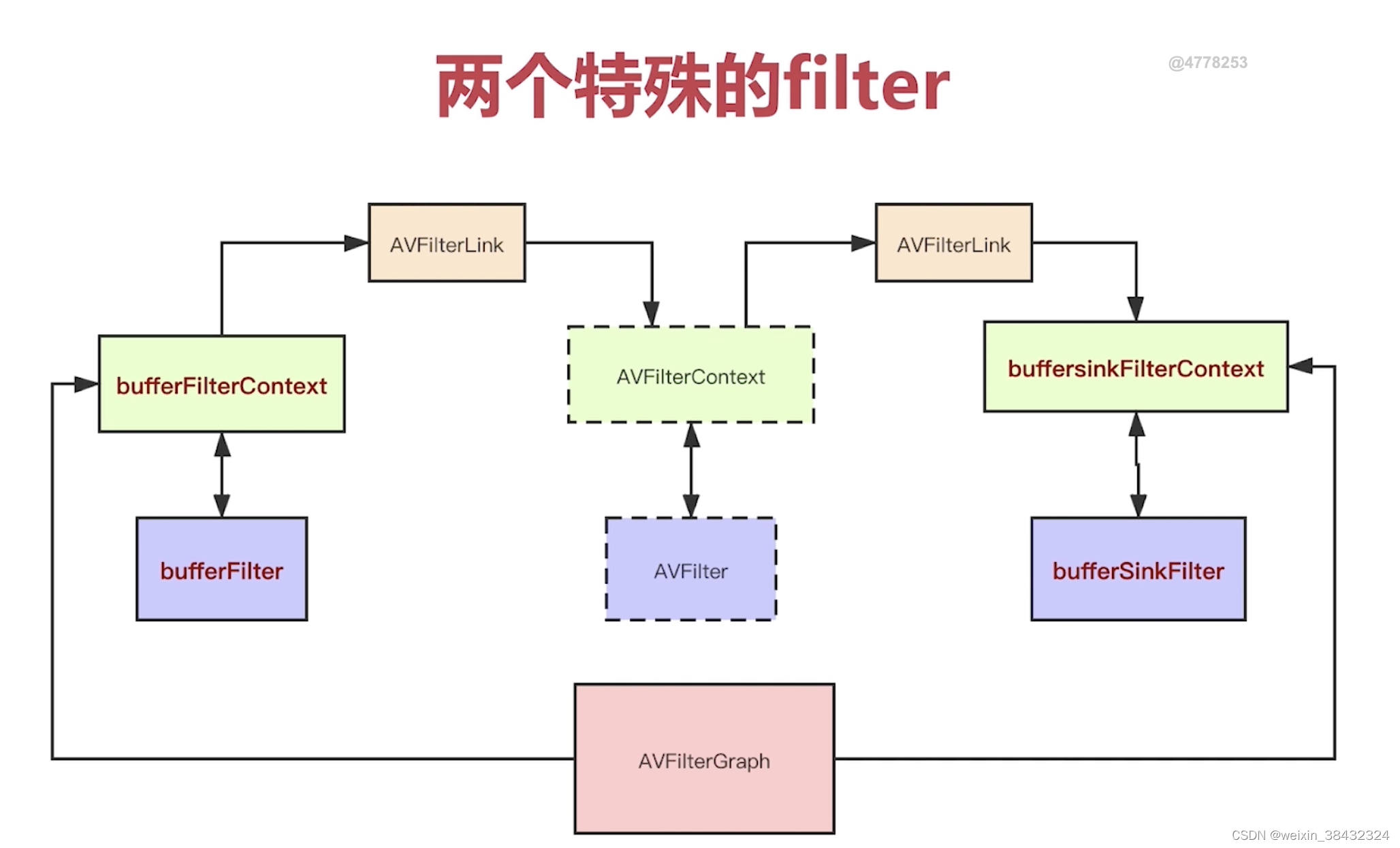

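Special filters

buffer filter: the source of the graph; decoded frames are pushed into it with av_buffersrc_add_frame().

buffersink filter: the sink of the graph; filtered frames are pulled from it with av_buffersink_get_frame().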
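The buffer filter is created from an option string that describes the frames it will receive, as the snprintf call in init_filters shows. The following sketch reproduces only that formatting step in plain C so it can be tried without FFmpeg. The struct `video_params` and the function `format_buffersrc_args` are hypothetical stand-ins for the fields the listing reads from AVCodecContext and the stream, and the zero-denominator guard is a defensive assumption, not something the original code does.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical stand-in for the values the listing takes from
 * AVCodecContext (size, pixel format, aspect ratio) and the stream (time base). */
struct video_params {
    int width, height;
    int pix_fmt;          /* numeric pixel format, e.g. 0 for AV_PIX_FMT_YUV420P */
    int tb_num, tb_den;   /* stream time base */
    int sar_num, sar_den; /* sample aspect ratio, 0/1 when unknown */
};

/* Format the option string for the "buffer" source filter.
 * Returns 0 on success, -1 if dst is too small. */
int format_buffersrc_args(char *dst, size_t cap, const struct video_params *p) {
    assert(dst != NULL && p != NULL);
    /* Defensive assumption: never emit a zero denominator. */
    int sar_den = p->sar_den > 0 ? p->sar_den : 1;
    int n = snprintf(dst, cap,
                     "video_size=%dx%d:pix_fmt=%d:time_base=%d/%d:pixel_aspect=%d/%d",
                     p->width, p->height, p->pix_fmt,
                     p->tb_num, p->tb_den, p->sar_num, sar_den);
    /* snprintf reports the length it wanted; treat truncation as an error. */
    return (n < 0 || (size_t)n >= cap) ? -1 : 0;
}
```

For a 1920x1080 stream with pixel format 0, time base 1/90000 and a square pixel aspect ratio, the result is `video_size=1920x1080:pix_fmt=0:time_base=1/90000:pixel_aspect=1/1`. Checking for truncation matters here because a cut-off option string would make the filter creation fail with a less obvious error.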

Source: https://blog.csdn.net/weixin_38432324/article/details/135163750
