优化器(一)torch.optim.SGD-随机梯度下降法

2024-01-07 18:55:23

torch.optim.SGD-随机梯度下降法

import torch

import torchvision.datasets

from torch import nn

from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10(root='./data', train=False, download=True,

transform=torchvision.transforms.ToTensor())

dataloader = DataLoader(dataset, batch_size=64, shuffle=True)

class Tudui(nn.Module):

def __init__(self):

super(Tudui, self).__init__()

self.model1 = nn.Sequential(

nn.Conv2d(in_channels=3, out_channels=32, kernel_size=5, stride=1, padding=2),

nn.MaxPool2d(kernel_size=2),

nn.Conv2d(in_channels=32, out_channels=32, kernel_size=5, stride=1, padding=2),

nn.MaxPool2d(2),

nn.Conv2d(32, 64, 5, padding=2),

nn.MaxPool2d(2),

nn.Flatten(),

nn.Linear(1024, 64),

nn.Linear(64, 10)

)

def forward(self, x):

x = self.model1(x)

return x

tudui = Tudui()

loss = nn.CrossEntropyLoss()

optim = torch.optim.SGD(tudui.parameters(), lr=0.01)

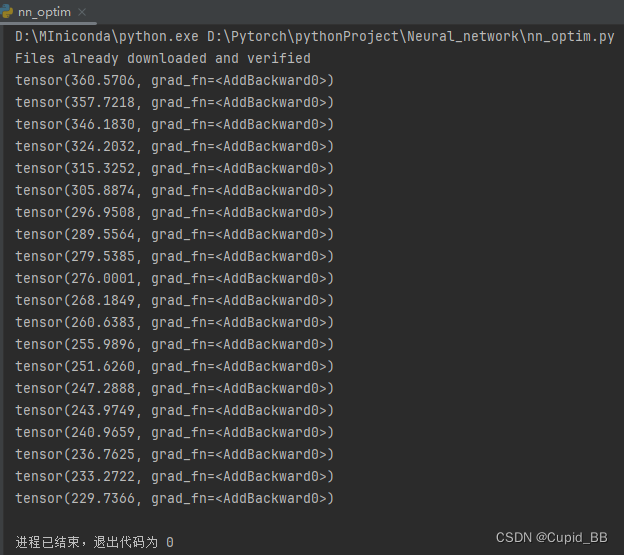

for epoch in range(20):

running_loss = 0.0

for data in dataloader:

imgs, targets = data

outputs = tudui(imgs)

result_loss = loss(outputs, targets)

optim.zero_grad()

result_loss.backward()

optim.step()

running_loss += result_loss

print(running_loss)

文章来源:https://blog.csdn.net/weixin_51788042/article/details/135441952

本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。 如若内容造成侵权/违法违规/事实不符,请联系我的编程经验分享网邮箱:veading@qq.com进行投诉反馈,一经查实,立即删除!

本文来自互联网用户投稿,该文观点仅代表作者本人,不代表本站立场。本站仅提供信息存储空间服务,不拥有所有权,不承担相关法律责任。 如若内容造成侵权/违法违规/事实不符,请联系我的编程经验分享网邮箱:veading@qq.com进行投诉反馈,一经查实,立即删除!