Experiment log: loss is INF (infinity) during model training

2023-12-14 19:09:36

INF (infinity) means an infinitely large value.

Cause in this case:

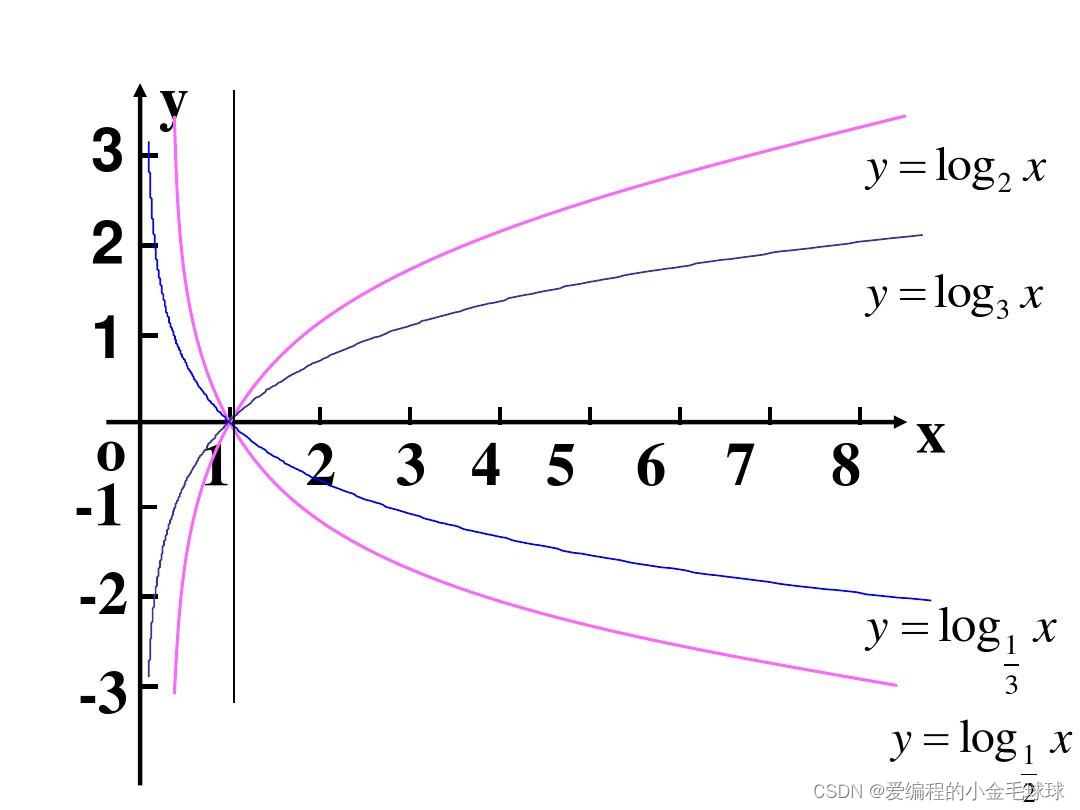

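No normalization was applied, and the loss function uses log(x). As x shrinks toward zero, log(x) heads to negative infinity (log(0) = -∞), so a loss of the form -log(x) comes back as INF.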
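A minimal sketch of why taking log of a probability produces INF, plus two common fixes, written in plain Python so it runs anywhere. It assumes the model outputs raw class scores (logits) and the loss is softmax cross-entropy; the function names are illustrative, not from the original code. Note that Python's `math.log(0.0)` raises `ValueError` instead of returning `-inf` as NumPy/PyTorch do, so the naive version returns `math.inf` explicitly for that case.

```python
import math

def naive_cross_entropy(logits, target):
    # softmax first, then log: exp() of a very negative logit underflows to 0.0
    exps = [math.exp(z) for z in logits]
    p = exps[target] / sum(exps)
    if p == 0.0:
        return math.inf  # -log(0) is +inf (math.log itself would raise ValueError)
    return -math.log(p)

def clamped_cross_entropy(logits, target, eps=1e-12):
    # same as above, but clamp the probability away from zero before the log
    exps = [math.exp(z) for z in logits]
    p = exps[target] / sum(exps)
    return -math.log(max(p, eps))

def stable_cross_entropy(logits, target):
    # log-softmax via the log-sum-exp trick: never takes the log of an underflowed value
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]

logits = [-800.0, 0.0]  # the true class (index 0) gets a very low score
print(naive_cross_entropy(logits, 0))    # inf
print(clamped_cross_entropy(logits, 0))  # about 27.63: finite, but capped
print(stable_cross_entropy(logits, 0))   # 800.0: the exact loss
```

In PyTorch, `nn.CrossEntropyLoss` already expects raw logits and combines `log_softmax` with the negative log-likelihood internally, so applying `softmax` yourself and then `torch.log` is the pattern to avoid.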

Other possible causes:

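1. Division by zero:

If any step of the computation divides a nonzero value by zero (for example, normalizing by a sum or a standard deviation that happens to be 0), the result is infinite and the loss becomes INF.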
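A small sketch of the usual guard: add a small epsilon to any denominator that can be zero. The example assumes a standardization step; `standardize` and the `eps=1e-8` default are illustrative choices, not taken from the original code. In plain Python a zero denominator raises `ZeroDivisionError`; with NumPy or PyTorch tensors the same division silently produces `inf` (or `nan` for 0/0) instead.

```python
import math

def standardize(values, eps=1e-8):
    # zero-mean / unit-variance scaling
    n = len(values)
    mean = sum(values) / n
    std = math.sqrt(sum((v - mean) ** 2 for v in values) / n)
    # eps keeps the denominator positive even when all values are identical (std == 0)
    return [(v - mean) / (std + eps) for v in values]

print(standardize([3.0, 3.0, 3.0]))  # [0.0, 0.0, 0.0] instead of a division by zero
print(standardize([1.0, 2.0, 3.0]))
```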

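2. Numerical instability:

In some cases, numerical instability makes gradient descent produce very large gradients, which in turn makes the loss very large; once a value exceeds the largest representable floating-point number, it becomes INF.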
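One common countermeasure for exploding gradients is clipping by global norm: if the combined L2 norm of all gradients exceeds a threshold, every gradient is scaled down by the same factor. The sketch below works on a flat list of floats, and `max_norm=1.0` is an illustrative value. In PyTorch the equivalent call is `torch.nn.utils.clip_grad_norm_(model.parameters(), max_norm)`, placed between `loss.backward()` and `optimizer.step()`.

```python
import math

def clip_by_global_norm(grads, max_norm):
    # L2 norm over all gradient entries
    total_norm = math.sqrt(sum(g * g for g in grads))
    if total_norm > max_norm:
        scale = max_norm / total_norm  # shrink uniformly, keeping the direction
        return [g * scale for g in grads]
    return list(grads)

print(clip_by_global_norm([3.0, 4.0], max_norm=1.0))  # norm 5 -> rescaled to norm 1
print(clip_by_global_norm([0.3, 0.4], max_norm=1.0))  # norm 0.5 -> unchanged
```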

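3. Learning rate too high:

If the learning rate is set too high, the parameter updates can become very large, causing the loss to blow up in some iterations.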
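The effect can be seen on the simplest possible loss, f(w) = w². Its gradient is 2w, so one gradient-descent step gives w ← w - lr·2w = (1 - 2·lr)·w. For lr > 1 the factor satisfies |1 - 2·lr| > 1, so |w| grows geometrically and the loss eventually overflows to inf. This toy example is only an illustration, not the author's model.

```python
import math

def run_gd(lr, steps=600, w=1.0):
    """Gradient descent on f(w) = w**2; returns the loss after every step."""
    losses = []
    for _ in range(steps):
        grad = 2.0 * w        # f'(w) = 2w
        w = w - lr * grad     # equivalent to w = (1 - 2*lr) * w
        losses.append(w * w)  # float multiplication overflows to inf instead of raising
    return losses

print(run_gd(0.1)[-1])  # factor 0.8: the loss shrinks toward 0
print(run_gd(1.5)[-1])  # factor -2: |w| doubles each step, the loss ends up inf
```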

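4. Model architecture problems:

The model architecture itself may be at fault; for example, the weights of some layers may be set too large or too small, making the computation numerically unstable.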

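5. Initialization problems:

The weight initialization may be inappropriate: if the initial weights are too large or too small, the computation can become numerically unstable.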
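A rough illustration of how the weight scale compounds with depth: push an all-ones vector through a stack of random linear layers (no activations, no bias) and look at the output RMS. With std = 1, each 64-wide layer multiplies the signal by roughly √64 = 8, so after 50 layers it is far beyond the float32 maximum (about 3.4e38) used by default for PyTorch tensors; this float64 demo still holds the value, but float32 would overflow to inf. With std = 1/√fan_in the output stays on the order of 1; for a square layer that scale equals Xavier/Glorot normal initialization (variance 2/(fan_in + fan_out)). Width, depth, and seed are arbitrary demo choices; in PyTorch, `torch.nn.init.xavier_normal_` and `torch.nn.init.kaiming_normal_` provide the standard schemes.

```python
import math
import random

def forward_rms(depth, width, std, seed=0):
    """RMS of the output after `depth` random linear layers, starting from an all-ones input."""
    rng = random.Random(seed)
    x = [1.0] * width  # input RMS is exactly 1
    for _ in range(depth):
        # fresh weight matrix with i.i.d. N(0, std^2) entries per layer; computes y = W @ x
        x = [sum(rng.gauss(0.0, std) * xj for xj in x) for _ in range(width)]
    return math.sqrt(sum(v * v for v in x) / width)

WIDTH, DEPTH = 64, 50
FLOAT32_MAX = 3.4028234663852886e38

too_large = forward_rms(DEPTH, WIDTH, std=1.0)
scaled = forward_rms(DEPTH, WIDTH, std=1.0 / math.sqrt(WIDTH))
print(f"std = 1.0       -> output RMS {too_large:.3e} (exceeds float32 max: {too_large > FLOAT32_MAX})")
print(f"std = 1/sqrt(n) -> output RMS {scaled:.3e}")
```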

6. Input data problems:

The input data may contain outliers or missing values (NaN), which can cause problems during the model's computation.
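A minimal pre-training check for the data problems above, assuming 8-bit grayscale pixels like the 28*28 images in the output log: reject non-finite values, flag out-of-range values, and scale pixels from [0, 255] to [0, 1]. The function name and the fixed 255 divisor are illustrative assumptions; the original post does not show its preprocessing code. With PyTorch tensors, `torch.isfinite(x).all()` performs the same finiteness check.

```python
import math

def check_and_scale(pixels, max_value=255.0):
    # 1) fail fast on NaN / inf, which would otherwise propagate into the loss
    bad = [i for i, v in enumerate(pixels) if not math.isfinite(v)]
    if bad:
        raise ValueError(f"non-finite input values at indices {bad[:10]}")
    # 2) flag values outside the expected pixel range (likely corrupt or mis-parsed data)
    out_of_range = [i for i, v in enumerate(pixels) if not 0.0 <= v <= max_value]
    if out_of_range:
        raise ValueError(f"values outside [0, {max_value}] at indices {out_of_range[:10]}")
    # 3) normalize to [0, 1]
    return [v / max_value for v in pixels]

print(check_and_scale([0.0, 127.5, 255.0]))  # [0.0, 0.5, 1.0]
```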

Output:

Magic number: 2051, number of images: 26843, image size: 28*28

100%|██████████| 26843/26843 [00:00<00:00, 33193.52it/s]

100%|██████████| 26843/26843 [00:00<00:00, 3341972.22it/s]

Magic number: 2049, number of images: 26843

Magic number: 2051, number of images: 2982, image size: 28*28

100%|██████████| 2982/2982 [00:00<00:00, 33162.09it/s]

Magic number: 2049, number of images: 2982

cpu

100%|██████████| 2982/2982 [00:00<00:00, 3351397.25it/s]

training on mps:0

100%|██████████| 210/210 [00:02<00:00, 74.55it/s]

100%|██████████| 24/24 [00:00<00:00, 203.93it/s]

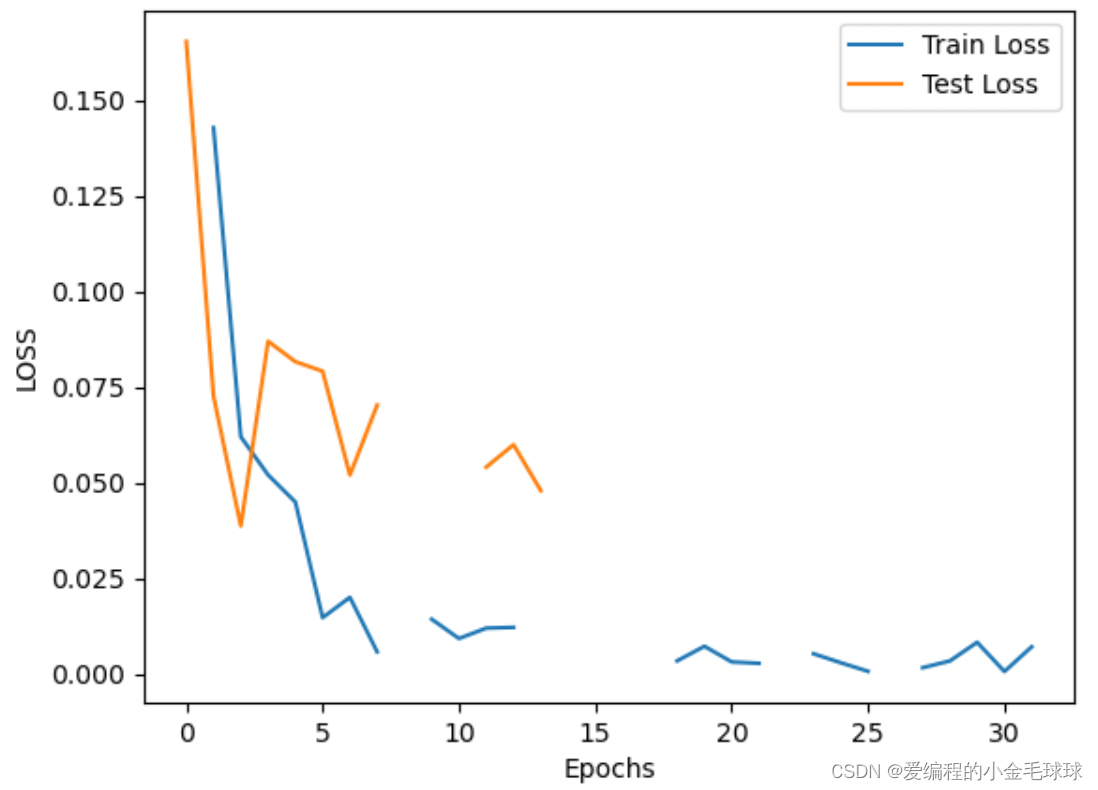

Epoch 1: train_loss inf, train_acc 0.936669, test_acc 0.988263, test_loss 0.165266, time 2.9 sec

100%|██████████| 210/210 [00:02<00:00, 80.01it/s]

100%|██████████| 24/24 [00:00<00:00, 233.16it/s]

Epoch 2: train_loss 0.142747, train_acc 0.985061, test_acc 0.997653, test_loss 0.072634, time 2.7 sec

100%|██████████| 210/210 [00:02<00:00, 79.98it/s]

100%|██████████| 24/24 [00:00<00:00, 236.72it/s]

Epoch 3: train_loss 0.062067, train_acc 0.992773, test_acc 0.997988, test_loss 0.038777, time 2.7 sec

100%|██████████| 210/210 [00:02<00:00, 80.56it/s]

100%|██████████| 24/24 [00:00<00:00, 229.97it/s]

Epoch 4: train_loss 0.052101, train_acc 0.994077, test_acc 0.994299, test_loss 0.086992, time 2.7 sec

100%|██████████| 210/210 [00:02<00:00, 80.91it/s]

100%|██████████| 24/24 [00:00<00:00, 229.63it/s]

Epoch 5: train_loss 0.045025, train_acc 0.994934, test_acc 0.996311, test_loss 0.081664, time 2.7 sec

100%|██████████| 210/210 [00:02<00:00, 80.65it/s]

100%|██████████| 24/24 [00:00<00:00, 233.24it/s]

Epoch 6: train_loss 0.014834, train_acc 0.998175, test_acc 0.996311, test_loss 0.079170, time 2.7 sec

100%|██████████| 210/210 [00:02<00:00, 80.94it/s]

100%|██████████| 24/24 [00:00<00:00, 232.75it/s]

Epoch 7: train_loss 0.020133, train_acc 0.997690, test_acc 0.998994, test_loss 0.052059, time 2.7 sec

100%|██████████| 210/210 [00:02<00:00, 80.86it/s]

100%|██████████| 24/24 [00:00<00:00, 230.38it/s]

Epoch 8: train_loss 0.005914, train_acc 0.999143, test_acc 0.996982, test_loss 0.070334, time 2.7 sec

100%|██████████| 210/210 [00:02<00:00, 80.47it/s]

100%|██████████| 24/24 [00:00<00:00, 231.28it/s]

Epoch 9: train_loss inf, train_acc 0.993034, test_acc 0.996311, test_loss inf, time 2.7 sec

100%|██████████| 210/210 [00:02<00:00, 80.33it/s]

100%|██████████| 24/24 [00:00<00:00, 225.85it/s]

Epoch 10: train_loss 0.014424, train_acc 0.998510, test_acc 0.996647, test_loss 0.083575, time 2.7 sec

100%|██████████| 210/210 [00:02<00:00, 75.68it/s]

100%|██████████| 24/24 [00:00<00:00, 230.67it/s]

Epoch 11: train_loss 0.009379, train_acc 0.998845, test_acc 0.998323, test_loss inf, time 2.9 sec

100%|██████████| 210/210 [00:02<00:00, 80.58it/s]

100%|██████████| 24/24 [00:00<00:00, 236.18it/s]

Epoch 12: train_loss 0.012093, train_acc 0.999329, test_acc 0.998659, test_loss 0.054137, time 2.7 sec

100%|██████████| 210/210 [00:02<00:00, 80.45it/s]

100%|██████████| 24/24 [00:00<00:00, 234.54it/s]

Epoch 13: train_loss 0.012292, train_acc 0.998696, test_acc 0.998994, test_loss 0.059999, time 2.7 sec

100%|██████████| 210/210 [00:02<00:00, 80.10it/s]

100%|██████████| 24/24 [00:00<00:00, 235.41it/s]

Epoch 14: train_loss inf, train_acc 0.998957, test_acc 0.998994, test_loss 0.048007, time 2.7 sec

100%|██████████| 210/210 [00:02<00:00, 80.03it/s]

100%|██████████| 24/24 [00:00<00:00, 234.61it/s]

Epoch 15: train_loss 0.015674, train_acc 0.998361, test_acc 0.997653, test_loss inf, time 2.7 sec

100%|██████████| 210/210 [00:02<00:00, 80.68it/s]

100%|██████████| 24/24 [00:00<00:00, 231.76it/s]

Epoch 16: train_loss inf, train_acc 0.998547, test_acc 0.996647, test_loss inf, time 2.7 sec

100%|██████████| 210/210 [00:02<00:00, 80.11it/s]

100%|██████████| 24/24 [00:00<00:00, 231.54it/s]

Epoch 17: train_loss inf, train_acc 0.983646, test_acc 0.995305, test_loss inf, time 2.7 sec

100%|██████████| 210/210 [00:02<00:00, 78.57it/s]

100%|██████████| 24/24 [00:00<00:00, 231.14it/s]

Epoch 18: train_loss inf, train_acc 0.999031, test_acc 0.997653, test_loss inf, time 2.8 sec

100%|██████████| 210/210 [00:02<00:00, 79.47it/s]

100%|██████████| 24/24 [00:00<00:00, 235.37it/s]

Epoch 19: train_loss 0.003552, train_acc 0.999702, test_acc 0.997653, test_loss inf, time 2.7 sec

100%|██████████| 210/210 [00:02<00:00, 79.77it/s]

100%|██████████| 24/24 [00:00<00:00, 232.33it/s]

Epoch 20: train_loss 0.007358, train_acc 0.999665, test_acc 0.998323, test_loss inf, time 2.7 sec

100%|██████████| 210/210 [00:02<00:00, 80.39it/s]

100%|██████████| 24/24 [00:00<00:00, 229.74it/s]

Epoch 21: train_loss 0.003276, train_acc 0.999739, test_acc 0.998659, test_loss inf, time 2.7 sec

100%|██████████| 210/210 [00:02<00:00, 76.31it/s]

100%|██████████| 24/24 [00:00<00:00, 231.89it/s]

Epoch 22: train_loss 0.002908, train_acc 0.999814, test_acc 0.998323, test_loss inf, time 2.9 sec

100%|██████████| 210/210 [00:02<00:00, 80.48it/s]

100%|██████████| 24/24 [00:00<00:00, 233.76it/s]

Epoch 23: train_loss inf, train_acc 0.999516, test_acc 0.998323, test_loss inf, time 2.7 sec

100%|██████████| 210/210 [00:02<00:00, 79.76it/s]

100%|██████████| 24/24 [00:00<00:00, 235.64it/s]

Epoch 24: train_loss 0.005402, train_acc 0.999739, test_acc 0.997653, test_loss inf, time 2.7 sec

100%|██████████| 210/210 [00:02<00:00, 79.92it/s]

100%|██████████| 24/24 [00:00<00:00, 233.85it/s]

Epoch 25: train_loss 0.003059, train_acc 0.999665, test_acc 0.997988, test_loss inf, time 2.7 sec

100%|██████████| 210/210 [00:02<00:00, 80.26it/s]

100%|██████████| 24/24 [00:00<00:00, 235.02it/s]

Epoch 26: train_loss 0.000807, train_acc 0.999851, test_acc 0.997988, test_loss inf, time 2.7 sec

100%|██████████| 210/210 [00:02<00:00, 79.98it/s]

100%|██████████| 24/24 [00:00<00:00, 232.45it/s]

Epoch 27: train_loss inf, train_acc 0.999776, test_acc 0.998659, test_loss inf, time 2.7 sec

100%|██████████| 210/210 [00:02<00:00, 80.17it/s]

100%|██████████| 24/24 [00:00<00:00, 235.01it/s]

Epoch 28: train_loss 0.001759, train_acc 0.999925, test_acc 0.998323, test_loss inf, time 2.7 sec

100%|██████████| 210/210 [00:02<00:00, 79.67it/s]

100%|██████████| 24/24 [00:00<00:00, 235.77it/s]

Epoch 29: train_loss 0.003535, train_acc 0.999404, test_acc 0.997988, test_loss inf, time 2.7 sec

100%|██████████| 210/210 [00:02<00:00, 79.96it/s]

100%|██████████| 24/24 [00:00<00:00, 232.70it/s]

Epoch 30: train_loss 0.008395, train_acc 0.999553, test_acc 0.997988, test_loss inf, time 2.7 sec

100%|██████████| 210/210 [00:02<00:00, 79.63it/s]

100%|██████████| 24/24 [00:00<00:00, 234.90it/s]

Epoch 31: train_loss 0.000772, train_acc 0.999851, test_acc 0.996982, test_loss inf, time 2.7 sec

100%|██████████| 210/210 [00:02<00:00, 80.36it/s]

100%|██████████| 24/24 [00:00<00:00, 233.44it/s]

Epoch 32: train_loss 0.007225, train_acc 0.999702, test_acc 0.998994, test_loss inf, time 2.7 sec
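In the log above, train_loss or test_loss shows inf in several epochs while the corresponding accuracy stays high (above 0.93 in every such epoch). That pattern is consistent with a few batches, or even a single sample, producing inf: when the epoch loss is an average of batch losses, one inf makes the whole average inf even though the other batches are fine. A hedged diagnostic sketch, assuming the training loop collects one float loss per batch (`summarize_epoch` is a hypothetical helper, not from the original code), counts those batches instead of letting them hide the rest:

```python
import math

def summarize_epoch(batch_losses):
    """Return (mean of the finite batch losses, number of non-finite batches)."""
    finite = [x for x in batch_losses if math.isfinite(x)]
    n_bad = len(batch_losses) - len(finite)
    mean = sum(finite) / len(finite) if finite else math.nan
    return mean, n_bad

losses = [0.25, 0.75, math.inf]  # one bad batch out of three
print(sum(losses) / len(losses))  # inf: a single inf dominates the plain average
print(summarize_epoch(losses))    # (0.5, 1)
```

Counting non-finite batches only makes the problem visible; the underlying cause still has to be removed, for example by computing the loss from logits with a numerically stable function. In PyTorch, checking `torch.isfinite(loss)` before `loss.backward()` is one way to catch the offending batch.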

Source: https://blog.csdn.net/XreqcxoKiss/article/details/134970172

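This article was submitted by an Internet user; its views represent only the author and not the position of this site. This site only provides information storage space, does not own the copyright, and bears no related legal liability. If the content infringes rights, breaks the law, or is factually incorrect, please send a complaint to the 我的编程经验分享网 ("My Programming Experience Sharing" site) mailbox veading@qq.com; once verified, it will be removed immediately.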