6. Service in Detail


6.1 Service介绍

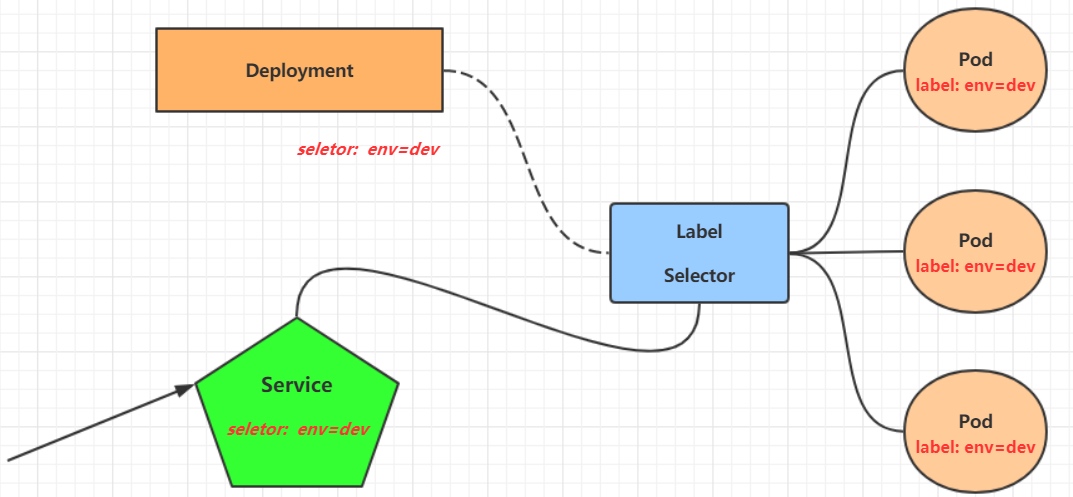

在kubernetes中,pod是应用程序的载体,我们可以通过pod的ip来访问应用程序,但是pod的ip地址不是固定的,这也就意味着不方便直接采用pod的ip对服务进行访问。

为了解决这个问题,kubernetes提供了Service资源,Service会对提供同一个服务的多个pod进行聚合,并且提供一个统一的入口地址。通过访问Service的入口地址就能访问到后面的pod服务。

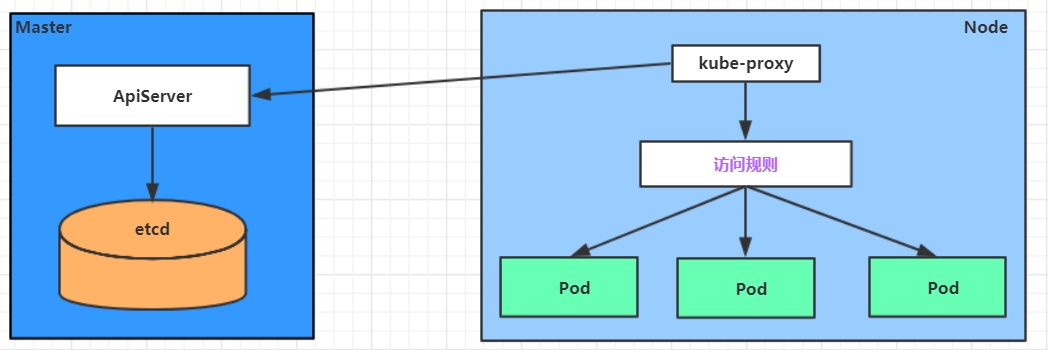

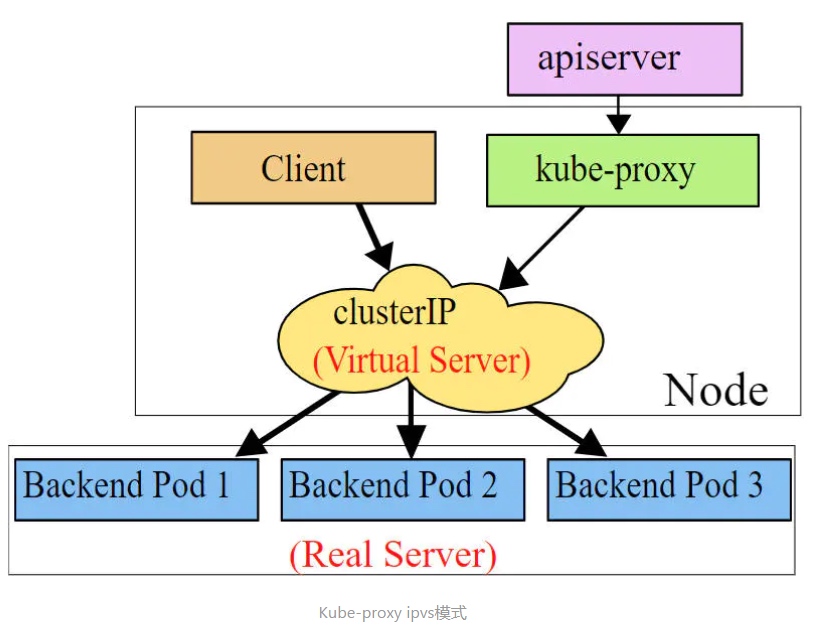

Service在很多情况下只是一个概念,真正起作用的其实是kube-proxy服务进程,每个Node节点上都运行着一个kube-proxy服务进程。当创建Service的时候会通过api-server向etcd写入创建的service的信息,而kube-proxy会基于监听的机制发现这种Service的变动,然后它会将最新的Service信息转换成对应的访问规则。

```
# 10.97.97.97:80 is the entry point provided by the Service.
# Behind this entry point, three Pods are waiting to serve requests;
# kube-proxy dispatches each request to one of them using the rr (round-robin) policy.
# These rules are generated on every node in the cluster, so the Service can be reached from any node.
[root@node1 ~]# ipvsadm -Ln
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port Forward Weight ActiveConn InActConn
TCP 10.97.97.97:80 rr
  -> 10.244.1.39:80 Masq 1 0 0
  -> 10.244.1.40:80 Masq 1 0 0
  -> 10.244.2.33:80 Masq 1 0 0
```

kube-proxy目前支持三种工作模式:

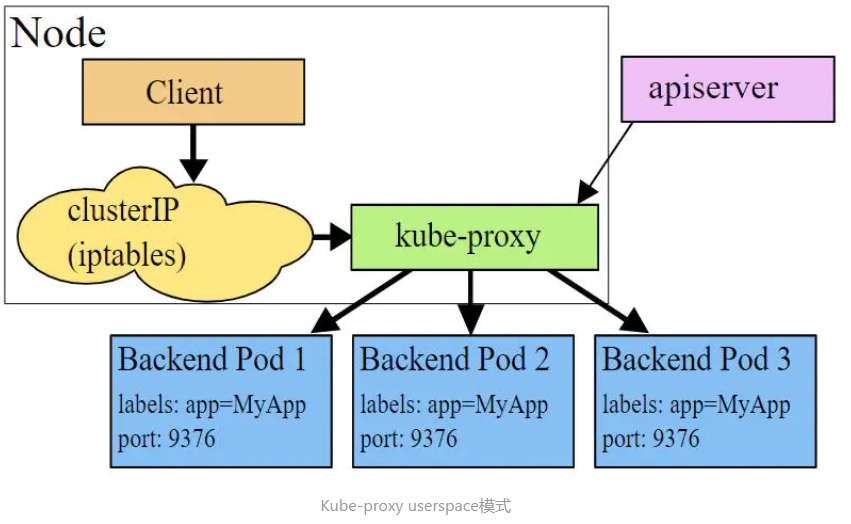

userspace 模式

userspace模式下,kube-proxy会为每一个Service创建一个监听端口,发向Cluster IP的请求被Iptables规则重定向到kube-proxy监听的端口上,kube-proxy根据LB算法选择一个提供服务的Pod并和其建立链接,以将请求转发到Pod上。 该模式下,kube-proxy充当了一个四层负责均衡器的角色。由于kube-proxy运行在userspace中,在进行转发处理时会增加内核和用户空间之间的数据拷贝,虽然比较稳定,但是效率比较低。

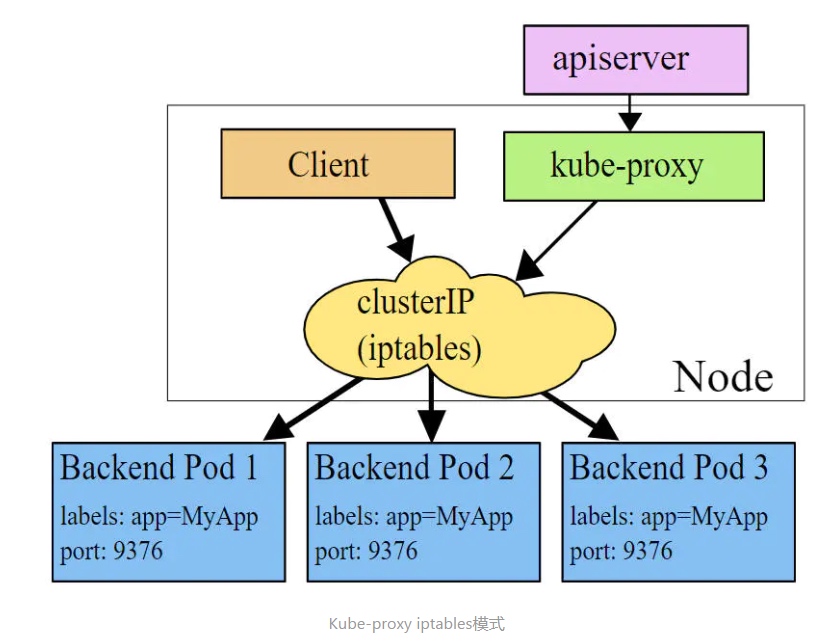

iptables 模式

iptables模式下,kube-proxy为service后端的每个Pod创建对应的iptables规则,直接将发向Cluster IP的请求重定向到一个Pod IP。 该模式下kube-proxy不承担四层负责均衡器的角色,只负责创建iptables规则。该模式的优点是较userspace模式效率更高,但不能提供灵活的LB策略,当后端Pod不可用时也无法进行重试。

ipvs 模式

ipvs模式和iptables类似,kube-proxy监控Pod的变化并创建相应的ipvs规则。ipvs相对iptables转发效率更高。除此以外,ipvs支持更多的LB算法。

```
# This mode requires the IPVS kernel modules; otherwise kube-proxy falls back to iptables.
# cm is the short name of ConfigMap
[root@k8s-master inventory]# kubectl api-resources|grep cm
configmaps cm v1 true ConfigMap

# Enable ipvs: in the editor, change the setting to mode: "ipvs"
[root@k8s-master inventory]# kubectl edit configmaps kube-proxy -n kube-system
configmap/kube-proxy edited

# Check the label of the kube-proxy Pods: k8s-app=kube-proxy
[root@k8s-master kubernetes]# kubectl get pods -n kube-system --show-labels|grep k8s-app=kube-proxy
kube-proxy-n28gm 1/1 Running 0 5m52s controller-revision-hash=65cf5dddb9,k8s-app=kube-proxy,pod-template-generation=1
kube-proxy-q6clr 1/1 Running 0 5m52s controller-revision-hash=65cf5dddb9,k8s-app=kube-proxy,pod-template-generation=1
kube-proxy-vvkbn 1/1 Running 0 5m53s controller-revision-hash=65cf5dddb9,k8s-app=kube-proxy,pod-template-generation=1

# Delete the kube-proxy Pods so that they are re-created with the new configuration
[root@k8s-master kubernetes]# kubectl delete pod -l k8s-app=kube-proxy -n kube-system
pod "kube-proxy-n28gm" deleted
pod "kube-proxy-q6clr" deleted
pod "kube-proxy-vvkbn" deleted

# Install ipvsadm on a node and list the IPVS rules
[root@k8s-node1 ~]# yum -y install ipvsadm-1.31-1.el8.x86_64
[root@k8s-node1 kubernetes]# ipvsadm -Ln
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port Forward Weight ActiveConn InActConn
TCP 172.17.0.1:32149 rr
TCP 192.168.207.11:32149 rr
TCP 10.96.0.1:443 rr
  -> 192.168.207.10:6443 Masq 1 1 0
TCP 10.96.0.10:53 rr
TCP 10.96.0.10:9153 rr
TCP 10.110.182.125:80 rr
TCP 10.244.1.0:32149 rr
UDP 10.96.0.10:53 rr
```
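For reference, the `kubectl edit` step above changes a single field inside the kube-proxy ConfigMap. The trimmed sketch below assumes a kubeadm-installed cluster, where the ConfigMap stores a KubeProxyConfiguration under the `config.conf` key. Other installers may lay it out differently, and all unrelated fields are omitted. kube-proxy runs as a DaemonSet, so deleting its Pods makes the DaemonSet re-create them, and the new Pods pick up the new mode.

```yaml
# Trimmed sketch of the kube-proxy ConfigMap (kubeadm layout assumed);
# only the fields related to the proxy mode are shown.
apiVersion: v1
kind: ConfigMap
metadata:
  name: kube-proxy
  namespace: kube-system
data:
  config.conf: |-
    apiVersion: kubeproxy.config.k8s.io/v1alpha1
    kind: KubeProxyConfiguration
    ipvs:
      scheduler: ""   # empty selects the default IPVS scheduler, rr (round robin)
    mode: "ipvs"      # previously "", which on Linux means the default iptables mode
```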

6.2 Service Types

The Service resource manifest:

```yaml
kind: Service            # resource type
apiVersion: v1           # resource version
metadata:                # metadata
  name: service          # resource name
  namespace: dev         # namespace
spec:                    # specification
  selector:              # label selector: determines which Pods this Service proxies
    app: nginx
  type:                  # Service type: how the Service is accessed
  clusterIP:             # IP address of the virtual service
  sessionAffinity:       # session affinity: ClientIP or None
  ports:                 # port information
  - protocol: TCP
    port: 3017           # Service port
    targetPort: 5003     # Pod port
    nodePort: 31122      # node (host) port
```

- ClusterIP:默认值,它是Kubernetes系统自动分配的虚拟IP,只能在集群内部访问

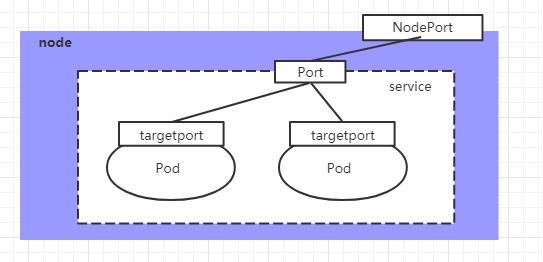

- NodePort:将Service通过指定的Node上的端口暴露给外部,通过此方法,就可以在集群外部访问服务

- LoadBalancer:使用外接负载均衡器完成到服务的负载分发,注意此模式需要外部云环境支持

- ExternalName: 把集群外部的服务引入集群内部,直接使用

6.3 Service使用

6.3.1 实验环境准备

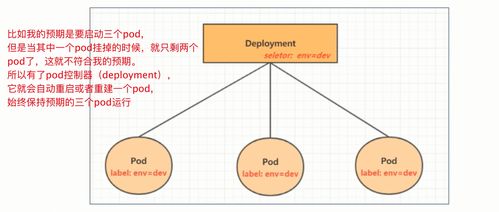

在使用service之前,首先利用Deployment创建出3个pod,注意要为pod设置app=nginx-pod的标签

创建deployment.yaml,内容如下:

```
[root@k8s-master inventory]# vi deployment.yaml
[root@k8s-master inventory]# cat deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: pc-deployment
  namespace: dev
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx-pod
  template:
    metadata:
      labels:
        app: nginx-pod
    spec:
      containers:
      - name: nginx
        image: nginx:1.17.1
        ports:
        - containerPort: 80
[root@k8s-master inventory]# kubectl apply -f deployment.yaml
deployment.apps/pc-deployment created

# View the Deployment and Pod details
[root@k8s-master inventory]# kubectl get -f deployment.yaml
NAME READY UP-TO-DATE AVAILABLE AGE
pc-deployment 3/3 3 3 7s
[root@k8s-master inventory]# kubectl get -f deployment.yaml -o wide
NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR
pc-deployment 3/3 3 3 12s nginx nginx:1.17.1 app=nginx-pod
[root@k8s-master inventory]# kubectl get pods -n dev -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
pc-deployment-6fdb77cb4-4c5zq 1/1 Running 0 16s 10.244.2.42 k8s-node2 <none> <none>
pc-deployment-6fdb77cb4-4prlq 1/1 Running 0 16s 10.244.1.32 k8s-node1 <none> <none>
pc-deployment-6fdb77cb4-jq776 1/1 Running 0 16s 10.244.2.43 k8s-node2 <none> <none>
```

```
# To make later tests easier, change the page served by each of the three nginx Pods
[root@k8s-master inventory]# kubectl exec -itn dev pc-deployment-6fdb77cb4-4c5zq -- /bin/sh
# echo "page 111" > /usr/share/nginx/html/index.html
# exit
[root@k8s-master inventory]# kubectl exec -itn dev pc-deployment-6fdb77cb4-4prlq -- /bin/sh
# echo "page 222" > /usr/share/nginx/html/index.html
# exit
[root@k8s-master inventory]# kubectl exec -itn dev pc-deployment-6fdb77cb4-jq776 -- /bin/sh
# echo "page 333" > /usr/share/nginx/html/index.html
# exit

# Test
[root@k8s-master ~]# curl 10.244.2.42
page 111
[root@k8s-master ~]# curl 10.244.1.32
page 222
[root@k8s-master ~]# curl 10.244.2.43
page 333
```

6.3.2 ClusterIP Services

Create the file service-clusterip.yaml:

```
[root@k8s-master inventory]# vi service-clusterip.yaml
[root@k8s-master inventory]# cat service-clusterip.yaml
apiVersion: v1
kind: Service
metadata:
  name: service-clusterip
  namespace: dev
spec:
  selector:
    app: nginx-pod
  type: ClusterIP
  ports:
  - port: 80
    targetPort: 80
[root@k8s-master inventory]# kubectl apply -f service-clusterip.yaml
service/service-clusterip created
```

```
# View the Service
[root@k8s-master inventory]# kubectl get -f service-clusterip.yaml
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
service-clusterip ClusterIP 10.110.199.147 <none> 80/TCP 5s
[root@k8s-master inventory]# kubectl get svc -n dev -o wide
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR
nginx NodePort 10.110.182.125 <none> 80:32149/TCP 5d22h app=nginx-pod
service-clusterip ClusterIP 10.110.199.147 <none> 80/TCP 9s app=nginx-pod

# View the Service details
# The Endpoints list shows the backend entries this Service can load-balance to
[root@k8s-master inventory]# kubectl describe svc service-clusterip -n dev
Name: service-clusterip
Namespace: dev
Labels: <none>
Annotations: <none>
Selector: app=nginx-pod
Type: ClusterIP
IP Family Policy: SingleStack
IP Families: IPv4
IP: 10.110.199.147
IPs: 10.110.199.147
Port: <unset> 80/TCP
TargetPort: 80/TCP
Endpoints: 10.244.1.32:80,10.244.2.42:80,10.244.2.43:80
Session Affinity: None
Events: <none>
```

```
# View the IPVS mapping rules
[root@k8s-master inventory]# ipvsadm -Ln
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port Forward Weight ActiveConn InActConn
TCP 172.17.0.1:32149 rr
  -> 10.244.1.32:80 Masq 1 0 0
  -> 10.244.2.42:80 Masq 1 0 0
  -> 10.244.2.43:80 Masq 1 0 0
TCP 192.168.207.10:32149 rr
  -> 10.244.1.32:80 Masq 1 0 0
  -> 10.244.2.42:80 Masq 1 0 0
  -> 10.244.2.43:80 Masq 1 0 0
TCP 10.96.0.1:443 rr
  -> 192.168.207.10:6443 Masq 1 3 0
TCP 10.96.0.10:53 rr
  -> 10.244.0.4:53 Masq 1 0 0
  -> 10.244.0.5:53 Masq 1 0 0
TCP 10.96.0.10:9153 rr
  -> 10.244.0.4:9153 Masq 1 0 0
  -> 10.244.0.5:9153 Masq 1 0 0
TCP 10.110.182.125:80 rr
  -> 10.244.1.32:80 Masq 1 0 0
  -> 10.244.2.42:80 Masq 1 0 0
  -> 10.244.2.43:80 Masq 1 0 0
TCP 10.110.199.147:80 rr
  -> 10.244.1.32:80 Masq 1 0 0
  -> 10.244.2.42:80 Masq 1 0 0
  -> 10.244.2.43:80 Masq 1 0 0
TCP 10.244.0.0:32149 rr
  -> 10.244.1.32:80 Masq 1 0 0
  -> 10.244.2.42:80 Masq 1 0 0
  -> 10.244.2.43:80 Masq 1 0 0
TCP 10.244.0.1:32149 rr
  -> 10.244.1.32:80 Masq 1 0 0
  -> 10.244.2.42:80 Masq 1 0 0
  -> 10.244.2.43:80 Masq 1 0 0
UDP 10.96.0.10:53 rr
  -> 10.244.0.4:53 Masq 1 0 0
  -> 10.244.0.5:53 Masq 1 0 0
```

```
# Access 10.110.199.147 and observe the result
[root@k8s-master inventory]# kubectl get svc -n dev -o wide
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR
nginx NodePort 10.110.182.125 <none> 80:32149/TCP 5d22h app=nginx-pod
service-clusterip ClusterIP 10.110.199.147 <none> 80/TCP 48s app=nginx-pod
[root@k8s-master inventory]# curl 10.110.199.147
page 333
[root@k8s-master inventory]# curl 10.110.199.147
page 111
[root@k8s-master inventory]# curl 10.110.199.147
page 222
[root@k8s-master inventory]# curl 10.110.199.147
page 333
[root@k8s-master inventory]# curl 10.110.199.147
page 111
[root@k8s-master inventory]# curl 10.110.199.147
page 222
[root@k8s-master inventory]# curl 10.110.199.147
page 333
```

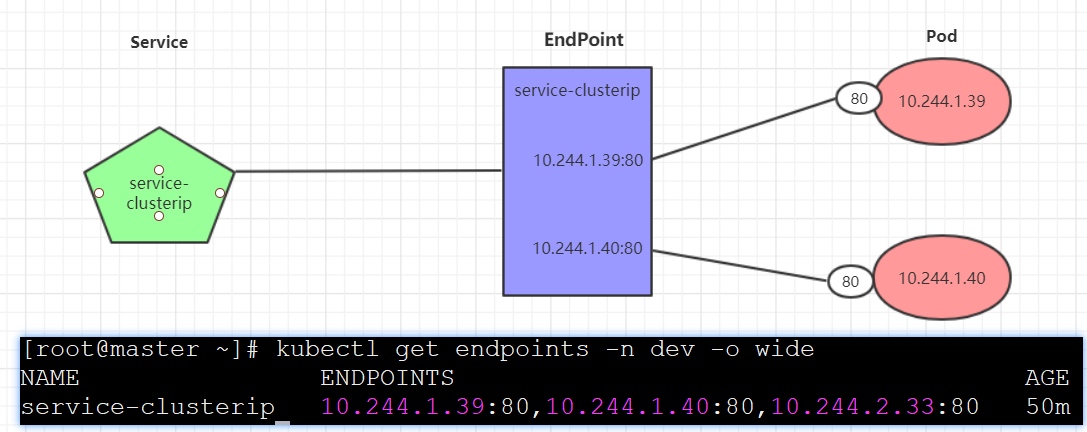

Endpoint

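Endpoint is a Kubernetes resource object, stored in etcd, that records the access addresses of all Pods behind a Service. It is generated from the selector in the Service's configuration.

A Service fronts a group of Pods, and these Pods are exposed through Endpoints, the set of endpoints that actually implement the service. In other words, the link between a Service and its Pods is made through Endpoints.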

```
[root@k8s-master inventory]# kubectl get endpoints -n dev -o wide
NAME ENDPOINTS AGE
service-clusterip 10.244.1.32:80,10.244.2.42:80,10.244.2.43:80 3m41s
```
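The sketch below reconstructs, from the kubectl output above, roughly what the Endpoints object behind service-clusterip contains. It is trimmed: the real object, viewable with `kubectl get endpoints service-clusterip -n dev -o yaml`, also carries fields such as nodeName and targetRef for each address. Note that the Endpoints object has the same name as the Service it belongs to.

```yaml
# Trimmed sketch of the Endpoints object generated for service-clusterip.
apiVersion: v1
kind: Endpoints
metadata:
  name: service-clusterip      # same name as the Service
  namespace: dev
subsets:
- addresses:                   # ready Pods matching the selector app=nginx-pod
  - ip: 10.244.1.32
  - ip: 10.244.2.42
  - ip: 10.244.2.43
  ports:
  - port: 80                   # the Service's targetPort
    protocol: TCP
```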

Load distribution policy

Requests to a Service are distributed to the backend Pods. Kubernetes currently provides two load distribution policies:

- If no policy is defined, kube-proxy's default policy is used, such as random or round-robin selection.

- Session persistence based on the client address: all requests from the same client are forwarded to the same Pod. This mode is enabled by adding the option sessionAffinity: ClientIP to the spec.

```
# View the IPVS mapping rules (rr = round robin); grep -A 3 also prints the 3 lines after each match
[root@k8s-master inventory]# ipvsadm -Ln|grep -A 3 '10.110.199.147'
TCP 10.110.199.147:80 rr
  -> 10.244.1.32:80 Masq 1 0 0
  -> 10.244.2.42:80 Masq 1 0 0
  -> 10.244.2.43:80 Masq 1 0 0

# Access the Service in a loop
[root@k8s-master inventory]# while true;do curl 10.110.199.147:80; sleep 2; done;
page 111
page 222
page 333
page 111
page 222
page 333
page 111
page 222
page 333
page 111
^C
[root@k8s-master inventory]# kubectl delete -f service-clusterip.yaml
service "service-clusterip" deleted

# Change the distribution policy: sessionAffinity: ClientIP
[root@k8s-master inventory]# vi service-clusterip.yaml
[root@k8s-master inventory]# cat service-clusterip.yaml
apiVersion: v1
kind: Service
metadata:
  name: service-clusterip
  namespace: dev
spec:
  sessionAffinity: ClientIP
  selector:
    app: nginx-pod
  type: ClusterIP
  ports:
  - port: 80          # Service port
    targetPort: 80    # Pod port
[root@k8s-master inventory]# kubectl apply -f service-clusterip.yaml
service/service-clusterip created
[root@k8s-master inventory]#
[root@k8s-master inventory]# kubectl get svc -n dev
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
service-clusterip ClusterIP 10.111.115.232 <none> 80/TCP 12s

# View the IPVS rules ("persistent" marks the rule as sticky)
[root@k8s-master inventory]# ipvsadm -Ln|grep -A 3 '10.111.115.232'
TCP 10.111.115.232:80 rr persistent 10800
  -> 10.244.1.32:80 Masq 1 0 0
  -> 10.244.2.42:80 Masq 1 0 0
  -> 10.244.2.43:80 Masq 1 0 0

# Access the Service in a loop
[root@k8s-master inventory]# while true;do curl 10.111.115.232; sleep 2; done;
page 333
page 333
page 333
[root@k8s-master inventory]# kubectl delete -f service-clusterip.yaml
service "service-clusterip" deleted
```
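The `persistent 10800` in the IPVS output matches the default ClientIP affinity timeout of 10800 seconds (3 hours). The Service API exposes this value as `spec.sessionAffinityConfig.clientIP.timeoutSeconds`, which accepts values up to 86400. Below is a sketch of the same Service with a shorter timeout. The value 60 is purely illustrative, and in IPVS mode the persistence timeout would be expected to follow it.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: service-clusterip
  namespace: dev
spec:
  selector:
    app: nginx-pod
  type: ClusterIP
  sessionAffinity: ClientIP
  sessionAffinityConfig:
    clientIP:
      timeoutSeconds: 60   # illustrative value; the default is 10800 (3 hours)
  ports:
  - port: 80               # Service port
    targetPort: 80         # Pod port
```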

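6.3.3 Headless Services

- In some scenarios, developers may not want the load balancing that a Service provides and prefer to control the load-balancing policy themselves. For this case Kubernetes provides the headless Service. A headless Service is not assigned a Cluster IP, so it can only be reached by querying its domain name.

- Resolving the DNS name of a headless Service returns the addresses of all of its Pods, as well as their per-Pod DNS names (only Pods deployed by a StatefulSet get such DNS names).

For an ordinary Service, resolving its DNS name returns only the Service's ClusterIP.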

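6.3.3.1 Differences between Deployment and StatefulSet

Deployment and StatefulSet are two commonly used ways of deploying applications in Kubernetes. They differ in their characteristics and use cases.

Deployment is one of the most common ways to deploy applications in Kubernetes. A Deployment deploys and manages stateless applications and relies on ReplicaSets for scaling, rolling updates, and rollbacks. A Deployment does not need to maintain stable network identifiers or persistent storage volumes, and each Pod is an independent, interchangeable entity. When a Pod fails or the application needs to scale, the Deployment automatically creates or removes Pods.

By contrast, StatefulSet is suited to deploying and managing stateful applications. A StatefulSet guarantees stable identifiers and ordered deployment, scale-up, and scale-down. Each Pod in a StatefulSet has a unique network identifier and can have its own persistent storage volume (through volumeClaimTemplates), and it keeps the same identifier and data even when it is re-created. This matters for applications that depend on stable identity and persistent state, such as databases or distributed storage systems.

Deployments and StatefulSets also differ in how they update. A Deployment performs rolling updates, gradually creating new Pods and removing old ones to keep the application available. A StatefulSet creates and deletes Pods in a defined order, which gives ordered upgrades and rollbacks and helps keep data consistent.

In short, Deployment fits stateless applications and StatefulSet fits stateful ones. Choosing the workload type that matches the application's needs lets you make the most of what Kubernetes offers.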

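6.3.3.2 StatefulSet vs. Deployment

StatefulSet and Deployment are two commonly used Kubernetes resource objects. They have different characteristics and purposes when it comes to deploying and managing applications.

Deployment is a resource object for managing stateless applications. Stateless means the application's instances do not depend on one another and can be freely created, deleted, and replaced. A Deployment uses a ReplicaSet to provide scaling and high availability. To scale an application horizontally or roll out an update, you simply change the Deployment's replica count or image version, and Kubernetes handles creating and deleting the instances.

StatefulSet is a resource object for managing stateful applications. Stateful means the instances depend on one another to some degree and need ordered, unique names and network identities. A StatefulSet achieves this through ordered Pod naming and persistent storage. When a stateful application is scaled up, scaled down, or updated, the StatefulSet preserves the order and uniqueness of the instances, which keeps data consistent and reliable.

To summarize: Deployment suits stateless applications and supports horizontal scaling and rolling updates, while StatefulSet suits stateful applications and guarantees the uniqueness and ordering of instances, keeping data consistent and reliable. Choosing the resource object that matches the nature and needs of the application makes its deployment easier to manage and maintain.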

```
# Create statefulset.yaml
[root@k8s-master inventory]# cat statefulset.yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: pc-deployment
  namespace: dev
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx-pod
  template:
    metadata:
      labels:
        app: nginx-pod
    spec:
      containers:
      - name: b1
        image: busybox:latest
        command: ["/bin/sleep","6000"]
        ports:
        - containerPort: 80
```

```
[root@k8s-master inventory]# kubectl apply -f statefulset.yaml
statefulset.apps/pc-deployment created
[root@k8s-master inventory]# kubectl get -f statefulset.yaml -o wide
NAME READY AGE CONTAINERS IMAGES
pc-deployment 3/3 21s b1 busybox:latest
[root@k8s-master inventory]# kubectl get pods -n dev
NAME READY STATUS RESTARTS AGE
pc-deployment-0 1/1 Running 0 25s
pc-deployment-1 1/1 Running 0 15s
pc-deployment-2 1/1 Running 0 11s
```

```
# Create service-headless.yaml
[root@k8s-master inventory]# cat service-headless.yaml
apiVersion: v1
kind: Service
metadata:
  name: service-headliness
  namespace: dev
spec:
  selector:
    app: nginx-pod
  clusterIP: None   # setting clusterIP to None creates a headless Service; no cluster IP is allocated
  type: ClusterIP
  ports:
  - port: 80
    targetPort: 80
[root@k8s-master inventory]# kubectl apply -f service-headless.yaml
service/service-headliness created
```

```
# Get the Service: CLUSTER-IP has not been allocated
[root@k8s-master inventory]# kubectl get svc service-headliness -n dev -o wide
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR
service-headliness ClusterIP None <none> 80/TCP 27m app=nginx-pod
[root@k8s-master inventory]# kubectl get -f service-headless.yaml
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
service-headliness ClusterIP None <none> 80/TCP 28m
```

```
# View the Service details
[root@k8s-master inventory]# kubectl describe svc service-headliness -n dev
Name: service-headliness
Namespace: dev
Labels: <none>
Annotations: <none>
Selector: app=nginx-pod
Type: ClusterIP
IP Family Policy: SingleStack
IP Families: IPv4
IP: None
IPs: None
Port: <unset> 80/TCP
TargetPort: 80/TCP
Endpoints: 10.244.1.36:80,10.244.1.37:80,10.244.2.48:80
Session Affinity: None
Events: <none>
```

```
# Check how the Service's domain name resolves
[root@k8s-master inventory]# kubectl get pods -n dev
NAME READY STATUS RESTARTS AGE
pc-deployment-0 1/1 Running 0 36s
pc-deployment-1 1/1 Running 0 35s
pc-deployment-2 1/1 Running 0 33s
[root@k8s-master inventory]# kubectl exec -itn dev pc-deployment-0 -- /bin/sh
/ #
/ # cat /etc/resolv.conf
search dev.svc.cluster.local svc.cluster.local cluster.local
nameserver 10.96.0.10
options ndots:5
/ # nslookup service-headliness.dev.svc.cluster.local
Server: 10.96.0.10
Address: 10.96.0.10:53

Name: service-headliness.dev.svc.cluster.local
Address: 10.244.1.36

Name: service-headliness.dev.svc.cluster.local
Address: 10.244.1.37

Name: service-headliness.dev.svc.cluster.local
Address: 10.244.2.48
```
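The lookup above goes through the Service name only. Per-Pod DNS records, mentioned at the start of this section, are created only when the StatefulSet's `spec.serviceName` names the headless Service, and the statefulset.yaml used here does not set that field. Below is a sketch of the same StatefulSet with the field added. It is an illustration, not part of the original walkthrough.

```yaml
# Sketch: statefulset.yaml from above with spec.serviceName added so that
# each Pod also gets its own DNS record through the headless Service.
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: pc-deployment
  namespace: dev
spec:
  serviceName: service-headliness   # name of the governing headless Service
  replicas: 3
  selector:
    matchLabels:
      app: nginx-pod
  template:
    metadata:
      labels:
        app: nginx-pod
    spec:
      containers:
      - name: b1
        image: busybox:latest
        command: ["/bin/sleep","6000"]
        ports:
        - containerPort: 80
```

With this in place, a name such as pc-deployment-0.service-headliness.dev.svc.cluster.local should resolve to the IP of that single Pod. serviceName cannot be changed on an existing StatefulSet, so the StatefulSet would have to be deleted and re-created.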

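6.3.4 NodePort Services

In the previous examples, the Service's IP address is reachable only from inside the cluster. To expose a Service to clients outside the cluster, use another Service type called NodePort. NodePort works by mapping the Service's port to a port on every Node, so that the Service can be accessed at NodeIP:NodePort.

Create service-nodeport.yaml: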

```
[root@k8s-master inventory]# cat service-nodeport.yaml
apiVersion: v1
kind: Service
metadata:
  name: service-nodeport
  namespace: dev
spec:
  selector:
    app: nginx-pod
  type: NodePort
  ports:
  - port: 80
    nodePort: 30002   # node port; must lie in the node port range (default 30000-32767)
    targetPort: 80
```

```
# Create and view the Service
[root@k8s-master inventory]# kubectl apply -f service-nodeport.yaml
service/service-nodeport created
[root@k8s-master inventory]# kubectl get -f service-nodeport.yaml
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
service-nodeport NodePort 10.104.111.160 <none> 80:30002/TCP 11s
[root@k8s-master inventory]# kubectl get -f service-nodeport.yaml -o wide
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR
service-nodeport NodePort 10.104.111.160 <none> 80:30002/TCP 16s app=nginx-pod
```

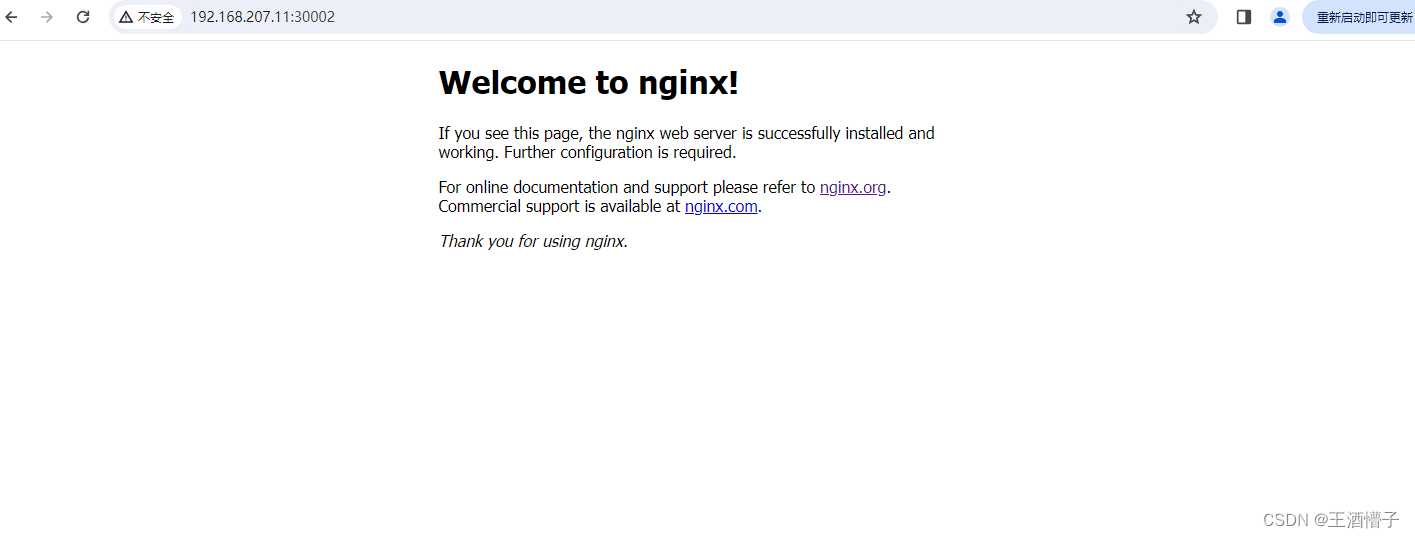

Next, open a browser on your workstation and visit port 30002 on the IP of any node in the cluster to reach the Pods.

6.3.5 LoadBalancer Services

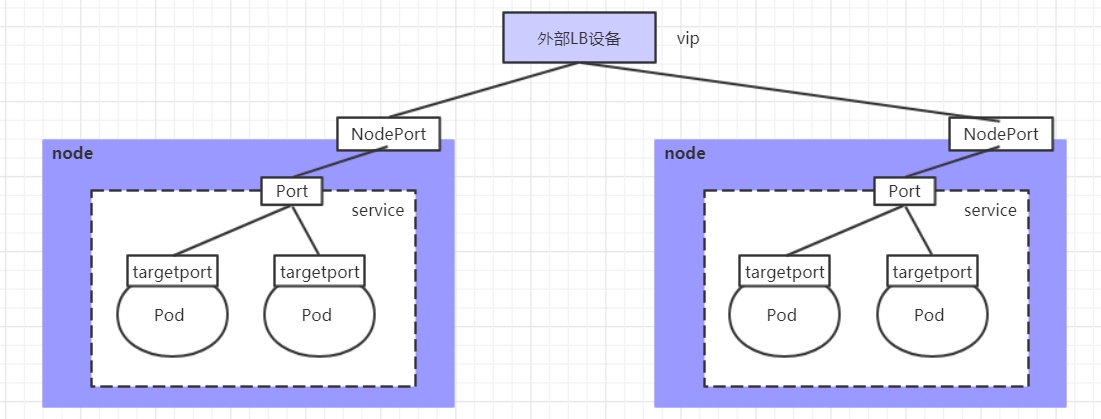

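LoadBalancer is very similar to NodePort, since both expose a port to the outside. The difference is that LoadBalancer also places a load-balancing device outside the cluster, and that device must be provided by the external environment. Requests that external clients send to this device are load-balanced by it and then forwarded into the cluster.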

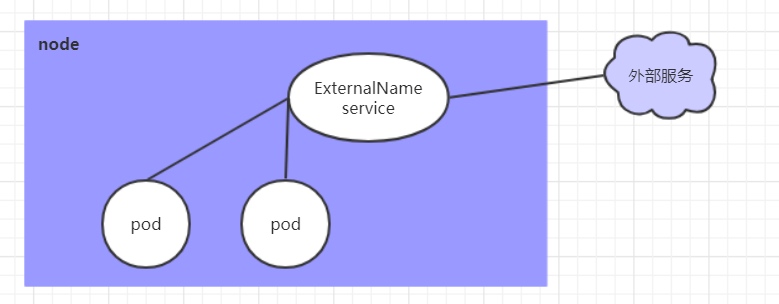
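A LoadBalancer Service is declared like the NodePort one, with only the type changed. The sketch below is hypothetical: the name service-loadbalancer is made up, and it reuses the app=nginx-pod Pods. It assumes the cluster actually has a load-balancer implementation, such as a cloud provider integration. Without one, the Service is still created, but its EXTERNAL-IP stays `<pending>`. Kubernetes still gives the Service a cluster IP and, by default, a node port, which is why it behaves like an extension of NodePort.

```yaml
# Hypothetical LoadBalancer Service; requires an external load-balancer implementation.
apiVersion: v1
kind: Service
metadata:
  name: service-loadbalancer   # hypothetical name
  namespace: dev
spec:
  selector:
    app: nginx-pod
  type: LoadBalancer
  ports:
  - port: 80          # port exposed by the load balancer and the Service
    targetPort: 80    # Pod port
```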

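6.3.6 ExternalName Services

An ExternalName Service brings a service from outside the cluster into it. The externalName field specifies the address of an external service, and accessing this Service from inside the cluster then reaches that external service. It is implemented as a DNS CNAME record, as the nslookup output below shows.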

```
[root@k8s-master inventory]# cat service-externalname.yaml
apiVersion: v1
kind: Service
metadata:
  name: service-externalname
  namespace: dev
spec:
  type: ExternalName
  externalName: www.baidu.com
[root@k8s-master inventory]# kubectl apply -f service-externalname.yaml
service/service-externalname created
[root@k8s-master inventory]# kubectl get -f service-externalname.yaml -o wide
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR
service-externalname ExternalName <none> www.baidu.com <none> 11s <none>
```

```
[root@k8s-master inventory]# kubectl exec -itn dev pc-deployment-0 -- /bin/sh
/ #
/ # cat /etc/resolv.conf
search dev.svc.cluster.local svc.cluster.local cluster.local
nameserver 10.96.0.10
options ndots:5
/ # nslookup service-externalname.dev.svc.cluster.local
Server: 10.96.0.10
Address: 10.96.0.10:53

service-externalname.dev.svc.cluster.local canonical name = www.baidu.com
www.baidu.com canonical name = www.a.shifen.com
Name: www.a.shifen.com
Address: 183.2.172.185
Name: www.a.shifen.com
Address: 183.2.172.42

service-externalname.dev.svc.cluster.local canonical name = www.baidu.com
www.baidu.com canonical name = www.a.shifen.com
Name: www.a.shifen.com
Address: 240e:ff:e020:966:0:ff:b042:f296
Name: www.a.shifen.com
Address: 240e:ff:e020:9ae:0:ff:b014:8e8b
```

6.4 Introduction to Ingress

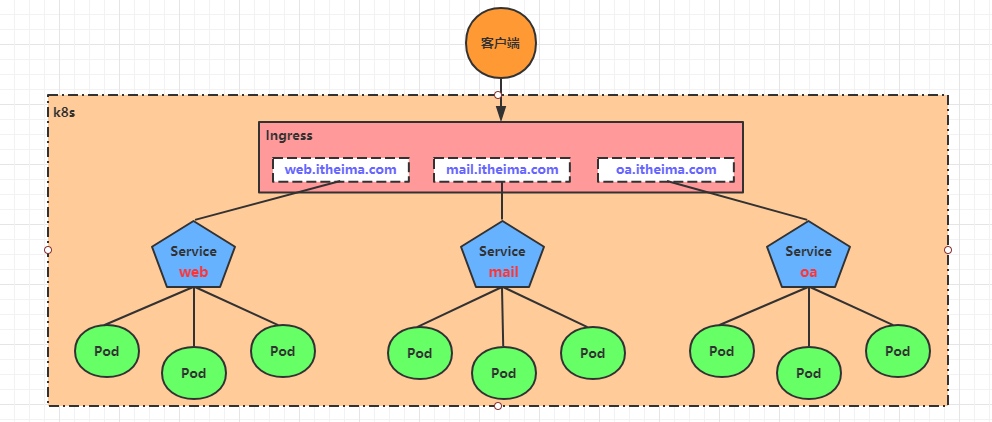

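As mentioned earlier, a Service has two main ways of exposing services outside the cluster: NodePort and LoadBalancer. Both have drawbacks:

- NodePort occupies many ports on the cluster machines, and this becomes more noticeable as the number of Services grows.

- LB (LoadBalancer) needs a separate LB for every Service, which is wasteful and cumbersome, and it requires devices outside of Kubernetes.

To address this, Kubernetes provides the Ingress resource object. Ingress needs only one NodePort or one LB to expose many Services. The working mechanism is roughly as illustrated in the figure.

In effect, Ingress is a layer-7 load balancer and is Kubernetes' abstraction of a reverse proxy. It works much like Nginx: you define a set of mapping rules in Ingress objects, and the Ingress Controller watches these rules, converts them into Nginx reverse-proxy configuration, and then serves external traffic. There are two core concepts:

- ingress: a Kubernetes object that defines the rules for how requests are forwarded to Services.

- ingress controller: the program that actually implements the reverse proxying and load balancing. It parses the rules defined by Ingress objects and forwards requests accordingly. There are many implementations, such as Nginx, Contour, and HAProxy.

How Ingress works (using Nginx as an example):

- The user writes Ingress rules that specify which domain name maps to which Service in the Kubernetes cluster.

- The Ingress controller dynamically detects changes to the Ingress rules and generates the corresponding Nginx reverse-proxy configuration.

- The Ingress controller writes the generated Nginx configuration into a running Nginx service and reloads it dynamically.

- In the end, the component that really does the work is an Nginx instance configured with the user-defined forwarding rules.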
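The following minimal sketch of an Ingress rule illustrates the first step, mapping a host name to a Service with the networking.k8s.io/v1 API. The host nginx.example.com, the Ingress name ingress-http, and the backend Service nginx-service are hypothetical placeholders. `ingressClassName: nginx` assumes the IngressClass named nginx that the ingress-nginx manifest in 6.5.1 creates.

```yaml
# Minimal Ingress sketch: requests for nginx.example.com are proxied to nginx-service:80.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-http          # hypothetical name
  namespace: dev
spec:
  ingressClassName: nginx     # handled by the ingress-nginx controller
  rules:
  - host: nginx.example.com   # hypothetical domain name
    http:
      paths:
      - path: /
        pathType: Prefix      # match every path under /
        backend:
          service:
            name: nginx-service   # hypothetical Service in namespace dev
            port:
              number: 80
```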

6.5 Using Ingress

6.5.1 Environment Preparation

Set up the Ingress environment:

```
[root@k8s-master ~]# mkdir ingress-controller
# Get ingress-nginx (this example uses version 1.3.1)
# Change the image repositories in deploy.yaml:
# replace quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.30.0
# with dyrnq/ingress-nginx-controller:v1.3.1
```

Original article: https://blog.csdn.net/mushuangpanny/article/details/126964281

```
[root@k8s-master ingress-controller]# vi deploy.yaml
[root@k8s-master ingress-controller]# cat deploy.yaml
apiVersion: v1
kind: Namespace
metadata:
  labels:
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
  name: ingress-nginx
---
apiVersion: v1
automountServiceAccountToken: true
kind: ServiceAccount
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx
  namespace: ingress-nginx
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx-admission
  namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx
  namespace: ingress-nginx
rules:
- apiGroups:
  - ""
  resources:
  - namespaces
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - configmaps
  - pods
  - secrets
  - endpoints
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - services
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - networking.k8s.io
  resources:
  - ingresses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - networking.k8s.io
  resources:
  - ingresses/status
  verbs:
  - update
- apiGroups:
  - networking.k8s.io
  resources:
  - ingressclasses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resourceNames:
  - ingress-controller-leader
  resources:
  - configmaps
  verbs:
  - get
  - update
- apiGroups:
  - ""
  resources:
  - configmaps
  verbs:
  - create
- apiGroups:
  - coordination.k8s.io
  resourceNames:
  - ingress-controller-leader
  resources:
  - leases
  verbs:
  - get
  - update
- apiGroups:
  - coordination.k8s.io
  resources:
  - leases
  verbs:
  - create
- apiGroups:
  - ""
  resources:
  - events
  verbs:
  - create
  - patch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx-admission
  namespace: ingress-nginx
rules:
- apiGroups:
  - ""
  resources:
  - secrets
  verbs:
  - get
  - create
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx
rules:
- apiGroups:
  - ""
  resources:
  - configmaps
  - endpoints
  - nodes
  - pods
  - secrets
  - namespaces
  verbs:
  - list
  - watch
- apiGroups:
  - coordination.k8s.io
  resources:
  - leases
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - services
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - networking.k8s.io
  resources:
  - ingresses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - events
  verbs:
  - create
  - patch
- apiGroups:
  - networking.k8s.io
  resources:
  - ingresses/status
  verbs:
  - update
- apiGroups:
  - networking.k8s.io
  resources:
  - ingressclasses
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx-admission
rules:
- apiGroups:
  - admissionregistration.k8s.io
  resources:
  - validatingwebhookconfigurations
  verbs:
  - get
  - update
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx
  namespace: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx
subjects:
- kind: ServiceAccount
  name: ingress-nginx
  namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx-admission
  namespace: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx-admission
subjects:
- kind: ServiceAccount
  name: ingress-nginx-admission
  namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-nginx
subjects:
- kind: ServiceAccount
  name: ingress-nginx
  namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx-admission
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-nginx-admission
subjects:
- kind: ServiceAccount
  name: ingress-nginx-admission
  namespace: ingress-nginx
---
apiVersion: v1
data:
  allow-snippet-annotations: "true"
kind: ConfigMap
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx-controller
  namespace: ingress-nginx
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  ipFamilies:
  - IPv4
  ipFamilyPolicy: SingleStack
  ports:
  - appProtocol: http
    name: http
    port: 80
    protocol: TCP
    targetPort: http
  - appProtocol: https
    name: https
    port: 443
    protocol: TCP
    targetPort: https
  selector:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
  type: NodePort
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx-controller-admission
  namespace: ingress-nginx
spec:
  ports:
  - appProtocol: https
    name: https-webhook
    port: 443
    targetPort: webhook
  selector:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
  type: ClusterIP
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  minReadySeconds: 0
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app.kubernetes.io/component: controller
      app.kubernetes.io/instance: ingress-nginx
      app.kubernetes.io/name: ingress-nginx
  template:
    metadata:
      labels:
        app.kubernetes.io/component: controller
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
    spec:
      hostNetwork: true
      containers:
      - args:
        - /nginx-ingress-controller
        - --election-id=ingress-controller-leader
        - --controller-class=k8s.io/ingress-nginx
        - --ingress-class=nginx
        - --configmap=$(POD_NAMESPACE)/ingress-nginx-controller
        - --validating-webhook=:8443
        - --validating-webhook-certificate=/usr/local/certificates/cert
        - --validating-webhook-key=/usr/local/certificates/key
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: LD_PRELOAD
          value: /usr/local/lib/libmimalloc.so
        image: dyrnq/ingress-nginx-controller:v1.3.1
        imagePullPolicy: IfNotPresent
        lifecycle:
          preStop:
            exec:
              command:
              - /wait-shutdown
        livenessProbe:
          failureThreshold: 5
          httpGet:
            path: /healthz
            port: 10254
            scheme: HTTP
          initialDelaySeconds: 10
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 1
        name: controller
        ports:
        - containerPort: 80
          name: http
          protocol: TCP
        - containerPort: 443
          name: https
          protocol: TCP
        - containerPort: 8443
          name: webhook
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /healthz
            port: 10254
            scheme: HTTP
          initialDelaySeconds: 10
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 1
        resources:
          requests:
            cpu: 100m
            memory: 90Mi
        securityContext:
          allowPrivilegeEscalation: true
          capabilities:
            add:
            - NET_BIND_SERVICE
            drop:
            - ALL
          runAsUser: 101
        volumeMounts:
        - mountPath: /usr/local/certificates/
          name: webhook-cert
          readOnly: true
      dnsPolicy: ClusterFirst
      nodeSelector:
        kubernetes.io/os: linux
      serviceAccountName: ingress-nginx
      terminationGracePeriodSeconds: 300
      volumes:
      - name: webhook-cert
        secret:
          secretName: ingress-nginx-admission
---
apiVersion: batch/v1
kind: Job
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx-admission-create
  namespace: ingress-nginx
spec:
  template:
    metadata:
      labels:
        app.kubernetes.io/component: admission-webhook
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.3.1
      name: ingress-nginx-admission-create
    spec:
      containers:
      - args:
        - create
        - --host=ingress-nginx-controller-admission,ingress-nginx-controller-admission.$(POD_NAMESPACE).svc
        - --namespace=$(POD_NAMESPACE)
        - --secret-name=ingress-nginx-admission
        env:
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        image: dyrnq/kube-webhook-certgen:v1.3.0
        imagePullPolicy: IfNotPresent
        name: create
        securityContext:
          allowPrivilegeEscalation: false
      nodeSelector:
        kubernetes.io/os: linux
      restartPolicy: OnFailure
      securityContext:
        fsGroup: 2000
        runAsNonRoot: true
        runAsUser: 2000
      serviceAccountName: ingress-nginx-admission
---
apiVersion: batch/v1
kind: Job
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx-admission-patch
  namespace: ingress-nginx
spec:
  template:
    metadata:
      labels:
        app.kubernetes.io/component: admission-webhook
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.3.1
      name: ingress-nginx-admission-patch
    spec:
      containers:
      - args:
        - patch
        - --webhook-name=ingress-nginx-admission
        - --namespace=$(POD_NAMESPACE)
        - --patch-mutating=false
        - --secret-name=ingress-nginx-admission
        - --patch-failure-policy=Fail
        env:
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        image: dyrnq/kube-webhook-certgen:v1.3.0
        imagePullPolicy: IfNotPresent
        name: patch
        securityContext:
          allowPrivilegeEscalation: false
      nodeSelector:
        kubernetes.io/os: linux
      restartPolicy: OnFailure
      securityContext:
        fsGroup: 2000
        runAsNonRoot: true
        runAsUser: 2000
      serviceAccountName: ingress-nginx-admission
---
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: nginx
spec:
  controller: k8s.io/ingress-nginx
---
apiVersion: admissionregistration.k8s.io/v1
kind: ValidatingWebhookConfiguration
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx-admission
webhooks:
- admissionReviewVersions:
  - v1
  clientConfig:
    service:
      name: ingress-nginx-controller-admission
      namespace: ingress-nginx
      path: /networking/v1/ingresses
  failurePolicy: Fail
  matchPolicy: Equivalent
  name: validate.nginx.ingress.kubernetes.io
  rules:
  - apiGroups:
    - networking.k8s.io
    apiVersions:
    - v1
    operations:
    - CREATE
    - UPDATE
    resources:
    - ingresses
  sideEffects: None
```

[root@k8s-master ingress-controller]# grep image deploy.yaml
        image: dyrnq/ingress-nginx-controller:v1.3.1
        imagePullPolicy: IfNotPresent
        image: dyrnq/kube-webhook-certgen:v1.3.0
        imagePullPolicy: IfNotPresent
        image: dyrnq/kube-webhook-certgen:v1.3.0
        imagePullPolicy: IfNotPresent

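Enable host networking: set hostNetwork: true in the Pod template of the ingress-nginx-controller Deployment, at the position shown below (around lines 400-415 of deploy.yaml). With host networking, the controller Pod shares the node's network namespace, so NGINX listens directly on ports 80 and 443 of the node it runs on.

spec:
  minReadySeconds: 0
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app.kubernetes.io/component: controller
      app.kubernetes.io/instance: ingress-nginx
      app.kubernetes.io/name: ingress-nginx
  template:
    metadata:
      labels:
        app.kubernetes.io/component: controller
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
    spec:
      hostNetwork: true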

[root@k8s-master ingress-controller]# kubectl apply -f deploy.yaml
namespace/ingress-nginx created
serviceaccount/ingress-nginx created
serviceaccount/ingress-nginx-admission created
role.rbac.authorization.k8s.io/ingress-nginx created
role.rbac.authorization.k8s.io/ingress-nginx-admission created
clusterrole.rbac.authorization.k8s.io/ingress-nginx created
clusterrole.rbac.authorization.k8s.io/ingress-nginx-admission created
rolebinding.rbac.authorization.k8s.io/ingress-nginx created
rolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created
clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx created
clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created
configmap/ingress-nginx-controller created
service/ingress-nginx-controller created
service/ingress-nginx-controller-admission created
deployment.apps/ingress-nginx-controller created
job.batch/ingress-nginx-admission-create created
job.batch/ingress-nginx-admission-patch created
ingressclass.networking.k8s.io/nginx created
validatingwebhookconfiguration.admissionregistration.k8s.io/ingress-nginx-admission created

# Check the ingress-nginx namespace
[root@k8s-master ingress-controller]# kubectl get ns
NAME              STATUS   AGE
default           Active   138d
dev               Active   20d
ingress-nginx     Active   86s
kube-flannel      Active   19d
kube-node-lease   Active   138d
kube-public       Active   138d
kube-system       Active   138d
[root@k8s-master ingress-controller]# kubectl get pods -n ingress-nginx
NAME                                        READY   STATUS      RESTARTS   AGE
ingress-nginx-admission-create-wlzfp        0/1     Completed   0          95s
ingress-nginx-admission-patch-7t9zq         0/1     Completed   1          95s
ingress-nginx-controller-687f6cb7c5-l6cwl   1/1     Running     0          95s

# Check the Services
[root@k8s-master ingress-controller]# kubectl get svc -n ingress-nginx
NAME                                 TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)                      AGE
ingress-nginx-controller             NodePort    10.102.7.12    <none>        80:31310/TCP,443:31868/TCP   103s
ingress-nginx-controller-admission   ClusterIP   10.100.63.61   <none>        443/TCP                      103s

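- About the deployment variants: cloud is for clusters that run on a cloud provider, such as Alibaba Cloud or Tencent Cloud hosts. These providers offer their own load balancers, which can be used to load-balance traffic to the controller.

- A cluster you build yourself, like this one, runs on bare metal.

- baremetal is the variant for bare-metal clusters. It exposes the controller through a NodePort Service, as the output above shows.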

准备service和pod

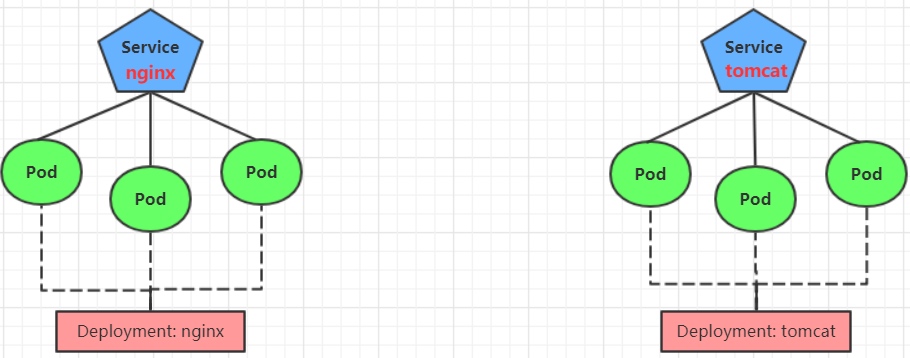

为了后面的实验比较方便,创建如下图所示的模型

创建tomcat-nginx.yaml

[root@k8s-master ingress-controller]# vi tomcat-nginx.yaml
[root@k8s-master ingress-controller]# cat tomcat-nginx.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  namespace: dev
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx-pod
  template:
    metadata:
      labels:
        app: nginx-pod
    spec:
      containers:
      - name: nginx
        image: nginx:1.17.1
        ports:
        - containerPort: 80
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: tomcat-deployment
  namespace: dev
spec:
  replicas: 3
  selector:
    matchLabels:
      app: tomcat-pod
  template:
    metadata:
      labels:
        app: tomcat-pod
    spec:
      containers:
      - name: tomcat
        image: tomcat:8.5-jre10-slim
        ports:
        - containerPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
  namespace: dev
spec:
  selector:
    app: nginx-pod
  clusterIP: None
  type: ClusterIP
  ports:
  - port: 80
    targetPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: tomcat-service
  namespace: dev
spec:
  selector:
    app: tomcat-pod
  clusterIP: None
  type: ClusterIP
  ports:
  - port: 8080
    targetPort: 8080

[root@k8s-master ingress-controller]# kubectl apply -f tomcat-nginx.yaml
deployment.apps/nginx-deployment created
deployment.apps/tomcat-deployment created
service/nginx-service created
service/tomcat-service created

# Check the Pods
[root@k8s-master ingress-controller]# kubectl get pods -n dev
NAME                                READY   STATUS    RESTARTS   AGE
nginx-deployment-6fdb77cb4-dmsk4    1/1     Running   0          3m32s
nginx-deployment-6fdb77cb4-hdbh2    1/1     Running   0          3m32s
nginx-deployment-6fdb77cb4-xbs5h    1/1     Running   0          3m32s
tomcat-deployment-96986c7bd-4lg4r   1/1     Running   0          3m32s
tomcat-deployment-96986c7bd-6n8rn   1/1     Running   0          3m32s
tomcat-deployment-96986c7bd-z8h89   1/1     Running   0          3m32s
[root@k8s-master ingress-controller]# kubectl get pods -n dev -o wide
NAME                                READY   STATUS    RESTARTS   AGE     IP            NODE        NOMINATED NODE   READINESS GATES
nginx-deployment-6fdb77cb4-dmsk4    1/1     Running   0          3m34s   10.244.1.46   k8s-node1   <none>           <none>
nginx-deployment-6fdb77cb4-hdbh2    1/1     Running   0          3m34s   10.244.2.56   k8s-node2   <none>           <none>
nginx-deployment-6fdb77cb4-xbs5h    1/1     Running   0          3m34s   10.244.2.55   k8s-node2   <none>           <none>
tomcat-deployment-96986c7bd-4lg4r   1/1     Running   0          3m34s   10.244.1.47   k8s-node1   <none>           <none>
tomcat-deployment-96986c7bd-6n8rn   1/1     Running   0          3m34s   10.244.2.57   k8s-node2   <none>           <none>
tomcat-deployment-96986c7bd-z8h89   1/1     Running   0          3m34s   10.244.1.48   k8s-node1   <none>

# Start a CentOS client Pod in dev so the Services can be tested from inside the cluster
[root@k8s-master ingress-controller]# kubectl run centos2 --image centos -n dev -- /bin/sleep 9000
pod/centos2 created
[root@k8s-master ingress-controller]# kubectl get pods -n dev
NAME                                READY   STATUS    RESTARTS   AGE
centos2                             1/1     Running   0          2m5s
nginx-deployment-6fdb77cb4-dmsk4    1/1     Running   0          23m
nginx-deployment-6fdb77cb4-hdbh2    1/1     Running   0          23m
nginx-deployment-6fdb77cb4-xbs5h    1/1     Running   0          23m
tomcat-deployment-96986c7bd-4lg4r   1/1     Running   0          23m
tomcat-deployment-96986c7bd-6n8rn   1/1     Running   0          23m
tomcat-deployment-96986c7bd-z8h89   1/1     Running   0          23m

# Check the Services used to reach the Pods
[root@k8s-master ingress-controller]# kubectl get svc -n dev
NAME             TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)    AGE
nginx-service    ClusterIP   None         <none>        80/TCP     23m
tomcat-service   ClusterIP   None         <none>        8080/TCP   23m
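Both Services are headless (`clusterIP: None`), which is why CLUSTER-IP shows None. The DNS name `nginx-service` resolves directly to the IPs of the Pods that the selector matches, so `curl nginx-service` reaches one of those Pods. The Python sketch below models only the selector-to-endpoints step. It rests on a few assumptions: every Pod is treated as ready, the Pod data is copied from the `-o wide` output above, and `endpoints_for` is a hypothetical helper, not a Kubernetes API.

```python
# Simplified model of how a Service selector chooses its endpoints.
# Pod names, labels and IPs are copied from the `kubectl get pods -n dev -o wide`
# output; endpoints_for() is a hypothetical helper, not a Kubernetes API.
from typing import Dict, List, Tuple

Pod = Tuple[Dict[str, str], str]  # (labels, Pod IP)

PODS: Dict[str, Pod] = {
    "nginx-deployment-6fdb77cb4-dmsk4": ({"app": "nginx-pod"}, "10.244.1.46"),
    "nginx-deployment-6fdb77cb4-hdbh2": ({"app": "nginx-pod"}, "10.244.2.56"),
    "nginx-deployment-6fdb77cb4-xbs5h": ({"app": "nginx-pod"}, "10.244.2.55"),
    "tomcat-deployment-96986c7bd-4lg4r": ({"app": "tomcat-pod"}, "10.244.1.47"),
    "tomcat-deployment-96986c7bd-6n8rn": ({"app": "tomcat-pod"}, "10.244.2.57"),
    "tomcat-deployment-96986c7bd-z8h89": ({"app": "tomcat-pod"}, "10.244.1.48"),
}


def endpoints_for(selector: Dict[str, str], target_port: int) -> List[str]:
    """Return sorted "ip:port" strings for every Pod whose labels include all selector pairs."""
    matches = [
        f"{ip}:{target_port}"
        for labels, ip in PODS.values()
        if all(labels.get(key) == value for key, value in selector.items())
    ]
    return sorted(matches)


if __name__ == "__main__":
    # For a headless Service, DNS answers with these Pod IPs instead of a ClusterIP.
    print(endpoints_for({"app": "nginx-pod"}, 80))
    print(endpoints_for({"app": "tomcat-pod"}, 8080))
```

A real Service also drops Pods that fail their readiness checks. The sketch leaves that out for brevity.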

# Open a shell in the centos2 Pod and request the nginx Service by name
[root@k8s-master ingress-controller]# kubectl exec -itn dev centos2 -- /bin/bash
[root@centos2 /]# curl nginx-service
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
<style>
    body {
        width: 35em;
        margin: 0 auto;
        font-family: Tahoma, Verdana, Arial, sans-serif;
    }
</style>
</head>
<body>
<h1>Welcome to nginx!</h1>
<p>If you see this page, the nginx web server is successfully installed and
working. Further configuration is required.</p>
<p>For online documentation and support please refer to
<a href="http://nginx.org/">nginx.org</a>.<br/>
Commercial support is available at
<a href="http://nginx.com/">nginx.com</a>.</p>
<p><em>Thank you for using nginx.</em></p>
</body>
</html>

# The Services work and the backend Pods are reachable; next, set up forwarding through an Ingress

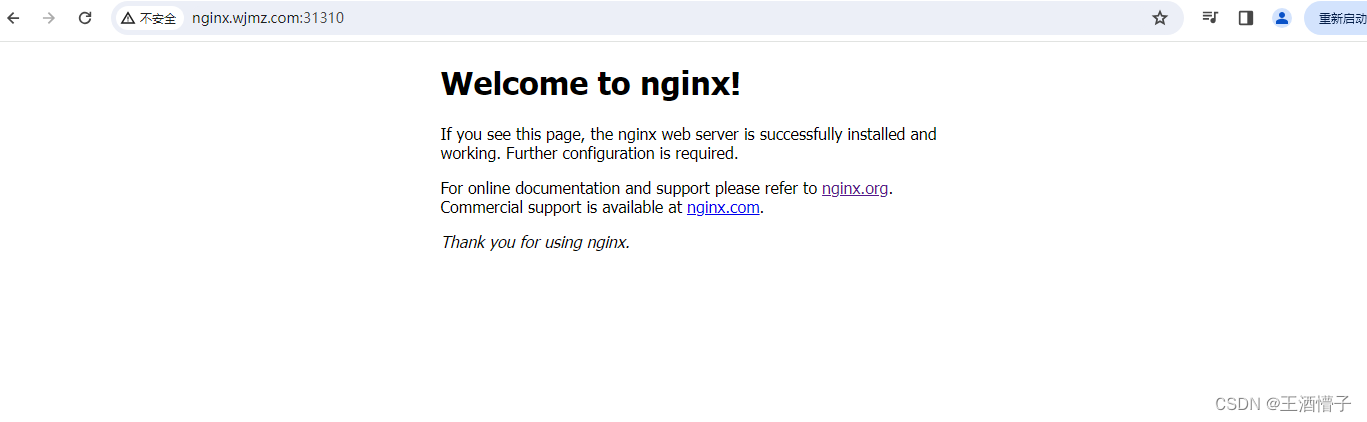

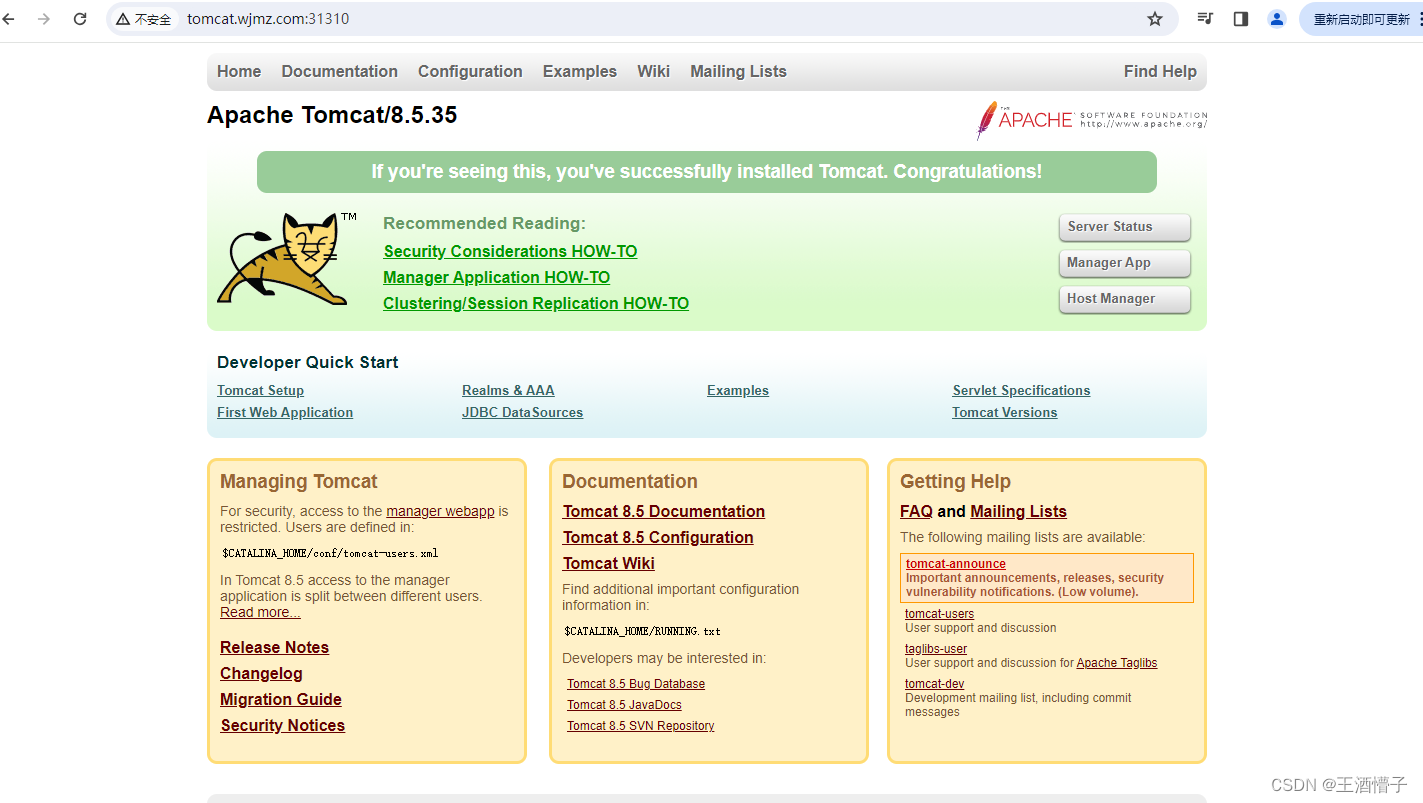

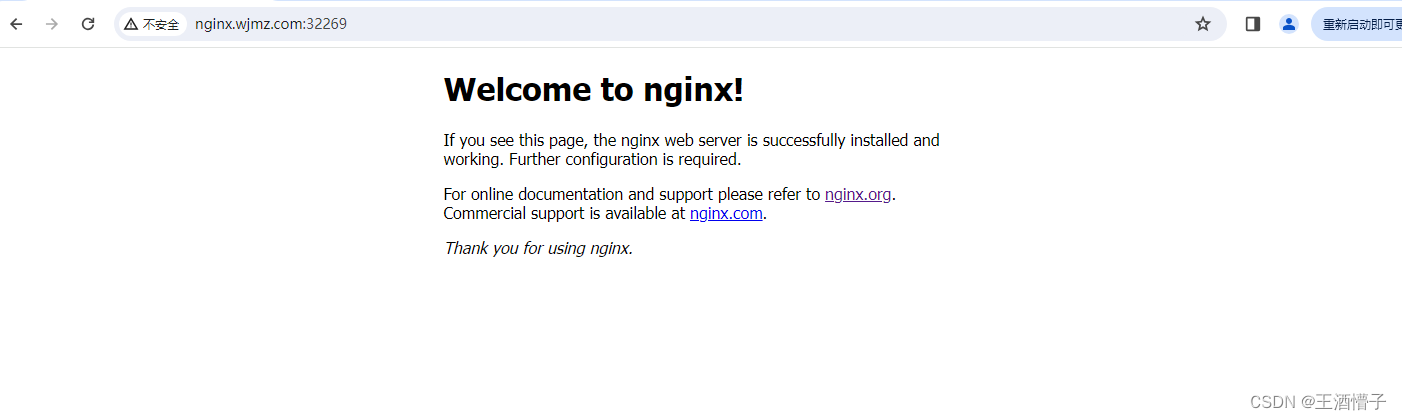

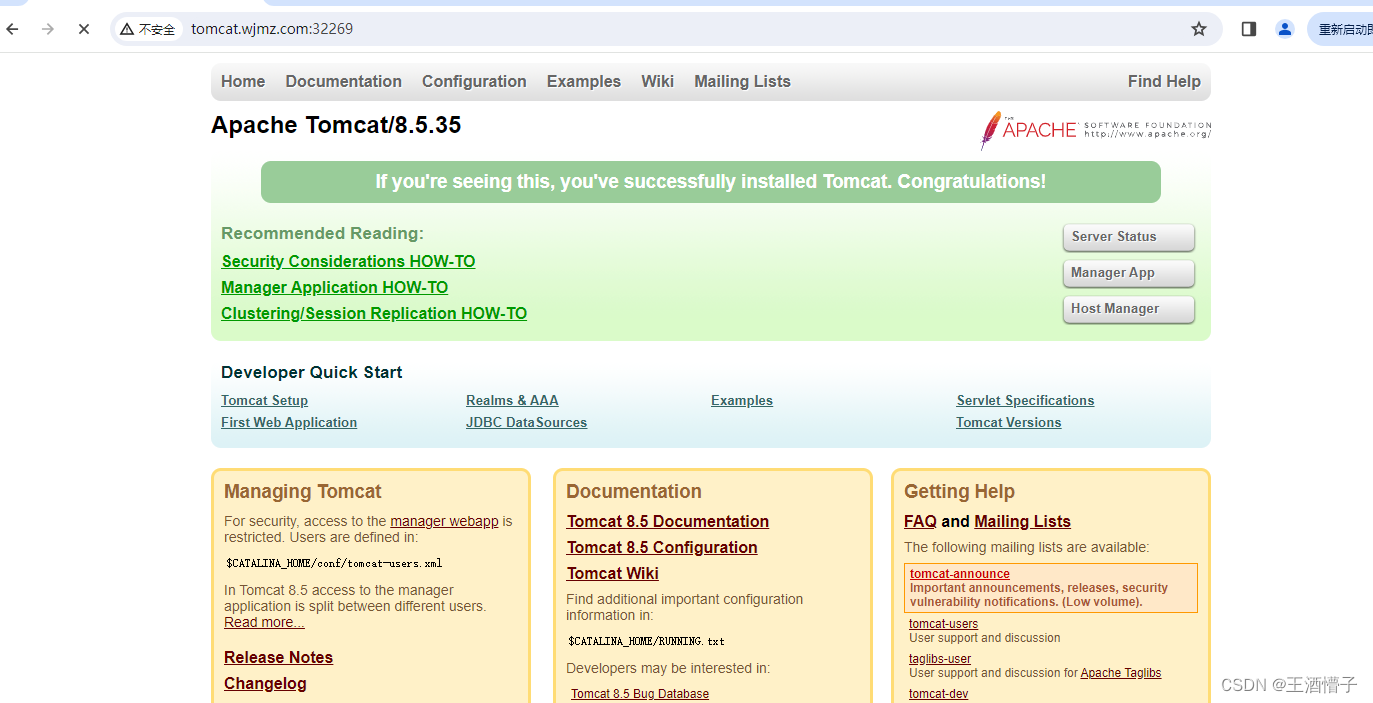

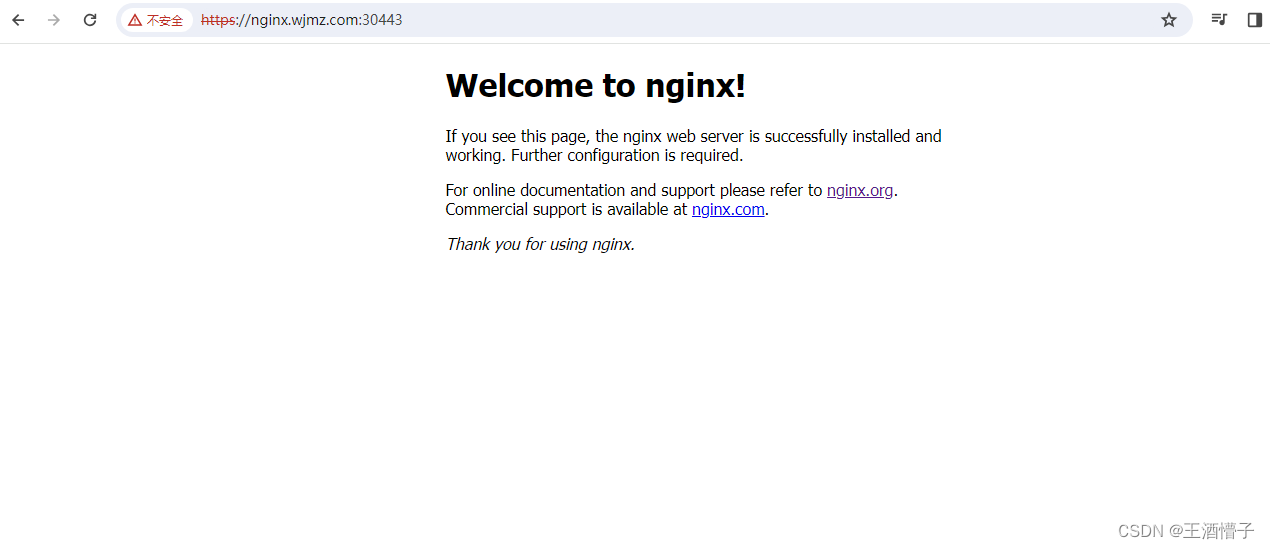

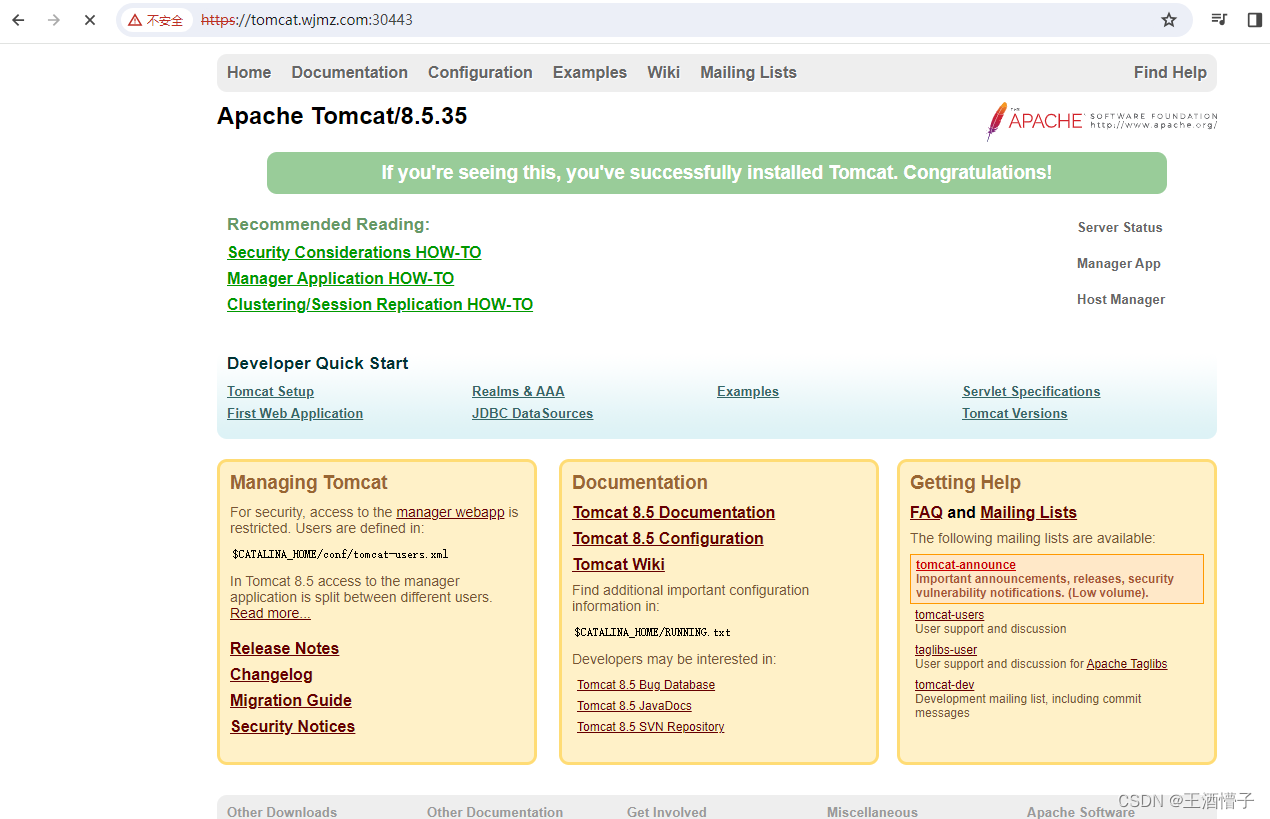

6.5.1.1 HTTP Proxy: Setting Up Forwarding

- Create ingress-http.yaml

[root@k8s-master ingress-controller]# cat ingress-http.yaml

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: ingress-http

namespace: dev

spec:

ingressClassName: nginx

rules:

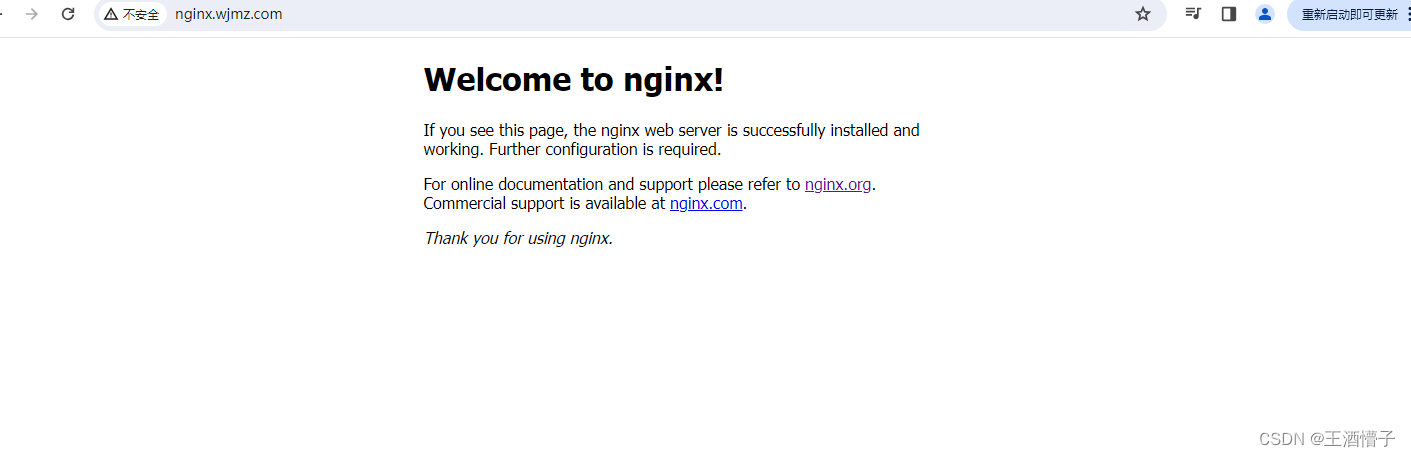

- host: nginx.wjmz.com

http:

paths:

- path: /

pathType: Prefix

backend:

service:

name: nginx-service

port:

number: 80

- host: tomcat.wjmz.com

http:

paths:

- path: /

pathType: Prefix

backend:

service:

name: tomcat-service

port:

number: 8080

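# ingressClassName selects, by name, the IngressClass (and therefore the Ingress controller) that handles this Ingress. It matters mainly when several controllers are installed.

The IngressClass created when the NGINX Ingress Controller was deployed is named nginx.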
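The two rules above route first by the Host header and then by path. The Python sketch below roughly models the lookup the controller performs for ingress-http.yaml. Assumptions: only `pathType: Prefix` is modeled, TLS and annotations are ignored, and `route` and `prefix_matches` are hypothetical helpers, not ingress-nginx code.

```python
# Simplified model of host + pathType: Prefix routing for ingress-http.yaml.
# route() and prefix_matches() are hypothetical helpers, not ingress-nginx code;
# TLS, default backends and annotations are deliberately left out.
from typing import Dict, List, Optional, Tuple

Backend = Tuple[str, int]  # (Service name, Service port)

# host -> [(Prefix path, backend)], copied from the two rules in ingress-http.yaml
RULES: Dict[str, List[Tuple[str, Backend]]] = {
    "nginx.wjmz.com": [("/", ("nginx-service", 80))],
    "tomcat.wjmz.com": [("/", ("tomcat-service", 8080))],
}


def _elements(path: str) -> List[str]:
    """Split a URL path into its non-empty '/'-separated elements."""
    return [part for part in path.split("/") if part]


def prefix_matches(rule_path: str, request_path: str) -> bool:
    """Prefix matching works per path element: /foo matches /foo/bar but not /foobar."""
    rule = _elements(rule_path)
    return _elements(request_path)[: len(rule)] == rule


def route(host: str, path: str) -> Optional[Backend]:
    """Return the backend for a request, or None if no rule matches."""
    candidates = [
        (len(_elements(rule_path)), backend)
        for rule_path, backend in RULES.get(host, [])
        if prefix_matches(rule_path, path)
    ]
    if not candidates:
        return None  # the controller would answer from its default backend
    # When several Prefix paths match, the longest one wins.
    return max(candidates, key=lambda item: item[0])[1]


if __name__ == "__main__":
    print(route("nginx.wjmz.com", "/"))
    print(route("tomcat.wjmz.com", "/docs/"))
    print(route("other.wjmz.com", "/"))
```

Prefix matching compares whole path elements. That is why the rule path / matches every request path for its host.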

[root@k8s-master ingress-controller]# kubectl get -f ingress-http.yaml
NAME           CLASS   HOSTS                            ADDRESS          PORTS   AGE
ingress-http   nginx   nginx.wjmz.com,tomcat.wjmz.com   192.168.207.11   80      95s
[root@k8s-master ingress-controller]# kubectl get svc -n ingress-nginx
NAME                                 TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)                      AGE
ingress-nginx-controller             NodePort    10.102.7.12    <none>        80:31310/TCP,443:31868/TCP   92m
ingress-nginx-controller-admission   ClusterIP   10.100.63.61   <none>        443/TCP                      92m

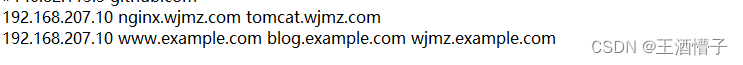

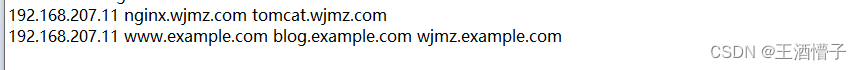

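- On the client machine, edit the hosts file so that nginx.wjmz.com and tomcat.wjmz.com point to the node address shown above (192.168.207.11). The sites can then be opened without a port number.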
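A hosts entry maps the Ingress host names to a node that runs the controller. Because the controller uses `hostNetwork: true`, NGINX listens on port 80 of that node, and the URL needs no NodePort. The small Python helper below (hypothetical, for illustration only) turns the ADDRESS and HOSTS columns of `kubectl get -f ingress-http.yaml` into a hosts-file line. On Linux the file is /etc/hosts; on Windows it is C:\Windows\System32\drivers\etc\hosts.

```python
from typing import List


def hosts_line(address: str, hosts: str) -> str:
    """Build one hosts-file line: an IP followed by the host names it serves.

    `address` and `hosts` are the ADDRESS and HOSTS columns of `kubectl get ingress`
    (hypothetical helper); if ADDRESS lists several node IPs, the first one is used.
    """
    ip = address.split(",")[0].strip()
    names: List[str] = [name.strip() for name in hosts.split(",") if name.strip()]
    return " ".join([ip] + names)


if __name__ == "__main__":
    print(hosts_line("192.168.207.11", "nginx.wjmz.com,tomcat.wjmz.com"))
    # 192.168.207.11 nginx.wjmz.com tomcat.wjmz.com
```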

6.5.1.2 Deploying the Controller on Multiple Nodes

- Delete the resources created earlier

[root@k8s-master ingress-controller]# kubectl delete -f tomcat-nginx.yaml
deployment.apps "nginx-deployment" deleted
deployment.apps "tomcat-deployment" deleted
service "nginx-service" deleted
service "tomcat-service" deleted
[root@k8s-master ingress-controller]# kubectl delete -f deploy.yaml
namespace "ingress-nginx" deleted
serviceaccount "ingress-nginx" deleted
serviceaccount "ingress-nginx-admission" deleted
role.rbac.authorization.k8s.io "ingress-nginx" deleted
role.rbac.authorization.k8s.io "ingress-nginx-admission" deleted
clusterrole.rbac.authorization.k8s.io "ingress-nginx" deleted
clusterrole.rbac.authorization.k8s.io "ingress-nginx-admission" deleted
rolebinding.rbac.authorization.k8s.io "ingress-nginx" deleted
rolebinding.rbac.authorization.k8s.io "ingress-nginx-admission" deleted
clusterrolebinding.rbac.authorization.k8s.io "ingress-nginx" deleted
clusterrolebinding.rbac.authorization.k8s.io "ingress-nginx-admission" deleted
configmap "ingress-nginx-controller" deleted
service "ingress-nginx-controller" deleted
service "ingress-nginx-controller-admission" deleted
deployment.apps "ingress-nginx-controller" deleted
job.batch "ingress-nginx-admission-create" deleted
job.batch "ingress-nginx-admission-patch" deleted
ingressclass.networking.k8s.io "nginx" deleted
validatingwebhookconfiguration.admissionregistration.k8s.io "ingress-nginx-admission" deleted
[root@k8s-master ingress-controller]# kubectl get pods -n ingress-nginx
No resources found in ingress-nginx namespace.
[root@k8s-master ingress-controller]# kubectl get pods -n dev
NAME      READY   STATUS    RESTARTS   AGE
centos2   1/1     Running   0          101m

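[root@k8s-master ingress-controller]# cp deploy.yaml deploy.yaml_bak
# Run one controller on every worker node (as many controllers as nodes). The edited file sets replicas: 2, hostNetwork: true, and an extra nodeSelector label on the controller Deployment
[root@k8s-master ingress-controller]# vi deploy.yaml
[root@k8s-master ingress-controller]# cat deploy.yaml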

apiVersion: v1
kind: Namespace
metadata:
  labels:
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
  name: ingress-nginx
---
apiVersion: v1
automountServiceAccountToken: true
kind: ServiceAccount
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx
  namespace: ingress-nginx
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx-admission
  namespace: ingress-nginx
---

apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx
  namespace: ingress-nginx
rules:
- apiGroups:
  - ""
  resources:
  - namespaces
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - configmaps
  - pods
  - secrets
  - endpoints
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - services
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - networking.k8s.io
  resources:
  - ingresses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - networking.k8s.io
  resources:
  - ingresses/status
  verbs:
  - update
- apiGroups:
  - networking.k8s.io
  resources:
  - ingressclasses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resourceNames:
  - ingress-controller-leader
  resources:
  - configmaps
  verbs:
  - get
  - update
- apiGroups:
  - ""
  resources:
  - configmaps
  verbs:
  - create
- apiGroups:
  - coordination.k8s.io
  resourceNames:
  - ingress-controller-leader
  resources:
  - leases
  verbs:
  - get
  - update
- apiGroups:
  - coordination.k8s.io
  resources:
  - leases
  verbs:
  - create
- apiGroups:
  - ""
  resources:
  - events
  verbs:
  - create
  - patch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx-admission
  namespace: ingress-nginx
rules:
- apiGroups:
  - ""
  resources:
  - secrets
  verbs:
  - get
  - create
---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx
rules:
- apiGroups:
  - ""
  resources:
  - configmaps
  - endpoints
  - nodes
  - pods
  - secrets
  - namespaces
  verbs:
  - list
  - watch
- apiGroups:
  - coordination.k8s.io
  resources:
  - leases
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - services
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - networking.k8s.io
  resources:
  - ingresses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - events
  verbs:
  - create
  - patch
- apiGroups:
  - networking.k8s.io
  resources:
  - ingresses/status
  verbs:
  - update
- apiGroups:
  - networking.k8s.io
  resources:
  - ingressclasses
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx-admission
rules:
- apiGroups:
  - admissionregistration.k8s.io
  resources:
  - validatingwebhookconfigurations
  verbs:
  - get
  - update
---

apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx
  namespace: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx
subjects:
- kind: ServiceAccount
  name: ingress-nginx
  namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx-admission
  namespace: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx-admission
subjects:
- kind: ServiceAccount
  name: ingress-nginx-admission
  namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-nginx
subjects:
- kind: ServiceAccount
  name: ingress-nginx
  namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx-admission
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-nginx-admission
subjects:
- kind: ServiceAccount
  name: ingress-nginx-admission
  namespace: ingress-nginx
---

apiVersion: v1
data:
  allow-snippet-annotations: "true"
kind: ConfigMap
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx-controller
  namespace: ingress-nginx
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  ipFamilies:
  - IPv4
  ipFamilyPolicy: SingleStack
  ports:
  - appProtocol: http
    name: http
    port: 80
    protocol: TCP
    targetPort: http
  - appProtocol: https
    name: https
    port: 443
    protocol: TCP
    targetPort: https
  selector:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
  type: NodePort
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx-controller-admission
  namespace: ingress-nginx
spec:
  ports:
  - appProtocol: https
    name: https-webhook
    port: 443
    targetPort: webhook
  selector:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
  type: ClusterIP
---

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  replicas: 2
  minReadySeconds: 0
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app.kubernetes.io/component: controller
      app.kubernetes.io/instance: ingress-nginx
      app.kubernetes.io/name: ingress-nginx
  template:
    metadata:
      labels:
        app.kubernetes.io/component: controller
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
    spec:
      hostNetwork: true
      containers:
      - args:
        - /nginx-ingress-controller
        - --election-id=ingress-controller-leader
        - --controller-class=k8s.io/ingress-nginx
        - --ingress-class=nginx
        - --configmap=$(POD_NAMESPACE)/ingress-nginx-controller
        - --validating-webhook=:8443
        - --validating-webhook-certificate=/usr/local/certificates/cert
        - --validating-webhook-key=/usr/local/certificates/key
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: LD_PRELOAD
          value: /usr/local/lib/libmimalloc.so
        image: dyrnq/ingress-nginx-controller:v1.3.1
        imagePullPolicy: IfNotPresent
        lifecycle:
          preStop:
            exec:
              command:
              - /wait-shutdown
        livenessProbe:
          failureThreshold: 5
          httpGet:
            path: /healthz
            port: 10254
            scheme: HTTP
          initialDelaySeconds: 10
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 1
        name: controller
        ports:
        - containerPort: 80
          name: http
          protocol: TCP
        - containerPort: 443
          name: https
          protocol: TCP
        - containerPort: 8443
          name: webhook
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /healthz
            port: 10254
            scheme: HTTP
          initialDelaySeconds: 10
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 1
        resources:
          requests:
            cpu: 100m
            memory: 90Mi
        securityContext:
          allowPrivilegeEscalation: true
          capabilities:
            add:
            - NET_BIND_SERVICE
            drop:
            - ALL
          runAsUser: 101
        volumeMounts:
        - mountPath: /usr/local/certificates/
          name: webhook-cert
          readOnly: true
      dnsPolicy: ClusterFirst
      nodeSelector:
        kubernetes.io/os: linux
        app: ingress-controller
      serviceAccountName: ingress-nginx
      terminationGracePeriodSeconds: 300
      volumes:
      - name: webhook-cert
        secret:
          secretName: ingress-nginx-admission
---

apiVersion: batch/v1
kind: Job
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx-admission-create
  namespace: ingress-nginx
spec:
  template:
    metadata:
      labels:
        app.kubernetes.io/component: admission-webhook
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.3.1
      name: ingress-nginx-admission-create
    spec:
      containers:
      - args:
        - create
        - --host=ingress-nginx-controller-admission,ingress-nginx-controller-admission.$(POD_NAMESPACE).svc
        - --namespace=$(POD_NAMESPACE)
        - --secret-name=ingress-nginx-admission
        env:
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        image: dyrnq/kube-webhook-certgen:v1.3.0
        imagePullPolicy: IfNotPresent
        name: create
        securityContext:
          allowPrivilegeEscalation: false
      nodeSelector:
        kubernetes.io/os: linux
      restartPolicy: OnFailure
      securityContext:
        fsGroup: 2000
        runAsNonRoot: true
        runAsUser: 2000
      serviceAccountName: ingress-nginx-admission
---
apiVersion: batch/v1
kind: Job
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx-admission-patch
  namespace: ingress-nginx
spec:
  template:
    metadata:
      labels:
        app.kubernetes.io/component: admission-webhook
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.3.1
      name: ingress-nginx-admission-patch
    spec:
      containers:
      - args:
        - patch
        - --webhook-name=ingress-nginx-admission
        - --namespace=$(POD_NAMESPACE)
        - --patch-mutating=false
        - --secret-name=ingress-nginx-admission
        - --patch-failure-policy=Fail
        env:
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        image: dyrnq/kube-webhook-certgen:v1.3.0
        imagePullPolicy: IfNotPresent
        name: patch
        securityContext:
          allowPrivilegeEscalation: false
      nodeSelector:
        kubernetes.io/os: linux
      restartPolicy: OnFailure
      securityContext:
        fsGroup: 2000
        runAsNonRoot: true
        runAsUser: 2000
      serviceAccountName: ingress-nginx-admission
---
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: nginx
spec:
  controller: k8s.io/ingress-nginx
---
apiVersion: admissionregistration.k8s.io/v1
kind: ValidatingWebhookConfiguration
metadata:
  labels:
    app.kubernetes.io/component: admission-webhook
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.3.1
  name: ingress-nginx-admission
webhooks:
- admissionReviewVersions:
  - v1
  clientConfig:
    service:
      name: ingress-nginx-controller-admission
      namespace: ingress-nginx
      path: /networking/v1/ingresses
  failurePolicy: Fail
  matchPolicy: Equivalent
  name: validate.nginx.ingress.kubernetes.io
  rules:
  - apiGroups:
    - networking.k8s.io
    apiVersions:
    - v1
    operations:
    - CREATE
    - UPDATE
    resources:
    - ingresses
  sideEffects: None

# Make sure there are two controllers: add a node label to the controller's nodeSelector (excerpt from around lines 493-503 of deploy.yaml)
      nodeSelector:
        kubernetes.io/os: linux
        app: ingress-controller   # added
      serviceAccountName: ingress-nginx
      terminationGracePeriodSeconds: 300
      volumes:
      - name: webhook-cert
        secret:
          secretName: ingress-nginx-admission
---
apiVersion: batch/v1

[root@k8s-master ingress-controller]# kubectl apply -f deploy.yaml
namespace/ingress-nginx created
serviceaccount/ingress-nginx created
serviceaccount/ingress-nginx-admission created
role.rbac.authorization.k8s.io/ingress-nginx created
role.rbac.authorization.k8s.io/ingress-nginx-admission created
clusterrole.rbac.authorization.k8s.io/ingress-nginx created
clusterrole.rbac.authorization.k8s.io/ingress-nginx-admission created
rolebinding.rbac.authorization.k8s.io/ingress-nginx created
rolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created
clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx created
clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created
configmap/ingress-nginx-controller created
service/ingress-nginx-controller created
service/ingress-nginx-controller-admission created
deployment.apps/ingress-nginx-controller created
job.batch/ingress-nginx-admission-create created
job.batch/ingress-nginx-admission-patch created
ingressclass.networking.k8s.io/nginx created
validatingwebhookconfiguration.admissionregistration.k8s.io/ingress-nginx-admission created
[root@k8s-master ingress-controller]# kubectl get pods -n ingress-nginx
NAME                                       READY   STATUS      RESTARTS   AGE
ingress-nginx-admission-create-ld9h5       0/1     Completed   0          24s
ingress-nginx-admission-patch-q85pl        0/1     Completed   1          24s
ingress-nginx-controller-67cc8878b-mb4mw   0/1     Pending     0          24s
ingress-nginx-controller-67cc8878b-smfjk   0/1     Pending     0          24s

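# The controller Pods are Pending because no node carries the label the nodeSelector requires (app=ingress-controller). Once the nodes are labeled, the Pods can be scheduled.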
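A few lines of Python can model why the Pods stay Pending, and why labeling one node starts only one of them. This is a simplified sketch of two scheduler checks, not the real kube-scheduler. First, a node fits only if its labels contain every nodeSelector pair. Second, with `hostNetwork: true` the container ports (80, 443, 8443) are claimed on the node itself, so two replicas cannot share a node. Taints and resource requests are ignored. Node names mirror this cluster, and `schedule` and `cluster` are hypothetical helpers.

```python
from typing import Dict, List, Optional, Set

# nodeSelector of the edited controller Deployment
NODE_SELECTOR = {"kubernetes.io/os": "linux", "app": "ingress-controller"}
# With hostNetwork: true, these containerPorts are bound on the node itself.
HOST_PORTS = {80, 443, 8443}


def schedule(replicas: int, nodes: Dict[str, Dict[str, str]]) -> List[Optional[str]]:
    """Place each replica on the first fitting node; None means it stays Pending."""
    used_ports: Dict[str, Set[int]] = {name: set() for name in nodes}
    placements: List[Optional[str]] = []
    for _ in range(replicas):
        target = None
        for name, labels in nodes.items():
            selector_ok = all(labels.get(k) == v for k, v in NODE_SELECTOR.items())
            ports_free = not (used_ports[name] & HOST_PORTS)
            if selector_ok and ports_free:
                target = name
                break
        if target is not None:
            used_ports[target] |= HOST_PORTS
        placements.append(target)
    return placements


def cluster(labeled: Set[str]) -> Dict[str, Dict[str, str]]:
    """Build this cluster's node labels; nodes in `labeled` get app=ingress-controller."""
    nodes: Dict[str, Dict[str, str]] = {}
    for name in ("k8s-master", "k8s-node1", "k8s-node2"):
        labels = {"kubernetes.io/os": "linux", "kubernetes.io/hostname": name}
        if name in labeled:
            labels["app"] = "ingress-controller"
        nodes[name] = labels
    return nodes


if __name__ == "__main__":
    print(schedule(2, cluster(set())))                       # [None, None]
    print(schedule(2, cluster({"k8s-node1"})))               # ['k8s-node1', None]
    print(schedule(2, cluster({"k8s-node1", "k8s-node2"})))  # ['k8s-node1', 'k8s-node2']
```

The three printed lines correspond to the three stages of the session: no label, node1 labeled, and both nodes labeled.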

# Label k8s-node1: one controller Pod is scheduled onto node1 and starts running (READY stays 0/1 until the readiness probe on /healthz succeeds)
[root@k8s-master ingress-controller]# kubectl label node k8s-node1 app=ingress-controller
node/k8s-node1 labeled
[root@k8s-master ingress-controller]# kubectl get pods -n ingress-nginx
NAME                                       READY   STATUS              RESTARTS   AGE
ingress-nginx-admission-create-ld9h5       0/1     Completed           0          51s
ingress-nginx-admission-patch-q85pl        0/1     Completed           1          51s
ingress-nginx-controller-67cc8878b-mb4mw   0/1     ContainerCreating   0          51s
ingress-nginx-controller-67cc8878b-smfjk   0/1     Pending             0          51s
[root@k8s-master ingress-controller]# kubectl get pods -n ingress-nginx
NAME                                       READY   STATUS      RESTARTS   AGE
ingress-nginx-admission-create-ld9h5       0/1     Completed   0          52s
ingress-nginx-admission-patch-q85pl        0/1     Completed   1          52s
ingress-nginx-controller-67cc8878b-mb4mw   0/1     Running     0          52s
ingress-nginx-controller-67cc8878b-smfjk   0/1     Pending     0          52s

# Label k8s-node2: the second controller Pod is scheduled there and starts running
[root@k8s-master ingress-controller]# kubectl label node k8s-node2 app=ingress-controller
node/k8s-node2 labeled
[root@k8s-master ingress-controller]# kubectl get pods -n ingress-nginx
NAME                                       READY   STATUS              RESTARTS   AGE
ingress-nginx-admission-create-ld9h5       0/1     Completed           0          60s
ingress-nginx-admission-patch-q85pl        0/1     Completed           1          60s
ingress-nginx-controller-67cc8878b-mb4mw   0/1     Running             0          60s
ingress-nginx-controller-67cc8878b-smfjk   0/1     ContainerCreating   0          60s
[root@k8s-master ingress-controller]# kubectl get pods -n ingress-nginx
NAME                                       READY   STATUS      RESTARTS   AGE
ingress-nginx-admission-create-ld9h5       0/1     Completed   0          61s
ingress-nginx-admission-patch-q85pl        0/1     Completed   1          61s
ingress-nginx-controller-67cc8878b-mb4mw   0/1     Running     0          61s
ingress-nginx-controller-67cc8878b-smfjk   0/1     Running     0          61s
[root@k8s-master ingress-controller]# kubectl get nodes --show-labels
NAME         STATUS   ROLES           AGE    VERSION   LABELS
k8s-master   Ready    control-plane   139d   v1.27.0   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-master,kubernetes.io/os=linux,node-role.kubernetes.io/control-plane=,node.kubernetes.io/exclude-from-external-load-balancers=
k8s-node1    Ready    <none>          139d   v1.27.0   app=ingress-controller,beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-node1,kubernetes.io/os=linux
k8s-node2    Ready    <none>          139d   v1.27.0   app=ingress-controller,beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-node2,kubernetes.io/os=linux

[root@k8s-master ingress-controller]# cat tomcat-nginx.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  namespace: dev
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx-pod
  template:
    metadata:
      labels:
        app: nginx-pod
    spec:
      containers:
      - name: nginx
        image: nginx:1.17.1
        ports:
        - containerPort: 80
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: tomcat-deployment
  namespace: dev
spec:
  replicas: 3
  selector:
    matchLabels:
      app: tomcat-pod
  template:
    metadata:
      labels:
        app: tomcat-pod
    spec:
      containers:
      - name: tomcat
        image: tomcat:8.5-jre10-slim
        ports:
        - containerPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
  namespace: dev
spec:
  selector:
    app: nginx-pod
  clusterIP: None
  type: ClusterIP
  ports:
  - port: 80
    targetPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: tomcat-service
  namespace: dev
spec:
  selector:
    app: tomcat-pod
  clusterIP: None
  type: ClusterIP
  ports:
  - port: 8080
    targetPort: 8080

# Create the Deployments and Services defined in tomcat-nginx.yaml
[root@k8s-master ingress-controller]# kubectl apply -f tomcat-nginx.yaml
deployment.apps/nginx-deployment created
deployment.apps/tomcat-deployment created
service/nginx-service created
service/tomcat-service created
[root@k8s-master ingress-controller]# kubectl get pods -n dev
NAME                                READY   STATUS    RESTARTS      AGE
centos2                             1/1     Running   8 (13m ago)   20h
nginx-deployment-6fdb77cb4-gqx4j    1/1     Running   0             26s
nginx-deployment-6fdb77cb4-v6jzp    1/1     Running   0             26s
nginx-deployment-6fdb77cb4-wbvd8    1/1     Running   0             26s
tomcat-deployment-96986c7bd-4hvgl   1/1     Running   0             26s
tomcat-deployment-96986c7bd-6x9d9   1/1     Running   0             26s
tomcat-deployment-96986c7bd-xpw4w   1/1     Running   0             26s

[root@k8s-master ingress-controller]# cat ingress-http.yaml

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: ingress-http

namespace: dev

spec:

ingressClassName: nginx

rules:

- host: nginx.wjmz.com

http:

paths:

- path: /

pathType: Prefix

backend:

service:

name: nginx-service

port:

number: 80

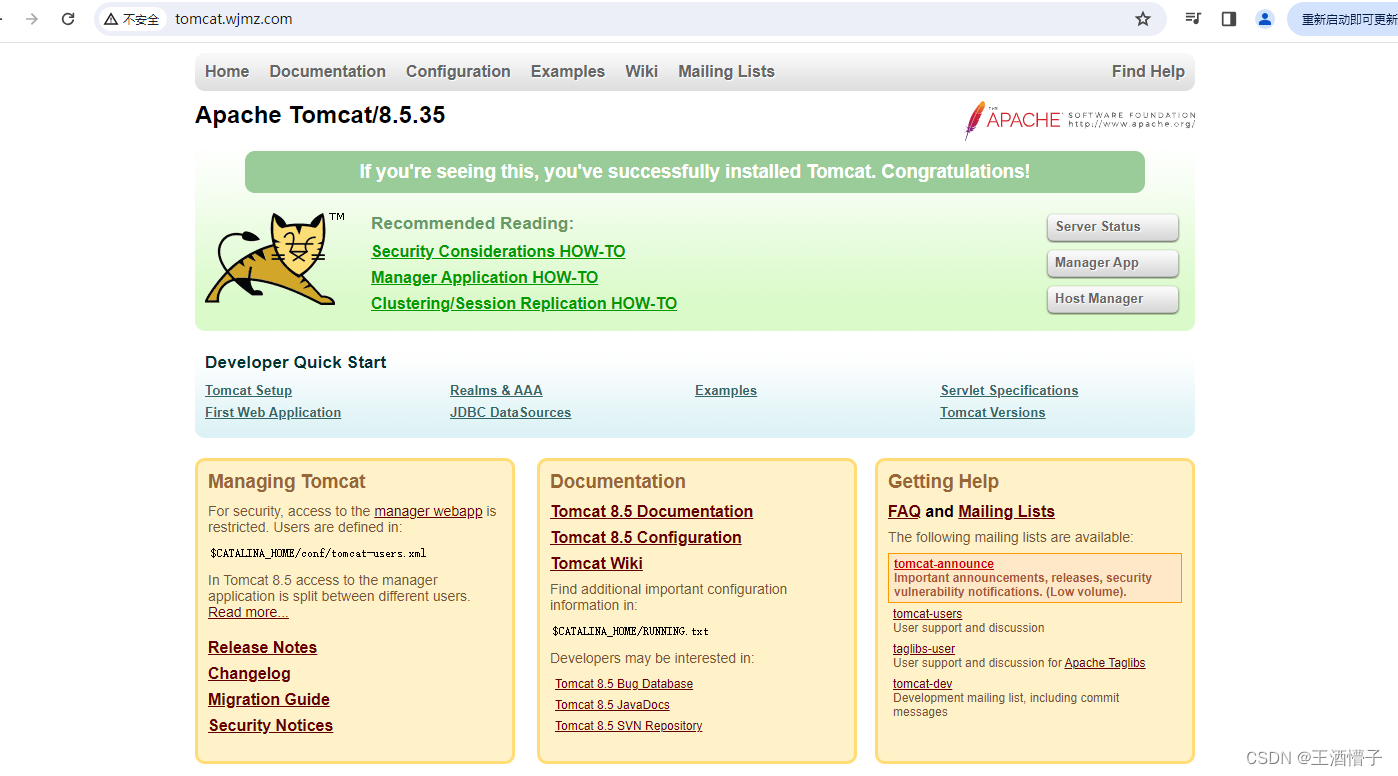

- host: tomcat.wjmz.com

http:

paths:

- path: /

pathType: Prefix

backend:

service:

name: tomcat-service

port:

number: 8080

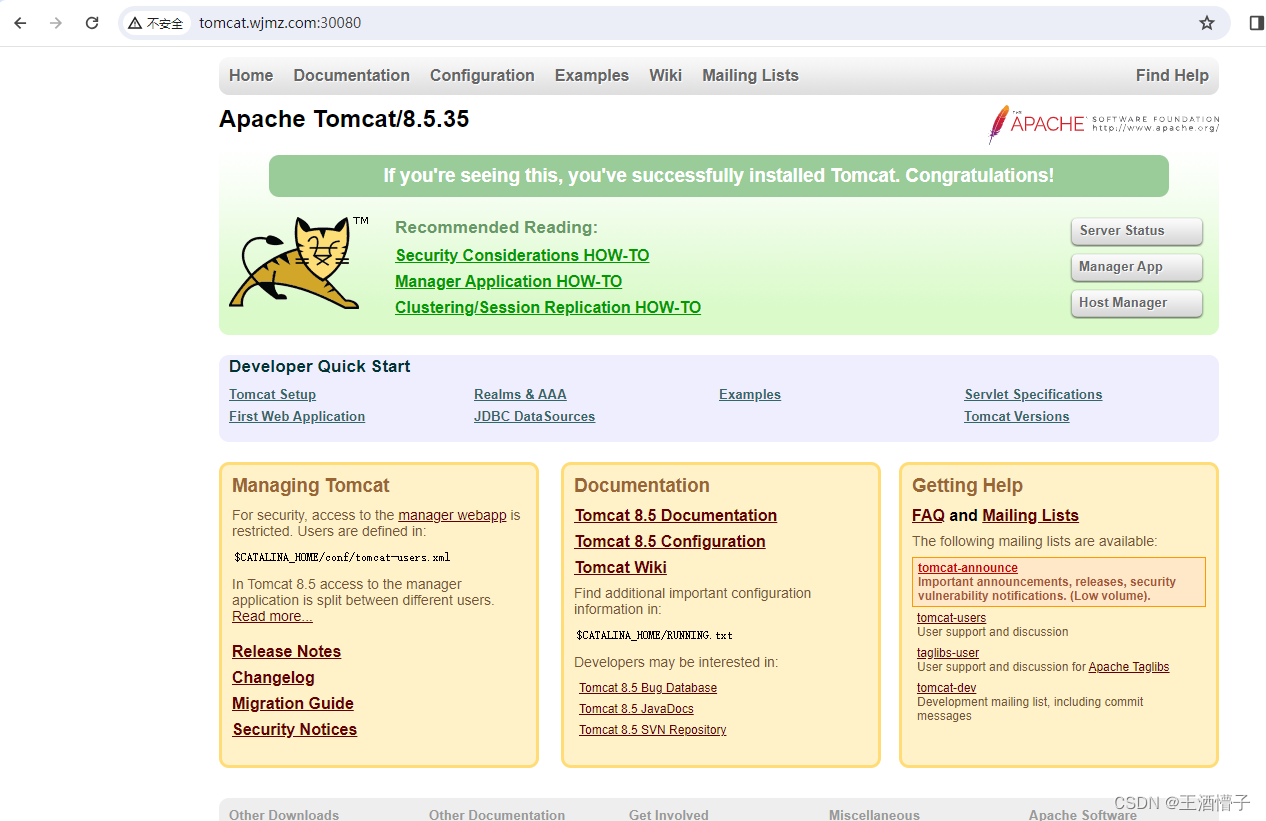

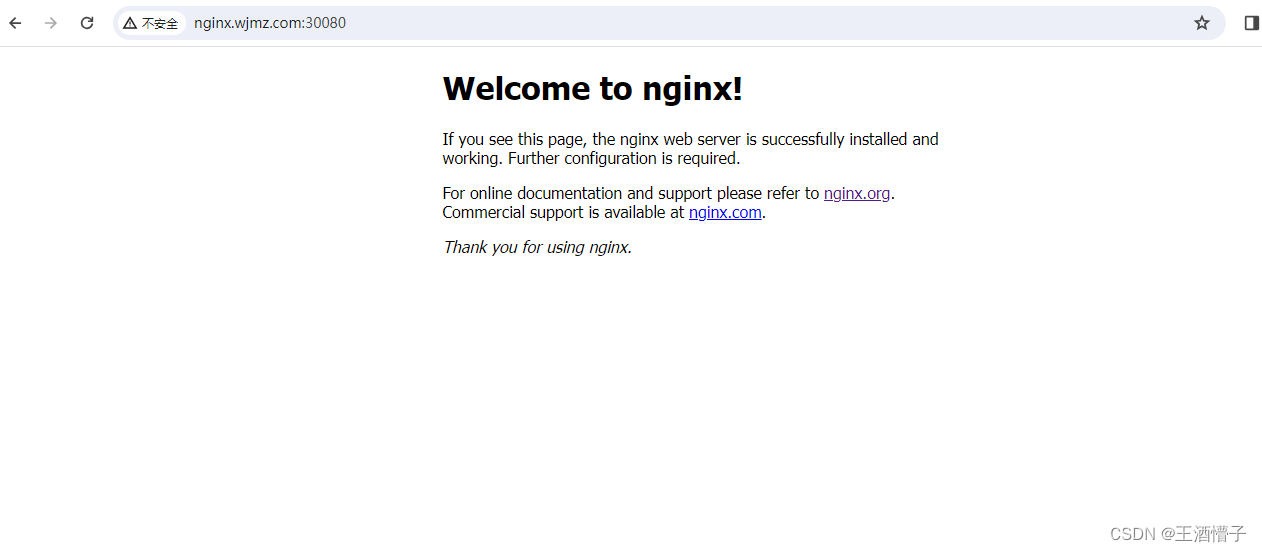

# Create the Ingress (the controllers are already running)
[root@k8s-master ingress-controller]# kubectl apply -f ingress-http.yaml
ingress.networking.k8s.io/ingress-http created
[root@k8s-master ingress-controller]# kubectl get -f ingress-http.yaml
NAME           CLASS   HOSTS                            ADDRESS                         PORTS   AGE
ingress-http   nginx   nginx.wjmz.com,tomcat.wjmz.com   192.168.207.11,192.168.207.12   80      13s
[root@k8s-master ingress-controller]# kubectl get svc -n ingress-nginx
NAME                                 TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                      AGE
ingress-nginx-controller             NodePort    10.96.214.223    <none>        80:32269/TCP,443:32665/TCP   63m
ingress-nginx-controller-admission   ClusterIP   10.100.165.211   <none>        443/TCP                      63m

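Pinning the NodePorts

In deploy.yaml, add two fixed nodePort values (30080 for HTTP, 30443 for HTTPS) to the ingress-nginx-controller Service. Otherwise the ports are reassigned every time the controller is redeployed: compare 80:31310/443:31868 earlier with 80:32269/443:32665 above.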

```shell
[root@k8s-master ingress-controller]# kubectl delete -f deploy.yaml
namespace "ingress-nginx" deleted
serviceaccount "ingress-nginx" deleted
serviceaccount "ingress-nginx-admission" deleted
role.rbac.authorization.k8s.io "ingress-nginx" deleted
role.rbac.authorization.k8s.io "ingress-nginx-admission" deleted
clusterrole.rbac.authorization.k8s.io "ingress-nginx" deleted
clusterrole.rbac.authorization.k8s.io "ingress-nginx-admission" deleted
rolebinding.rbac.authorization.k8s.io "ingress-nginx" deleted
rolebinding.rbac.authorization.k8s.io "ingress-nginx-admission" deleted
clusterrolebinding.rbac.authorization.k8s.io "ingress-nginx" deleted
clusterrolebinding.rbac.authorization.k8s.io "ingress-nginx-admission" deleted
configmap "ingress-nginx-controller" deleted
service "ingress-nginx-controller" deleted
service "ingress-nginx-controller-admission" deleted
deployment.apps "ingress-nginx-controller" deleted
job.batch "ingress-nginx-admission-create" deleted
job.batch "ingress-nginx-admission-patch" deleted
ingressclass.networking.k8s.io "nginx" deleted
validatingwebhookconfiguration.admissionregistration.k8s.io "ingress-nginx-admission" deleted
```

```shell
[root@k8s-master ingress-controller]# vi deploy.yaml
```

```yaml
# spec of the ingress-nginx-controller Service (lines 345-362 of deploy.yaml)
spec:
  ipFamilies:
  - IPv4
  ipFamilyPolicy: SingleStack
  ports:
  - appProtocol: http
    name: http
    port: 80
    nodePort: 30080   # added
    protocol: TCP
    targetPort: http
  - appProtocol: https
    name: https
    port: 443
    nodePort: 30443   # added
    protocol: TCP
    targetPort: https
  selector:
```

```shell
[root@k8s-master ingress-controller]# kubectl apply -f deploy.yaml
namespace/ingress-nginx created
serviceaccount/ingress-nginx created
serviceaccount/ingress-nginx-admission created
role.rbac.authorization.k8s.io/ingress-nginx created
role.rbac.authorization.k8s.io/ingress-nginx-admission created
clusterrole.rbac.authorization.k8s.io/ingress-nginx unchanged
clusterrole.rbac.authorization.k8s.io/ingress-nginx-admission unchanged
rolebinding.rbac.authorization.k8s.io/ingress-nginx created
rolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created
clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx unchanged
clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx-admission unchanged
configmap/ingress-nginx-controller created
service/ingress-nginx-controller created
service/ingress-nginx-controller-admission created
deployment.apps/ingress-nginx-controller created
job.batch/ingress-nginx-admission-create created
job.batch/ingress-nginx-admission-patch created
ingressclass.networking.k8s.io/nginx unchanged
validatingwebhookconfiguration.admissionregistration.k8s.io/ingress-nginx-admission configured
[root@k8s-master ingress-controller]# kubectl get svc -n ingress-nginx
NAME                                 TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)                      AGE
ingress-nginx-controller             NodePort    10.99.212.97   <none>        80:30080/TCP,443:30443/TCP   11s
ingress-nginx-controller-admission   ClusterIP   10.102.91.41   <none>        443/TCP                      11s
```

```shell
# View the details of the controller Service
[root@k8s-master ingress-controller]# kubectl describe svc -n ingress-nginx ingress-nginx-controller
Name:                     ingress-nginx-controller
Namespace:                ingress-nginx
Labels:                   app.kubernetes.io/component=controller
                          app.kubernetes.io/instance=ingress-nginx
                          app.kubernetes.io/name=ingress-nginx
                          app.kubernetes.io/part-of=ingress-nginx
                          app.kubernetes.io/version=1.3.1
Annotations:              <none>
Selector:                 app.kubernetes.io/component=controller,app.kubernetes.io/instance=ingress-nginx,app.kubernetes.io/name=ingress-nginx
Type:                     NodePort
IP Family Policy:         SingleStack
IP Families:              IPv4
IP:                       10.99.212.97
IPs:                      10.99.212.97
Port:                     http 80/TCP
TargetPort:               http/TCP
NodePort:                 http 30080/TCP
Endpoints:                192.168.207.11:80,192.168.207.12:80
Port:                     https 443/TCP
TargetPort:               https/TCP
NodePort:                 https 30443/TCP
Endpoints:                192.168.207.11:443,192.168.207.12:443
Session Affinity:         None
External Traffic Policy:  Cluster
Events:                   <none>
```
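With the NodePorts pinned, clients can use stable URLs. The names nginx.wjmz.com and tomcat.wjmz.com are assumed not to resolve through public DNS. In that case, the usual approach is to map them to a node IP in the client's hosts file: /etc/hosts on Linux, or C:\Windows\System32\drivers\etc\hosts on Windows. Because the External Traffic Policy is Cluster, any node's IP should accept the NodePort traffic. The sketch below builds that hosts line and the URLs to try from the values shown above. `hosts_line` is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: build a hosts-file line and the client URLs for the
# Ingress hosts, using values from the outputs above.
NODE_IP=192.168.207.11                  # any node IP (External Traffic Policy: Cluster)
HOSTS="nginx.wjmz.com,tomcat.wjmz.com"  # HOSTS column of `kubectl get ingress`
HTTP_NODEPORT=30080                     # pinned http NodePort

# One line mapping every Ingress host to the node IP
hosts_line() {
  printf '%s %s\n' "$1" "$(printf '%s' "$2" | tr ',' ' ')"
}

hosts_line "$NODE_IP" "$HOSTS"
# -> 192.168.207.11 nginx.wjmz.com tomcat.wjmz.com

# URLs to open from the client once that line is in its hosts file
for h in $(printf '%s' "$HOSTS" | tr ',' ' '); do
  echo "http://$h:$HTTP_NODEPORT/"
done
```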

6.5.1.3 HTTPS Proxy

Create the certificate

[root@k8s-master ~]# mkdir crt

[root@k8s-master ~]# cd crt/

[root@k8s-master crt]# ls