GLES Study Notes: Rendering with OpenGL into an ImageReader

2023-12-25 10:55:09

1. Introduction to ImageReader

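I used to use ImageReader a lot in camera apps: create an ImageReader, get its Surface, and hand that Surface to the framework when configuring streams and submitting requests. I never looked closely at what happens to the ImageReader in between. When the camera HAL returns a frame, the ImageReader's onImageAvailable callback fires, and the image can then be fetched through the reader.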

It is simple to use, but there is actually a lot to learn inside ImageReader.

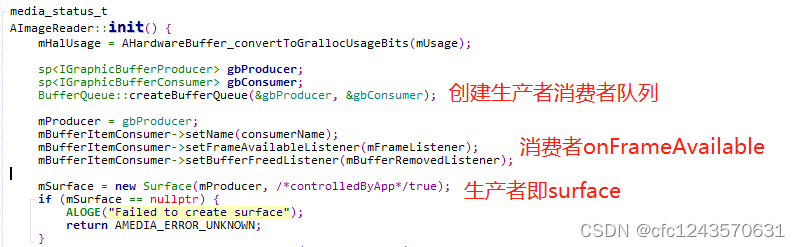

At its core, ImageReader is a wrapper around BufferQueue and Surface: its init creates a producer/consumer queue, and that is the main thing the init code does.
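To make the producer/consumer idea concrete, here is a minimal toy model of a buffer queue in C++. It is only a sketch and not the Android implementation: the class name `ToyBufferQueue` and its fields are hypothetical, and the four method names simply mirror the usual BufferQueue vocabulary (the producer dequeues and queues a buffer, the consumer acquires and releases it).

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <optional>
#include <vector>

// Toy model only: each slot is in exactly one of these states at a time.
enum class SlotState { Free, Dequeued, Queued, Acquired };

class ToyBufferQueue {
public:
    explicit ToyBufferQueue(std::size_t slotCount)
        : slots_(slotCount, SlotState::Free) {}

    // Producer: take a free slot to render into; nullopt if none is free.
    std::optional<std::size_t> dequeueBuffer() {
        for (std::size_t i = 0; i < slots_.size(); ++i) {
            if (slots_[i] == SlotState::Free) {
                slots_[i] = SlotState::Dequeued;
                return i;
            }
        }
        return std::nullopt;
    }

    // Producer: hand a filled slot over to the consumer side.
    void queueBuffer(std::size_t slot) {
        assert(slots_[slot] == SlotState::Dequeued);
        slots_[slot] = SlotState::Queued;
        pending_.push_back(slot);
    }

    // Consumer: take the oldest queued slot; nullopt if nothing is pending.
    std::optional<std::size_t> acquireBuffer() {
        if (pending_.empty()) return std::nullopt;
        const std::size_t slot = pending_.front();
        pending_.pop_front();
        slots_[slot] = SlotState::Acquired;
        return slot;
    }

    // Consumer: give the slot back so the producer can reuse it.
    void releaseBuffer(std::size_t slot) {
        assert(slots_[slot] == SlotState::Acquired);
        slots_[slot] = SlotState::Free;
    }

private:
    std::vector<SlotState> slots_;     // state of every buffer slot
    std::deque<std::size_t> pending_;  // queued slots, oldest first
};
```

In this model a queue built with three slots lets the producer dequeue three buffers and then run dry until the consumer releases one. That is roughly why a consumer that never releases its images can eventually stall the producer.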

2. Receiving OpenGL rendering results with ImageReader

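Why use ImageReader to receive the rendering result? I wrote earlier about reading the result back into memory with glReadPixels, but glReadPixels is slow for large images, especially on low-end devices, because it copies the pixels directly from GPU memory into system memory. That makes it unsuitable when results have to be read back frequently.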
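As a rough sense of scale, the arithmetic below computes how many bytes a single readback of a tightly packed frame involves. The helper name `packedImageBytes` is hypothetical, and the 4000x3000 RGBA_8888 size is simply the one this post uses for its ImageReader.

```cpp
#include <cstddef>
#include <cstdint>

// Bytes needed for a tightly packed image (no padding between rows).
constexpr std::size_t packedImageBytes(std::uint32_t width, std::uint32_t height,
                                       std::uint32_t bytesPerPixel) {
    return static_cast<std::size_t>(width) * height * bytesPerPixel;
}

// A 4000x3000 frame at 4 bytes per pixel (RGBA_8888).
constexpr std::size_t kFrameBytes = packedImageBytes(4000, 3000, 4);
static_assert(kFrameBytes == 48000000, "4000 * 3000 * 4 bytes");
```

That is 48,000,000 bytes, a little under 46 MiB, for every frame read back, which is presumably why the copy hurts most on low-end devices.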

From a quick read of the ImageReader source we know it is built on BufferQueue and Surface, so we can guess that the memory behind it is GraphicBuffer, which is convenient for sharing between the GPU and the CPU.

The code below sets up the EGL environment. The main steps are:

media_status_t status = AImageReader_new(4000, 3000, AIMAGE_FORMAT_RGBA_8888, 3, &imageReader); creates the ImageReader.

status = AImageReader_setImageListener(imageReader, &listener); registers the frame callback.

ANativeWindow *nwin; status = AImageReader_getWindow(imageReader, &nwin); gets the ANativeWindow, which is passed to eglCreateWindowSurface and becomes the target that we render into from then on.

    // `surface` is unused here: rendering goes to the ImageReader's window instead.
    // `listener` (AImageReader_ImageListener) and `gl_cxt` are assumed to be
    // globals defined elsewhere in this file.
    JNIEXPORT void JNICALL Java_com_sprd_opengl_test_MyNdk_init2
    (JNIEnv *env, jobject obj, jobject surface) {
        // egl ------------------------------------------------------------------- start
        LOGD("init");
        AImageReader *imageReader;
        media_status_t status = AImageReader_new(4000, 3000, AIMAGE_FORMAT_RGBA_8888, 3, &imageReader);
        LOGD("AImageReader_new status: %d", status);
        listener.onImageAvailable = onFrameAvailable;
        status = AImageReader_setImageListener(imageReader, &listener);
        LOGD("AImageReader_setImageListener status: %d", status);
        ANativeWindow *nwin;
        status = AImageReader_getWindow(imageReader, &nwin);
        LOGD("AImageReader_getWindow status: %d", status);
        gl_cxt.nw = nwin;
        gl_cxt.reader = imageReader;

        EGLDisplay display = eglGetDisplay(EGL_DEFAULT_DISPLAY);
        if (display == EGL_NO_DISPLAY) {
            LOGD("egl display failed");
            return;
        }
        if (EGL_TRUE != eglInitialize(display, 0, 0)) {
            LOGD("eglInitialize failed");
            return;
        }
        EGLConfig eglConfig;
        EGLint configNum;
        EGLint configSpec[] = {
            EGL_RED_SIZE, 8,
            EGL_GREEN_SIZE, 8,
            EGL_BLUE_SIZE, 8,
            EGL_ALPHA_SIZE, 8,
            EGL_SURFACE_TYPE, EGL_WINDOW_BIT,
            EGL_RENDERABLE_TYPE, EGL_OPENGL_ES2_BIT,
            EGL_RECORDABLE_ANDROID, EGL_TRUE,
            EGL_NONE
        };
        // eglChooseConfig can return EGL_TRUE with zero matches, so check the count too.
        if (EGL_TRUE != eglChooseConfig(display, configSpec, &eglConfig, 1, &configNum)
                || configNum < 1) {
            LOGD("eglChooseConfig failed");
            return;
        }
        // The ImageReader's window is the surface we render into.
        EGLSurface winSurface = eglCreateWindowSurface(display, eglConfig, nwin, 0);
        if (winSurface == EGL_NO_SURFACE) {
            LOGD("eglCreateWindowSurface failed");
            return;
        }
        // Note: process2 uses vertex array objects, which are core only from ES 3.0.
        // This worked with version 2 on the test device, but requesting 3 is stricter.
        const EGLint ctxAttr[] = {
            EGL_CONTEXT_CLIENT_VERSION, 2,
            EGL_NONE
        };
        EGLContext context = eglCreateContext(display, eglConfig, EGL_NO_CONTEXT, ctxAttr);
        if (context == EGL_NO_CONTEXT) {
            LOGD("eglCreateContext failed");
            return;
        }
        if (EGL_TRUE != eglMakeCurrent(display, winSurface, winSurface, context)) {
            LOGD("eglMakeCurrent failed");
            return;
        }
        gl_cxt.display = display;
        gl_cxt.winSurface = winSurface;
        gl_cxt.context = context;
        // egl ------------------------------------------------------------------- end

        // shader ------------------------------------------------------------------- start
        GLint vsh = initShader(vertexSimpleShape, GL_VERTEX_SHADER);
        GLint fsh = initShader(fragSimpleShape, GL_FRAGMENT_SHADER);
        GLint program = glCreateProgram();
        if (program == 0) {
            LOGD("glCreateProgram failed");
            return;
        }
        glAttachShader(program, vsh);
        glAttachShader(program, fsh);
        glLinkProgram(program);
        GLint status2 = 0;
        glGetProgramiv(program, GL_LINK_STATUS, &status2);
        if (status2 == 0) {
            LOGD("glLinkProgram failed");
            return;
        }
        gl_cxt.program = program;
        LOGD("glLinkProgram success");
        // shader ------------------------------------------------------------------- end
    }

Next is the drawing code. It contains quite a lot of unused code. The important part is to call eglSwapBuffers once drawing is finished, because that is what triggers the onFrameAvailable callback.

    JNIEXPORT void JNICALL Java_com_sprd_opengl_test_MyNdk_process2
    (JNIEnv *env, jobject obj, jobject bitmap) {
        LOGD("process2 1");
        glUseProgram(gl_cxt.program);
        AndroidBitmapInfo bitmapInfo;
        if (AndroidBitmap_getInfo(env, bitmap, &bitmapInfo) < 0) {
            LOGE("AndroidBitmap_getInfo() failed ! ");
            return;
        }
        void *bmpPixels;
        LOGD("process2 2, format: %d, stride: %d", bitmapInfo.format, bitmapInfo.stride);
        if (AndroidBitmap_lockPixels(env, bitmap, &bmpPixels) < 0) {
            LOGE("AndroidBitmap_lockPixels() failed ! ");
            return;
        }
        LOGD("process2 3");

        // Upload the bitmap as the source texture on unit 0.
        unsigned int textureId;
        glGenTextures(1, &textureId);
        glActiveTexture(GL_TEXTURE0);
        glBindTexture(GL_TEXTURE_2D, textureId);
        glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_REPEAT);
        glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_REPEAT);
        glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);
        glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);
        int width = bitmapInfo.width;
        int height = bitmapInfo.height;
        LOGD("process2 4");
        // Assumes the bitmap is RGBA_8888 with stride == width * 4.
        glTexImage2D(GL_TEXTURE_2D, 0, GL_RGBA, width, height, 0, GL_RGBA, GL_UNSIGNED_BYTE, bmpPixels);
        unsigned char *pOri = (unsigned char *)bmpPixels;
        LOGD("process2 5 %d, %d, %d, %d, %d, %d, %d, %d", *(pOri), *(pOri+1), *(pOri+2), *(pOri+3),
             *(pOri+114), *(pOri+115), *(pOri+116), *(pOri+117));
        glBindTexture(GL_TEXTURE_2D, 0);
        AndroidBitmap_unlockPixels(env, bitmap);

        // Offscreen texture + FBO (part of the unused code mentioned above).
        unsigned int offTexture, fbo;
        glGenTextures(1, &offTexture);
        glActiveTexture(GL_TEXTURE1);
        glBindTexture(GL_TEXTURE_2D, offTexture);
        glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_REPEAT);
        glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_REPEAT);
        glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);
        glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);
        // Without this call, glCheckFramebufferStatus returns 36054
        // (GL_FRAMEBUFFER_INCOMPLETE_ATTACHMENT).
        glTexImage2D(GL_TEXTURE_2D, 0, GL_RGBA, width, height, 0, GL_RGBA, GL_UNSIGNED_BYTE, NULL);
        glGenFramebuffers(1, &fbo);
        // fbo is not bound yet, so this reports the default framebuffer's status.
        LOGD("fb status: %d, error: %d" , glCheckFramebufferStatus(GL_FRAMEBUFFER), glGetError());
        glBindFramebuffer(GL_FRAMEBUFFER, fbo);
        glFramebufferTexture2D(GL_FRAMEBUFFER, GL_COLOR_ATTACHMENT0, GL_TEXTURE_2D, offTexture, 0);
        LOGD("fb status: %d, error: %d" , glCheckFramebufferStatus(GL_FRAMEBUFFER), glGetError());
        glBindTexture(GL_TEXTURE_2D, 0);
        glBindFramebuffer(GL_FRAMEBUFFER, 0);

        /* position (x, y, z), texture coordinate (u, v) */
        float vertices[] = {-1.0f, -1.0f, 0.0f, 0.0f, 0.0f,
                            -1.0f,  1.0f, 0.0f, 0.0f, 1.0f,
                             1.0f, -1.0f, 0.0f, 1.0f, 0.0f,
                             1.0f,  1.0f, 0.0f, 1.0f, 1.0f};
        unsigned int indices[] = {
            0, 1, 2, // first triangle
            1, 2, 3  // second triangle
        };
        // optimal
        unsigned int VBO, EBO, VAO;
        glGenVertexArrays(1, &VAO);
        glBindVertexArray(VAO);
        glGenBuffers(1, &VBO);
        glBindBuffer(GL_ARRAY_BUFFER, VBO);
        glBufferData(GL_ARRAY_BUFFER, sizeof(vertices), vertices, GL_STATIC_DRAW);
        glVertexAttribPointer(0, 3, GL_FLOAT, GL_FALSE, 5 * sizeof(float), (void*)0);
        glVertexAttribPointer(1, 2, GL_FLOAT, GL_FALSE, 5 * sizeof(float), (void*)((0 + 3)*sizeof(float)));
        glEnableVertexAttribArray(0);
        glEnableVertexAttribArray(1);
        glGenBuffers(1, &EBO);
        glBindBuffer(GL_ELEMENT_ARRAY_BUFFER, EBO);
        glBufferData(GL_ELEMENT_ARRAY_BUFFER, sizeof(indices), indices, GL_STATIC_DRAW);
        glBindVertexArray(0);

        glBindVertexArray(VAO);
        glClearColor(1.0f, 1.0f, 1.0f, 1.0f);
        glClear(GL_COLOR_BUFFER_BIT|GL_DEPTH_BUFFER_BIT);
        // draw to the offscreen texture.
        glActiveTexture(GL_TEXTURE1);
        glBindTexture(GL_TEXTURE_2D, offTexture);
        glBindFramebuffer(GL_FRAMEBUFFER, fbo);
        LOGD("fb status: %d, error: %d" , glCheckFramebufferStatus(GL_FRAMEBUFFER), glGetError());
        glDrawElements(GL_TRIANGLES, 6, GL_UNSIGNED_INT, (void*)0);
        glBindFramebuffer(GL_FRAMEBUFFER, 0);
        glBindTexture(GL_TEXTURE_2D, 0);
        // draw the bitmap texture to the window surface (the ImageReader).
        glActiveTexture(GL_TEXTURE0);
        glBindTexture(GL_TEXTURE_2D, textureId);
        glDrawElements(GL_TRIANGLES, 6, GL_UNSIGNED_INT, (void*)0);
        glBindTexture(GL_TEXTURE_2D, 0);
        glFinish();
        glDisableVertexAttribArray(0);
        glDisableVertexAttribArray(1);
        glBindVertexArray(0);
        // Queues the rendered buffer to the ImageReader, which triggers the callback.
        eglSwapBuffers(gl_cxt.display, gl_cxt.winSurface);
        // Release the per-call GL objects so repeated calls do not leak them.
        glDeleteVertexArrays(1, &VAO);
        glDeleteBuffers(1, &VBO);
        glDeleteBuffers(1, &EBO);
        glDeleteFramebuffers(1, &fbo);
        glDeleteTextures(1, &offTexture);
        glDeleteTextures(1, &textureId);
        LOGD("process2 X");
    }

Finally, the onFrameAvailable callback. It fires once drawing has finished and the buffers have been swapped. The memcpy part can be ignored for now.

AHardwareBuffer_lock takes only a dozen or so milliseconds (for 4000*3000*4 bytes of data), so the rendering result is available in memory quickly.

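    // Registered as listener.onImageAvailable in init2.
    static void onFrameAvailable(void *context, AImageReader *reader) {
        LOGD("MyNdk onFrameAvailable");
        AImage *image = nullptr;
        media_status_t status = AImageReader_acquireNextImage(reader, &image);
        LOGD("AImageReader_acquireNextImage status: %d, image: %p", status, image);
        if (status != AMEDIA_OK || image == nullptr) {
            return;
        }
        AHardwareBuffer *inBuffer = nullptr;
        status = AImage_getHardwareBuffer(image, &inBuffer);
        LOGD("AImage_getHardwareBuffer status: %d, inBuffer: %p", status, inBuffer);
        if (status != AMEDIA_OK || inBuffer == nullptr) {
            AImage_delete(image);
            return;
        }
        int width = 0;
        int height = 0;
        AImage_getWidth(image, &width);
        AImage_getHeight(image, &height);
        unsigned char *ptrReader = nullptr;
        LOGD("AHardwareBuffer_lock E, w:%d, h:%d", width, height);
        // Map the buffer for CPU reads (-1: no extra fence to wait on).
        int lockResult = AHardwareBuffer_lock(inBuffer, AHARDWAREBUFFER_USAGE_CPU_READ_OFTEN, -1, nullptr,
                                              (void **) &ptrReader);
        LOGD("AHardwareBuffer_lock X");
        if (lockResult == 0 && ptrReader != nullptr) {
            // Optional copy; assumes the row stride equals width * 4 bytes.
            size_t size = (size_t) width * height * 4;
            unsigned char *dstBuffer = static_cast<unsigned char *>(malloc(size));
            if (dstBuffer != nullptr) {
                memcpy(dstBuffer, ptrReader, size);
                free(dstBuffer);  // use the copy before this point; the original leaked it
            }
            // Read the pixels while the buffer is still locked.
            LOGD("%d, %d, %d, %d", *ptrReader, *(ptrReader+1), *(ptrReader+2), *(ptrReader+3));
            AHardwareBuffer_unlock(inBuffer, nullptr);
        }
        // Return the image to the reader so its buffer can be reused.
        AImage_delete(image);
    }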
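The memcpy in the callback copies width * height * 4 bytes in one go, which is only correct when the rows of the locked buffer are not padded. On devices where they are, the row stride reported by AHardwareBuffer_describe (the `stride` field of AHardwareBuffer_Desc, given in pixels) is larger than the width. Below is a minimal sketch of a row-by-row copy that handles that case; the helper `copyPackedRgba` is hypothetical, takes the stride in bytes, and assumes 4 bytes per pixel (RGBA_8888).

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Copy an RGBA_8888 image whose rows may be padded into a tightly packed buffer.
// strideBytes is the distance in bytes between the starts of two source rows.
// Returns an empty vector if the arguments are invalid.
std::vector<std::uint8_t> copyPackedRgba(const std::uint8_t *src, std::size_t strideBytes,
                                         std::size_t width, std::size_t height) {
    const std::size_t rowBytes = width * 4;
    if (src == nullptr || rowBytes == 0 || height == 0 || strideBytes < rowBytes) {
        return {};
    }
    std::vector<std::uint8_t> dst(rowBytes * height);
    for (std::size_t y = 0; y < height; ++y) {
        // Copy only the visible pixels of each row and skip the padding.
        std::memcpy(dst.data() + y * rowBytes, src + y * strideBytes, rowBytes);
    }
    return dst;
}
```

In the callback this would be called while the buffer is still locked, with strideBytes taken as the described stride multiplied by 4. When the stride equals the width, the result is the same as the single memcpy.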

Source: https://blog.csdn.net/cfc1243570631/article/details/135190936
